文章目录

- 组件启动

- 实现组件类

- 实现组件头文件

- 实现组件源文件

- 设置配置文件

- 启动组件

- 激光感知

- 目录结构

- 源码剖析

- detection——init

- InitAlgorithmPlugin

- detection——Proc

- 点云预处理

- 高精地图定位信息获取

- 障碍物检测

- 障碍物边框构建

- Bounding_box过滤

本文先以感知模块perception_lidar为例,先讲解组件component如何启动,后对lidar detection 模块代码进行剖析

apollo注释代码链接:https://github.com/Xiao-Hu-Z/apollo_xiaohu,后续会针对某些细节点进一步梳理

组件启动

Perception 是核心的组件之一,但像所有的 C++ 程序一样,每个应用都有一个 Main 函数入口,那么引出本章要探索的 2 个问题:

Perception入口在哪里Perception如何启动

看Perception 模块前,很有必要先去了解下cyber

Apollo Cyber 运行时框架(Apollo Cyber RT Framework) 是基于组件概念来构建的. 每个组件都是Cyber框架的一个构建块, 它包括一个特定的算法模块, 此算法模块处理一组输入数椐并产生一组输出数椐。

ROS 应用于自动驾驶领域的不足:

- 调度的不确定性:各节点以独立进程运行,节点运行顺序无法确定,因而业务逻辑的调度顺序无法保证;

- 运行效率:

ROS为分布式系统,存在通信开销

Apollo在3.5中引入了Cyber RT,替换了以前基于ROS的变体, CyberRT 删除了master 机制,用自动发现机制代替,此外,Cyber RT的核心设计将调度、任务从内核空间搬到了用户空间。

组件管理

要创建并启动一个算法组件, 需要通过以下4个步骤:

- 初如化组件的文件结构

- 实现组件类

- 设置配置文件

- 启动组件

一个 component 需要创建以下文件:

- Header file: common_component_example.h

- Source file: common_component_example.cc

- Build file: BUILD

- DAG dependency file: common.dag

- Launch file: common.launch

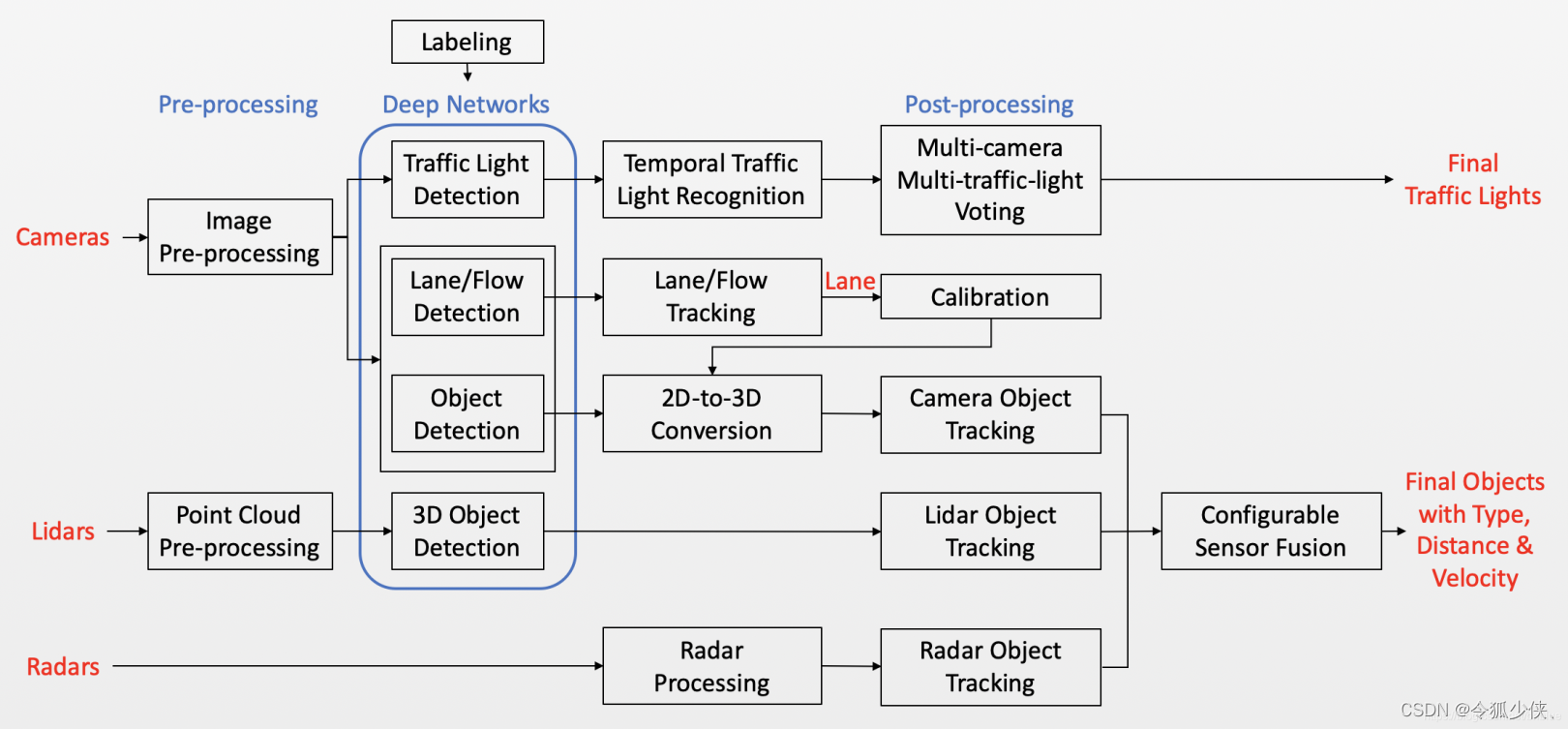

Apollo 是多数据融合的,它融合 Camera、Lidar、Radar 目标,而这 3 个都是一个 component。

感知模块的入口在production目录,通过lanuch加载对应的dag,启动感知模块,感知模块包括多个子模块,在onboard目录中定义。

perception组件路径:

apollo/modules/perception/onboard/component

这个目录下,定义和实现了很多感知相关的组件,本文只关注于 Detection。

实现组件类

实现组件头文件

detection_component.h:

- 继承 Component 类

- 定义自己的 Init 和 Proc 函数. Proc 需要指定输入数椐类型。

- 使用CYBER_REGISTER_COMPONENT宏定义把组件类注册成全局可用。

namespace apollo

{

namespace perception

{

namespace onboard

{

class DetectionComponent : public cyber::Component<drivers::PointCloud>

{

public:

DetectionComponent() = default;

virtual ~DetectionComponent() = default;

bool Init() override;

bool Proc(const std::shared_ptr<drivers::PointCloud> &message) override;

private:

bool InitAlgorithmPlugin();

bool InternalProc(

const std::shared_ptr<const drivers::PointCloud> &in_message,

const std::shared_ptr<LidarFrameMessage> &out_message);

private:

static std::atomic<uint32_t> seq_num_;

std::string sensor_name_;

std::string detector_name_;

bool enable_hdmap_ = true;

float lidar_query_tf_offset_ = 20.0f;

std::string lidar2novatel_tf2_child_frame_id_;

std::string output_channel_name_;

base::SensorInfo sensor_info_;

// TransformWrapper类:用于查询不同坐标系之间的变换关系

TransformWrapper lidar2world_trans_;

std::unique_ptr<lidar::BaseLidarObstacleDetection> detector_;

std::shared_ptr<apollo::cyber::Writer<LidarFrameMessage>> writer_;

};

// 使用CYBER_REGISTER_COMPONENT宏定义把组件类注册成全局可用

CYBER_REGISTER_COMPONENT(DetectionComponent);

} // namespace onboard

} // namespace perception

} // namespace apollo

实现组件源文件

对于源文件Init 和 Proc 这两个函数需要实现,代码就不贴了,下面会对该部分代码解析

BUILD 文件地址是:

apollo/modules/perception/onboard/component/BUILD

BUILD 文件定义了 perception 中所有的 component 如 camera,radar,lidar 等的信息,本文只关注 Detection。

cc_library(

name = "detection_component",

srcs = ["detection_component.cc"],

hdrs = ["detection_component.h"],

deps = [

":lidar_inner_component_messages",

"//cyber/time:clock",

"//modules/common/util:string_util",

"//modules/perception/common/sensor_manager",

"//modules/perception/lib/registerer",

"//modules/perception/lidar/common",

"//modules/perception/lidar/app:lidar_obstacle_detection",

"//modules/perception/lidar/lib/ground_detector/spatio_temporal_ground_detector",

"//modules/perception/lidar/lib/interface",

"//modules/perception/lidar/lib/object_builder",

"//modules/perception/lidar/lib/object_filter_bank/roi_boundary_filter",

"//modules/perception/lidar/lib/roi_filter/hdmap_roi_filter",

"//modules/perception/lidar/lib/scene_manager/ground_service",

"//modules/perception/lidar/lib/scene_manager/roi_service",

"//modules/perception/lidar/lib/detector/point_pillars_detection:point_pillars_detection",

"//modules/perception/lidar/lib/detector/cnn_segmentation:cnn_segmentation",

"//modules/perception/lidar/lib/detector/ncut_segmentation:ncut_segmentation",

"//modules/perception/onboard/common_flags",

"//modules/perception/onboard/proto:lidar_component_config_cc_proto",

"//modules/perception/onboard/transform_wrapper",

"@eigen",

],

)

设置配置文件

一个 Component 的配置文件有 2 种:

- DAG

- Launch

DAG 定义了模块的依赖关系,Launch 文件定义了模块的启动。

下面看激光雷达感知的Launch 文件:

launch文件:Apollo/modules/perception/production/launch/perception_lidar.launch

<!--this file list the modules which will be loaded dynamicly and

their process name to be running in -->

<cyber>

<desc>cyber modules list config</desc>

<version>1.0.0</version>

<!-- sample module config, and the files should have relative path like

./bin/cyber_launch

./bin/mainboard

./conf/dag_streaming_0.conf -->

<module>

<name>perception_lidar</name>

<dag_conf>/apollo/modules/perception/production/dag/dag_streaming_perception_lidar.dag</dag_conf>

<!-- if not set, use default process -->

<process_name>perception_lidar</process_name>

<version>1.0.0</version>

</module>

</cyber>

对应的dag文件:Apollo/modules/perception/production/dag/dag_streaming_perception_lidar.dag其中包括DetectionComponent, RecognitionComponent, FusionComponent, V2XFusionComponent四个组件类,即检测,识别跟踪、融合、车联网融合。单对于lidar模块,主要就是检测和识别跟踪两个组件类的具体实现,融合和车联网融合是lidar模块输出结果的后续处理。

dag_streaming_perception_lidar.dag内容:

module_config {

module_library : "/apollo/bazel-bin/modules/perception/onboard/component/libperception_component_lidar.so"

components {

class_name : "DetectionComponent"

config {

name: "Velodyne128Detection"

config_file_path: "/apollo/modules/perception/production/conf/perception/lidar/velodyne128_detection_conf.pb.txt"

flag_file_path: "/apollo/modules/perception/production/conf/perception/perception_common.flag"

readers {

channel: "/apollo/sensor/lidar128/compensator/PointCloud2"

}

}

}

components {

class_name : "RecognitionComponent"

config {

name: "RecognitionComponent"

config_file_path: "/apollo/modules/perception/production/conf/perception/lidar/recognition_conf.pb.txt"

readers {

channel: "/perception/inner/DetectionObjects"

}

}

}

components {

class_name: "FusionComponent"

config {

name: "SensorFusion"

config_file_path: "/apollo/modules/perception/production/conf/perception/fusion/fusion_component_conf.pb.txt"

readers {

channel: "/perception/inner/PrefusedObjects"

}

}

}

}

module_config {

module_library : "/apollo/bazel-bin/modules/v2x/fusion/apps/libv2x_fusion_component.so"

components {

class_name : "V2XFusionComponent"

config {

name : "v2x_fusion"

flag_file_path : "/apollo/modules/v2x/conf/v2x_fusion_tracker.conf"

readers: [

{

channel: "/perception/vehicle/obstacles"

}

]

}

}

}

该类配置参数proto定义:modules/perception/onboard/proto/lidar_component_config.proto,

具体实现文件在:modules/perception/production/conf/perception/lidar/velodyne128_detection_conf.pb.txt,每个激光雷达一个实现文件。

通过modules/perception/lidar/app/lidar_obstacle_detection.cc中的LidarObstacleDetection类完成实际激光雷达点云障碍物检测工作;

Apollo7.0的lidar模块的处理过程就是:DetectionComponent(检测) >> RecognitionComponent(识别跟踪)。

component位于:

Apollo/modules/perception/onboard/component/detection_component.cc

Apollo/modules/perception/onboard/component/recognition_component.cc

启动组件

定义了一个 component 相关的文档后,就可以启动组件:

-

使用launch文件来启动

cyber_launch start apollo/modules/perception/production/launch/perception_lidar.launch

激光感知

先看perception模块的目录结构

.

|-- BUILD // 基础类

|-- base // 基础类

|-- camera // 相机相关

|-- common // 公共目录

|-- data // 相机的内参和外参

|-- fusion // 传感器融合

|-- inference // 深度学习推理模块

|-- lib // 一些基础的库,包括线程、文件配置等

|-- lidar // 激光雷达相关

|-- map // 地图

|-- onboard // 各个子模块的入口

|-- production // 感知模块入口 --- 通过cyber启动子模块

|-- proto // 数据格式,protobuf

|-- radar // 毫米波雷达

|-- testdata // 几个模块的测试数据

`-- tool // 离线测试工具

目录结构

文件夹结构:以下文件夹都是在perception/lidar/下

-

app——lidar应用类,主处理类,即最终应用程序应该是实例化该文件夹下的类来完成

-

common——定义lidar感知模块需要用的通用数据结构,例如LidarFrame,通用处理方法等;

-

lib——激光雷达感知中算法实现库

-

interface——各种算法类的基类定义,作为算法通用类的接口

-

lib/roi_filter——包含hdmap_roi_filter和roi_service_filter两个文件夹,前者用来利用高精度地图的信息来对LidarFrame中给出的高精度地图查询信息对点云进行ROI限制。

-

perception/map/hdmap——用在感知模块用来查询与高精度地图相关的信息。

launch文件:Apollo/modules/perception/production/launch/perception_lidar.launch

对应的dag文件:Apollo/modules/perception/production/dag/dag_streaming_perception_lidar.dag

launch文件用来启动,dag文件描述了整个系统的拓扑关系,也定义了每个Component需要订阅的话题

launch文件和dag文件上面有介绍,就不多赘述了,下面直接看modules/perception/onboard/component/detection_component.cc代码

源码剖析

先看检测部分代码,对应文件:detection_component.cc

detection——init

bool DetectionComponent::Init()

{

// 读取配置文件,配置文件定义:modules/perception/onboard/proto/lidar_component_config.proto

LidarDetectionComponentConfig comp_config;

if (!GetProtoConfig(&comp_config))

{

return false;

}

ADEBUG << "Lidar Component Configs: " << comp_config.DebugString(); // DebugString:打印输出comp_config对象

output_channel_name_ = comp_config.output_channel_name();

sensor_name_ = comp_config.sensor_name();

detector_name_ = comp_config.detector_name();

lidar2novatel_tf2_child_frame_id_ = comp_config.lidar2novatel_tf2_child_frame_id();

lidar_query_tf_offset_ = static_cast<float>(comp_config.lidar_query_tf_offset());

enable_hdmap_ = comp_config.enable_hdmap();

// 发布消息

writer_ = node_->CreateWriter<LidarFrameMessage>(output_channel_name_);

/**配置文件参数

* Apollo/modules/perception/production/conf/perception/lidar/velodyne128_detection_conf.pb.txt:

* sensor_name: "velodyne128"

* enable_hdmap: true

* lidar_query_tf_offset: 0

* lidar2novatel_tf2_child_frame_id: "velodyne128"

* output_channel_name: "/perception/inner/DetectionObjects"

*/

// 初始化成员算法类

if (!InitAlgorithmPlugin())

{

AERROR << "Failed to init detection component algorithm plugin.";

return false;

}

return true;

}

LidarDetectionComponentConfig 路径:{apollo/modules/perception/onboard/proto/lidar_component_config.proto

InitAlgorithmPlugin

在调用各模块类的处理逻辑process,先对个模块功能类进行参数初始化,先调用LidarObstacleDetection::Init,后分别分别调用各模块功能类(预处理,根据高精度hdmap获取离定位点一定范围的道路、交叉路口信息,pointpillar目标检测)的init方法

bool DetectionComponent::InitAlgorithmPlugin()

{

// 读取传感器元数据,元数据的读取是通过SensorManager来完成的,SensorManager 类经宏定义 DECLARE_SINGLETON(SensorManager) 修饰成为单例类,单例对象调用GetSensorInfo函数获取传感器名sensor_name_对应的传感器信息SensorInfo

// 其在初始化时会读取modules/perception/production/data/perception/common/sensor_meta.pt的包含所有传感器元数据的列表

ACHECK(common::SensorManager::Instance()->GetSensorInfo(sensor_name_,&sensor_info_));

// apollo/modules/perception/lib/registerer/registerer.h

// 父类指针detector指向子类LidarObstacleDetection的对象

lidar::BaseLidarObstacleDetection *detector = lidar::BaseLidarObstacleDetectionRegisterer::GetInstanceByName(detector_name_); // 调用宏定义类的静态方法

CHECK_NOTNULL(detector);

detector_.reset(detector);

// lidar型号,hdmap是否使用

lidar::LidarObstacleDetectionInitOptions init_options;

init_options.sensor_name = sensor_name_;

// 调用DEFINE_bool宏获取hdmap选择FLAGS_obs_enable_hdmap_input

// DEFINE_bool宏位于:modules/perception/onboard/common_flags/common_flags.cpp

init_options.enable_hdmap_input = FLAGS_obs_enable_hdmap_input && enable_hdmap_;

// 多态性:子类LidarObstacleDetection重写父类BaseLidarObstacleDetection的虚函数init

// 调用子类LidarObstacleDetection的init函数

ACHECK(detector_->Init(init_options)) << "lidar obstacle detection init error";

lidar2world_trans_.Init(lidar2novatel_tf2_child_frame_id_);

return true;

}

1.获取传感器信息sensor_info_,对应代码:

lidar::BaseLidarObstacleDetection *detector = lidar::BaseLidarObstacleDetectionRegisterer::GetInstanceByName(detector_name_); // 调用宏定义类的静态方法

CHECK_NOTNULL(detector);

detector_.reset(detector);

SensorManager类路径:apollo/modules/perception/common/sensor_manager/sensor_manager.cc

common::SensorManager::Instance()会返回SensorManager的唯一实例,同时调用构造函数,而构造函数又调用Init()方法,对传感器元数据初始化,会读取modules/perception/production/data/perception/common/sensor_meta.pt的包含所有传感器元数据,并将传感器名字和传感器信息SensorInfo存储在sensor_info_map_字典

// glog 提供了CHECK()宏帮助我们检查程序的错误,当CHECK()的条件不满足时,它会记录FATAL日志并终止程序

SensorManager::SensorManager() { CHECK_EQ(this->Init(), true); }

bool SensorManager::Init()

{

std::lock_guard<std::mutex> lock(mutex_);

if (inited_)

{

return true;

}

sensor_info_map_.clear();

distort_model_map_.clear();

undistort_model_map_.clear();

// 传感器元数据(名字,传感器型号,摆放位置)文件路径: apollo/modules/perception/production/data/perception/common/sensor_meta.pt

// 调用gflags库DEFINE_type宏获取传感器元数据文件路径FLAGS_obs_sensor_meta_path

const std::string file_path = cyber::common::GetAbsolutePath(lib::ConfigManager::Instance()->work_root(), FLAGS_obs_sensor_meta_path);

MultiSensorMeta sensor_list_proto;

// 从文件中读取信息存储到sensor_list_proto中

if (!GetProtoFromASCIIFile(file_path, &sensor_list_proto))

{

AERROR << "Invalid MultiSensorMeta file: " << FLAGS_obs_sensor_meta_path;

return false;

}

auto AddSensorInfo = [this](const SensorMeta &sensor_meta_proto)

{

SensorInfo sensor_info;

sensor_info.name = sensor_meta_proto.name();

sensor_info.type = static_cast<SensorType>(sensor_meta_proto.type());

sensor_info.orientation =

static_cast<SensorOrientation>(sensor_meta_proto.orientation());

sensor_info.frame_id = sensor_meta_proto.name();

// sensor_info_map_字典存储传感器名字和传感器信息SensorInfo

// SensorInfo 结构体类型 位于apollo/modules/perception/base/sensor_meta.h

auto pair = sensor_info_map_.insert(

make_pair(sensor_meta_proto.name(), sensor_info));

if (!pair.second)

{

AERROR << "Duplicate sensor name error.";

return false;

}

for (const SensorMeta &sensor_meta_proto : sensor_list_proto.sensor_meta())

{

if (!AddSensorInfo(sensor_meta_proto))

{

AERROR << "Failed to add sensor_info: " << sensor_meta_proto.name();

return false;

}

}

inited_ = true;

AINFO << "Init sensor_manager success.";

return true;

}

// 根据传感器名获取传感器信息SensorInfo

bool SensorManager::GetSensorInfo(const std::string &name,

SensorInfo *sensor_info) const

{

if (sensor_info == nullptr)

{

AERROR << "Nullptr error.";

return false;

}

const auto &itr = sensor_info_map_.find(name);

if (itr == sensor_info_map_.end())

{

return false;

}

*sensor_info = itr->second;

return true;

}

apollo/modules/perception/proto/sensor_meta_schema.proto

传感器信息proto字段

message SensorMeta {

enum SensorType {

UNKNOWN_SENSOR_TYPE = -1;

VELODYNE_64 = 0;

VELODYNE_32 = 1;

VELODYNE_16 = 2;

LDLIDAR_4 = 3;

LDLIDAR_1 = 4;

SHORT_RANGE_RADAR = 5;

LONG_RANGE_RADAR = 6;

MONOCULAR_CAMERA = 7;

STEREO_CAMERA = 8;

ULTRASONIC = 9;

VELODYNE_128 = 10;

}

enum SensorOrientation {

FRONT = 0;

LEFT_FORWARD = 1;

LEFT = 2;

LEFT_BACKWARD = 3;

REAR = 4;

RIGHT_BACKWARD = 5;

RIGHT = 6;

RIGHT_FORWARD = 7;

PANORAMIC = 8;

}

optional string name = 1;

optional SensorType type = 2;

optional SensorOrientation orientation = 3;

}

message MultiSensorMeta {

repeated SensorMeta sensor_meta = 1;

}

SensorInfo类型,位于位于apollo/modules/perception/base/sensor_meta.h

struct SensorInfo {

std::string name = "UNKNONW_SENSOR";

SensorType type = SensorType::UNKNOWN_SENSOR_TYPE;

SensorOrientation orientation = SensorOrientation::FRONT;

std::string frame_id = "UNKNOWN_FRAME_ID";

void Reset() {

name = "UNKNONW_SENSOR";

type = SensorType::UNKNOWN_SENSOR_TYPE;

orientation = SensorOrientation::FRONT;

frame_id = "UNKNOWN_FRAME_ID";

}

};

2.lidar障碍物检测基类对象指针指向子类的对象,对应代码:

lidar::BaseLidarObstacleDetection *detector = lidar::BaseLidarObstacleDetectionRegisterer::GetInstanceByName(detector_name_); // 调用宏定义类的静态方法

BaseLidarObstacleDetectionRegistere作为雷达障碍物检测的基类,通过多态形式调用子类的init函数,路径:

apollo/modules/perception/lidar/lib/interface/base_lidar_obstacle_detection.h

lidar::BaseLidarObstacleDetectionRegisterer调用一个宏定义类,类BaseLidarObstacleDetectionRegisterer路径:apollo/modules/perception/lib/registerer/registerer.h

#define PERCEPTION_REGISTER_REGISTERER(base_class)

class base_class##Registerer {

typedef ::apollo::perception::lib::Any Any;

typedef ::apollo::perception::lib::FactoryMap FactoryMap;

public:

static base_class *GetInstanceByName(const ::std::string &name) {

FactoryMap &map =

::apollo::perception::lib::GlobalFactoryMap()[#base_class];

FactoryMap::iterator iter = map.find(name);

if (iter == map.end()) {

for (auto c : map) {

AERROR << "Instance:" << c.first;

}

AERROR << "Get instance " << name << " failed.";

return nullptr;

}

Any object = iter->second->NewInstance();

return *(object.AnyCast<base_class *>());

}

static std::vector<base_class *> GetAllInstances() {

std::vector<base_class *> instances;

FactoryMap &map =

::apollo::perception::lib::GlobalFactoryMap()[#base_class];

instances.reserve(map.size());

for (auto item : map) {

Any object = item.second->NewInstance();

instances.push_back(*(object.AnyCast<base_class *>()));

}

return instances;

}

static const ::std::string GetUniqInstanceName() {

FactoryMap &map =

::apollo::perception::lib::GlobalFactoryMap()[#base_class];

CHECK_EQ(map.size(), 1U) << map.size();

return map.begin()->first;

}

static base_class *GetUniqInstance() {

FactoryMap &map =

::apollo::perception::lib::GlobalFactoryMap()[#base_class];

CHECK_EQ(map.size(), 1U) << map.size();

Any object = map.begin()->second->NewInstance();

return *(object.AnyCast<base_class *>());

}

static bool IsValid(const ::std::string &name) {

FactoryMap &map =

::apollo::perception::lib::GlobalFactoryMap()[#base_class];

return map.find(name) != map.end();

}

};

3.初始化雷达型号,是否使用hdmap,作为类LidarObstacleDetection的init函数传入参数

lidar::LidarObstacleDetectionInitOptions init_options;

init_options.sensor_name = sensor_name_;

// 调用DEFINE_bool宏返回FLAGS_obs_enable_hdmap_input,bool类型,表达是否有hdmap输入

// DEFINE_bool宏位于:modules/perception/onboard/common_flags/common_flags.cpp

init_options.enable_hdmap_input = FLAGS_obs_enable_hdmap_input && enable_hdmap_;

类LidarObstacleDetectionInitOptions位于

apollo/modules/perception/lidar/lib/interface/base_lidar_obstacle_detection.h

struct LidarObstacleDetectionInitOptions {

std::string sensor_name = "velodyne64";

bool enable_hdmap_input = true;

};

4.调用子类LidarObstacleDetection的init函数初始化参数

// 多态性:子类LidarObstacleDetection重写父类BaseLidarObstacleDetection的虚函数init

// 调用子类LidarObstacleDetection的init函数

ACHECK(detector_->Init(init_options)) << "lidar obstacle detection init error";

Init函数位于:apollo/modules/perception/lidar/app/lidar_obstacle_detection.cc

下面看雷达障碍物检测类的初始化函数init,初始化各模块的参数

bool LidarObstacleDetection::Init(const LidarObstacleDetectionInitOptions &options)

{

auto &sensor_name = options.sensor_name; // 传感器名,如"velodyne128"

// ConfigManager类经宏定义 DECLARE_SINGLETON(ConfigManager) 修饰成为单例类

auto config_manager = lib::ConfigManager::Instance();

const lib::ModelConfig *model_config = nullptr;

// 获取 LidarObstacleDetection 配置参数model_config,第一次调用GetModelConfig将各模块功能类和其配置参数存储在字典,从字典查找LidarObstacleDetection对应的参数信息

// LidarObstacleDetection在文件中是存储在 apollo/modules/perception/production/conf/perception/lidar/modules/lidar_obstacle_pipeline.config

ACHECK(config_manager->GetModelConfig(Name(), &model_config));

const std::string work_root = config_manager->work_root();

std::string config_file;

std::string root_path;

// root_path:./data/perception/lidar/models/lidar_obstacle_pipeline

ACHECK(model_config->get_value("root_path", &root_path));

// apollo/modules/perception/production/lidar/models/lidar_obstacle_pipeline

config_file = cyber::common::GetAbsolutePath(work_root, root_path);

// apollo/modules/perception/production/data/perception/lidar/models/lidar_obstacle_pipeline/velodyne128

config_file = cyber::common::GetAbsolutePath(config_file, sensor_name);

// apollo/modules/perception/production/data/perception/lidar/models/lidar_obstacle_pipeline/velodyne128/lidar_obstacle_detection.conf

config_file = cyber::common::GetAbsolutePath(config_file, "lidar_obstacle_detection.conf");

/*

message LidarObstacleDetectionConfig {

optional string preprocessor = 1 [default = "PointCloudPreprocessor"];

optional string detector = 2 [default = "PointPillarsDetection"];

optional bool use_map_manager = 3 [default = true];

optional bool use_object_filter_bank = 4 [default = true];

}

*/

LidarObstacleDetectionConfig config;

// 把lidar_obstacle_detection.conf写入proto LidarObstacleDetectionConfig信息中

ACHECK(cyber::common::GetProtoFromFile(config_file, &config));

use_map_manager_ = config.use_map_manager(); // true

use_object_filter_bank_ = config.use_object_filter_bank(); // true

// PointPillarsDetection

use_object_builder_ = ("PointPillarsDetection" != config.detector() ||

"MaskPillarsDetection" != config.detector());

use_map_manager_ = use_map_manager_ && options.enable_hdmap_input; // true

SceneManagerInitOptions scene_manager_init_options;

ACHECK(SceneManager::Instance().Init(scene_manager_init_options));

// 是否使用高精度地图

if (use_map_manager_)

{

MapManagerInitOptions map_manager_init_options;

// hdmap初始化

if (!map_manager_.Init(map_manager_init_options))

{

AINFO << "Failed to init map manager.";

use_map_manager_ = false;

}

}

// 激光点云预处理:初始化基类对象,让其指针指向子类

BasePointCloudPreprocessor *preprocessor = BasePointCloudPreprocessorRegisterer::GetInstanceByName(config.preprocessor());

CHECK_NOTNULL(preprocessor);

cloud_preprocessor_.reset(preprocessor);

// 激光雷达的型号

PointCloudPreprocessorInitOptions preprocessor_init_options;

preprocessor_init_options.sensor_name = sensor_name;

// 点云预处理初始化

ACHECK(cloud_preprocessor_->Init(preprocessor_init_options)) << "lidar preprocessor init error";

// 激光障碍物检测

BaseLidarDetector *detector = BaseLidarDetectorRegisterer::GetInstanceByName(config.detector());

BaseLidarDetectorRegisterer::GetInstanceByName(config.detector());

detector_.reset(detector);

LidarDetectorInitOptions detection_init_options;

detection_init_options.sensor_name = sensor_name;

// 激光雷达障碍物检测初始化

ACHECK(detector_->Init(detection_init_options)) << "lidar detector init error";

if (use_object_builder_)

{

// ObjectBuilder:构建障碍物目标包围框类信息

ObjectBuilderInitOptions builder_init_options;

ACHECK(builder_.Init(builder_init_options));

}

if (use_object_filter_bank_)

{

ObjectFilterInitOptions filter_bank_init_options;

filter_bank_init_options.sensor_name = sensor_name;

// ObjectFilterBank: 调用ObjectFilterBank:对目标进行ROIBoundaryFilter

ACHECK(filter_bank_.Init(filter_bank_init_options));

}

return true;

}

1.先定义单例类ConfigManager对象,调用GetModelConfig获取障碍物检测类的配置参数

根据 LidarObstacleDetection类名获取其 配置参数,第一次调用GetModelConfig,它将进一步调用init函数将各模块功能类和其配置参数存储在字典中,从字典查找LidarObstacleDetection对应的参数信息,存储在存储在ModelConfig类对象中,然后利用ModelConfig类的get_value函数,就可以对应查询到具体的配置参数数值了

LidarObstacleDetection在文件中是存储在

apollo/modules/perception/production/conf/perception/lidar/modules/lidar_obstacle_pipeline.config

auto config_manager = lib::ConfigManager::Instance();

const lib::ModelConfig *model_config = nullptr;

ACHECK(config_manager->GetModelConfig(Name(), &model_config));

ACHECK(model_config->get_value("root_path", &root_path));

类ConfigManager位于:apollo/modules/perception/lib/config_manager/config_manager.cc

下面具体解析下GetModelConfig函数,直接看代码:

// 根据类名获取模型配置参数

bool ConfigManager::GetModelConfig(const std::string &model_name,const ModelConfig **model_config) {

if (!inited_ && !Init()) {

return false;

}

auto citer = model_config_map_.find(model_name);

if (citer == model_config_map_.end()) {

return false;

}

*model_config = citer->second;

return true;

}

bool ConfigManager::Init() {

MutexLock lock(&mutex_);

return InitInternal();

}

bool ConfigManager::InitInternal() {

if (inited_) {

return true;

}

// 释放内存

for (auto iter = model_config_map_.begin(); iter != model_config_map_.end();

++iter) {

delete iter->second;

}

model_config_map_.clear();

// 调用gflags库DEFINE_type宏获取传感器的参数文件路径FLAGS_config_manager_path

// FLAGS_config_manager_path = "./conf"

// apollo/modules/perception/production/conf/

std::string config_module_path = GetAbsolutePath(work_root_, FLAGS_config_manager_path);

AINFO << "WORK_ROOT: " << work_root_ << " config_root_path: " << config_module_path;

std::vector<std::string> model_config_files;

// 递归遍历conf文件夹下所有文件名,返回后缀包含config_manager的所有文件路径model_config_files

if (!common::GetFileList(config_module_path, "config_manager.config",

&model_config_files)) {

AERROR << "config_root_path : " << config_module_path << " get file list error.";

return false;

}

for (const auto &model_config_file : model_config_files) {

// 用定义的proto message ModelConfigFileListProto 读取文件 config_manager.config 的model_config_path参数

ModelConfigFileListProto file_list_proto;

if (!GetProtoFromASCIIFile(model_config_file, &file_list_proto)) {

AERROR << "Invalid ModelConfigFileListProto file: " << model_config_file;

return false;

}

// model_config_path : 每一个后缀名为"config_manager.config"的文件

for (const std::string &model_config_path : file_list_proto.model_config_path()) {

// 获取绝对路径

const std::string abs_path = GetAbsolutePath(work_root_, model_config_path);

// 用定义的proto message MultiModelConfigProto 读取文件 参数信息,存储moudle下所有文件名

MultiModelConfigProto multi_model_config_proto;

if (!GetProtoFromASCIIFile(abs_path, &multi_model_config_proto)) {

AERROR << "Invalid MultiModelConfigProto file: " << abs_path;

return false;

}

// model_config_proto 每一个模块下各功能的配置参数

for (const ModelConfigProto &model_config_proto : multi_model_config_proto.model_configs()) {

// // 用定义的proto message ModelConfig 读取moudle下所有文件的参数信息

ModelConfig *model_config = new ModelConfig();

if (!model_config->Reset(model_config_proto)) {

return false;

}

AINFO << "load ModelConfig succ. name: " << model_config->name();

// 将各模块功能类名和配置参数存储在字典 model_config_map_ 中

auto result = model_config_map_.emplace(model_config->name(), model_config);

if (!result.second) {

AWARN << "duplicate ModelConfig, name: " << model_config->name();

return false;

}

}

}

}

用Proto读取文件参数,最终会将各模块功能类名和配置参数存储在字典 model_config_map_ ,定义proto message信息位于:apollo/modules/perception/proto/perception_config_schema.proto文件中,如下:

syntax = "proto2";

package apollo.perception;

message KeyValueInt {

optional string name = 1;

optional int32 value = 2;

}

message KeyValueString {

optional string name = 1;

optional bytes value = 2;

}

message KeyValueDouble {

optional string name = 1;

optional double value = 2;

}

message KeyValueFloat {

optional string name = 1;

optional float value = 2;

}

message KeyValueBool {

optional string name = 1;

optional bool value = 2;

}

message KeyValueArrayInt {

optional string name = 1;

repeated int32 values = 2;

}

message KeyValueArrayString {

optional string name = 1;

repeated bytes values = 2;

}

message KeyValueArrayDouble {

optional string name = 1;

repeated double values = 2;

}

message KeyValueArrayFloat {

optional string name = 1;

repeated float values = 2;

}

message KeyValueArrayBool {

optional string name = 1;

repeated bool values = 2;

}

message ModelConfigProto {

optional string name = 1;

optional string version = 2;

repeated KeyValueInt integer_params = 3;

repeated KeyValueString string_params = 4;

repeated KeyValueDouble double_params = 5;

repeated KeyValueFloat float_params = 6;

repeated KeyValueBool bool_params = 7;

repeated KeyValueArrayInt array_integer_params = 8;

repeated KeyValueArrayString array_string_params = 9;

repeated KeyValueArrayDouble array_double_params = 10;

repeated KeyValueArrayFloat array_float_params = 11;

repeated KeyValueArrayBool array_bool_params = 12;

}

message MultiModelConfigProto {

repeated ModelConfigProto model_configs = 1;

}

message ModelConfigFileListProto {

repeated string model_config_path = 1;

}

每一个message就相当于定义了一个struct,其中包含许多成员变量。其中ModelConfigFileListProto定义了一个向量,用来指定每个具体参数配置文件的位置,而MultiModelConfigProto则定义了一个ModelConfigProto类型的向量,即定义的具体配置参数,从ModelConfigProto类型的message文件不难看出,其实所谓的配置参数,就是string类型到不同数据类型的一个map映射。

拿lidar模块的配置参数为例,首先由apollo/modules/perception/production/conf/perception/lidar/config_manager.config文件实例化上述protobuf文件中定义的message ModelConfigFileListProto:

model_config_path: "./conf/perception/lidar/modules/map_manager.config"

model_config_path: "./conf/perception/lidar/modules/scene_manager.config"

model_config_path: "./conf/perception/lidar/modules/object_filter_bank.config"

model_config_path: "./conf/perception/lidar/modules/pointcloud_preprocessor.config"

model_config_path: "./conf/perception/lidar/modules/roi_boundary_filter.config"

model_config_path: "./conf/perception/lidar/modules/hdmap_roi_filter.config"

model_config_path: "./conf/perception/lidar/modules/cnnseg.config"

model_config_path: "./conf/perception/lidar/modules/ncut.config"

model_config_path: "./conf/perception/lidar/modules/spatio_temporal_ground_detector.config"

model_config_path: "./conf/perception/lidar/modules/lidar_obstacle_pipeline.config"

model_config_path: "./conf/perception/lidar/modules/fused_classifier.config"

model_config_path: "./conf/perception/lidar/modules/multi_lidar_fusion.config"

model_config_path: "./conf/perception/lidar/modules/roi_service.config"

model_config_path: "./conf/perception/lidar/modules/ground_service.config"

model_config_path: "./conf/perception/lidar/modules/ground_service_detector.config"

可以看出其实就是对ModelConfigFileListProto消息中的model_config_path成员变量进行了实例化,指明了参数定义文件的路径。例如lidar_obstacle_pipeline.config,我们打开modules/conti_ars_preprocessor.config看下具体内容:

model_configs {

name: "LidarObstacleDetection"

version: "1.0.0"

string_params {

name: "root_path"

value: "./data/perception/lidar/models/lidar_obstacle_pipeline"

}

}

model_configs {

name: "LidarObstacleTracking"

version: "1.0.0"

string_params {

name: "root_path"

value: "./data/perception/lidar/models/lidar_obstacle_pipeline"

}

}

可以看到,该配置文件包含激光雷达检测和跟踪两个功能,这个文件其实就是对MultiModelConfigProto消息中的model_configs成员变量进行了初始化,name是功能类名

detection——Proc

初始化函数剖析完了,接下来看组件detection_component的Proc函数,主要调用Process() 算法处理逻辑

bool DetectionComponent::Proc(const std::shared_ptr<drivers::PointCloud> &message)

{

AINFO << std::setprecision(16)

<< "Enter detection component, message timestamp: "

<< message->measurement_time()

<< " current timestamp: " << Clock::NowInSeconds();

auto out_message = std::make_shared<LidarFrameMessage>();

bool status = InternalProc(message, out_message);

if (status)

{

writer_->Write(out_message);

AINFO << "Send lidar detect output message.";

}

return status;

}

Proc调用InternalProc方法,InternalProc继续调用apollo/modules/perception/lidar/app/lidar_obstacle_detection.h中的Process() 算法处理逻辑

看InternalProc方法,备注源码如下:

bool DetectionComponent::InternalProc(

const std::shared_ptr<const drivers::PointCloud> &in_message,

const std::shared_ptr<LidarFrameMessage> &out_message)

/*

输入:drivers::PointCloud 原始点云

输出:LidarFrameMessaglidar 处理结果

*/

{ // 序列号

uint32_t seq_num = seq_num_.fetch_add(1);

// 时间戳

const double timestamp = in_message->measurement_time();

// 当前时间

const double cur_time = Clock::NowInSeconds();

const double start_latency = (cur_time - timestamp) * 1e3;

AINFO << std::setprecision(16) << "FRAME_STATISTICS:Lidar:Start:msg_time["

<< timestamp << "]:sensor[" << sensor_name_ << "]:cur_time[" << cur_time

<< "]:cur_latency[" << start_latency << "]";

out_message->timestamp_ = timestamp;

out_message->lidar_timestamp_ = in_message->header().lidar_timestamp();

out_message->seq_num_ = seq_num;

// 处理状态:检测

out_message->process_stage_ = ProcessStage::LIDAR_DETECTION;

// 错误码

out_message->error_code_ = apollo::common::ErrorCode::OK;

auto &frame = out_message->lidar_frame_;

// 并发对象池,结合单例模式,获取目标的智能指针

// 思想:一个对象只能有一个池子,用对象从池子里面取,每个池子有一个管理者来管理所对应的池子,取对象从管理者这里申请

frame = lidar::LidarFramePool::Instance().Get();

frame->cloud = base::PointFCloudPool::Instance().Get();

frame->timestamp = timestamp;

frame->sensor_info = sensor_info_;

Eigen::Affine3d pose = Eigen::Affine3d::Identity();

Eigen::Affine3d pose_novatel = Eigen::Affine3d::Identity();

const double lidar_query_tf_timestamp = timestamp - lidar_query_tf_offset_ * 0.001;

// 获取当前帧 坐标系到世界坐标系的位置变换,GPS到世界坐标系的位置变换

// transform_wrapper原理和ROS tf变换一致,首先各传感器的位置关系需要通过广播形式发送出去

if (!lidar2world_trans_.GetSensor2worldTrans(lidar_query_tf_timestamp, &pose, &pose_novatel))

{

out_message->error_code_ = apollo::common::ErrorCode::PERCEPTION_ERROR_TF;

AERROR << "Failed to get pose at time: " << lidar_query_tf_timestamp;

return false;

}

frame->lidar2world_pose = pose;

frame->novatel2world_pose = pose_novatel;

// 传感器名和lidar传感器转GPS的外参转换矩阵

lidar::LidarObstacleDetectionOptions detect_opts;

detect_opts.sensor_name = sensor_name_;

// lidar到世界坐标系的变换 *lidar2world_trans_ = *detect_opts.sensor2novatel_extrinsics

lidar2world_trans_.GetExtrinsics(&detect_opts.sensor2novatel_extrinsics);

// 掉用LidarObstacleDetection类的Process方法

// frame.get() 获取存储的指针

lidar::LidarProcessResult ret = detector_->Process(detect_opts, in_message, frame.get());

if (ret.error_code != lidar::LidarErrorCode::Succeed)

{

out_message->error_code_ =

apollo::common::ErrorCode::PERCEPTION_ERROR_PROCESS;

AERROR << "Lidar detection process error, " << ret.log;

return false;

}

return true;

}

看下LidarFrameMessage和LidarFrame,字段需要了解,不然都不知道啥意思

- 类

LidarFrameMessage

class LidarFrameMessage {

public:

LidarFrameMessage() : lidar_frame_(nullptr) {

type_name_ = "LidarFrameMessage";

}

~LidarFrameMessage() = default;

std::string GetTypeName() const { return type_name_; }

LidarFrameMessage* New() const { return new LidarFrameMessage; }

public:

double timestamp_ = 0.0;

uint64_t lidar_timestamp_ = 0;

uint32_t seq_num_ = 0;

std::string type_name_;

ProcessStage process_stage_ = ProcessStage::UNKNOWN_STAGE;

apollo::common::ErrorCode error_code_ = apollo::common::ErrorCode::OK;

std::shared_ptr<lidar::LidarFrame> lidar_frame_;

};

- 结构体:

LidarFrame

struct LidarFrame {

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

// point cloud

std::shared_ptr<base::AttributePointCloud<base::PointF>> cloud;

// world point cloud

std::shared_ptr<base::AttributePointCloud<base::PointD>> world_cloud;

// timestamp

double timestamp = 0.0;

// lidar to world pose

Eigen::Affine3d lidar2world_pose = Eigen::Affine3d::Identity();

// lidar to world pose

Eigen::Affine3d novatel2world_pose = Eigen::Affine3d::Identity();

// hdmap struct

std::shared_ptr<base::HdmapStruct> hdmap_struct = nullptr;

// segmented objects

std::vector<std::shared_ptr<base::Object>> segmented_objects;

// tracked objects

std::vector<std::shared_ptr<base::Object>> tracked_objects;

// point cloud roi indices

base::PointIndices roi_indices;

// point cloud non ground indices

base::PointIndices non_ground_indices;

// secondary segmentor indices

base::PointIndices secondary_indices;

// sensor info

base::SensorInfo sensor_info;

// reserve string

std::string reserve;

void Reset() {

if (cloud) {

cloud->clear();

}

if (world_cloud) {

world_cloud->clear();

}

timestamp = 0.0;

lidar2world_pose = Eigen::Affine3d::Identity();

novatel2world_pose = Eigen::Affine3d::Identity();

if (hdmap_struct) {

hdmap_struct->road_boundary.clear();

hdmap_struct->road_polygons.clear();

hdmap_struct->junction_polygons.clear();

hdmap_struct->hole_polygons.clear();

}

segmented_objects.clear();

tracked_objects.clear();

roi_indices.indices.clear();

non_ground_indices.indices.clear();

secondary_indices.indices.clear();

}

void FilterPointCloud(base::PointCloud<base::PointF> *filtered_cloud,

const std::vector<uint32_t> &indices) {

if (cloud && filtered_cloud) {

filtered_cloud->CopyPointCloudExclude(*cloud, indices);

}

}

}; // struct LidarFrame

#define UNUSED(param) (void)param假如一个有返回值的函数 ,如调用时是没有使用它的返回值,编译器会给出一个警告 ,如果用void强制转换一下,则明确告诉编译器不使用返回值,也就是为了消除警告

接下来看detector_->Process() 算法逻辑

LidarProcessResult LidarObstacleDetection::Process(

const LidarObstacleDetectionOptions &options,const std::shared_ptr<apollo::drivers::PointCloud const> &message, LidarFrame *frame)

{

const auto &sensor_name = options.sensor_name;

// 用来屏蔽无效參数的,消除警告

PERF_FUNCTION_WITH_INDICATOR(options.sensor_name);

PERF_BLOCK_START();

PointCloudPreprocessorOptions preprocessor_options; // 传感器转GPS的外参

preprocessor_options.sensor2novatel_extrinsics = options.sensor2novatel_extrinsics;

PERF_BLOCK_END_WITH_INDICATOR(sensor_name, "preprocess");

// 点云预处理

if (cloud_preprocessor_->Preprocess(preprocessor_options, message, frame))

{

return ProcessCommon(options, frame); // 模型推理

}

return LidarProcessResult(LidarErrorCode::PointCloudPreprocessorError,

"Failed to preprocess point cloud.");

}

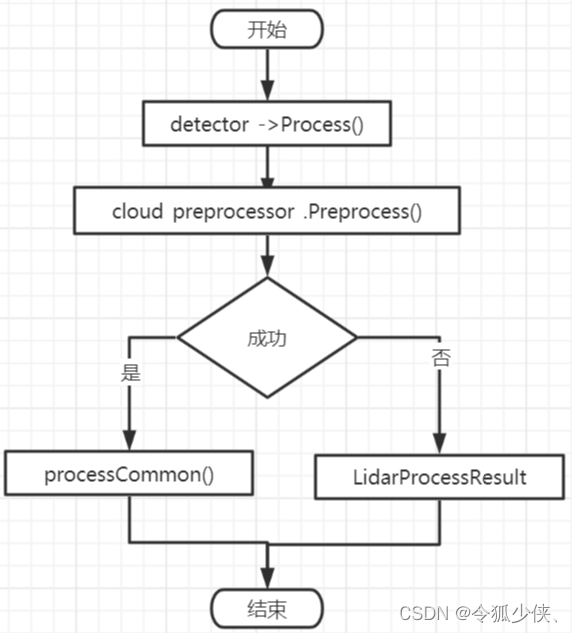

阅读代码,可以得到这样的流程图

点云预处理

比较简单,就是去除点云中的NaN点,对xyz范围过滤,剔除车身周围点,然后将点云(位置xyz,时间戳,高度height,beam_id,标签label)形式存储到LidarFrame结构体中,后将点云转换到世界坐标系下

bool PointCloudPreprocessor::Preprocess(

const PointCloudPreprocessorOptions& options,

const std::shared_ptr<apollo::drivers::PointCloud const>& message,

LidarFrame* frame) const {

if (frame == nullptr) {

return false;

}

if (frame->cloud == nullptr) {

// 点云对象池,结合单例设计模式

frame->cloud = base::PointFCloudPool::Instance().Get();

}

if (frame->world_cloud == nullptr) {

frame->world_cloud = base::PointDCloudPool::Instance().Get();

}

frame->cloud->set_timestamp(message->measurement_time());

if (message->point_size() > 0) {

// 设置该帧点云数大小

frame->cloud->reserve(message->point_size());

base::PointF point;

for (int i = 0; i < message->point_size(); ++i) {

const apollo::drivers::PointXYZIT& pt = message->point(i);

// 过滤无效点

if (filter_naninf_points_) {

if (std::isnan(pt.x()) || std::isnan(pt.y()) || std::isnan(pt.z())) {

continue;

}

// 过滤超范围的点

if (fabs(pt.x()) > kPointInfThreshold ||

fabs(pt.y()) > kPointInfThreshold ||

fabs(pt.z()) > kPointInfThreshold) {

continue;

}

}

Eigen::Vector3d vec3d_lidar(pt.x(), pt.y(), pt.z());

// 点在GPS坐标系的xyz位置

Eigen::Vector3d vec3d_novatel = options.sensor2novatel_extrinsics * vec3d_lidar;

// 过滤车身范围内的点云,消除车身反射的影响

if (filter_nearby_box_points_ && vec3d_novatel[0] < box_forward_x_ &&

vec3d_novatel[0] > box_backward_x_ &&

vec3d_novatel[1] < box_forward_y_ &&

vec3d_novatel[1] > box_backward_y_) {

continue;

}

// Z方向点云过滤

if (filter_high_z_points_ && pt.z() > z_threshold_) {

continue;

}

point.x = pt.x();

point.y = pt.y();

point.z = pt.z();

point.intensity = static_cast<float>(pt.intensity());

// 点的点云位置xyz,时间戳,高度height(float的最大值),beam_id(点在原始点云中的索引),标签label

frame->cloud->push_back(point, static_cast<double>(pt.timestamp()) * 1e-9,std::numeric_limits<float>::max(), i, 0);

}

// 将预处理后点云针转换到世界坐标系下

TransformCloud(frame->cloud, frame->lidar2world_pose, frame->world_cloud);

}

return true;

}

高精地图定位信息获取

简单介绍下高精度地图:

- 传统地图的技术尚未达到自动驾驶的需求,高精地图更类似于自动驾驶的专题组,但国内可能为了称谓方便还是称它为高精地图。高精地图并不是特指精度,它在描述上更加的全面、准确和清晰,对实时性的要求更高。

- 自动驾驶车辆搭载的传感器类型有很多,但64线激光雷达、Camera和Radar等传感器都有一定局限性,基于这些传感器本身的局限性,高精地图能够提供非常大的帮助。开发者可以把高精地图看作是离线的传感器,高精地图里已标注了道路元素的位置。出现物体的遮挡时,感知模块就可以提前做针对性的检测(感兴趣区域ROI),不仅可以减少感知模块的工作量,而且可以解决Deep Learning 的部分缺陷。识别可能会有些误差,但先验之后可提高识别率。

高精地图的主要特征:描述车道、车道的边界线、道路上各种交通设施和人行横道,即把所有东西、所有人能看到的影响交通驾驶行为的特性全部表述出来。

根据高精度地图hdmap(高度自动驾驶地图),查询当前帧点云的位置(常用采用ndt点云定位,它是一种scan-to-map的点云配准算法),获取高精地图中定位位置后,查找离定位位置距离为roi_search_distance_的所有道路、交叉路口的信息的road_polygons、road_boundary、hole_polygons、junction_polygons存到frame里

代码位置:apollo/modules/perception/lidar/lib/map_manager/map_manager.cc

bool MapManager::Update(const MapManagerOptions& options, LidarFrame* frame) {

if (!frame) {// 判断点云是否为空

AINFO << "Frame is nullptr.";

return false;

}

if (!(frame->hdmap_struct)) {

frame->hdmap_struct.reset(new base::HdmapStruct); // 重置地图

}

if (!hdmap_input_)

{ // 看看输入的地图是否为空初始化的时候给它赋的值

AINFO << "Hdmap input is nullptr";

return false;

}

if (update_pose_) // 是否需要更新位置

{ // 获取自身定位

if (!QueryPose(&(frame->lidar2world_pose))) {

AINFO << "Failed to query updated pose.";

}

}

base::PointD point; // 设置一个point接收定位信息

point.x = frame->lidar2world_pose.translation()(0);

point.y = frame->lidar2world_pose.translation()(1);

point.z = frame->lidar2world_pose.translation()(2);

/*

获取高精地图中,离我们所在定位位置距离为roi_search_distance_的所有道路信息

的road_polygons、road_boundary、hole_polygons、junction_polygons存到frame里

如果没有则hdmap_input_->GetRoiHDMapStruct()返回false,所有的道路信息置空

*/

if (!hdmap_input_->GetRoiHDMapStruct(point, roi_search_distance_,frame->hdmap_struct)) {

// 路面的polygons多边形信息

frame->hdmap_struct->road_polygons.clear();

// 道路边界线

frame->hdmap_struct->road_boundary.clear();

// hole_polygonsm 没太看懂,没怎么用到这个信息

frame->hdmap_struct->hole_polygons.clear();

// 交叉路口多边形信息

frame->hdmap_struct->junction_polygons.clear();

AINFO << "Failed to get roi from hdmap.";

}

return true;

}

具体的GetRoiHDMapStruct()我也没太细看,有空再花点时间理理,代码位置:apollo/modules/perception/map/hdmap/hdmap_input.c

bool HDMapInput::GetRoiHDMapStruct(

const base::PointD& pointd, const double distance,

std::shared_ptr<base::HdmapStruct> hdmap_struct_ptr) {

lib::MutexLock lock(&mutex_);

if (hdmap_.get() == nullptr) {

AERROR << "hdmap is not available";

return false;

}

// Get original road boundary and junction

std::vector<RoadRoiPtr> road_boundary_vec;

std::vector<JunctionInfoConstPtr> junctions_vec;

apollo::common::PointENU point;

point.set_x(pointd.x);

point.set_y(pointd.y);

point.set_z(pointd.z);

// 首先判断判断指针是否非空;接着调用hdmap_对象的GetRoadBoundaries()方法获取路面边界和路口信息

if (hdmap_->GetRoadBoundaries(point, distance, &road_boundary_vec,

&junctions_vec) != 0) {

AERROR << "Failed to get road boundary, point: " << point.DebugString();

return false;

}

if (hdmap_struct_ptr == nullptr) {

return false;

}

hdmap_struct_ptr->hole_polygons.clear();

hdmap_struct_ptr->junction_polygons.clear();

hdmap_struct_ptr->road_boundary.clear();

hdmap_struct_ptr->road_polygons.clear();

// Merge boundary and junction

EigenVector<base::RoadBoundary> road_boundaries;

// 将存储路面和路口信息的变量整合到hdmap_struct_ptr指向的变量中

MergeBoundaryJunction(road_boundary_vec, junctions_vec, &road_boundaries,

&(hdmap_struct_ptr->road_polygons),

&(hdmap_struct_ptr->junction_polygons));

// Filter road boundary by junction

// 利用路口信息过滤掉多余的路面信息

GetRoadBoundaryFilteredByJunctions(road_boundaries,

hdmap_struct_ptr->junction_polygons,

&(hdmap_struct_ptr->road_boundary));

return true;

}

apollo5.0获取地位信息后,还需要经过高精地图ROI过滤器(HDMap ROI Filter),从高精地图检索到包含路面、路口的可驾驶区域后送入分割,apollo6.0,7.0取消了分割,直接送入3D点云检测模块

障碍物检测

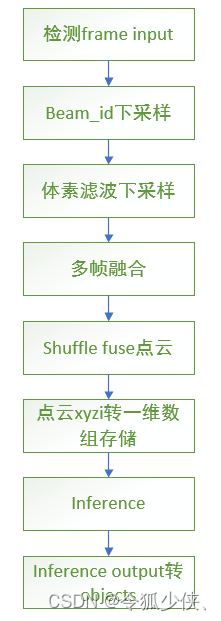

Apollo 7.0 中引入 MaskPointPillar 激光雷达检测算法,本文先看pointPillar 算法,重点阐述下pointpillar的整体流程,具体cuda版Inference细节,之后另开一篇文章阐述,不熟悉pointpillar可以先去看看相关的论文。

代码路径::apollo/modules/perception/lidar/lib/detector/point_pillars_detection/point_pillars_detection.cc

调用PointPillarsDetection的detect方法

参数都是调用apollo/modules/perception/common/perception_gflags.h封好的宏,这些宏通过调用google的gflags库,解析命令行参数,perception所有的命令行参数在/apollo/modules/perception/production/conf/perception/perception_common.flag配置

PointPillarsDetection的流程图:

bool PointPillarsDetection::Detect(const LidarDetectorOptions& options,

LidarFrame* frame) {

// check input

if (frame == nullptr) {

AERROR << "Input null frame ptr.";

return false;

}

if (frame->cloud == nullptr) {

AERROR << "Input null frame cloud.";

return false;

}

if (frame->cloud->size() == 0) {

AERROR << "Input none points.";

return false;

}

// record input cloud and lidar frame

original_cloud_ = frame->cloud;

original_world_cloud_ = frame->world_cloud;

lidar_frame_ref_ = frame;

// check output

frame->segmented_objects.clear();

// FLAGS_gpu_id 默认为0,使用0号显卡

if (cudaSetDevice(FLAGS_gpu_id) != cudaSuccess) {

AERROR << "Failed to set device to gpu " << FLAGS_gpu_id;

return false;

}

// 调用Tine构造函数,成员变量_start = std::chrono::system_clock::now(); // 获取系统的时间戳,单位微秒

Timer timer;

int num_points;

cur_cloud_ptr_ = std::shared_ptr<base::PointFCloud>(new base::PointFCloud(*original_cloud_));

// down sample the point cloud through filtering beams

// FLAGS_enable_downsample_beams 默认 false

if (FLAGS_enable_downsample_beams) {

base::PointFCloudPtr downsample_beams_cloud_ptr(new base::PointFCloud());

// beam_id下采样,下采样因子FLAGS_downsample_beams_factor默认为4,减少点云数据量

if (DownSamplePointCloudBeams(original_cloud_, downsample_beams_cloud_ptr,FLAGS_downsample_beams_factor)) {

cur_cloud_ptr_ = downsample_beams_cloud_ptr;

} else {

AWARN << "Down-sample beams factor must be >= 1. Cancel down-sampling."

" Current factor: "

<< FLAGS_downsample_beams_factor;

}

}

// down sample the point cloud through filtering voxel grid

// 体素滤波下采样,FLAGS_enable_downsample_pointcloud默认为false

if (FLAGS_enable_downsample_pointcloud) {

pcl::PointCloud<pcl::PointXYZI>::Ptr pcl_cloud_ptr(new pcl::PointCloud<pcl::PointXYZI>());

pcl::PointCloud<pcl::PointXYZI>::Ptr filtered_cloud_ptr(new pcl::PointCloud<pcl::PointXYZI>());

TransformToPCLXYZI(*cur_cloud_ptr_, pcl_cloud_ptr);

// 下采样尺寸xyz分为默认为0.01

DownSampleCloudByVoxelGrid(pcl_cloud_ptr, filtered_cloud_ptr, FLAGS_downsample_voxel_size_x,FLAGS_downsample_voxel_size_y, FLAGS_downsample_voxel_size_z);

// transform pcl point cloud to apollo point cloud

base::PointFCloudPtr downsample_voxel_cloud_ptr(new base::PointFCloud());

TransformFromPCLXYZI(filtered_cloud_ptr, downsample_voxel_cloud_ptr);

cur_cloud_ptr_ = downsample_voxel_cloud_ptr;

}

// 计算下采样时间

downsample_time_ = timer.toc(true);

num_points = cur_cloud_ptr_->size();

AINFO << "num points before fusing: " << num_points;

// fuse clouds of preceding frames with current cloud

// fuse 的是当前的点云和的点云,作用:当点云比较稀疏时,为了提升检测效果,一般会把当前帧的点云和前几帧点云融合,弥补稀疏效果

// 点云成员变量points_timestamp_初始化

cur_cloud_ptr_->mutable_points_timestamp()->assign(cur_cloud_ptr_->size(),0.0);

if (FLAGS_enable_fuse_frames && FLAGS_num_fuse_frames > 1) {

// before fusing

// 将融合时间间隔大于0.5的点过滤

while (!prev_world_clouds_.empty() && frame->timestamp - prev_world_clouds_.front()->get_timestamp() > FLAGS_fuse_time_interval) {

prev_world_clouds_.pop_front();

}

// transform current cloud to world coordinate and save to a new ptr

// 将当前帧的点云转到世界坐标系下,包含xyzi信息

base::PointDCloudPtr cur_world_cloud_ptr = std::make_shared<base::PointDCloud>();

for (size_t i = 0; i < cur_cloud_ptr_->size(); ++i) {

auto& pt = cur_cloud_ptr_->at(i);

Eigen::Vector3d trans_point(pt.x, pt.y, pt.z);

trans_point = lidar_frame_ref_->lidar2world_pose * trans_point;

PointD world_point;

world_point.x = trans_point(0);

world_point.y = trans_point(1);

world_point.z = trans_point(2);

world_point.intensity = pt.intensity;

cur_world_cloud_ptr->push_back(world_point);

}

cur_world_cloud_ptr->set_timestamp(frame->timestamp);

// fusing clouds

for (auto& prev_world_cloud_ptr : prev_world_clouds_) {

num_points += prev_world_cloud_ptr->size();

}

// 将过滤后的之前点云加入到当前帧点云中

FuseCloud(cur_cloud_ptr_, prev_world_clouds_);

// after fusing

// FLAGS_num_fuse_frames 默认为5

while (static_cast<int>(prev_world_clouds_.size()) >= FLAGS_num_fuse_frames - 1) {

prev_world_clouds_.pop_front();

}

prev_world_clouds_.emplace_back(cur_world_cloud_ptr);

}

AINFO << "num points after fusing: " << num_points;

// 计算fuse时间

fuse_time_ = timer.toc(true);

// shuffle points and cut off

// enable_shuffle_points 默认false

if (FLAGS_enable_shuffle_points) {

// FLAGS_max_num_points 默认为int的最大值 2^31-1

num_points = std::min(num_points, FLAGS_max_num_points);

// 对[0-num_points)之间索引,打乱

std::vector<int> point_indices = GenerateIndices(0, num_points, true);

// 获取打乱后的点云

base::PointFCloudPtr shuffle_cloud_ptr(new base::PointFCloud(*cur_cloud_ptr_, point_indices));

cur_cloud_ptr_ = shuffle_cloud_ptr;

}

// 计算数据shuffle时间

shuffle_time_ = timer.toc(true);

// point cloud to array

float* points_array = new float[num_points * FLAGS_num_point_feature]();

// FLAGS_normalizing_factor :255

// 过滤范围外的点云,将点云数据转为一维数组

CloudToArray(cur_cloud_ptr_, points_array, FLAGS_normalizing_factor);

cloud_to_array_time_ = timer.toc(true);

// inference

std::vector<float> out_detections;

std::vector<int> out_labels;

point_pillars_ptr_->DoInference(points_array, num_points, &out_detections,&out_labels);

inference_time_ = timer.toc(true);

// transfer output bounding boxes to objects

GetObjects(&frame->segmented_objects, frame->lidar2world_pose,&out_detections, &out_labels);

collect_time_ = timer.toc(true);

delete[] points_array;

return true;

}

inference以后再细讲,继续看GetObjects方法:

作用:将推理的输出结构detections和labels转换到Object,Object包含信息有:包围框的方向direction,朝向角theta,每个类别概率type_probs,小类型概率(分的更细)sub_type,lidar_supplement结构体(包含包围框8个顶点坐标cloud,8个顶点其在世界坐标系的坐标cloud_world,检测方法的类名raw_classification_methods等。

void PointPillarsDetection::GetObjects(

std::vector<std::shared_ptr<Object>>* objects, const Eigen::Affine3d& pose,

std::vector<float>* detections, std::vector<int>* labels) {

// 目标个数

int num_objects = detections->size() / FLAGS_num_output_box_feature; // FLAGS_num_output_box_feature 为 7

objects->clear();

// 结合单例设计模式,调用并发对象池,创建num_objects个Object对象

base::ObjectPool::Instance().BatchGet(num_objects, objects);

for (int i = 0; i < num_objects; ++i) {

auto& object = objects->at(i);

object->id = i;

// read params of bounding box

float x = detections->at(i * FLAGS_num_output_box_feature + 0);

float y = detections->at(i * FLAGS_num_output_box_feature + 1);

float z = detections->at(i * FLAGS_num_output_box_feature + 2);

float dx = detections->at(i * FLAGS_num_output_box_feature + 4);

float dy = detections->at(i * FLAGS_num_output_box_feature + 3);

float dz = detections->at(i * FLAGS_num_output_box_feature + 5);

float yaw = detections->at(i * FLAGS_num_output_box_feature + 6);

// 获取预测的偏航角,范围在[-pi/2,pi/2]

yaw += M_PI / 2;

yaw = std::atan2(sinf(yaw), cosf(yaw));

yaw = -yaw;

// directions

object->theta = yaw;

object->direction[0] = cosf(yaw);

object->direction[1] = sinf(yaw);

object->direction[2] = 0;

object->lidar_supplement.is_orientation_ready = true;

// compute vertexes of bounding box and transform to world coordinate

object->lidar_supplement.num_points_in_roi = 8;

object->lidar_supplement.on_use = true;

object->lidar_supplement.is_background = false;

float roll = 0, pitch = 0;

// 欧拉角转旋转向量

Eigen::Quaternionf quater =

Eigen::AngleAxisf(roll, Eigen::Vector3f::UnitX()) *

Eigen::AngleAxisf(pitch, Eigen::Vector3f::UnitY()) *

Eigen::AngleAxisf(yaw, Eigen::Vector3f::UnitZ());

Eigen::Translation3f translation(x, y, z);

// 计算放射变换矩阵,平移向量*旋转向量

Eigen::Affine3f affine3f = translation * quater.toRotationMatrix();

// 包围框的8个顶点坐标,8个顶点在世界坐标系下的位置

for (float vx : std::vector<float>{dx / 2, -dx / 2}) {

for (float vy : std::vector<float>{dy / 2, -dy / 2}) {

for (float vz : std::vector<float>{0, dz}) {

Eigen::Vector3f v3f(vx, vy, vz);

v3f = affine3f * v3f;

PointF point;

point.x = v3f.x();

point.y = v3f.y();

point.z = v3f.z();

object->lidar_supplement.cloud.push_back(point);

Eigen::Vector3d trans_point(point.x, point.y, point.z);

trans_point = pose * trans_point;

PointD world_point;

world_point.x = trans_point(0);

world_point.y = trans_point(1);

world_point.z = trans_point(2);

object->lidar_supplement.cloud_world.push_back(world_point);

}

}

}

// classification

// 枚举 MAX_OBJECT_TYPE = 6 表示大目标

// raw_probs二维数组,表示每个分类方法的概率

object->lidar_supplement.raw_probs.push_back(std::vector<float>(static_cast<int>(base::ObjectType::MAX_OBJECT_TYPE), 0.f));

// Name()返回"PointPillarsDetection" ,raw_classification_methods 存储检测方法的类名

object->lidar_supplement.raw_classification_methods.push_back(Name());

// 获取object的子类型,比较细的划分:CAR,PEDESTRIAN,CYCLIST,UNKNOWN

object->sub_type = GetObjectSubType(labels->at(i));

// 大类别,分的不是很细:VEHICLE,BICYCLE,UNKNOWN_MOVABLE

object->type = base::kSubType2TypeMap.at(object->sub_type);

object->lidar_supplement.raw_probs.back()[static_cast<int>(object->type)] = 1.0f;

// 每个类别的概率存储在type_probs字段

object->type_probs.assign(object->lidar_supplement.raw_probs.back().begin(),object->lidar_supplement.raw_probs.back().end());

}

}

数据结构Object

Object:用于表征一帧检测中每个检测物体/检测框的一些属性,文件位置:Apollo/modules/perception/base/object.h

只看boundingbox相关的字段信息,Object结构体如下:

struct alignas(16) Object {

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

Object();

std::string ToString() const;

void Reset();

int id = -1;// 每帧object的id,必要

// @brief convex hull of the object, required

PointCloud<PointD> polygon;// object拟合凸多边形的顶点坐标,必要

// 方向包围框的信息

Eigen::Vector3f direction = Eigen::Vector3f(1, 0, 0); // 方向向量,必要

float theta = 0.0f; // 包围框朝向角,即偏航角yaw

float theta_variance = 0.0f; // 角度方差,必要

Eigen::Vector3d center = Eigen::Vector3d(0, 0, 0);// 包围框中心坐标(cx, cy, cz),必要

Eigen::Matrix3f center_uncertainty; // center不确定性的协方差矩阵,必要

Eigen::Vector3f size = Eigen::Vector3f(0, 0, 0);// 包围框的[length, width, height],length必要的

Eigen::Vector3f size_variance = Eigen::Vector3f(0, 0, 0);//size 的方差,必要的

// @brief anchor point, required

Eigen::Vector3d anchor_point = Eigen::Vector3d(0, 0, 0);

ObjectType type = ObjectType::UNKNOWN; // 类别,必要的

std::vector<float> type_probs; // 每个类别的概率,必要的

ObjectSubType sub_type = ObjectSubType::UNKNOWN; // 子类型,可选

std::vector<float> sub_type_probs; // 子类型的概率,可选

float confidence = 1.0f; // 存在置信度,必要的

// @brief sensor-specific object supplements, optional

LidarObjectSupplement lidar_supplement;

};

LidarObjectSupplement 结构体提供该Object的一些原始数据,以及不在Object类别定义中的各种传感器特有的东西,Object定义为所有module共用的部分

GetObjects方法分类部分,根据字典映射,将子类别sub_type转为大类别type,kSubType2TypeMap字典如下:

/**

* ObjectSubType mapping

*/

const std::map<ObjectSubType, ObjectType> kSubType2TypeMap = {

{ObjectSubType::UNKNOWN, ObjectType::UNKNOWN},

{ObjectSubType::UNKNOWN_MOVABLE, ObjectType::UNKNOWN_MOVABLE},

{ObjectSubType::UNKNOWN_UNMOVABLE, ObjectType::UNKNOWN_UNMOVABLE},

{ObjectSubType::CAR, ObjectType::VEHICLE},

{ObjectSubType::VAN, ObjectType::VEHICLE},

{ObjectSubType::TRUCK, ObjectType::VEHICLE},

{ObjectSubType::BUS, ObjectType::VEHICLE},

{ObjectSubType::CYCLIST, ObjectType::BICYCLE},

{ObjectSubType::MOTORCYCLIST, ObjectType::BICYCLE},

{ObjectSubType::TRICYCLIST, ObjectType::BICYCLE},

{ObjectSubType::PEDESTRIAN, ObjectType::PEDESTRIAN},

{ObjectSubType::TRAFFICCONE, ObjectType::UNKNOWN_UNMOVABLE},

{ObjectSubType::MAX_OBJECT_TYPE, ObjectType::MAX_OBJECT_TYPE},

};

障碍物边框构建

看apollo/modules/perception/lidar/app/lidar_obstacle_detection.cc中的ProcessCommo方法:

if (use_object_builder_)

{

ObjectBuilderOptions builder_options;

if (!builder_.Build(builder_options, frame))

{

return LidarProcessResult(LidarErrorCode::ObjectBuilderError,"Failed to build objects.");

}

}

上面代码会调用类ObjectBuilder的Build方法,代码位于:apollo/modules/perception/lidar/lib/object_builder/object_builder.cc

该模块为检测到的障碍物构建边界框。由于遮挡或距离对激光雷达的影响,点云形成障碍物可能存在稀疏或只覆盖了障碍物表面的一部分。因此,边界框构建器的工作就是恢复完整的边界框并给出边界框点。边界框的主要目的是估计障碍物的名称,即便点云数据是稀疏的。同样地,边界框也被用于可视化障碍物。

apollo边框构建主要计算Object结构体的字段信息,如:

- 拟合凸多边形的顶点坐标

polygon,调用Apollo/modules/perception/common/geometry/convex_hull_2d.h的GetConvexHull - bounding-box的点云的尺寸

size和中心center,调用Apollo/modules/perception/common/geometry/common.h的CalculateBBoxSizeCenter2DXY - object其它的信息,包括:

object->anchor_point,object->latest_tracked_time

static const float kEpsilonForSize = 1e-2f;

static const float kEpsilonForLine = 1e-3f;

using apollo::perception::base::PointD;

using apollo::perception::base::PointF;

using ObjectPtr = std::shared_ptr<apollo::perception::base::Object>;

using PointFCloud = apollo::perception::base::PointCloud<PointF>;

using PolygonDType = apollo::perception::base::PointCloud<PointD>;

bool ObjectBuilder::Init(const ObjectBuilderInitOptions& options) {

return true;

}

bool ObjectBuilder::Build(const ObjectBuilderOptions& options,LidarFrame* frame) {

if (frame == nullptr) {

return false;

}

std::vector<ObjectPtr>* objects = &(frame->segmented_objects);

for (size_t i = 0; i < objects->size(); ++i) {

if (objects->at(i)) {

objects->at(i)->id = static_cast<int>(i);

// // 计算点云拟合的凸多边形

ComputePolygon2D(objects->at(i));

// 计算3d bounding-box的点云的尺寸和中心

ComputePolygonSizeCenter(objects->at(i));

// 计算object其它的信息,包括:anchor_point,latest_tracked_time

ComputeOtherObjectInformation(objects->at(i));

}

}

return true;

}

void ObjectBuilder::ComputePolygon2D(ObjectPtr object) {

Eigen::Vector3f min_pt;

Eigen::Vector3f max_pt;

PointFCloud& cloud = object->lidar_supplement.cloud;

// 获取object点云的xyz最大和最小值

GetMinMax3D(cloud, &min_pt, &max_pt);

// PointPillarsDetection::GetObjects计算cloud.size()为8,代表8个顶点,走不到这部

if (cloud.size() < 4u) {

SetDefaultValue(min_pt, max_pt, object);

return;

}

// 判断是否为线程扰动,不是是线性扰动,需要第一个点x加上kEpsilonForLine,第二个点y加上kEpsilonForLine

LinePerturbation(&cloud);

common::ConvexHull2D<PointFCloud, PolygonDType> hull;

// 计算点云拟合的凸多边形顶点坐标,存储在polygon字段中

hull.GetConvexHull(cloud, &(object->polygon));

}

void ObjectBuilder::ComputeOtherObjectInformation(ObjectPtr object) {

object->anchor_point = object->center;

double timestamp = 0.0;

size_t num_point = object->lidar_supplement.cloud.size();

for (size_t i = 0; i < num_point; ++i) {

timestamp += object->lidar_supplement.cloud.points_timestamp(i);

}

if (num_point > 0) {

timestamp /= static_cast<double>(num_point);

}

// 最近测量的时间戳,latest_tracked_time是必要的

object->latest_tracked_time = timestamp;

}

void ObjectBuilder::ComputePolygonSizeCenter(ObjectPtr object) {

if (object->lidar_supplement.cloud.size() < 4u) {

return;

}

Eigen::Vector3f dir = object->direction;

// 计算3d bounding-box的点云的尺寸和中心

common::CalculateBBoxSizeCenter2DXY(object->lidar_supplement.cloud, dir,&(object->size), &(object->center));

// PointPillarsDetection::GetObject函数中is_background设为false

if (object->lidar_supplement.is_background) {

float length = object->size(0);

float width = object->size(1);

Eigen::Matrix<float, 3, 1> ortho_dir(-object->direction(1),object->direction(0), 0.0);

// 保证object的size中第一个值是最大的

if (length < width) {

object->direction = ortho_dir;

object->size(0) = width;

object->size(1) = length;

}

}

// 对于小于kEpsilonForSize的size,将其设为大小kEpsilonForSize

for (size_t i = 0; i < 3; ++i) {

if (object->size(i) < kEpsilonForSize) { // kEpsilonForSize = 1e-2f

object->size(i) = kEpsilonForSize;

}

}

object->theta = static_cast<float>(atan2(object->direction(1), object->direction(0)));

}

Bounding_box过滤

代码位置:/root/apollo_ws/apollo/modules/perception/lidar/lib/object_filter_bank/object_filter_bank.cc

先看下初始化函数init,直接看代码,注释很详细,参数读取过程都是相同的

bool ObjectFilterBank::Init(const ObjectFilterInitOptions& options) {

auto config_manager = lib::ConfigManager::Instance();

const lib::ModelConfig* model_config = nullptr;

// 第一次调用GetModelConfig已经初始化,直接从字典model_config_map_查找"ObjectFilterBank"文件的信息,以proto存储的

// apollo/modules/perception/production/conf/perception/lidar/modules/object_filter_bank.config

ACHECK(config_manager->GetModelConfig(Name(), &model_config));

const std::string work_root = config_manager->work_root();

std::string config_file;

std::string root_path;

// ./data/perception/lidar/models/object_filter_bank

ACHECK(model_config->get_value("root_path", &root_path));

// apollo/modules/perception/production//data/perception/lidar/models/object_filter_bank

config_file = GetAbsolutePath(work_root, root_path);

// apollo/modules/perception/production/data/perception/lidar/models/object_filter_bank/velodyne128

config_file = GetAbsolutePath(config_file, options.sensor_name);

// apollo/modules/perception/production/data/perception/lidar/models/object_filter_bank/velodyne128/filter_bank.conf

config_file = GetAbsolutePath(config_file, "filter_bank.conf");

// proto定义message文件路径:pollo/modules/perception/lidar/lib/object_filter_bank/proto/filter_bank_config.proto

FilterBankConfig config;

// 用定义的meaage读取filter_bank.conf文件信息

ACHECK(apollo::cyber::common::GetProtoFromFile(config_file, &config));

filter_bank_.clear();

for (int i = 0; i < config.filter_name_size(); ++i) {

const auto& name = config.filter_name(i); // ROIBoundaryFilter

// 通过工厂方法模式获取 ROIBoundaryFilter 类的实例指针,也是多态运用

BaseObjectFilter* filter = BaseObjectFilterRegisterer::GetInstanceByName(name);

if (!filter) {

AINFO << "Failed to find object filter: " << name << ", skipped";

continue;

}

// 调用ROIBoundaryFilter::Init函数

if (!filter->Init()) {

AINFO << "Failed to init object filter: " << name << ", skipped";

continue;

}

filter_bank_.push_back(filter);

AINFO << "Filter bank add filter: " << name;

}

return true;

}

类ROIBoundaryFilter的初始化init方法,初始化读取参数过程都是类似的,代码如下:

代码路径:apollo/modules/perception/lidar/lib/object_filter_bank/roi_boundary_filter/roi_boundary_filter.cc

bool ROIBoundaryFilter::Init(const ObjectFilterInitOptions& options) {

auto config_manager = lib::ConfigManager::Instance();

const lib::ModelConfig* model_config = nullptr;

// 第一次调用GetModelConfig已经初始化,直接从字典model_config_map_查找"ROIBoundaryFilter"文件的信息,以proto存储的

// apollo/modules/perception/production/conf/perception/lidar/modules/roi_boundary_filter.config

ACHECK(config_manager->GetModelConfig(Name(), &model_config));

const std::string work_root = config_manager->work_root();

std::string config_file;zhius

std::string root_path;

// data/perception/lidar/models/object_filter_bank

ACHECK(model_config->get_value("root_path", &root_path));

// apollo/modules/perception/production/data/perception/lidar/models/object_filter_bank

config_file = GetAbsolutePath(work_root, root_path);

// // apollo/modules/perception/production/data/perception/lidar/models/object_filter_bank/roi_boundary_filter.conf

config_file = GetAbsolutePath(config_file, "roi_boundary_filter.conf");

// proto定义message文件路径:apollo/modules/perception/proto/roi_boundary_filter_config.proto

ROIBoundaryFilterConfig config;

// 用定义的meaage读取roi_boundary_filter.conf文件信息

ACHECK(cyber::common::GetProtoFromFile(config_file, &config));

distance_to_boundary_threshold_ = config.distance_to_boundary_threshold(); // -1.0

confidence_threshold_ = config.confidence_threshold(); // 0.5

cross_roi_threshold_ = config.cross_roi_threshold(); // 0.6

inside_threshold_ = config.inside_threshold(); // 1.0

return true;

}

下面看 ObjectFilterBank的处理逻辑,就是对检测build出的objects目标进行ROIBoundaryFilter,即调用之前找到的激光雷达周围高精度地图感兴趣区域,来限定部分激光雷达目标;

bool ObjectFilterBank::Filter(const ObjectFilterOptions& options,

LidarFrame* frame) {

size_t object_number = frame->segmented_objects.size(); // 包围框bounding_box的数量

for (auto& filter : filter_bank_) {

// 调用ROIBoundaryFilter::Filter方法

if (!filter->Filter(options, frame)) {

AINFO << "Failed to filter objects in: " << filter->Name(); // ROIBoundaryFilter

}

}

AINFO << "Object filter bank, filtered objects size: from " << object_number

<< " to " << frame->segmented_objects.size();

return true;

}

根据高精度地图的道路和交叉路口信息对边框构建器ObjectBuilder得到的objects进行过滤,ROIBoundaryFilter::Filter方法具体就不作展开

最后

以上就是苗条睫毛膏最近收集整理的关于Apollo 7.0——percception:lidar源码剖析(万字长文)组件启动激光感知的全部内容,更多相关Apollo内容请搜索靠谱客的其他文章。

发表评论 取消回复