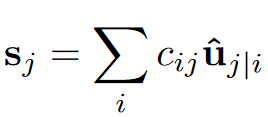

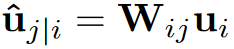

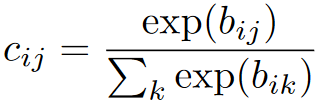

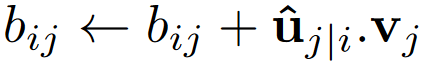

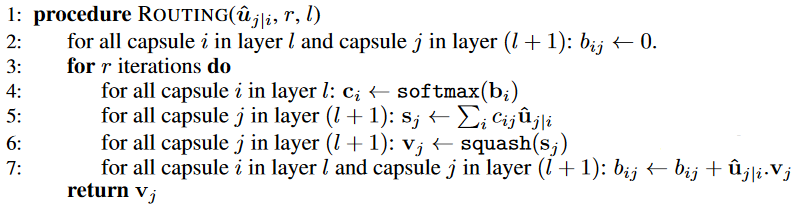

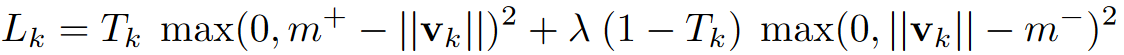

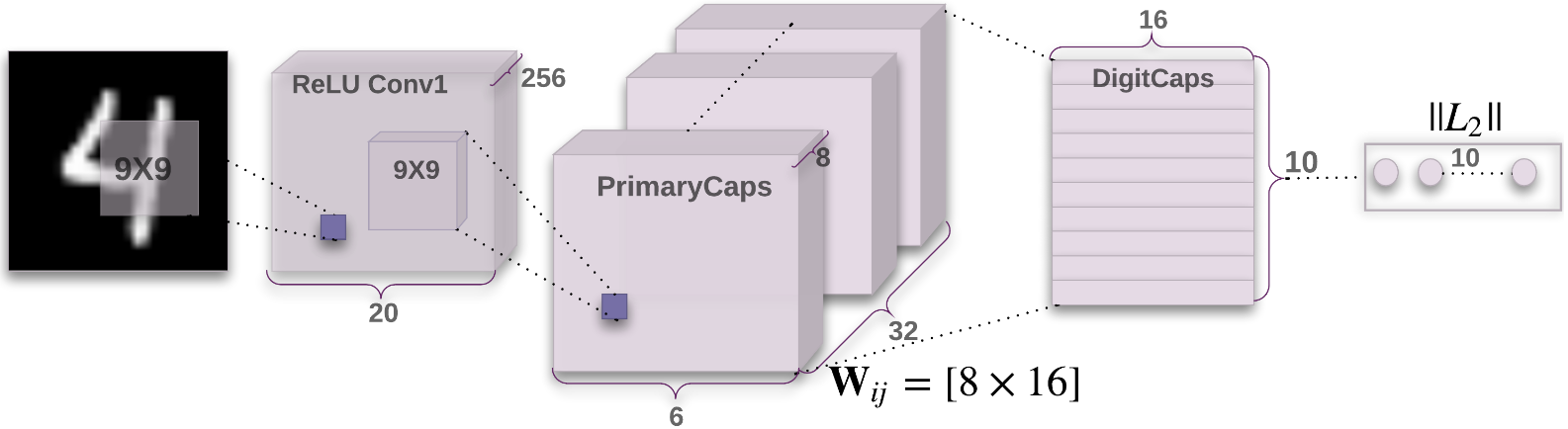

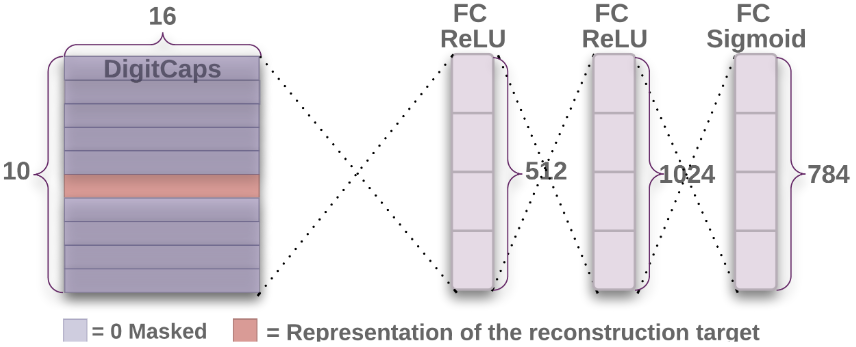

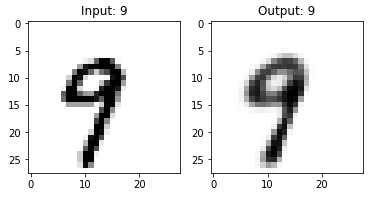

Capsule Routing

Link to paper: https://arxiv.org/pdf/1710.09829.pdf

The paper introduces an implementation of Capsule Networks that uses an iterative routing-by-agreement mechanism: a lower-level capsule prefers to send its output to higher-level capsules whose activity vectors have a large scalar product with the prediction coming from the lower-level capsule.

Motivation:

The human visual system uses a sequence of fixation points to ensure that only a tiny fraction of the optic array is processed at the highest resolution. For a single fixation, a parse tree is carved out of fixed small groups of neurons called "capsules", and each node in the parse tree corresponds to an active capsule. Through an iterative process, each capsule chooses a higher-level capsule to be its parent. This process solves the problem of assigning parts to wholes.

For the activity vector of each active capsule, the length represents the probability that the entity exists and, as the paper defines it, the orientation represents the entity's instantiation parameters.

Idea:

Since the output of a capsule is a vector, it is possible to use a powerful dynamic routing mechanism to ensure the output is sent to an appropriate parent. For each possible parent, the capsule computes a "prediction vector" by multiplying its own output by a weight matrix. If this prediction vector has a large scalar product with the output of a possible parent, the coupling coefficient for that parent is increased and the coefficients for the other parents are decreased. This increases the contribution the capsule makes to that parent, which in turn further increases the scalar product of the capsule's prediction with the parent's output. This is much more effective than max-pooling, which allows neurons in one layer to care only about the most active feature detector in the previous layer. Also, unlike max-pooling, capsules don't throw away information about the precise location of the entity or its pose.

Calculating vector inputs and outputs of a capsule:

Because the length of the activity vector represents the probability that an entity exists in the image, it has to be between 0 and 1. The squash function ensures that short vectors are shrunk to almost zero length and long vectors are shrunk to a length slightly below 1. In the paper's notation, with $s_j$ the total input to capsule $j$ and $v_j$ its output:

$$v_j = \frac{\|s_j\|^2}{1 + \|s_j\|^2} \, \frac{s_j}{\|s_j\|}$$

Except for the first layer of capsules, the total input to a capsule is a weighted sum over all prediction vectors from the capsules in the previous layer:

$$s_j = \sum_i c_{ij} \, \hat{u}_{j|i}$$

These prediction vectors are produced by multiplying the output $u_i$ of a capsule in the layer below by a weight matrix $W_{ij}$:

$$\hat{u}_{j|i} = W_{ij} \, u_i$$

The coupling coefficients c_ij are determined by the iterative dynamic routing process. The coefficients between a capsule and all the capsules in the layer above sum to 1 and are determined by a softmax function whose initial logits b_ij are the log prior probabilities that capsule i should be coupled to capsule j:

$$c_{ij} = \frac{\exp(b_{ij})}{\sum_k \exp(b_{ik})}$$

The initial logits b_ij are later updated by adding the scalar product of the prediction vector and the parent's output:

$$b_{ij} \leftarrow b_{ij} + \hat{u}_{j|i} \cdot v_j$$

Margin loss for digit existence:

The top-level capsule for an object class should have a long instantiation vector if that object is present in the image. To allow multiple classes to be present at once, the authors use a separate margin loss for each capsule k:

$$L_k = T_k \, \max(0, m^+ - \|v_k\|)^2 + \lambda \, (1 - T_k) \, \max(0, \|v_k\| - m^-)^2$$

Here $T_k = 1$ if class k is present and 0 otherwise, and the paper uses $m^+ = 0.9$, $m^- = 0.1$ and $\lambda = 0.5$. The loss is therefore zero only when the length of capsule k's output vector is at least 0.9 for a class that is present and at most 0.1 for a class that is absent. The total loss is the sum of the losses of all object capsules.

CapsNet architecture for MNIST

CapsNet achieved state-of-the-art performance on MNIST after just a few training epochs. After training for about 6-7 epochs with this implementation, CapsNet reached about 99% accuracy on the test set. Further training gave only negligible improvement.

Regularization by reconstruction

The authors used a reconstruction loss to encourage the digit capsules to encode the instantiation parameters of the input digit. The network learns to reconstruct the image by minimizing the squared difference between the reconstructed image and the input image. The total loss is the sum of the margin loss and the reconstruction loss. However, to prevent the reconstruction loss from dominating the margin loss, it is scaled down by a factor of 0.0005.

Drawbacks:

On datasets whose backgrounds are much too varied (like CIFAR-10), CapsNet performs poorly compared to other state-of-the-art architectures.

Youtube: https://www.youtube.com/watch?v=pPN8d0E3900

Translated from: https://medium.com/xulab/review-dynamic-routing-between-capsules-ea9c2fb64765
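The squash function, the routing-by-agreement loop and the per-class margin loss described in this review can be sketched in plain Python. This is a minimal illustration, not the implementation from the paper or from the reviewed code: the function names (`squash`, `route`, `margin_loss`), the nested-list layout `u_hat[i][j]` for the prediction vector from lower capsule i to parent j, the zero-initialized logits, and the defaults of 3 routing iterations, margins 0.9 and 0.1, and down-weighting factor 0.5 are assumptions chosen for the example.

```python
import math


def squash(s):
    """Shrink vector s to a length in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0 for _ in s]
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(b - m) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def route(u_hat, num_iterations=3):
    """Routing by agreement.

    u_hat[i][j] is the prediction vector of lower capsule i for parent j.
    Returns (v, c): the parent outputs and the coupling coefficients
    that were used to compute them in the last iteration.
    """
    n_lower, n_upper, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    # Assumption: logits start at zero, i.e. a uniform prior over parents.
    b = [[0.0] * n_upper for _ in range(n_lower)]
    for _ in range(num_iterations):
        # Coupling coefficients of each lower capsule sum to 1 over parents.
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_upper):
            # Total input to parent j: weighted sum of prediction vectors.
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Agreement update: add the scalar product of prediction and output.
        for i in range(n_lower):
            for j in range(n_upper):
                b[i][j] += dot(u_hat[i][j], v[j])
    return v, c


def margin_loss(lengths, target, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Sum of per-class margin losses given output vector lengths."""
    total = 0.0
    for k, length in enumerate(lengths):
        if k == target:
            total += max(0.0, m_plus - length) ** 2
        else:
            total += lam * max(0.0, length - m_minus) ** 2
    return total


# Two lower capsules agree about parent 0 and disagree about parent 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
]
v, c = route(u_hat)
print("coupling coefficients:", c)
print("parent output lengths:", [math.sqrt(dot(x, x)) for x in v])
```

In this toy input the two predictions for parent 1 cancel out, so routing shifts the coupling coefficients toward parent 0, where the predictions agree. A real CapsNet would perform the same steps on batched tensors and learn the weight matrices that produce `u_hat`.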
