The original example is described in:

[MATLAB Reinforcement Learning Toolbox] Study Notes: Create Simulink Environment and Train Agent (bear_miao's blog, CSDN)
https://blog.csdn.net/bear_miao/article/details/121337953

The training result from that example is stored in the agent object. But what data does the agent actually contain?

actor

Extracting the actor from the agent

Calling actor = getActor(agent) returns:

    >> actor = getActor(agent)

    actor =
      rlDeterministicActorRepresentation with properties:
             ActionInfo: [1×1 rl.util.rlNumericSpec]
        ObservationInfo: [1×1 rl.util.rlNumericSpec]
                Options: [1×1 rl.option.rlRepresentationOptions]

Extracting the learned parameters from the actor

Calling params = getLearnableParameters(actor) returns:

    >> params = getLearnableParameters(actor)

    params =
      1×4 cell array
        {3×3 single}    {3×1 single}    {1×3 single}    {[1.8352]}

What do these four arrays represent?
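A quick first step is to list the size of each cell and the total number of learnable scalars. The sketch below is only illustrative: it uses a placeholder cell array with the same shapes as the output above (zeros, apart from the last value, which is copied from the listing), so it runs without the trained agent. With the real agent in the workspace, `params` would instead come from `getLearnableParameters(actor)`.

```matlab
% Placeholder cell array with the same shapes as the actor parameters above.
% Assumption: all values except the final scalar are dummy zeros.
params = {zeros(3,3,'single'), zeros(3,1,'single'), ...
          zeros(1,3,'single'), single(1.8352)};

% Size of each cell as text, and the total number of learnable scalars.
sizes = cellfun(@(p) mat2str(size(p)), params, 'UniformOutput', false);
total = sum(cellfun(@numel, params));

for k = 1:numel(params)
    fprintf('params{%d}: size %s\n', k, sizes{k});
end
fprintf('total learnable scalars: %d\n', total);
```

The shapes (3×3, 3×1, 1×3, 1×1) already suggest two fully connected layers, each with a weight matrix and a bias.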

Extracting the actor network from the actor

Calling actorNet = getModel(actor) returns:

    >> actorNet = getModel(actor)

    actorNet =
      DAGNetwork with properties:
             Layers: [5×1 nnet.cnn.layer.Layer]
        Connections: [4×2 table]
         InputNames: {'State'}
        OutputNames: {'RepresentationLoss'}

actorNet finally exposes the Layers and Connections information:

    >> actorNet.Layers

    ans =
      5×1 Layer array with layers:
         1   'State'                Feature Input       3 features
         2   'actorFC'              Fully Connected     3 fully connected layer
         3   'actorTanh'            Tanh                Hyperbolic tangent
         4   'Action'               Fully Connected     1 fully connected layer
         5   'RepresentationLoss'   Regression Output   mean-squared-error

    >> actorNet.Connections

    ans =
      4×2 table
           Source            Destination
        _____________    ______________________
        {'State'    }    {'actorFC'           }
        {'actorFC'  }    {'actorTanh'         }
        {'actorTanh'}    {'Action'            }
        {'Action'   }    {'RepresentationLoss'}

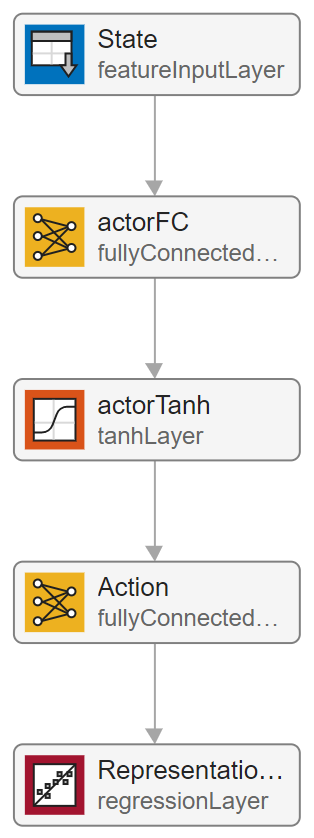

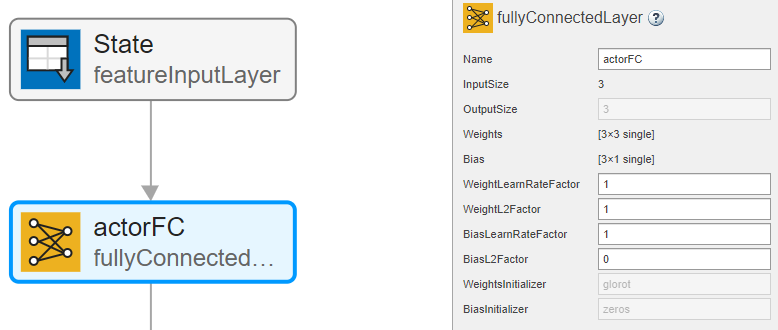

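The listings above are not very intuitive. Opening the network in deepNetworkDesigner gives a graphical view (see the screenshots above):

    deepNetworkDesigner(actorNet)

The diagram finally shows the whole network at a glance.

The Properties pane on the right shows how params maps onto the network layers:

params{1} is the weight matrix W of actorFC;

params{2} is the bias b of actorFC;

params{3} is the weight matrix W of Action;

params{4} is the bias b of Action.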
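Given this mapping, the actor's output can be reproduced by hand as two matrix operations with a tanh in between. The following is a minimal sketch, assuming placeholder random weights with the same shapes as the listing (only the final bias 1.8352 is taken from the output above) and a hypothetical observation vector `obs`. It is meant to illustrate how the four cells are used, not to reproduce the trained agent's actual actions.

```matlab
% Placeholder parameters with the same shapes as getLearnableParameters(actor).
% Assumption: random values stand in for the trained weights.
rng(0);                                  % reproducible placeholder values
params = {randn(3,3,'single'), ...       % params{1}: actorFC weights W (3x3)
          randn(3,1,'single'), ...       % params{2}: actorFC bias b    (3x1)
          randn(1,3,'single'), ...       % params{3}: Action weights W  (1x3)
          single(1.8352)};               % params{4}: Action bias b     (scalar)

obs = single([0.5; -0.2; 1.0]);          % hypothetical 3-element observation

% State -> actorFC -> actorTanh
hidden = tanh(params{1} * obs + params{2});

% actorTanh -> Action (the RepresentationLoss layer only matters for training)
action = params{3} * hidden + params{4};

disp(action);
```

With the trained agent available, replacing the placeholder cell array with the real `params` should give the action the network computes for the same observation.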

critic

Extracting the critic from the agent

Calling critic = getCritic(agent) returns:

    >> critic = getCritic(agent)

    critic =
      rlQValueRepresentation with properties:
             ActionInfo: [1×1 rl.util.rlNumericSpec]
        ObservationInfo: [1×1 rl.util.rlNumericSpec]
                Options: [1×1 rl.option.rlRepresentationOptions]

Extracting the learned parameters from the critic

Calling params = getLearnableParameters(critic) returns:

    >> params = getLearnableParameters(critic)

    params =
      1×8 cell array
      Columns 1 through 2
        {50×3 single}    {50×1 single}
      Columns 3 through 4
        {25×50 single}    {25×1 single}
      Columns 5 through 6
        {25×1 single}    {25×1 single}
      Columns 7 through 8
        {1×25 single}    {[0.0879]}

What do these eight arrays represent?

Extracting the critic network from the critic

Calling criticNet = getModel(critic) returns:

    >> criticNet = getModel(critic)

    criticNet =
      DAGNetwork with properties:
             Layers: [10×1 nnet.cnn.layer.Layer]
        Connections: [9×2 table]
         InputNames: {'State'  'Action'}
        OutputNames: {'RepresentationLoss'}

criticNet finally exposes the Layers and Connections information:

    >> criticNet.Layers

    ans =
      10×1 Layer array with layers:
         1   'State'                Feature Input       3 features
         2   'CriticStateFC1'       Fully Connected     50 fully connected layer
         3   'CriticRelu1'          ReLU                ReLU
         4   'CriticStateFC2'       Fully Connected     25 fully connected layer
         5   'Action'               Feature Input       1 features
         6   'CriticActionFC1'      Fully Connected     25 fully connected layer
         7   'add'                  Addition            Element-wise addition of 2 inputs
         8   'CriticCommonRelu'     ReLU                ReLU
         9   'CriticOutput'         Fully Connected     1 fully connected layer
        10   'RepresentationLoss'   Regression Output   mean-squared-error

    >> criticNet.Connections

    ans =
      9×2 table
               Source                Destination
        ____________________    ______________________
        {'State'           }    {'CriticStateFC1'    }
        {'CriticStateFC1'  }    {'CriticRelu1'       }
        {'CriticRelu1'     }    {'CriticStateFC2'    }
        {'CriticStateFC2'  }    {'add/in1'           }
        {'Action'          }    {'CriticActionFC1'   }
        {'CriticActionFC1' }    {'add/in2'           }
        {'add'             }    {'CriticCommonRelu'  }
        {'CriticCommonRelu'}    {'CriticOutput'      }
        {'CriticOutput'    }    {'RepresentationLoss'}
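The same two-branch topology can be rebuilt from scratch as a layer graph, which makes the role of each connection explicit. This is a sketch that assumes Deep Learning Toolbox is installed (`featureInputLayer` needs R2020b or later). It mirrors the layer names and sizes from the listing but carries untrained weights, and it leaves out the final 'RepresentationLoss' layer, which presumably is appended by the toolbox when the representation is created.

```matlab
% State branch: State -> CriticStateFC1 -> CriticRelu1 -> CriticStateFC2
statePath = [
    featureInputLayer(3, 'Name', 'State')
    fullyConnectedLayer(50, 'Name', 'CriticStateFC1')
    reluLayer('Name', 'CriticRelu1')
    fullyConnectedLayer(25, 'Name', 'CriticStateFC2')];

% Action branch: Action -> CriticActionFC1
actionPath = [
    featureInputLayer(1, 'Name', 'Action')
    fullyConnectedLayer(25, 'Name', 'CriticActionFC1')];

% Common trunk: add -> CriticCommonRelu -> CriticOutput
commonPath = [
    additionLayer(2, 'Name', 'add')
    reluLayer('Name', 'CriticCommonRelu')
    fullyConnectedLayer(1, 'Name', 'CriticOutput')];

% Assemble the graph and join both branches at the addition layer.
lgraph = layerGraph(statePath);
lgraph = addLayers(lgraph, actionPath);
lgraph = addLayers(lgraph, commonPath);
lgraph = connectLayers(lgraph, 'CriticStateFC2', 'add/in1');
lgraph = connectLayers(lgraph, 'CriticActionFC1', 'add/in2');

disp(lgraph.Connections);
```

The resulting graph has 9 layers and 8 connections, one fewer of each than criticNet because the output loss layer is omitted.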

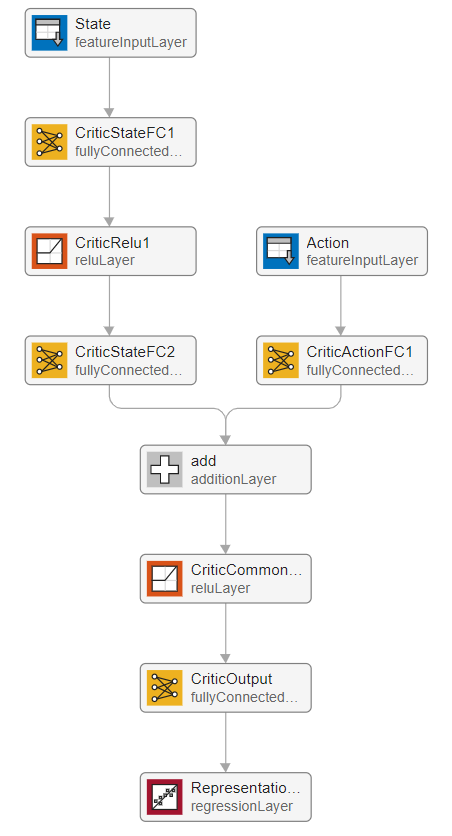

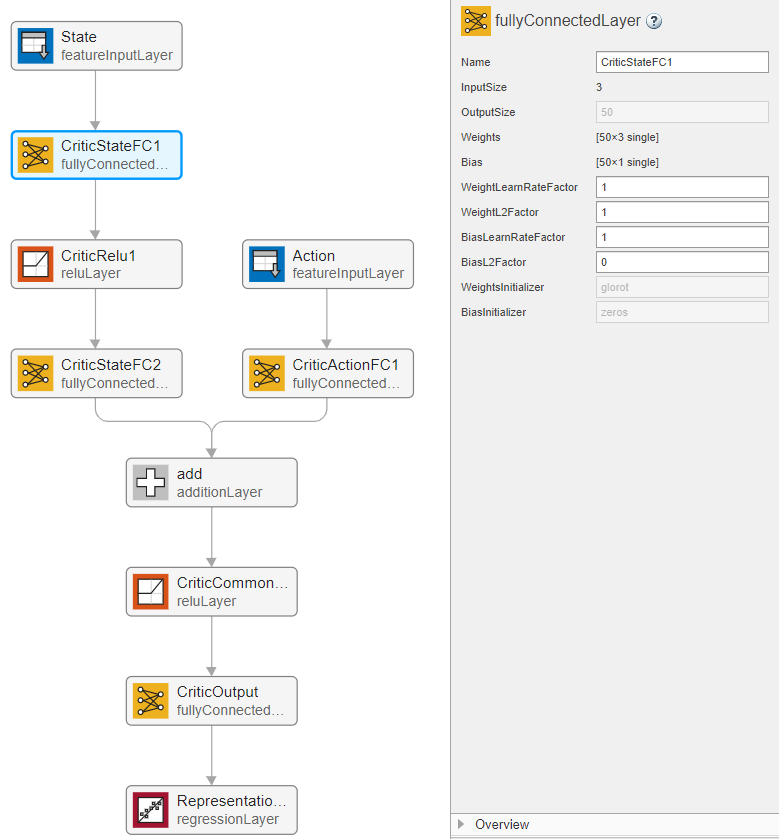

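The listings above are not very intuitive. Opening the network in deepNetworkDesigner gives a graphical view (see the screenshots above):

    deepNetworkDesigner(criticNet)

The diagram finally shows the whole network at a glance.

The Properties pane on the right shows how params maps onto the network layers:

params{1} is the weight matrix W of CriticStateFC1;

params{2} is the bias b of CriticStateFC1;

params{3} is the weight matrix W of CriticStateFC2;

params{4} is the bias b of CriticStateFC2;

params{5} is the weight matrix W of CriticActionFC1;

params{6} is the bias b of CriticActionFC1;

params{7} is the weight matrix W of CriticOutput;

params{8} is the bias b of CriticOutput.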
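With this mapping, the critic's Q-value can be computed by hand by following the two branches into the addition layer. The sketch below assumes placeholder random weights with the same shapes as the listing (only the final bias 0.0879 is copied from the output above) and hypothetical inputs `obs` and `act`. It illustrates the data flow rather than the trained critic's actual values.

```matlab
% Placeholder parameters with the same shapes as getLearnableParameters(critic).
% Assumption: random values stand in for the trained weights.
rng(0);
params = {randn(50,3,'single'),  randn(50,1,'single'), ...  % CriticStateFC1 W, b
          randn(25,50,'single'), randn(25,1,'single'), ...  % CriticStateFC2 W, b
          randn(25,1,'single'),  randn(25,1,'single'), ...  % CriticActionFC1 W, b
          randn(1,25,'single'),  single(0.0879)};           % CriticOutput W, b

relu = @(x) max(x, 0);                   % element-wise ReLU

obs = single([0.5; -0.2; 1.0]);          % hypothetical 3-element observation
act = single(0.3);                       % hypothetical scalar action

% State branch: CriticStateFC1 -> CriticRelu1 -> CriticStateFC2
statePath = params{3} * relu(params{1} * obs + params{2}) + params{4};

% Action branch: CriticActionFC1
actionPath = params{5} * act + params{6};

% add -> CriticCommonRelu -> CriticOutput
common = relu(statePath + actionPath);
qValue = params{7} * common + params{8};

disp(qValue);
```

Both branches produce 25-element vectors, which is why the element-wise addition layer can combine them before the final scalar output.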
