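Later on I'll be using YOLOv3 for object detection, so I first wanted to set up TensorFlow + Keras + YOLO V3 with the official weights file and run it on an arbitrary image to see what it does. Strictly speaking, this is inference with pretrained weights, not training. It took me the better part of a day, several questions to my upperclassman, and a huge pile of blog posts...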

1. Download the TensorFlow + Keras + YOLO V3 code (the keras-yolo3 folder):

https://github.com/qqwweee/keras-yolo3

2. Download the YOLOv3 weights file:

https://pjreddie.com/media/files/yolov3.weights
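If you fetch the weights from a script instead of a browser, you can also check that you actually received a Darknet weights file and not, for example, an HTML error page. Below is a minimal sketch. The helper names `download_if_missing` and `read_darknet_header` are hypothetical. The header layout is an assumption based on how Darknet writes the file: major, minor and revision as little-endian int32, followed by a "seen" counter that is int64 for newer versions and int32 for older ones.

```python
import os
import struct
import urllib.request

WEIGHTS_URL = "https://pjreddie.com/media/files/yolov3.weights"


def download_if_missing(url, dest):
    """Download url to dest unless dest already exists; return dest."""
    if not os.path.exists(dest):
        urllib.request.urlretrieve(url, dest)
    return dest


def read_darknet_header(path):
    """Return (major, minor, revision, seen) from the start of a .weights file.

    Assumed layout: three little-endian int32 values, then 'seen' as int64
    when major*10 + minor >= 2, otherwise as int32.
    """
    with open(path, "rb") as f:
        major, minor, revision = struct.unpack("<3i", f.read(12))
        if major * 10 + minor >= 2 and major < 1000 and minor < 1000:
            (seen,) = struct.unpack("<q", f.read(8))
        else:
            (seen,) = struct.unpack("<i", f.read(4))
    return major, minor, revision, seen
```

Typical use: `read_darknet_header(download_if_missing(WEIGHTS_URL, "yolov3.weights"))`. Small version numbers look plausible. Huge or negative values suggest the download is not a weights file.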

3. Convert the Darknet YOLOv3 config and weights into an .h5 file that Keras can load:

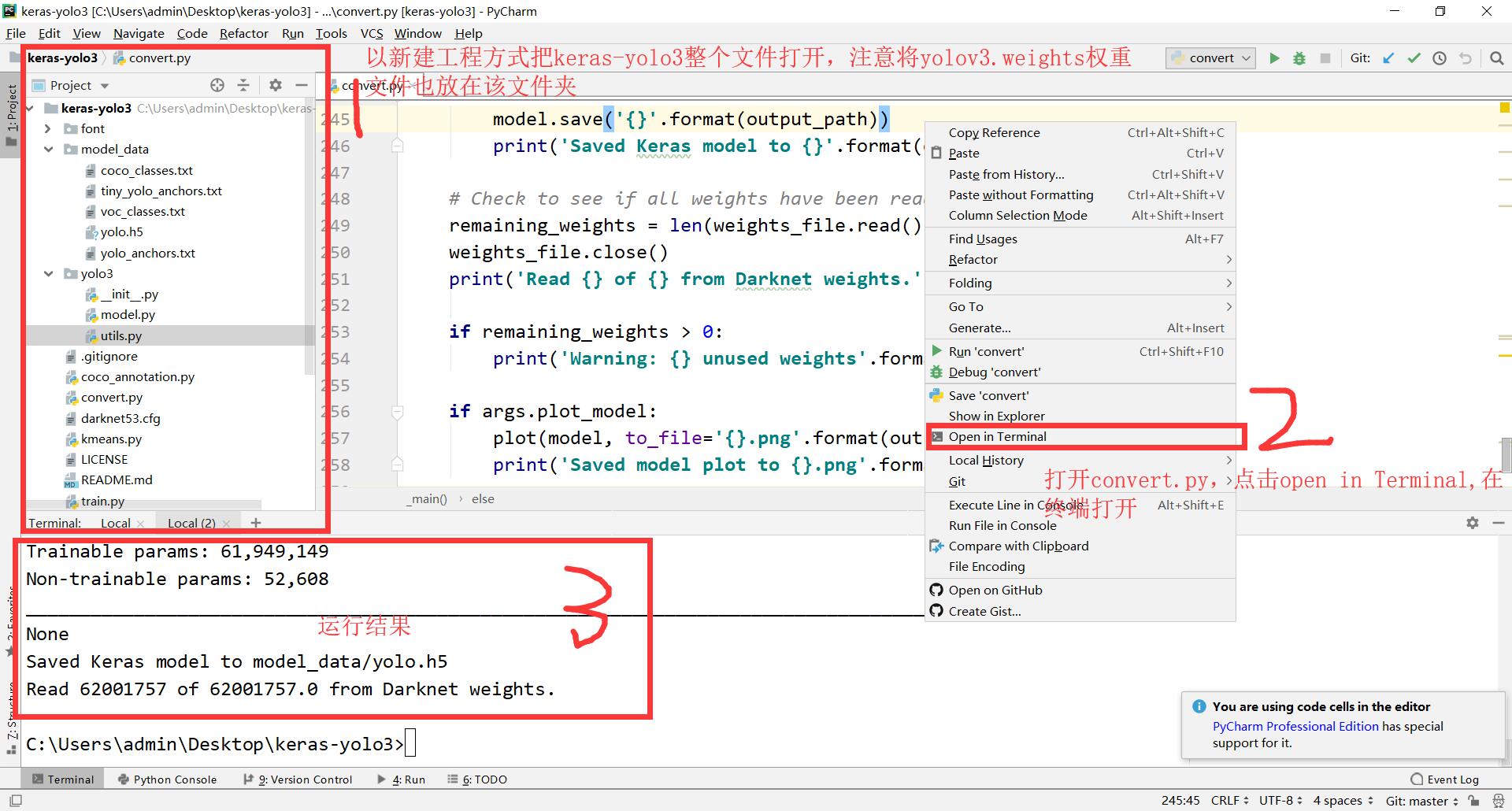

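`python convert.py yolov3.cfg yolov3.weights model_data/yolo.h5` (use `python3` if that is what your interpreter is called)

Pay attention here!!! The last two steps are exactly where I kept tripping up!!! To run the command above:

(1) After downloading the weights file, put it into the keras-yolo3 folder from step 1.
(2) Open the keras-yolo3 folder in PyCharm: File → Open → select the folder.
(3) Open convert.py, right-click, and choose "Open in Terminal".
(4) In the terminal pane at the bottom, type the command above: `python convert.py yolov3.cfg yolov3.weights model_data/yolo.h5`.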
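The yolo.h5 produced by convert.py has the anchor and class counts from yolov3.cfg baked in. Later, `generate()` in yolo.py checks that the last layer has num_anchors / (number of output layers) × (num_classes + 5) channels. The stock setup has 9 anchors spread over 3 output scales and 80 COCO classes, so each output layer has 3 × (80 + 5) = 255 channels. This matters if you later convert your own cfg with a different class list. Here is a small sketch of that arithmetic. The function names are hypothetical. The anchor format assumed is the comma-separated `w,h` pairs of model_data/yolo_anchors.txt.

```python
def read_anchors(line):
    """Parse 'w1,h1, w2,h2, ...' (yolo_anchors.txt style) into (w, h) pairs."""
    values = [float(v) for v in line.split(",")]
    if len(values) % 2:
        raise ValueError("anchor list must contain an even number of values")
    return list(zip(values[0::2], values[1::2]))


def expected_channels(num_anchors, num_outputs, num_classes):
    """Channels per YOLO output layer: for each anchor, 4 box values + 1 objectness + class scores."""
    return num_anchors // num_outputs * (num_classes + 5)
```

If the converted model was built for 20 classes, for example, each output has 3 × 25 = 75 channels. In that case yolo.py's assertion fails unless classes_path points to a 20-line class file.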

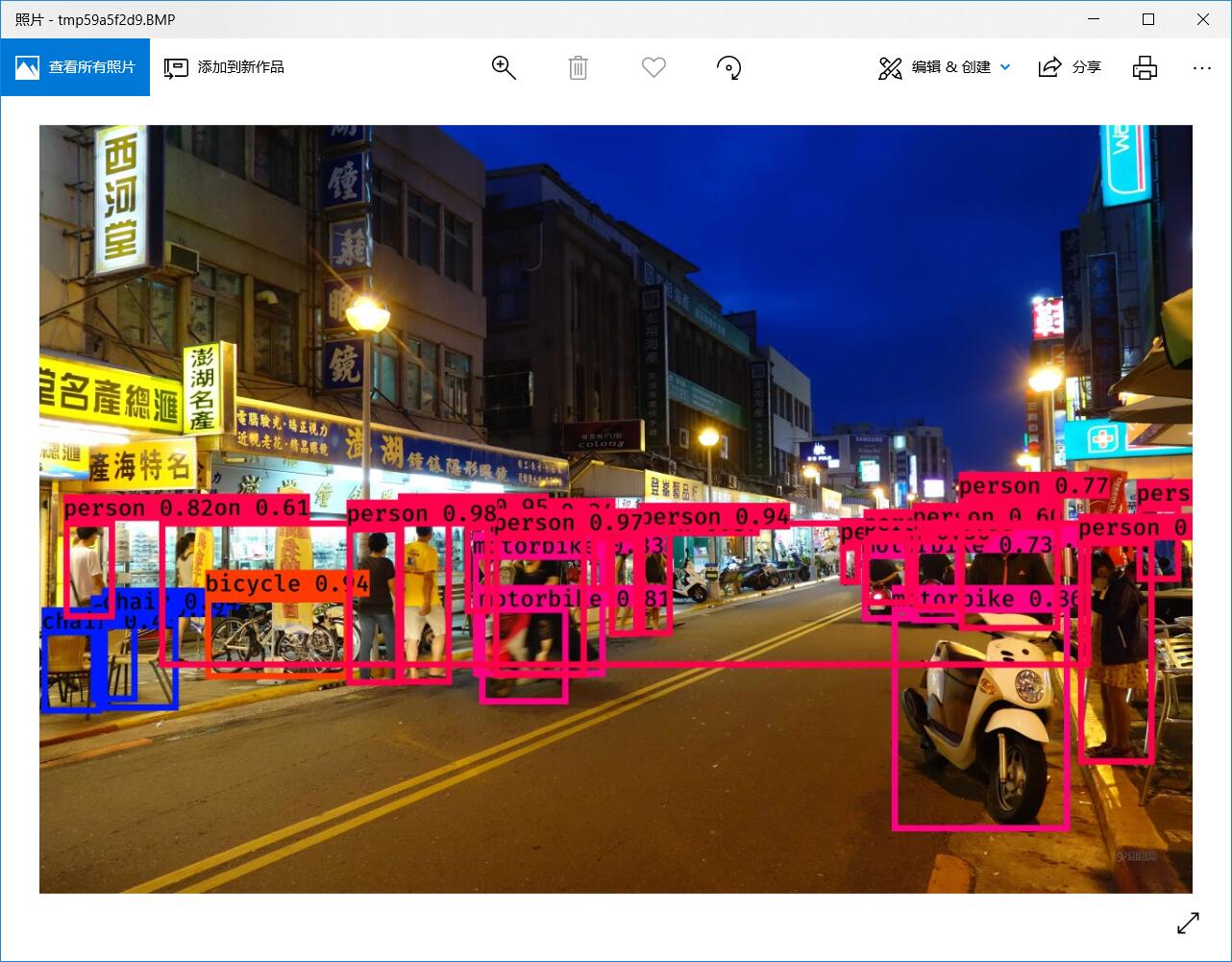

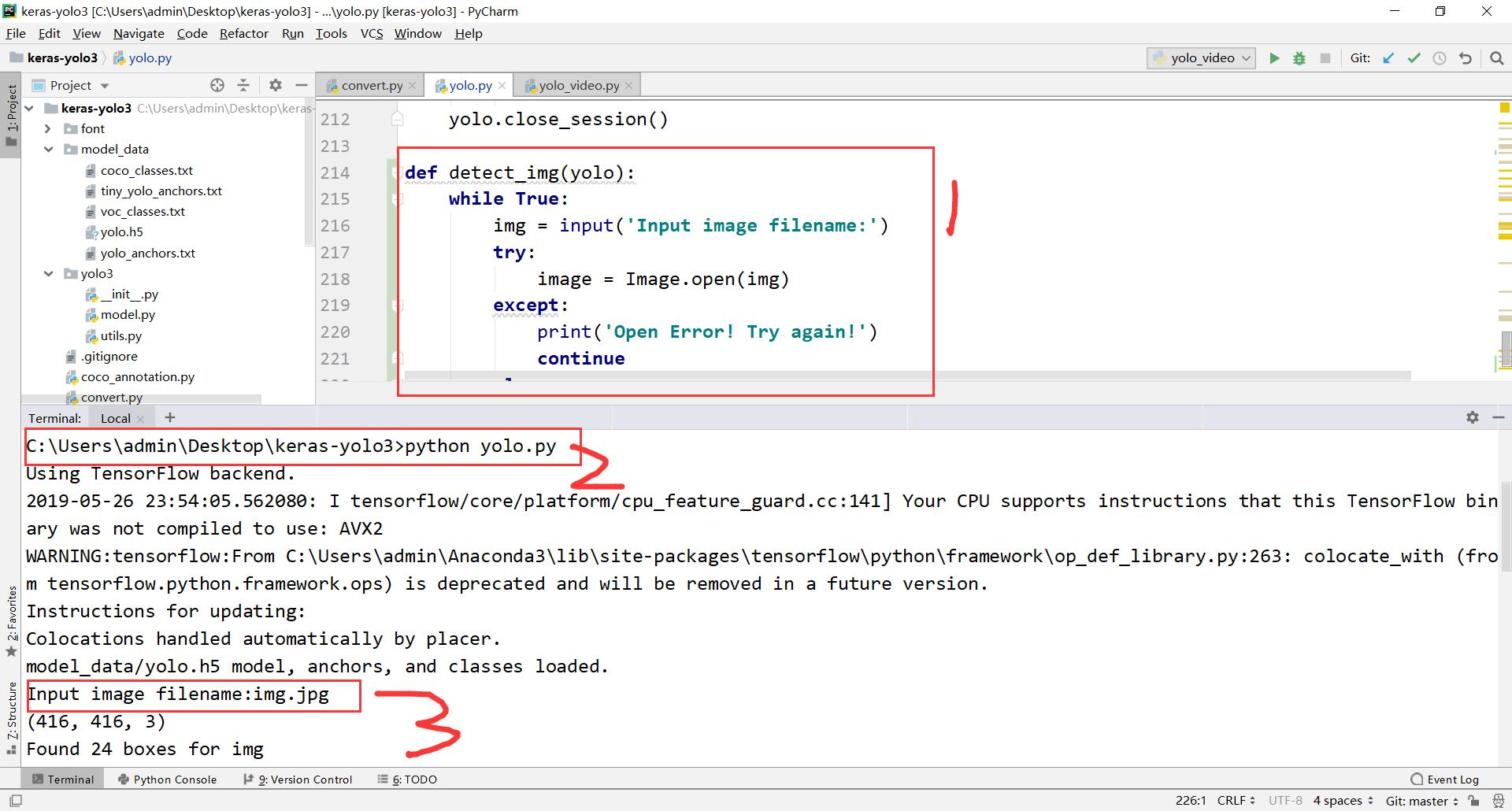

4. Run YOLO object detection and enter the image to test when prompted:

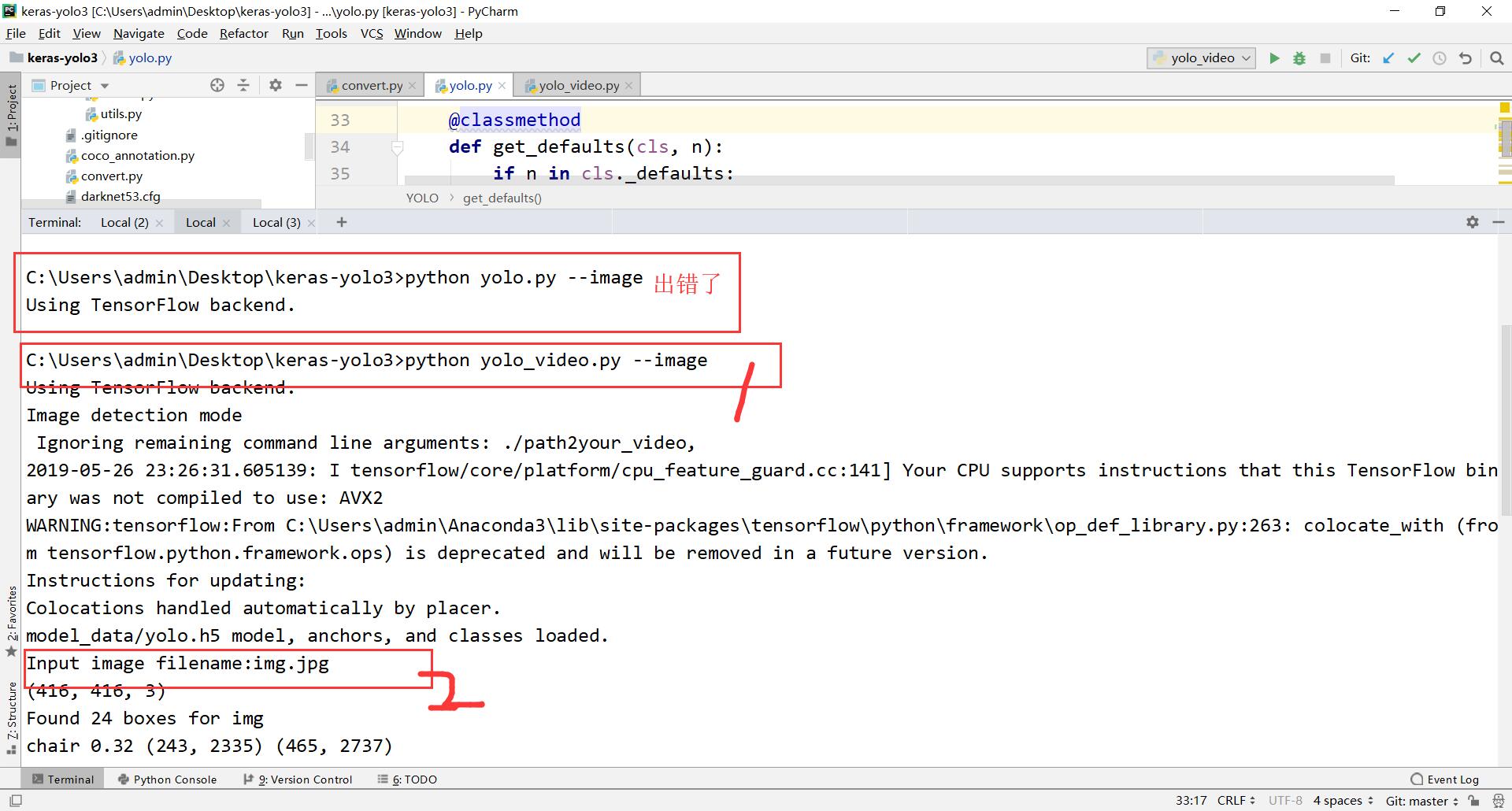

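`python yolo.py`

Careful!!!! Step 4 had me stuck for ages and I almost gave up, emmmmm!!! As in step 3, use "Open in Terminal" and type the command above. Strangely, everyone says to do exactly this, but my run printed a single line, "Using TensorFlow backend.", and never prompted for an image at all... After reading lots of blog posts I finally found the fix in a comment section, hahahaha. The most likely reason is that those tutorials predate a change in the repo. In the current keras-yolo3, yolo.py only defines the YOLO class (plus detect_video) and has no main entry point that asks for an image. Running it just imports Keras, which prints that line, and exits. Image mode is started from yolo_video.py instead.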

Method 1: use "Open in Terminal" and run `python yolo_video.py --image` (tested, works).
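The key point of method 1 is that the script has to be told to enter image mode through a command-line flag. Below is a minimal sketch of that kind of dispatch with Python's `argparse`. It is a hypothetical illustration, not the actual argument list of yolo_video.py.

```python
import argparse


def choose_mode(argv):
    """Return 'image', 'video' or 'none' for a hypothetical YOLO runner's command line."""
    parser = argparse.ArgumentParser(description="Hypothetical YOLO runner (illustration only)")
    parser.add_argument("--image", action="store_true",
                        help="image mode: keep prompting for image paths")
    parser.add_argument("video", nargs="?", default=None,
                        help="optional video path for video mode")
    args = parser.parse_args(argv)
    if args.image:
        return "image"
    if args.video:
        return "video"
    # No mode requested: a script like this would simply do nothing and exit.
    return "none"
```

For example, `choose_mode(["--image"])` selects image mode, while an empty command line selects nothing.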

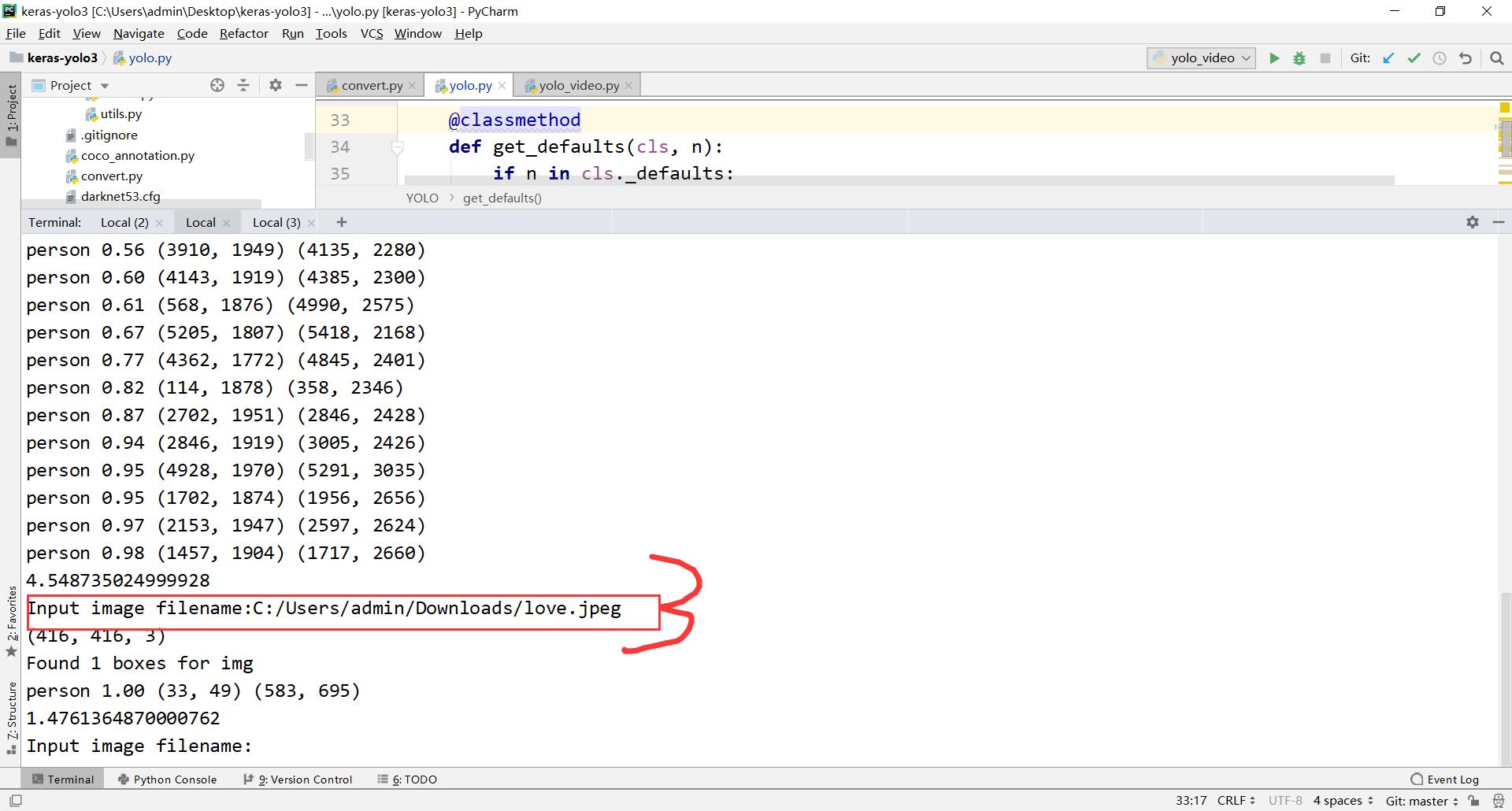

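Then follow the prompt and enter the image path and file name. If the test image is inside the keras-yolo3 folder, the file name alone is enough. Otherwise, enter the absolute path. Relative paths are resolved against the terminal's current directory, which here is the keras-yolo3 folder. See the screenshot.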
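Whatever size the image you enter, `detect_image` in yolo.py first letterboxes it to `model_image_size` (416×416 by default). As I read `letterbox_image` in yolo3/utils.py, it scales the image by the largest factor that fits while keeping the aspect ratio, centers it, and fills the rest with gray. Below is a pure-arithmetic sketch of that geometry. `letterbox_geometry` and `to_original` are hypothetical helpers, and the mapping back to original pixels illustrates the idea rather than reproducing the repo's own box-correction code.

```python
def letterbox_geometry(image_w, image_h, target_w=416, target_h=416):
    """Return (scale, (new_w, new_h), (offset_x, offset_y)) for letterboxing."""
    if target_w % 32 or target_h % 32:
        # yolo.py asserts the same thing: YOLOv3 downsamples by 32.
        raise ValueError("YOLOv3 input sizes must be multiples of 32")
    scale = min(target_w / image_w, target_h / image_h)
    new_w, new_h = int(image_w * scale), int(image_h * scale)
    offset = ((target_w - new_w) // 2, (target_h - new_h) // 2)
    return scale, (new_w, new_h), offset


def to_original(x, y, scale, offset):
    """Map a point on the letterboxed canvas back to original image pixels."""
    return (x - offset[0]) / scale, (y - offset[1]) / scale
```

For a 640×480 photo, the image is resized to 416×312 and padded with 52 gray rows above and below.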

Method 2: add the following code to the end of yolo.py (tested, works):

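```python
# Append to the end of yolo.py: Image (from PIL) and YOLO are already defined there.
def detect_img(yolo):
    while True:
        img = input('Input image filename:')
        try:
            image = Image.open(img)
        except Exception:  # e.g. file not found, or not an image
            print('Open Error! Try again!')
            continue
        else:
            r_image = yolo.detect_image(image)
            r_image.show()
    # The loop above never exits on its own (stop it with Ctrl+C),
    # so this line is only reached if a break is added.
    yolo.close_session()


if __name__ == '__main__':
    detect_img(YOLO())
```

After this change, right-click and choose "Open in Terminal", type `python yolo.py`, and enter the image name when prompted.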
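Because the loop in `detect_img` never ends, `yolo.close_session()` is never actually reached. Below is a sketch of the same prompt loop with a quit command. The model call and the image opener are passed in, so the loop can be tested without Keras. The name `detect_loop`, the "q"/empty-line quit convention and the injected callables are all assumptions of this sketch, not part of the repo.

```python
def detect_loop(detect, open_image, read_line=input):
    """Prompt for image paths until the user enters 'q' or an empty line.

    detect: callable applied to each opened image (e.g. run the model and show it).
    open_image: callable taking a path; raising OSError means "try again".
    Returns the number of images processed.
    """
    processed = 0
    while True:
        path = read_line("Input image filename (q to quit): ").strip()
        if path in ("", "q"):
            break
        try:
            image = open_image(path)
        except OSError:  # FileNotFoundError and PIL's "cannot identify image" are OSErrors
            print("Open Error! Try again!")
            continue
        detect(image)
        processed += 1
    return processed
```

With the real model, this could be called as `detect_loop(lambda img: yolo.detect_image(img).show(), Image.open)` followed by `yolo.close_session()`, which now really runs.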

Reference for the above: https://blog.csdn.net/nofish_xp/article/details/81320314#commentsedit. Don't miss either the post or its comments.

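Two days after getting all this working, I installed the tensorflow-gpu build. The installation was a saga of its own; see my other post. With the GPU build installed, I loaded the weights and went through the whole process again. The first run displayed the image above successfully. For the second run I deleted the `continue` in the `detect_img(yolo)` function in yolo.py, which in principle should not change anything, but it failed with a CUDA initialization error. After restarting PyCharm, I put `continue` back and it worked. Then I deleted it again, and it also worked!!! So removing `continue` is harmless. In a try/except/else, the else branch only runs when no exception was raised, so after the except branch the loop moves on to the next iteration anyway. The cause of the earlier CUDA initialization failure is still unclear.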
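The claim that `continue` is redundant can be checked without a GPU. The sketch below mimics the shape of `detect_img`'s try/except/else. `int()` stands in for `Image.open`, and appending the value stands in for `detect_image`. Both substitutions are for illustration only.

```python
def process(items, with_continue):
    """Run a try/except/else loop like detect_img's, with or without 'continue'."""
    handled = []
    for item in items:
        try:
            value = int(item)  # stands in for Image.open(img)
        except ValueError:
            handled.append("error")  # stands in for print('Open Error! Try again!')
            if with_continue:
                continue
        else:
            handled.append(value)  # stands in for yolo.detect_image(image)
    return handled
```

Both variants return `[1, 'error', 2]` for the input `["1", "x", "2"]`, because nothing follows the try statement inside the loop body.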

IV. Running on a video

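The code below tests detection on a video. Two places need changing, both marked in the code: 1. the path to your own trained weights; 2. the input video path and the output path. By default, the result is saved in the folder the script is run from.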

```python
# -*- coding: utf-8 -*-
"""
Class definition of YOLO_v3 style detection model on image and video
"""
import colorsys
import os
from timeit import default_timer as timer

import numpy as np
from keras import backend as K
from keras.layers import Input
from keras.models import load_model
from keras.utils import multi_gpu_model
from PIL import Image, ImageDraw, ImageFont

from yolo3.model import yolo_eval, yolo_body, tiny_yolo_body
from yolo3.utils import letterbox_image


class YOLO(object):
    _defaults = {
        "model_path": 'model_data/yolo.h5',  # [Change 1] path to your own weights file
        "anchors_path": 'model_data/yolo_anchors.txt',
        "classes_path": 'model_data/coco_classes.txt',
        "score": 0.3,
        "iou": 0.45,
        "model_image_size": (416, 416),
        "gpu_num": 1,
    }

    @classmethod
    def get_defaults(cls, n):
        if n in cls._defaults:
            return cls._defaults[n]
        else:
            return "Unrecognized attribute name '" + n + "'"

    def __init__(self, **kwargs):
        self.__dict__.update(self._defaults)  # set up default values
        self.__dict__.update(kwargs)  # and update with user overrides
        self.class_names = self._get_class()
        self.anchors = self._get_anchors()
        self.sess = K.get_session()
        self.boxes, self.scores, self.classes = self.generate()

    def _get_class(self):
        classes_path = os.path.expanduser(self.classes_path)
        with open(classes_path, encoding="utf-8") as f:
            class_names = f.readlines()
        class_names = [c.strip() for c in class_names]
        return class_names

    def _get_anchors(self):
        anchors_path = os.path.expanduser(self.anchors_path)
        with open(anchors_path) as f:
            anchors = f.readline()
        anchors = [float(x) for x in anchors.split(',')]
        return np.array(anchors).reshape(-1, 2)

    def generate(self):
        model_path = os.path.expanduser(self.model_path)
        assert model_path.endswith('.h5'), 'Keras model or weights must be a .h5 file.'

        # Load model, or construct model and load weights.
        num_anchors = len(self.anchors)
        num_classes = len(self.class_names)
        is_tiny_version = num_anchors == 6  # default setting
        try:
            self.yolo_model = load_model(model_path, compile=False)
        except Exception:
            self.yolo_model = tiny_yolo_body(Input(shape=(None, None, 3)), num_anchors // 2, num_classes) \
                if is_tiny_version else yolo_body(Input(shape=(None, None, 3)), num_anchors // 3, num_classes)
            self.yolo_model.load_weights(self.model_path)  # make sure model, anchors and classes match
        else:
            assert self.yolo_model.layers[-1].output_shape[-1] == \
                num_anchors / len(self.yolo_model.output) * (num_classes + 5), \
                'Mismatch between model and given anchor and class sizes'

        print('{} model, anchors, and classes loaded.'.format(model_path))

        # Generate colors for drawing bounding boxes.
        hsv_tuples = [(x / len(self.class_names), 1., 1.)
                      for x in range(len(self.class_names))]
        self.colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))
        self.colors = list(
            map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)),
                self.colors))
        np.random.seed(10101)  # Fixed seed for consistent colors across runs.
        np.random.shuffle(self.colors)  # Shuffle colors to decorrelate adjacent classes.
        np.random.seed(None)  # Reset seed to default.

        # Generate output tensor targets for filtered bounding boxes.
        self.input_image_shape = K.placeholder(shape=(2, ))
        if self.gpu_num >= 2:
            self.yolo_model = multi_gpu_model(self.yolo_model, gpus=self.gpu_num)
        boxes, scores, classes = yolo_eval(self.yolo_model.output, self.anchors,
                                           len(self.class_names), self.input_image_shape,
                                           score_threshold=self.score, iou_threshold=self.iou)
        return boxes, scores, classes

    def detect_image(self, image):
        start = timer()

        if self.model_image_size != (None, None):
            assert self.model_image_size[0] % 32 == 0, 'Multiples of 32 required'
            assert self.model_image_size[1] % 32 == 0, 'Multiples of 32 required'
            boxed_image = letterbox_image(image, tuple(reversed(self.model_image_size)))
        else:
            new_image_size = (image.width - (image.width % 32),
                              image.height - (image.height % 32))
            boxed_image = letterbox_image(image, new_image_size)
        image_data = np.array(boxed_image, dtype='float32')

        print(image_data.shape)
        image_data /= 255.
        image_data = np.expand_dims(image_data, 0)  # Add batch dimension.

        out_boxes, out_scores, out_classes = self.sess.run(
            [self.boxes, self.scores, self.classes],
            feed_dict={
                self.yolo_model.input: image_data,
                self.input_image_shape: [image.size[1], image.size[0]],
                K.learning_phase(): 0
            })

        print('Found {} boxes for {}'.format(len(out_boxes), 'img'))

        font = ImageFont.truetype(font='font/FiraMono-Medium.otf',
                                  size=np.floor(3e-2 * image.size[1] + 0.5).astype('int32'))
        thickness = (image.size[0] + image.size[1]) // 300

        for i, c in reversed(list(enumerate(out_classes))):
            predicted_class = self.class_names[c]
            box = out_boxes[i]
            score = out_scores[i]

            label = '{} {:.2f}'.format(predicted_class, score)
            draw = ImageDraw.Draw(image)
            label_size = draw.textsize(label, font)  # ImageDraw.textsize was removed in Pillow 10

            top, left, bottom, right = box
            top = max(0, np.floor(top + 0.5).astype('int32'))
            left = max(0, np.floor(left + 0.5).astype('int32'))
            bottom = min(image.size[1], np.floor(bottom + 0.5).astype('int32'))
            right = min(image.size[0], np.floor(right + 0.5).astype('int32'))
            print(label, (left, top), (right, bottom))

            if top - label_size[1] >= 0:
                text_origin = np.array([left, top - label_size[1]])
            else:
                text_origin = np.array([left, top + 1])

            # My kingdom for a good redistributable image drawing library.
            for j in range(thickness):
                draw.rectangle(
                    [left + j, top + j, right - j, bottom - j],
                    outline=self.colors[c])
            draw.rectangle(
                [tuple(text_origin), tuple(text_origin + label_size)],
                fill=self.colors[c])
            draw.text(text_origin, label, fill=(0, 0, 0), font=font)
            del draw

        end = timer()
        print(end - start)
        return image

    def close_session(self):
        self.sess.close()


def detect_video(yolo, video_path, output_path=""):
    import cv2
    vid = cv2.VideoCapture(video_path)
    if not vid.isOpened():
        raise IOError("Couldn't open webcam or video")
    video_FourCC = int(vid.get(cv2.CAP_PROP_FOURCC))
    video_fps = vid.get(cv2.CAP_PROP_FPS)
    video_size = (int(vid.get(cv2.CAP_PROP_FRAME_WIDTH)),
                  int(vid.get(cv2.CAP_PROP_FRAME_HEIGHT)))
    isOutput = output_path != ""
    if isOutput:
        print("!!! TYPE:", type(output_path), type(video_FourCC), type(video_fps), type(video_size))
        # Write to output_path (previously hard-coded to "./video/test1.mp4", ignoring this argument).
        out = cv2.VideoWriter(output_path, video_FourCC, video_fps, video_size)
    accum_time = 0
    curr_fps = 0
    fps = "FPS: ??"
    prev_time = timer()
    while True:
        return_value, frame = vid.read()
        if not return_value:  # end of the video (or a read error): stop instead of crashing on None
            break
        # OpenCV frames are BGR; convert to RGB for the model, then back to BGR for display and writing.
        image = Image.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
        image = yolo.detect_image(image)
        result = cv2.cvtColor(np.asarray(image), cv2.COLOR_RGB2BGR)
        curr_time = timer()
        exec_time = curr_time - prev_time
        prev_time = curr_time
        accum_time = accum_time + exec_time
        curr_fps = curr_fps + 1
        if accum_time > 1:
            accum_time = accum_time - 1
            fps = "FPS: " + str(curr_fps)
            curr_fps = 0
        cv2.putText(result, text=fps, org=(3, 15), fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                    fontScale=0.50, color=(255, 0, 0), thickness=2)
        cv2.namedWindow("result", cv2.WINDOW_NORMAL)
        cv2.imshow("result", result)
        if isOutput:
            out.write(result)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break
    vid.release()
    if isOutput:
        out.release()  # flush the output file
    cv2.destroyAllWindows()
    yolo.close_session()


if __name__ == '__main__':
    yolo = YOLO()
    detect_video(yolo, video_path='your_video_path', output_path="output.mp4")  # [Change 2]
```
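The FPS overlay in `detect_video` counts processed frames. Each time the accumulated time exceeds one second, it shows that count and subtracts one second. Below is the same accumulate-and-reset logic as a small class. Timestamps are passed in explicitly so the logic can be tested without a camera. The class name `FpsCounter` and its methods are hypothetical.

```python
class FpsCounter:
    """Frames-per-second label using detect_video's accumulate-and-reset scheme."""

    def __init__(self, start_time):
        self.prev_time = start_time
        self.accum_time = 0.0
        self.frames = 0
        self.label = "FPS: ??"

    def tick(self, now):
        """Record one processed frame finished at time `now` (seconds); return the label."""
        self.accum_time += now - self.prev_time
        self.prev_time = now
        self.frames += 1
        if self.accum_time > 1:  # strictly greater, as in detect_video
            self.accum_time -= 1
            self.label = "FPS: " + str(self.frames)
            self.frames = 0
        return self.label
```

With frames finishing every 0.25 s, the label first changes on the fifth frame, at 1.25 s, and reads "FPS: 5". Because of the strict `>`, the count can cover slightly more than one second, so treat the number as approximate.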
