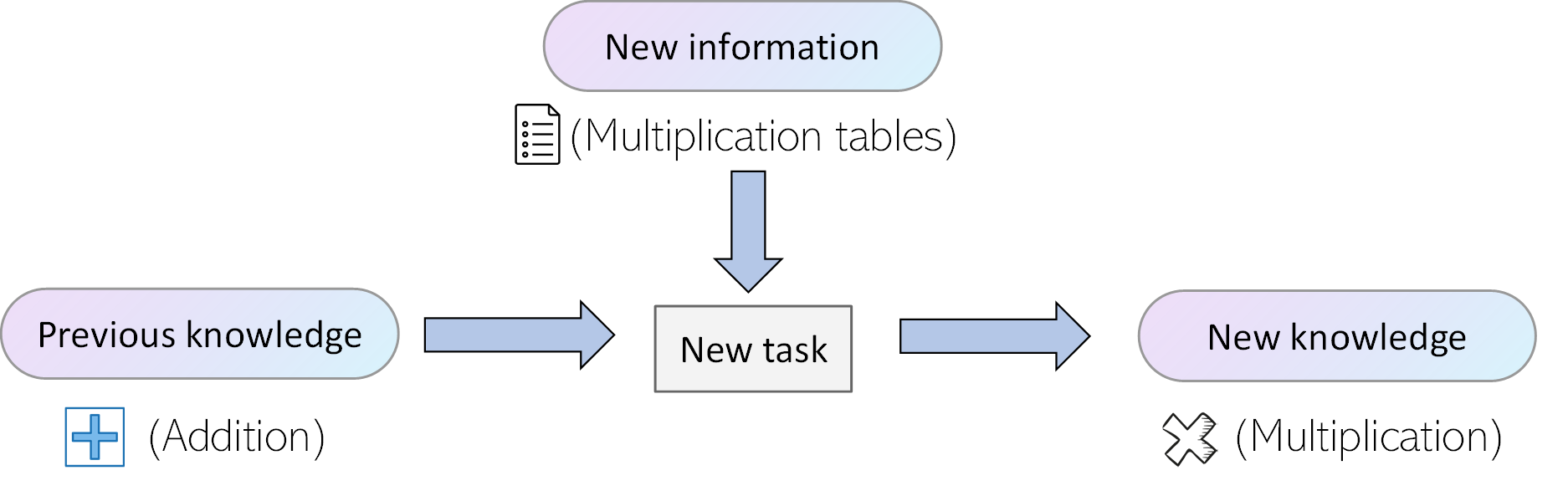

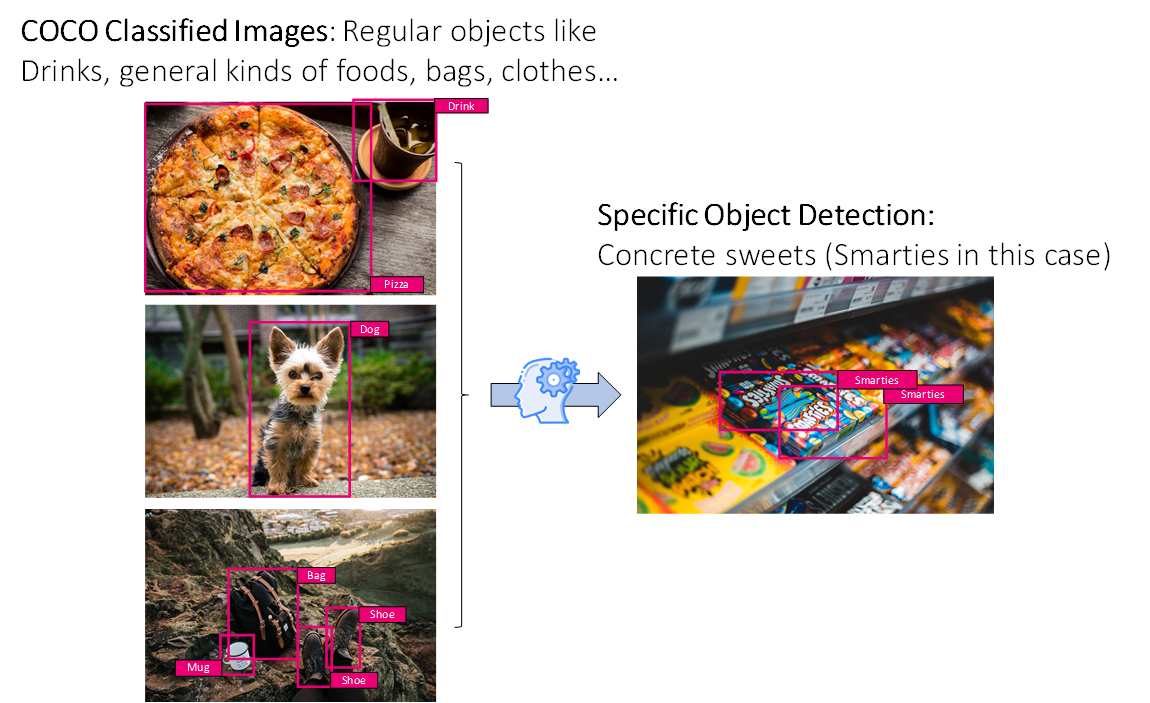

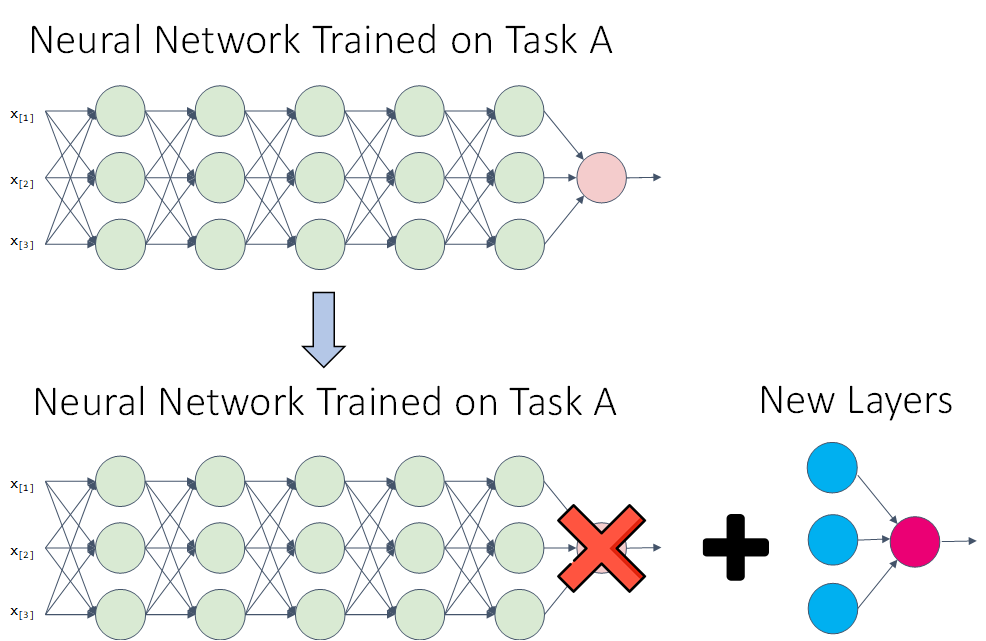

Transfer learning is a widely used technique in the Machine Learning world, mostly in Computer Vision and Natural Language Processing.

In this post, we will explain what it is in detail, when it should be used, why it is relevant, and show how you can use it in your own projects.

Once you're ready, sit back, relax, and let's get to it!

We are going to look at several definitions of the technique, to clarify what it is from different angles and make it easier to understand.

Starting from the bottom, the name alone gives a rough idea of what transfer learning might be: it refers to learning a certain task starting from some previous knowledge that has already been learned. This previous knowledge is transferred from the first task to the second one.

Remember when you learned how to multiply? Your teacher probably said something like 'Multiplying is just adding X times'.

As you already knew how to add, you could easily multiply by thinking 'Two times three is just adding two three times. Two plus two is four. Repeat once more with the previous result to reach three additions. Four plus two is six. This means that two times three is six! I am a genius!'

It might not have been exactly this, but the mental process was probably something similar. You transferred what you had learned about addition to learn how to multiply.

At the same time, you probably learned the multiplication tables, which later let you multiply easily without going through the whole addition procedure. The starting point, however, was knowing how to add.
In the famous book Deep Learning by Ian Goodfellow et al., transfer learning is described in the following way. You can find an awesome review of this great book here.

"Transfer learning and domain adaptation refer to the situation where what has been learned in one setting … is exploited to improve generalization in another setting."

As you can see, this last explanation is a bit more precise but still agrees with our simple, initial one: a skill that has been learned in one setting (addition) can be used to improve performance or speed in another setting (multiplication).

Lastly, before we see why transfer learning is so powerful, let's see a more formal definition in terms of a Domain (D), a Task (T), and a feature space X with a marginal probability distribution P(X). It goes as follows:

Given a specific domain D = {X, P(X)}, a task consists of two components: a label space Y and an objective predictive function f(•), which is learned from labelled data pairs {xi, yi} and can be used to predict the corresponding label f(x) of a new instance x. The task can therefore be expressed as T = {Y, f(•)}.

Then, given a source domain Ds and a learning task Ts, a target domain Dt and a learning task Tt, transfer learning aims to help improve the learning of the target predictive function ft(•) in Dt, using knowledge in Ds and Ts. [1]

Awesome! Now we have seen three different definitions in increasing order of complexity.
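For readers who prefer symbols, the formal definition can be typeset compactly as below. This is only a restatement of the definition quoted from [1]; the calligraphic letters and the arrow are notational choices made here, not part of the quoted text.

```latex
\begin{gather*}
\mathcal{D} = \{\mathcal{X},\, P(X)\}, \qquad
\mathcal{T} = \{\mathcal{Y},\, f(\cdot)\}, \\
f(\cdot) \text{ is learned from labelled pairs } \{x_i, y_i\}, \\
(\mathcal{D}_s, \mathcal{T}_s) \;\longrightarrow\;
\text{improved learning of } f_t(\cdot) \text{ in } \mathcal{D}_t .
\end{gather*}
```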
Let's see a quick example to finish grasping what transfer learning is.

Computer Vision is one of the areas where transfer learning is most widely used, because CNNs learn to pick up low-level features of images that can be reused across a wide range of vision tasks, and because these kinds of models are computationally expensive to train.

As we will see later, there are strong reasons to use transfer learning in this area, but let's first see an example. Imagine a Convolutional Neural Network that has been trained to classify different common objects, for example on the Common Objects in Context (COCO) dataset. Now imagine we wanted to build a classifier for a much more specific and narrow task, like identifying different types of candy (Kit-Kats, Mars Bars, Skittles, Smarties), for which we had far fewer images.

Then we could most likely take the network trained on the COCO dataset, apply transfer learning by fine-tuning it on our small dataset of candies, and achieve pretty good performance.

Alright, after this quick example, let's see why we would want to use transfer learning.

In the previous example, we saw a couple of the benefits of transfer learning. Many problems are very specific, and it is very hard to find enough data to tackle them with reasonable success.
Also, there is a fundamental concept in software development that, if adopted properly, can save us all a lot of time:

We don't have to reinvent the wheel!

The AI community is so big, and there is so much public work out there (datasets and pre-trained models), that it makes little sense to build everything from scratch. Having said that, there are two main reasons to use transfer learning: the target problem often has too little data of its own, and training a model from scratch costs a lot of time and compute.

Don't forget, transfer learning is an optimisation: a shortcut to save training time, to solve a problem you couldn't solve with the available data alone, or simply to achieve better performance.

Okey dokey! Now that we know what transfer learning is and why we should use it, let's see WHEN we should use it!

First of all, to use transfer learning, the features of the data must be general, meaning that they have to be suitable for both the source and the target tasks. This is generally what happens in Computer Vision, where the inputs to the training algorithms are the pixel values of the images and their labels (classes, or bounding boxes for detection). Often this is also the case in Natural Language Processing.

If the features are not common to both problems, applying transfer learning becomes much more complex, if it is possible at all. This is why transfer learning is rarely seen on tasks with structured data: the features must always match.
Secondly, as we saw before, transfer learning makes sense when we have a lot of data for the source task and very little data for the target task. If we have the same amount of data for both tasks, transfer learning does not make much sense.

Third and last, the low-level features of the initial task must be helpful for learning the target task. If you try to learn to classify images of animals using transfer learning from a dataset of clouds, the results might not be too great.

In short, if you have a source task A and a target task B that you want to do well on, consider using transfer learning when both tasks share the same kind of input features, you have a lot more data for A than for B, and the low-level features learned on A are helpful for learning B.

Alright! Now that we know the What, Why and When, let's finish with the How.

There are two main ways to use transfer learning: using a pre-trained model, or building a source model on a large available dataset for an initial task and then using that model as the starting point for a second task of interest.

Many research institutions release models trained on large and challenging datasets, and these can be included in the pool of candidate models to choose from. Because of this, there are a lot of pre-trained models available online, so there is a high chance that one of them is suitable for your specific problem.

If not, you can grab a dataset that is similar to yours, use it to train an initial source model, and then fine-tune that model using the data you have available for the second task.
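The second route (train a source model, then fine-tune it on the target data) can be sketched in plain Python. This is a toy illustration, not the method of any particular framework: the tiny two-layer network, the helper names (`hidden`, `logit`, `train`) and both datasets are made up for this example. Real projects would normally start from a published pre-trained model instead.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def hidden(x, W):
    """Feature extractor: a single tanh layer, one weight row per hidden unit."""
    return [math.tanh(sum(w * x_i for w, x_i in zip(row, x))) for row in W]

def logit(h, head):
    """Linear output layer on top of the hidden features; head[-1] is the bias."""
    return sum(v * h_j for v, h_j in zip(head, h)) + head[-1]

def log_loss(data, W, head):
    """Mean binary cross-entropy, written in a form that cannot overflow."""
    total = 0.0
    for x, y in data:
        z = logit(hidden(x, W), head)
        total += max(z, 0.0) - y * z + math.log1p(math.exp(-abs(z)))
    return total / len(data)

def train(data, W, head, lr, epochs, train_features):
    """Full-batch gradient descent on the mean log loss.

    With train_features=False the hidden layer W is frozen: only the head learns.
    """
    n = len(data)
    for _ in range(epochs):
        grad_head = [0.0] * len(head)
        grad_W = [[0.0] * len(row) for row in W]
        for x, y in data:
            h = hidden(x, W)
            err = sigmoid(logit(h, head)) - y  # d(loss)/d(logit)
            for j, h_j in enumerate(h):
                grad_head[j] += err * h_j / n
                if train_features:
                    back = err * head[j] * (1.0 - h_j * h_j)
                    for i, x_i in enumerate(x):
                        grad_W[j][i] += back * x_i / n
            grad_head[-1] += err / n
        for j in range(len(head)):
            head[j] -= lr * grad_head[j]
        if train_features:
            for j, row in enumerate(W):
                for i in range(len(row)):
                    row[i] -= lr * grad_W[j][i]

rng = random.Random(0)
HIDDEN = 4

# Source task A: plenty of labelled data (is x0 + x1 positive?).
source = []
for _ in range(200):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    source.append((x, 1 if x[0] + x[1] > 0 else 0))

# Target task B: a related question, but only seven labelled examples.
target = [((1.0, 0.5), 1), ((0.8, 0.9), 1), ((0.2, 0.6), 1), ((0.9, -0.1), 1),
          ((-0.5, -0.2), 0), ((-1.0, 0.3), 0), ((0.1, -0.9), 0)]

# 1) Train the source model from scratch: both layers learn.
W = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(HIDDEN)]
source_head = [0.0] * (HIDDEN + 1)
train(source, W, source_head, lr=0.5, epochs=200, train_features=True)

# 2) Transfer: keep the learned hidden layer, drop the old head, add a fresh one.
W_before = [row[:] for row in W]
new_head = [0.0] * (HIDDEN + 1)
initial_loss = log_loss(target, W, new_head)

# 3) Fine-tune on the small target set with the hidden layer frozen.
train(target, W, new_head, lr=0.5, epochs=200, train_features=False)
final_loss = log_loss(target, W, new_head)

print(f"target loss before fine-tuning: {initial_loss:.3f}")
print(f"target loss after fine-tuning:  {final_loss:.3f}")
```

Freezing the hidden layer is one common choice when the target set is very small; with more target data, one could also let the hidden layer keep learning at a lower learning rate by calling `train` with `train_features=True`.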
Either way, a second training phase with our specific data is needed to tune the first model to the exact task that we want to perform. Let's see how this happens in the context of an ANN:

In practice, we rarely have to do this manually, as most frameworks that support transfer learning handle it transparently for us. In case you want to use transfer learning in a project, here are some awesome resources to get started:

Darknet Computer Vision Library.

Many NLP projects that use word embeddings such as GloVe and Word2Vec offer the option to fine-tune the embeddings to a specific vocabulary, which is actually a form of transfer learning. If you don't know what word embeddings are, you can learn about them here.

Mask R-CNN for object segmentation also uses transfer learning from the COCO dataset.

That is it! As always, I hope you enjoyed the post, and that I managed to help you understand what transfer learning is, how it works, and why it is so powerful.

If you liked this post then feel free to follow me on Twitter at @jaimezorno. Also, you can take a look at my other posts on Data Science and Machine Learning here, and subscribe to my newsletter to get awesome goodies and notifications on new posts!

If you want to learn more about Machine Learning and Artificial Intelligence, follow me on Medium, and stay tuned!
Also, you can check out this repository for more resources on Machine Learning and AI!

Here you can find some additional resources in case you want to learn more about the topic:

Caffe Model Zoo: GitHub repository with a wide range of pre-trained models.

Andrew Ng's video on transfer learning.

Paper: A Survey on Deep Transfer Learning.

Another repository with a lot of further resources on Machine Learning and Artificial Intelligence.

Thank you for reading and have a fantastic day!

[1] Wikipedia: Transfer Learning

Translated from: https://towardsdatascience.com/the-ultimate-guide-to-transfer-learning-ebf83b655391

Introduction

TL;DR!

1) What is Transfer Learning?

Transfer learning in Computer Vision

2) Why is Transfer Learning awesome?

3) When should I use Transfer Learning?

4) How can I use transfer learning?

5) Conclusion and Additional resources
