2.11.8

2.1.1

UTF-8

org.scala-lang

scala-library

${scala.version}

org.apache.spark

spark-core_2.11

${spark.version}

org.apache.spark

spark-sql_2.11

${spark.version}

org.apache.spark

spark-streaming_2.11

${spark.version}

org.apache.spark

spark-mllib_2.11

${spark.version}

3.K-Means-RDD

package com.htkj.spark.mllib;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.mllib.clustering.KMeans;

import org.apache.spark.mllib.clustering.KMeansModel;

import org.apache.spark.mllib.linalg.Vector;

import org.apache.spark.mllib.linalg.Vectors;

import java.util.List;

public class KMeansRDD {

public static void main(String[] args) {

//sparkconf基础设置

SparkConf conf = new SparkConf().setAppName("K-means-RDD").setMaster("local");

JavaSparkContext jsc = new JavaSparkContext(conf);

//读取文件

JavaRDD data = jsc.textFile("C:\Users\Administrator\Desktop\cluster.txt");

//将数字的内容保存为向量

JavaRDD dataNum = data.map(s -> {

String[] split = s.split("t");

double[] doubles = new double[split.length - 1];

for (int i = 0; i < split.length - 1; i++) {

doubles[i] = Double.parseDouble(split[i + 1]);

}

return Vectors.dense(doubles);

});

//缓存

data.cache();

dataNum.cache();

//设置分类数量为5,迭代100次

int numClusters=5;

int numIterations=100;

//开始训练

KMeansModel train = KMeans.train(dataNum.rdd(), numClusters, numIterations);

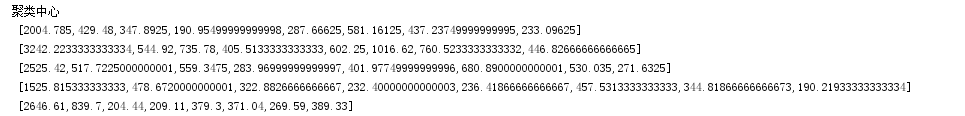

//输出聚类中心

System.out.println("聚类中心");

for (Vector center : train.clusterCenters()) {

System.out.println(" "+center);

}

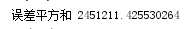

//输出误差平方和

double cost = train.computeCost(dataNum.rdd());

System.out.println("误差平方和 "+cost);

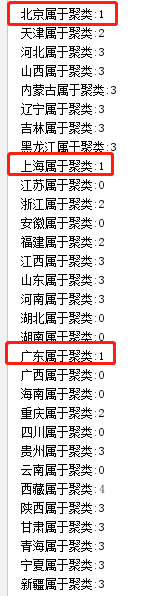

//输出数据分类结果

List collect = data.map(v -> {

String[] split = v.split("t");

double[] doubles = new double[split.length - 1];

for (int i = 0; i < split.length - 1; i++) {

doubles[i] = Double.parseDouble(split[i + 1]);

}

Vector vector = Vectors.dense(doubles);

int predict = train.predict(vector);

return v + " t"+ predict;

}).collect();

for (String s : collect) {

String[] split = s.split("t");

int length = split.length;

String name = split[0];

String cluster = split[length - 1];

System.out.println(name+"属于聚类:"+cluster);

}

//保存和加载模型

train.save(jsc.sc(),"target/org/apache/spark/JavaKMeansExample/KMeansModel");

KMeansModel loadModel = KMeansModel.load(jsc.sc(), "target/org/apache/spark/JavaKMeansExample/KMeansModel");

//stop

jsc.stop();

}

}

4.结果

4.1聚类中心

4.2误差平方和

4.3分类结果

可以看出,训练模型将北上广分为一类,可以认为分类还是比较准确的.

训练分类的结果,跟聚类中心有关,聚类中心不一样,结果也不一样

最后

以上就是义气发卡最近收集整理的关于spark如何进行聚类可视化_Spark MLlib 机器学习K-means聚类的全部内容,更多相关spark如何进行聚类可视化_Spark内容请搜索靠谱客的其他文章。

发表评论 取消回复