I. Attention Strategies for Multi-Source Sequence-to-Sequence Learning

This paper focuses on the scenario of multiple encoders and a single RNN decoder. It discusses three ways of combining attention over the encoders:

1. Concatenation of the context vectors

A widely adopted technique for combining multiple attention models in a decoder is concatenation of the context vectors. This setting forces the model to attend to each encoder independently and lets the attention combination be resolved implicitly in the subsequent network layers.
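A minimal NumPy sketch of the concatenation strategy, assuming Bahdanau-style additive attention with a separate set of attention parameters per encoder. The function names `bahdanau_context` and `concat_context`, the parameter shapes, and the random toy inputs are illustrative assumptions, not the paper's code. The encoders deliberately have different lengths and state sizes, which concatenation handles without any projection.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array
    e = np.exp(x - x.max())
    return e / e.sum()

def bahdanau_context(s, H, W_a, U_a, v_a):
    """Additive attention over one encoder (illustrative sketch).

    s: decoder state (d_s,); H: encoder states (T, d_h)
    W_a: (d_a, d_s), U_a: (d_a, d_h), v_a: (d_a,)
    Returns the context vector (d_h,) and the attention weights (T,).
    """
    energies = np.tanh(s @ W_a.T + H @ U_a.T) @ v_a  # (T,)
    alpha = softmax(energies)                         # normalized within this encoder only
    return alpha @ H, alpha

def concat_context(s, encoders, params):
    # Attend to each encoder independently, then concatenate the context vectors.
    contexts = [bahdanau_context(s, H, *p)[0] for H, p in zip(encoders, params)]
    return np.concatenate(contexts)

# Toy example: two encoders with different lengths (6, 4) and state sizes (3, 7).
rng = np.random.default_rng(0)
d_s, d_a = 4, 5
encoders = [rng.normal(size=(6, 3)), rng.normal(size=(4, 7))]
params = [(rng.normal(size=(d_a, d_s)), rng.normal(size=(d_a, H.shape[1])), rng.normal(size=d_a))
          for H in encoders]
s = rng.normal(size=d_s)
c = concat_context(s, encoders, params)  # shape (3 + 7,) = (10,)
```

The concatenated vector simply stacks the per-encoder contexts; how much each encoder matters is left for later layers to decide, which is why this setting gives no explicit distribution over encoders.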

2. Flat Attention Combination

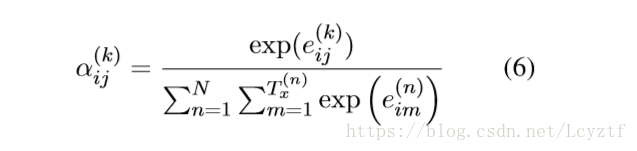

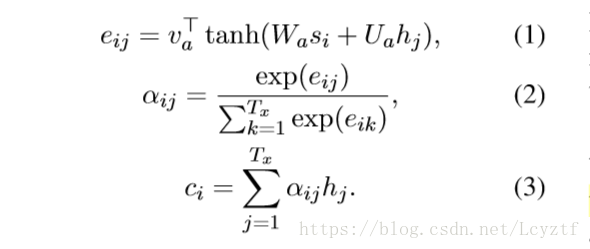

We let the decoder learn the α_i distribution jointly over all encoder hidden states.

That is, the α coefficients are normalized over the hidden states of all encoders together, not over each encoder separately.

The attention energy term e is computed as in Bahdanau et al. Note that the parameters v_a and W_a are shared among the encoders, whereas U_a is different for each encoder and serves as an encoder-specific projection of hidden states into a common vector space.
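Written out in Bahdanau-style notation, the energies and their joint normalization look roughly as follows. This is a reconstruction, not a copy of the paper's equations: s_i is the decoder state at step i, h_j^{(k)} is the j-th hidden state of encoder k, N is the number of encoders, and T^{(k)} is the length of encoder k. The double sum in the denominator is what "normalized over all encoder states" means.

```latex
e_{ij}^{(k)} = v_a^\top \tanh\left(W_a s_i + U_a^{(k)} h_j^{(k)}\right)

\alpha_{ij}^{(k)} = \frac{\exp\left(e_{ij}^{(k)}\right)}{\sum_{n=1}^{N} \sum_{m=1}^{T^{(n)}} \exp\left(e_{im}^{(n)}\right)}
```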

The states of the individual encoders occupy different vector spaces and can have different dimensionalities, so the context vector cannot be computed directly as their weighted sum. Instead, the states are first projected into a single common space using encoder-specific linear projections, and the weighted sum is taken over the projected states.
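A minimal NumPy sketch of flat attention combination for a single decoding step, under stated assumptions: `flat_attention`, `U_a_list`, and `U_c_list` are hypothetical names; `W_a` and `v_a` are shared across encoders, `U_a_list[k]` is the encoder-specific energy projection, and `U_c_list[k]` stands in for the encoder-specific linear projection into the common space, so the context is c_i = Σ_k Σ_j α_ij^(k) U_c^(k) h_j^(k).

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array
    e = np.exp(x - x.max())
    return e / e.sum()

def flat_attention(s, encoders, W_a, v_a, U_a_list, U_c_list):
    """Flat attention combination (illustrative sketch).

    s: decoder state (d_s,); encoders[k]: states of encoder k, shape (T_k, d_k)
    W_a (d_a, d_s), v_a (d_a,): shared by all encoders
    U_a_list[k] (d_a, d_k): encoder-specific projection used in the energies
    U_c_list[k] (d_c, d_k): encoder-specific projection into the common space
    """
    shared = s @ W_a.T  # (d_a,), identical for every encoder
    energies = [np.tanh(shared + H @ U_a.T) @ v_a for H, U_a in zip(encoders, U_a_list)]
    # A single softmax over the states of all encoders jointly.
    alpha = softmax(np.concatenate(energies))                       # (sum T_k,)
    # Project every state into the common space, stacked in the same order as alpha.
    projected = np.concatenate([H @ U_c.T for H, U_c in zip(encoders, U_c_list)])  # (sum T_k, d_c)
    context = alpha @ projected                                     # (d_c,)
    return context, alpha

# Toy example: two encoders with different lengths and state sizes.
rng = np.random.default_rng(0)
d_s, d_a, d_c = 4, 5, 6
encoders = [rng.normal(size=(6, 3)), rng.normal(size=(4, 7))]
W_a, v_a = rng.normal(size=(d_a, d_s)), rng.normal(size=d_a)
U_a_list = [rng.normal(size=(d_a, H.shape[1])) for H in encoders]
U_c_list = [rng.normal(size=(d_c, H.shape[1])) for H in encoders]
s = rng.normal(size=d_s)
context, alpha = flat_attention(s, encoders, W_a, v_a, U_a_list, U_c_list)
```

Because the softmax runs over the concatenated energies, the encoders compete for the same probability mass: a state in one encoder can only gain weight at the expense of states in every encoder.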

3. Hierarchical Attention Combination

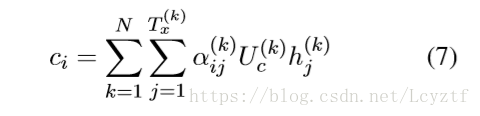

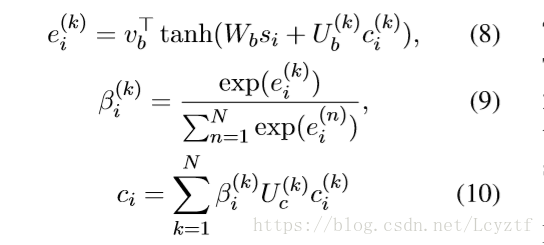

The hierarchical attention combination model computes every context vector independently, similarly to the concatenation approach. Instead of concatenation, a second attention mechanism is constructed over the context vectors.

First, we compute the context vector for each encoder independently using standard single-encoder attention (Equation 3 in the paper).
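For reference, a reconstruction of this first step in the same notation (assuming each encoder k has its own attention parameters for computing e_{ij}^{(k)}, as in the concatenation approach). Unlike the flat combination, the softmax here runs only over the states of encoder k:

```latex
\alpha_{ij}^{(k)} = \frac{\exp\left(e_{ij}^{(k)}\right)}{\sum_{m=1}^{T^{(k)}} \exp\left(e_{im}^{(k)}\right)},
\qquad
c_i^{(k)} = \sum_{j=1}^{T^{(k)}} \alpha_{ij}^{(k)} h_j^{(k)}
```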

Second, we project the context vectors (and, optionally, the sentinel) into a common space (Equation 8), compute another distribution over the projected context vectors (Equation 9), and take their corresponding weighted average (Equation 10).
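A sketch of the second level in Bahdanau-style notation, reconstructed rather than copied from the paper (the sentinel is omitted, and the symbols v_b, W_b, U_b^{(k)}, U_c^{(k)} as well as the exact mapping onto Equations 8 to 10 are assumptions). The energy uses an encoder-specific projection of each context vector, β is the distribution over the N encoders, and the final context is the β-weighted average of the projected context vectors:

```latex
e_i^{(k)} = v_b^\top \tanh\left(W_b s_i + U_b^{(k)} c_i^{(k)}\right)

\beta_i^{(k)} = \frac{\exp\left(e_i^{(k)}\right)}{\sum_{n=1}^{N} \exp\left(e_i^{(n)}\right)}

c_i = \sum_{k=1}^{N} \beta_i^{(k)} U_c^{(k)} c_i^{(k)}
```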

Both alternatives (methods 2 and 3) allow us to explicitly compute a distribution over the encoders and thus to interpret how much attention is paid to each encoder at every decoding step.
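The NumPy sketch below shows where that per-encoder distribution comes from in each method. It assumes the Bahdanau-style formulation of hierarchical attention (no sentinel), and all function and parameter names (`hierarchical_attention`, `encoder_mass_from_flat`, `first_level`, `U_b_list`, `U_c_list`) are hypothetical. The hierarchical combination returns β directly; for flat attention, the same kind of distribution is recovered by summing the α weights that fall on each encoder's states.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array
    e = np.exp(x - x.max())
    return e / e.sum()

def additive_energies(s, keys, W, U, v):
    # Bahdanau-style energies v^T tanh(W s + U key) for each row of `keys`.
    return np.tanh(s @ W.T + keys @ U.T) @ v

def hierarchical_attention(s, encoders, first_level, W_b, v_b, U_b_list, U_c_list):
    """Hierarchical attention combination (illustrative sketch, no sentinel).

    first_level[k] = (W_a, U_a, v_a): attention parameters of encoder k
    W_b (d_b, d_s), v_b (d_b,): second-level parameters shared across encoders
    U_b_list[k] (d_b, d_k), U_c_list[k] (d_c, d_k): encoder-specific projections
    Returns the combined context (d_c,) and beta, the distribution over encoders.
    """
    # Step 1: one context vector per encoder, softmax within each encoder.
    contexts = []
    for H, (W_a, U_a, v_a) in zip(encoders, first_level):
        alpha = softmax(additive_energies(s, H, W_a, U_a, v_a))
        contexts.append(alpha @ H)
    # Step 2: one energy per encoder, computed from its projected context vector.
    e = np.array([np.tanh(s @ W_b.T + U_b @ c) @ v_b for c, U_b in zip(contexts, U_b_list)])
    beta = softmax(e)
    # Weighted average of the context vectors projected into the common space.
    combined = sum(b * (U_c @ c) for b, c, U_c in zip(beta, contexts, U_c_list))
    return combined, beta

def encoder_mass_from_flat(alpha, lengths):
    # With flat attention, the attention paid to encoder k is the sum of its alphas.
    bounds = np.cumsum(lengths)[:-1]
    return np.array([a.sum() for a in np.split(alpha, bounds)])

# Toy example: two encoders with different lengths and state sizes.
rng = np.random.default_rng(1)
d_s, d_a, d_b, d_c = 4, 5, 5, 6
encoders = [rng.normal(size=(6, 3)), rng.normal(size=(4, 7))]
first_level = [(rng.normal(size=(d_a, d_s)), rng.normal(size=(d_a, H.shape[1])), rng.normal(size=d_a))
               for H in encoders]
W_b, v_b = rng.normal(size=(d_b, d_s)), rng.normal(size=d_b)
U_b_list = [rng.normal(size=(d_b, H.shape[1])) for H in encoders]
U_c_list = [rng.normal(size=(d_c, H.shape[1])) for H in encoders]
s = rng.normal(size=d_s)
context, beta = hierarchical_attention(s, encoders, first_level, W_b, v_b, U_b_list, U_c_list)

# Flat attention: split a joint distribution over all 6 + 4 states back per encoder.
mass = encoder_mass_from_flat(softmax(rng.normal(size=10)), [6, 4])
```

Logging `beta` (or `mass`) at every decoding step gives the per-encoder attention trace that the concatenation approach cannot provide.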

In the multi-source MT experiments, hierarchical attention performed best.

Finally

That is all that 敏感石头 has recently collected and organized on the multi-source attention mechanism.
