UMAP算法是Leland McInnes、John Healy和James Melville的发明。

The UMAP algorithm is the invention of Leland McInnes, John Healy, and James Melville.

原始参考论文下载地址:https://arxiv.org/pdf/1802.03426.pdf

See their original paper for a long-form description (https://arxiv.org/pdf/1802.03426.pdf).

原始算法的Python实现:

https://umap-learn.readthedocs.io/en/latest/index.html

Also see the documentation for the original Python implementation (https://umap-learn.readthedocs.io/en/latest/index.html).

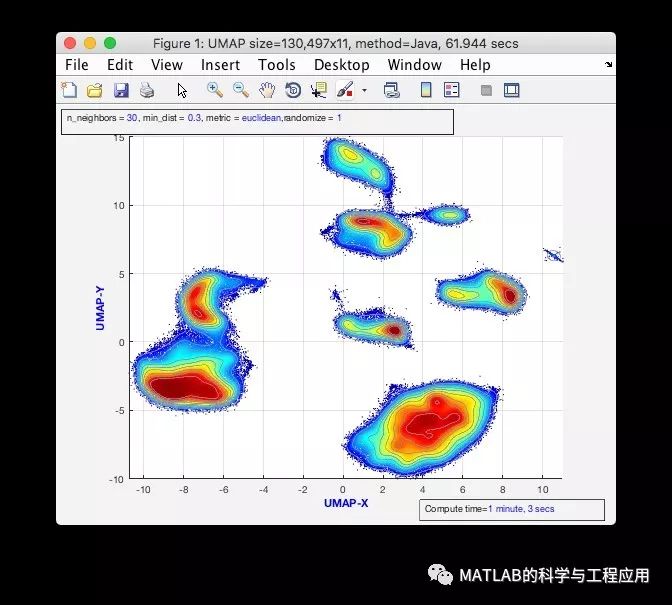

给定一组高维数据,run_umap.m生成数据的低维表示,用于数据可视化和探索研究。

Given a set of high-dimensional data, run_umap.m produces a lower-dimensional representation of the data for purposes of data visualization and exploration.

该MATLAB实现遵循与Python实现非常相似的结构,且许多函数描述几乎相同。

This MATLAB implementation follows a very similar structure to the Python implementation, and many of the function descriptions are nearly identical.

以下是MATLAB实现中的一些主要差异:

1)所有最近邻搜索都是通过内置的MATLAB函数knnsearch.m执行的。最初的Python实现使用随机投影树和最近邻下降来近似数据点的最近邻。函数knnsearch.m要么使用穷尽的方法,要么使用k-d树,这两种方法对于高维数据都很慢。因此,对于较大的高维数据集,这种实现速度较慢。

Here are some major differences in this MATLAB implementation:

1) All nearest-neighbour searches are performed through the built-in MATLAB function knnsearch.m. The original Python implementation uses random projection trees and nearest-neighbour descent to approximate nearest neighbours of data points. The function knnsearch.m either uses an exhaustive approach or k-d trees, both of which are slow for high-dimensional data. As such, this implementation is slower in the case of large, high-dimensional data sets.

2)MATLAB函数eigs.m不像Python包Scipy中的函数“eigsh”那么快。对于大型数据集,我们使用一种称为概率分块的算法对数据进行分块,从而初始化低维转换。如果用户下载并安装了Andrew Knyazev提供的lobpcg.m函数,可以用来为中型数据集找到精确的特征向量。

2) The MATLAB function eigs.m does not appear to be as fast as the function "eigsh" in the Python package Scipy. For large data sets, we initialize a low-dimensional transform by binning the data using an algorithm known as probability binning. If the user downloads and installs the function lobpcg.m, made available here (https://www.mathworks.com/matlabcentral/fileexchange/48-locally-optimal-block-preconditioned-conjugate-gradient) by Andrew Knyazev, this can be used to find exact eigenvectors for medium-sized data sets.

3)在大多数情况下,我们调用Java代码来执行随机梯度下降。然而,如果数据被减少到2以外的维度,那么随机梯度下降会在MATLAB中本地执行,这会慢得多。

3) In most cases, we call Java code to perform stochastic gradient descent. However, if the data is being reduced to a dimension other than 2, then stochastic gradient descent is performed natively in MATLAB, which tends to be much slower.

总之,该MATLAB UMAP实现比原始的Python实现慢,后者使用了Numba来加速计算。

Overall, this MATLAB UMAP implementation is slower than the the original Python implementation, which uses Numba to accelerate the calculations.

然而,根据目前所做的测试,这种速度似乎是可以接受的。

However, the speed seems acceptable based on tests done so far.

尽管我们还没有在示例中给出,但是该版本应该可以实现监督的维度缩减。

Supervised dimension reduction should be possible with this version of the implementation, though we have not yet included it in the examples.

本代码是设计的初稿,目前还没有得到UMAP原作者的审查。

This implementation is considered a first draft and has not yet been reviewed by the original authors of UMAP.

我们希望在将来继续改进它,从使用监督降维的例子开始。

We hope to make improvements to it in the future, starting with examples of using supervised dimension reduction.

本代码由斯坦福大学的赫森伯格实验室提供。

Provided by the Herzenberg Lab at Stanford University.

完整源码下载地址请点击“阅读原文”

最后

以上就是傻傻果汁最近收集整理的关于【源码】均匀流形近似与投影(UMAP)算法仿真的全部内容,更多相关【源码】均匀流形近似与投影(UMAP)算法仿真内容请搜索靠谱客的其他文章。

发表评论 取消回复