Contents

1. Pitfalls I ran into (TensorFlow)

2. TensorBoard

3. Code implementation (Python 3.5)

4. Results and analysis

1. Pitfalls I ran into (TensorFlow)

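In the previous chapter every CNN algorithm was implemented by hand, which can hide hard-to-find bugs; that is one reason the accuracy was not high. This chapter implements the convolutional neural network with the TensorFlow framework instead. The data source is still CIFAR (see the previous chapter for how to download it).

When implementing a CNN with TensorFlow, keep the following points in mind: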

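1. When the input data is defined, x is only a placeholder. It holds no real value and exists only so the graph can be built and the corresponding tensor nodes obtained. This is the opposite of the flow in our earlier hand-written code: the actual data only flows when the graph is run inside a session.

x: input data; y_: label data; keep_prob: dropout keep probability, used to prevent overfitting.

They are defined as global variables: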

x = tf.placeholder(tf.float32, [None, 3072], name='x')

y_ = tf.placeholder(tf.float32, [None, 10], name='y_')

keep_prob = tf.placeholder(tf.float32)

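Inside the session, the model variables (weights and biases) must be initialized before use. Placeholders are not initialized; they are fed: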

sess.run(tf.global_variables_initializer())

Whenever a tensor is evaluated in the session (sess.run or .eval), you must feed a value for every placeholder it depends on (x, y_, keep_prob):

train_accuracy = accuracy.eval(feed_dict={x: batch[0], y_: batch[1], keep_prob: 1.0})

2. The dataset labels must be one-hot encoded.

For example, CIFAR has 10 classes, numbered 0 to 9, so class label 6 becomes the vector [0,0,0,0,0,0,1,0,0,0].
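As a quick check of the example above, here is a minimal NumPy sketch of the conversion that dense_to_one_hot in datasets.py performs. It uses fancy indexing instead of flat offsets. The function name one_hot is hypothetical.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Map integer class labels (0..num_classes-1) to one-hot row vectors."""
    labels = np.asarray(labels, dtype=np.int64)
    out = np.zeros((labels.shape[0], num_classes), dtype=np.float32)
    # Row i gets a 1 in column labels[i]; every other entry stays 0.
    out[np.arange(labels.shape[0]), labels] = 1.0
    return out

print(one_hot([6])[0].tolist())
# [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```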

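3. Know how the data shape changes at every step. Otherwise, when an error occurs it is hard to locate (in my view this is a weakness of TensorFlow: you cannot trace values in real time).

Note the difference between padding = 'SAME' and 'VALID' (the same formulas apply to the width):

padding = 'SAME' => Height_out = ceil(Height_in / Strides); the filter size does not affect the output size

padding = 'VALID' => Height_out = ceil((Height_in - Filter + 1) / Strides)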

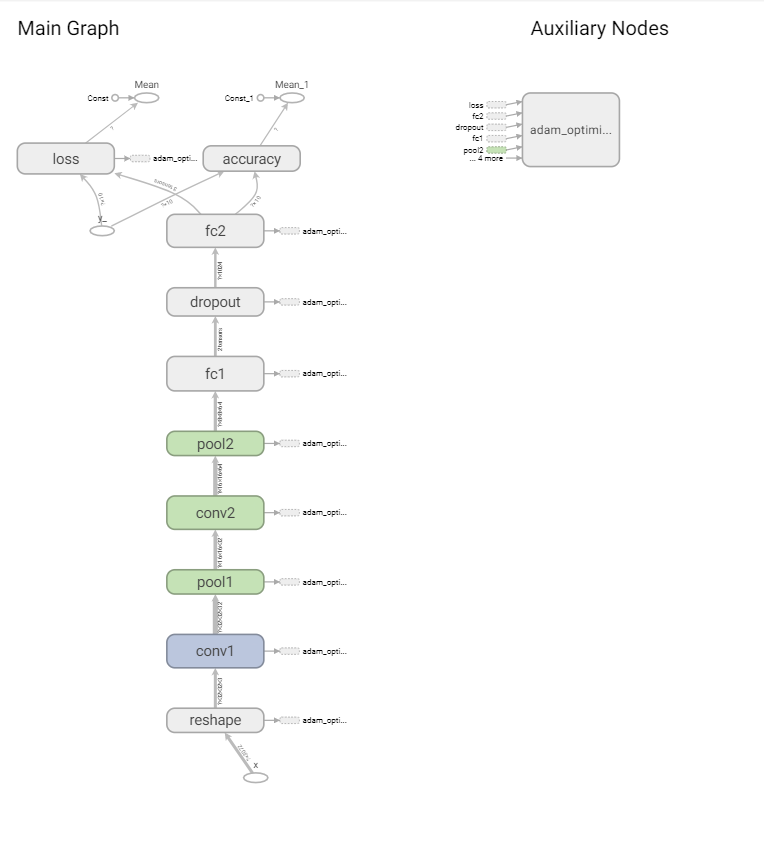
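The two formulas can be turned into a small helper for tracking shapes layer by layer. This is a sketch. The function name conv_output_size and the example layers (a 5x5 convolution with stride 1 and a 2x2 pool with stride 2) are illustrative assumptions, not necessarily the network used in start.py.

```python
import math

def conv_output_size(in_size, filter_size, stride, padding):
    """Output size of a TensorFlow conv/pool op along one spatial dimension."""
    if padding == "SAME":
        # Zero-padding is added as needed, so the filter size does not matter.
        return math.ceil(in_size / stride)
    if padding == "VALID":
        # Only positions where the whole filter fits inside the input count.
        return math.ceil((in_size - filter_size + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")

# A 32x32 CIFAR image through a 5x5 conv (stride 1), then a 2x2 pool (stride 2).
print(conv_output_size(32, 5, 1, "SAME"), conv_output_size(32, 2, 2, "SAME"))    # 32 16
print(conv_output_size(32, 5, 1, "VALID"), conv_output_size(28, 2, 2, "VALID"))  # 28 14
```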

4. Write out the TensorBoard graph, which shows visually how the data shape changes at each step.

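2. TensorBoard

TensorBoard is a tool for visualizing the computation graph. The TensorBoard graph shows each operation of the neural network and how the data flows between them.

Steps:

1. Add the following line inside the session. The graph is written to the tensorboard/ folder under the current working directory (normally the directory of the script):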

tf.summary.FileWriter("tensorboard/", sess.graph)

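2. Open a command prompt (Win+R, then type cmd) and run the following command. TensorBoard must already be installed (pip install tensorboard):

tensorboard --logdir=D:\Project\python\myProject\CNN\tensorflow\captchaIdentify\tensorboard --host=127.0.0.1

'D:\Project\python\myProject\CNN\tensorflow\captchaIdentify\tensorboard' is the absolute path of the tensorboard folder on my machine; replace it with your own.

If it runs correctly, it prints 'Tensorboard at http://127.0.0.1:6006', which means the TensorBoard server is up. Open http://127.0.0.1:6006 in a browser to see the graph.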

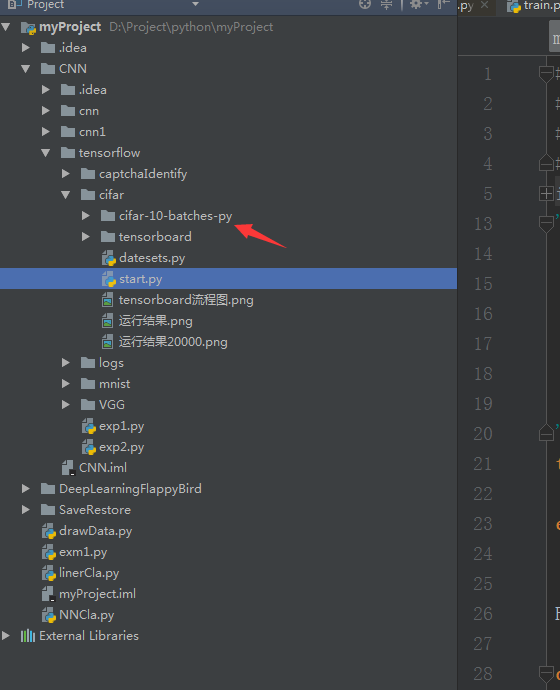

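3. Code implementation (Python 3.6)

The code logic is fairly simple, and I have commented the important parts. If you have any questions, leave a comment and we can discuss them.

The raw image dataset is too large to upload here. Download the CIFAR-10 python version (163 MB) directly from http://www.cs.toronto.edu/~kriz/cifar.html and put it in the same directory as the code.

CIFAR folder location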
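load_CIFAR10 in datasets.py expects its ROOT argument to be the folder that directly contains data_batch_1 to data_batch_5 and test_batch. A small check like the following can catch a wrong path before training starts. This sketch assumes the archive was unpacked to its default folder name, cifar-10-batches-py, next to the scripts. missing_cifar_files is a hypothetical helper.

```python
import os

# Files that load_CIFAR10 reads from its ROOT folder.
EXPECTED_FILES = ["data_batch_%d" % i for i in range(1, 6)] + ["test_batch"]

def missing_cifar_files(root):
    """Return the expected CIFAR-10 batch files that are absent under root."""
    return [name for name in EXPECTED_FILES
            if not os.path.isfile(os.path.join(root, name))]

if __name__ == "__main__":
    root = "cifar-10-batches-py"  # assumption: default unpack folder name
    missing = missing_cifar_files(root)
    print("OK" if not missing else "missing: %s" % missing)
```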

start.py


datasets.py

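```python
import collections
import os
import pickle

import numpy
from tensorflow.python.framework import dtypes
from tensorflow.python.framework import random_seed

# Same definition as base.Datasets in tensorflow.contrib.learn; declared here
# so the file does not depend on tf.contrib (removed in TensorFlow 2.x).
Datasets = collections.namedtuple('Datasets', ['train', 'validation', 'test'])


class DataSet(object):
    """Container class for a dataset (deprecated).

    THIS CLASS IS DEPRECATED. See
    [contrib/learn/README.md](https://www.tensorflow.org/code/tensorflow/contrib/learn/README.md)
    for general migration instructions.
    """

    def __init__(self,
                 images,
                 labels,
                 fake_data=False,
                 one_hot=False,
                 dtype=dtypes.float32,
                 reshape=True,
                 seed=None):
        """Construct a DataSet.

        one_hot arg is used only if fake_data is true. `dtype` can be either
        `uint8` to leave the input as `[0, 255]`, or `float32` to rescale into
        `[0, 1]`. Seed arg provides for convenient deterministic testing.
        """
        seed1, seed2 = random_seed.get_seed(seed)
        # If op level seed is not set, use whatever graph level seed is returned
        numpy.random.seed(seed1 if seed is None else seed2)
        dtype = dtypes.as_dtype(dtype).base_dtype
        if dtype not in (dtypes.uint8, dtypes.float32):
            raise TypeError(
                'Invalid image dtype %r, expected uint8 or float32' % dtype)
        if fake_data:
            self._num_examples = 10000
            self.one_hot = one_hot
        else:
            assert images.shape[0] == labels.shape[0], (
                'images.shape: %s labels.shape: %s' % (images.shape, labels.shape))
            self._num_examples = images.shape[0]

            # Convert shape from [num examples, rows, columns, depth]
            # to [num examples, rows*columns*depth] (CIFAR-10: depth == 3)
            if reshape:
                assert images.shape[3] == 3
                images = images.reshape(images.shape[0],
                                        images.shape[1] * images.shape[2] * images.shape[3])
            if dtype == dtypes.float32:
                # Convert from [0, 255] -> [0.0, 1.0].
                images = images.astype(numpy.float32)
                images = numpy.multiply(images, 1.0 / 255.0)
        self._images = images
        self._labels = labels
        self._epochs_completed = 0
        self._index_in_epoch = 0

    @property
    def images(self):
        return self._images

    @property
    def labels(self):
        return self._labels

    @property
    def num_examples(self):
        return self._num_examples

    @property
    def epochs_completed(self):
        return self._epochs_completed

    def next_batch(self, batch_size, fake_data=False, shuffle=True):
        """Return the next `batch_size` examples from this data set."""
        if fake_data:
            fake_image = [1] * 3072  # 32 * 32 * 3 values per CIFAR-10 image
            if self.one_hot:
                fake_label = [1] + [0] * 9
            else:
                fake_label = 0
            return ([fake_image for _ in range(batch_size)],
                    [fake_label for _ in range(batch_size)])
        start = self._index_in_epoch
        # Shuffle for the first epoch
        if self._epochs_completed == 0 and start == 0 and shuffle:
            perm0 = numpy.arange(self._num_examples)
            numpy.random.shuffle(perm0)
            self._images = self.images[perm0]
            self._labels = self.labels[perm0]
        # Go to the next epoch
        if start + batch_size > self._num_examples:
            # Finished epoch
            self._epochs_completed += 1
            # Get the rest examples in this epoch
            rest_num_examples = self._num_examples - start
            images_rest_part = self._images[start:self._num_examples]
            labels_rest_part = self._labels[start:self._num_examples]
            # Shuffle the data
            if shuffle:
                perm = numpy.arange(self._num_examples)
                numpy.random.shuffle(perm)
                self._images = self.images[perm]
                self._labels = self.labels[perm]
            # Start next epoch
            start = 0
            self._index_in_epoch = batch_size - rest_num_examples
            end = self._index_in_epoch
            images_new_part = self._images[start:end]
            labels_new_part = self._labels[start:end]
            return (numpy.concatenate((images_rest_part, images_new_part), axis=0),
                    numpy.concatenate((labels_rest_part, labels_new_part), axis=0))
        else:
            self._index_in_epoch += batch_size
            end = self._index_in_epoch
            return self._images[start:end], self._labels[start:end]


def read_data_sets(train_dir,
                   one_hot=False,
                   dtype=dtypes.float32,
                   reshape=True,
                   validation_size=5000,
                   seed=None):
    # Note: labels are always one-hot encoded below, whatever `one_hot` says.
    train_images, train_labels, test_images, test_labels = load_CIFAR10(train_dir)
    if not 0 <= validation_size <= len(train_images):
        raise ValueError('Validation size should be between 0 and {}. Received: {}.'
                         .format(len(train_images), validation_size))

    validation_images = train_images[:validation_size]
    validation_labels = dense_to_one_hot(train_labels[:validation_size], 10)
    train_images = train_images[validation_size:]
    train_labels = dense_to_one_hot(train_labels[validation_size:], 10)
    test_labels = dense_to_one_hot(test_labels, 10)

    options = dict(dtype=dtype, reshape=reshape, seed=seed)
    train = DataSet(train_images, train_labels, **options)
    validation = DataSet(validation_images, validation_labels, **options)
    test = DataSet(test_images, test_labels, **options)

    return Datasets(train=train, validation=validation, test=test)


def load_CIFAR_batch(filename):
    """ load single batch of cifar """
    with open(filename, 'rb') as f:
        datadict = pickle.load(f, encoding='bytes')
        X = datadict[b'data']
        Y = datadict[b'labels']
        # Each row holds 1024 R, 1024 G, 1024 B values; convert to [N, 32, 32, 3].
        X = X.reshape(10000, 3, 32, 32).transpose(0, 2, 3, 1).astype("float")
        Y = numpy.array(Y)
        return X, Y


def load_CIFAR10(ROOT):
    """ load all of cifar """
    xs = []
    ys = []
    for b in range(1, 6):
        f = os.path.join(ROOT, 'data_batch_%d' % (b, ))
        X, Y = load_CIFAR_batch(f)
        xs.append(X)
        ys.append(Y)
    Xtr = numpy.concatenate(xs)
    Ytr = numpy.concatenate(ys)
    del X, Y
    Xte, Yte = load_CIFAR_batch(os.path.join(ROOT, 'test_batch'))
    return Xtr, Ytr, Xte, Yte


def dense_to_one_hot(labels_dense, num_classes):
    """Convert class labels from scalars to one-hot vectors."""
    num_labels = labels_dense.shape[0]
    index_offset = numpy.arange(num_labels) * num_classes
    labels_one_hot = numpy.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    return labels_one_hot
```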
46 if reshape:

47 assert images.shape[3] == 3

48 images = images.reshape(images.shape[0],

49 images.shape[1] * images.shape[2] * images.shape[3])

50 if dtype == dtypes.float32:

51 # Convert from [0, 255] -> [0.0, 1.0].

52 images = images.astype(numpy.float32)

53 images = numpy.multiply(images, 1.0 / 255.0)

54 self._images = images

55 self._labels = labels

56 self._epochs_completed = 0

57 self._index_in_epoch = 0

58

59 @property

60 def images(self):

61 return self._images

62

63 @property

64 def labels(self):

65 return self._labels

66

67 @property

68 def num_examples(self):

69 return self._num_examples

70

71 @property

72 def epochs_completed(self):

73 return self._epochs_completed

74

75 def next_batch(self, batch_size, fake_data=False, shuffle=True):

76 """Return the next `batch_size` examples from this data set."""

77 if fake_data:

78 fake_image = [1] * 784

79 if self.one_hot:

80 fake_label = [1] + [0] * 9

81 else:

82 fake_label = 0

83 return [fake_image for _ in xrange(batch_size)], [

84 fake_label for _ in xrange(batch_size)

85 ]

86 start = self._index_in_epoch

87 # Shuffle for the first epoch

88 if self._epochs_completed == 0 and start == 0 and shuffle:

89 perm0 = numpy.arange(self._num_examples)

90 numpy.random.shuffle(perm0)

91 self._images = self.images[perm0]

92 self._labels = self.labels[perm0]

93 # Go to the next epoch

94 if start + batch_size > self._num_examples:

95 # Finished epoch

96 self._epochs_completed += 1

97 # Get the rest examples in this epoch

98 rest_num_examples = self._num_examples - start

99 images_rest_part = self._images[start:self._num_examples]

100 labels_rest_part = self._labels[start:self._num_examples]

101 # Shuffle the data

102 if shuffle:

103 perm = numpy.arange(self._num_examples)

104 numpy.random.shuffle(perm)

105 self._images = self.images[perm]

106 self._labels = self.labels[perm]

107 # Start next epoch

108 start = 0

109 self._index_in_epoch = batch_size - rest_num_examples

110 end = self._index_in_epoch

111 images_new_part = self._images[start:end]

112 labels_new_part = self._labels[start:end]

113 return numpy.concatenate(

114 (images_rest_part, images_new_part), axis=0), numpy.concatenate(

115 (labels_rest_part, labels_new_part), axis=0)

116 else:

117 self._index_in_epoch += batch_size

118 end = self._index_in_epoch

119 return self._images[start:end], self._labels[start:end]

120

121 def read_data_sets(train_dir,

122 one_hot=False,

123 dtype=dtypes.float32,

124 reshape=True,

125 validation_size=5000,

126 seed=None):

127

128

129

130

131 train_images,train_labels,test_images,test_labels = load_CIFAR10(train_dir)

132 if not 0 <= validation_size <= len(train_images):

133 raise ValueError('Validation size should be between 0 and {}. Received: {}.'

134 .format(len(train_images), validation_size))

135

136 validation_images = train_images[:validation_size]

137 validation_labels = train_labels[:validation_size]

138 validation_labels = dense_to_one_hot(validation_labels, 10)

139 train_images = train_images[validation_size:]

140 train_labels = train_labels[validation_size:]

141 train_labels = dense_to_one_hot(train_labels, 10)

142

143 test_labels = dense_to_one_hot(test_labels, 10)

144

145 options = dict(dtype=dtype, reshape=reshape, seed=seed)

146 train = DataSet(train_images, train_labels, **options)

147 validation = DataSet(validation_images, validation_labels, **options)

148 test = DataSet(test_images, test_labels, **options)

149

150 return base.Datasets(train=train, validation=validation, test=test)

151

152

153 def load_CIFAR_batch(filename):

154 """ load single batch of cifar """

155 with open(filename, 'rb') as f:

156 datadict = pickle.load(f, encoding='bytes')

157 X = datadict[b'data']

158 Y = datadict[b'labels']

159 X = X.reshape(10000, 3, 32, 32).transpose(0,2,3,1).astype("float")

160 Y = numpy.array(Y)

161 return X, Y

162

163 def load_CIFAR10(ROOT):

164 """ load all of cifar """

165 xs = []

166 ys = []

167 for b in range(1,6):

168 f = os.path.join(ROOT, 'data_batch_%d' % (b, ))

169 X, Y = load_CIFAR_batch(f)

170 xs.append(X)

171 ys.append(Y)

172 Xtr = numpy.concatenate(xs)

173 Ytr = numpy.concatenate(ys)

174 del X, Y

175 Xte, Yte = load_CIFAR_batch(os.path.join(ROOT, 'test_batch'))

176 return Xtr, Ytr, Xte, Yte

177

178 def dense_to_one_hot(labels_dense, num_classes):

179 """Convert class labels from scalars to one-hot vectors."""

180 num_labels = labels_dense.shape[0]

181 index_offset = numpy.arange(num_labels) * num_classes

182 labels_one_hot = numpy.zeros((num_labels, num_classes))

183 labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1

184 return labels_one_hot

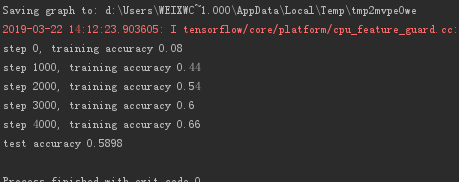

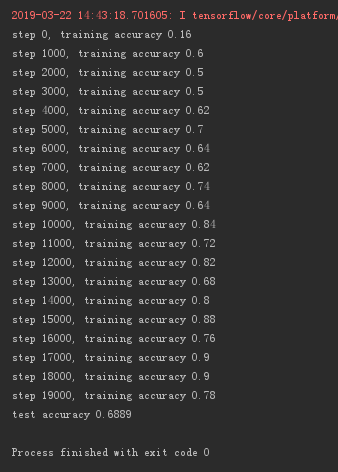

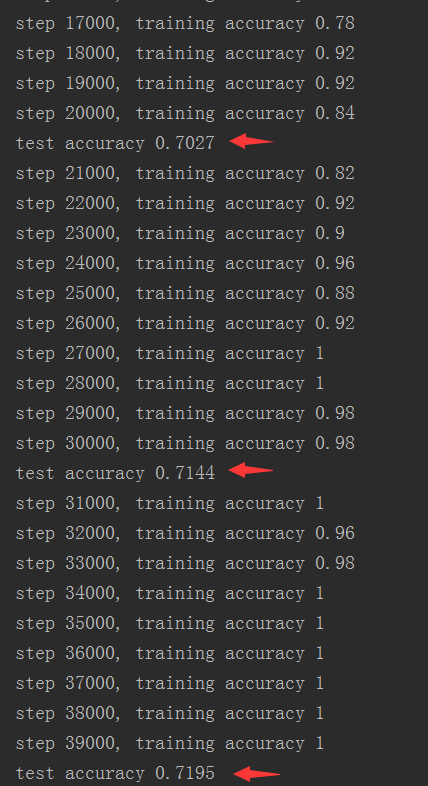

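4. Results and analysis

With the default validation_size=5000 in read_data_sets, 45,000 of the 50,000 CIFAR-10 training images are used for training, and 5,000 images are used for testing.

TensorBoard graph visualization

After 5,000 iterations, test accuracy: 58%

After 20,000 iterations, test accuracy: 68.89%

After 40,000 iterations, test accuracy: 71.95%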

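Analysis: the last figure shows that test accuracy rose from 70.27% to 71.44% between 20,000 and 30,000 iterations, and only from 71.44% to 71.95% between 30,000 and 40,000 iterations.

Meanwhile, training accuracy has already reached 100%, so the model fits the training set completely. Test accuracy has essentially plateaued, and more iterations are unlikely to improve it much.

To push test accuracy higher, the main remaining option is to increase the number of training samples.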
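The diminishing return can be made explicit with a quick calculation on the figures quoted above. The second 10,000 iterations add less than half the gain of the first.

```python
# Test accuracy (%) at each checkpoint, as quoted from the last result figure.
checkpoints = [20000, 30000, 40000]
test_acc = [70.27, 71.44, 71.95]

# Percentage-point gain for each additional 10,000 iterations.
gains = [round(b - a, 2) for a, b in zip(test_acc, test_acc[1:])]
for start, end, gain in zip(checkpoints, checkpoints[1:], gains):
    print("%d -> %d iterations: +%.2f points" % (start, end, gain))
# 20000 -> 30000 iterations: +1.17 points
# 30000 -> 40000 iterations: +0.51 points
```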

