Multi-class classification

1. Randomly select `numbers` images from `images`

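import random
import numpy as np

def randomly_select(images, numbers):
    # images: (m, n) array with one flattened image per row
    m, n = images.shape[0], images.shape[1]
    res = np.zeros((1, n))  # placeholder row, deleted before returning
    for i in range(numbers):
        index = random.randint(0, m - 1)  # random row index; the same image may be picked twice
        res = np.concatenate((res, images[index].reshape(1, n)), axis=0)
    return np.delete(res, 0, axis=0)  # (numbers, n) array, e.g. 100x400

2. Combine several images into one image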

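def mapping(images, images_dimension):
    # images: (k, d*d) array of flattened d x d images; images_dimension: images per row of the mosaic
    image_dimension = int(np.sqrt(images.shape[-1]))  # d, e.g. 20
    image = False  # row strip currently being built
    im = False     # finished strips, stacked vertically
    for i in images:
        if type(image) == bool:
            image = i.reshape(image_dimension, image_dimension)
        else:
            if image.shape[-1] == image_dimension * images_dimension:
                # the strip is full: append it to the mosaic and start a new strip
                if type(im) == bool:
                    im = image
                else:
                    im = np.concatenate((im, image), axis=0)
                image = i.reshape(image_dimension, image_dimension)
            else:
                image = np.concatenate((image, i.reshape(image_dimension, image_dimension)), axis=1)
    if type(im) == bool:  # every image fit into a single strip
        return image
    return np.concatenate((im, image), axis=0)  # e.g. 200x200 array for 100 images of 20x20

3. Load the data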

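Read the .mat file with scipy.io:

import scipy.io as sio

data = sio.loadmat(file_path)  # file_path: path to the .mat data file
X = data['X']
Y = data['y']
# X: 5000 handwritten digits of 20x20 pixels, one flattened 400-pixel image per row
# Y: the matching labels, 1-10, where 10 stands for the digit 0
print(X.shape, Y.shape)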

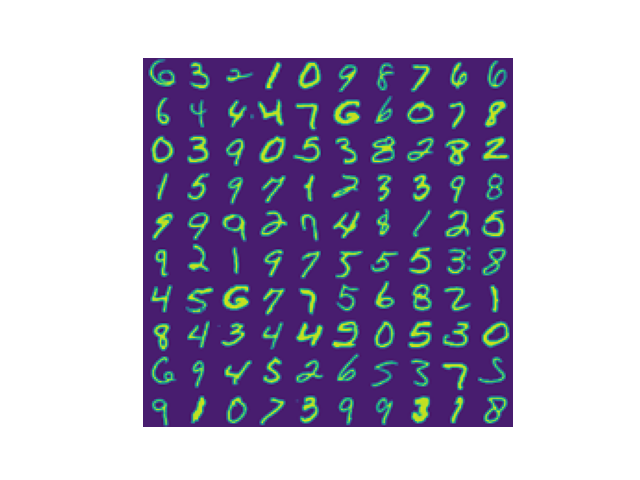

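import matplotlib.pyplot as plt

# Plot 100 randomly selected digits as a 10x10 grid
im = mapping(randomly_select(X, 100), 10)
plt.imshow(im.T)  # pixels are stored column-major (MATLAB order), so each reshaped tile is transposed; transposing the mosaic restores the orientation
plt.axis('off')
plt.show()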
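The loop-based `randomly_select` and `mapping` above can also be written with NumPy array operations. The following is a minimal sketch. It assumes the same layout (one flattened square image per row) and an image count that is an exact multiple of the images per row. The names `sample_images` and `tile_images` are hypothetical. Unlike `random.randint`, this version samples without replacement.

```python
import numpy as np

def sample_images(images, numbers, seed=None):
    """Pick `numbers` distinct rows of `images` at random (no repeats)."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(images.shape[0], size=numbers, replace=False)
    return images[idx]

def tile_images(images, per_row):
    """Arrange k flattened d*d images into a (k/per_row*d) x (per_row*d) mosaic.

    Same layout as mapping(): images fill each row strip from left to right.
    Assumes k is a multiple of per_row.
    """
    k, pixels = images.shape
    d = int(np.sqrt(pixels))
    rows = k // per_row
    # (rows, per_row, d, d) -> (rows, d, per_row, d) -> (rows*d, per_row*d)
    grid = images.reshape(rows, per_row, d, d)
    return grid.transpose(0, 2, 1, 3).reshape(rows * d, per_row * d)
```

For example, `plt.imshow(tile_images(sample_images(X, 100), 10).T)` should draw the same kind of 10x10 grid without the repeated copying that `np.concatenate` performs inside a loop.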

4. Regularized logistic regression: cost function and gradient

def sigmoid(z):
    return 1 / (1 + np.exp(-z))
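In `sigmoid` above, `np.exp(-z)` overflows to `inf` with a RuntimeWarning once `-z` exceeds roughly 709. The quotient still rounds to 0, but the warning is noisy. Below is a minimal overflow-free variant, offered as an optional alternative and not what the original code uses. It only ever exponentiates a non-positive number. `stable_sigmoid` is a hypothetical name. If SciPy is available, `scipy.special.expit` computes the same logistic function.

```python
import numpy as np

def stable_sigmoid(z):
    """Logistic function that never calls exp on a positive argument."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # always in (0, 1], so it cannot overflow
    # z >= 0: 1 / (1 + exp(-z));  z < 0: exp(z) / (1 + exp(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))
```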

def cost(theta, X, y, lamda):
    # Regularized cross-entropy cost; theta[0] (the bias) is not regularized
    m = X.shape[0]
    h = sigmoid(X.dot(theta))
    part1 = np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))
    part2 = (lamda / (2 * m)) * np.sum(np.delete(theta * theta, 0, axis=0))
    return part1 + part2
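For reference, `cost` above computes the regularized cross-entropy below. Here h_θ(x) = g(θᵀx), g is the sigmoid, m is the number of examples, and λ is `lamda`. θ_0 is excluded from the penalty, so the exact gradient has no λ term for θ_0:

$$
J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\Big[-y^{(i)}\log h_\theta\big(x^{(i)}\big) - \big(1-y^{(i)}\big)\log\big(1-h_\theta(x^{(i)})\big)\Big] + \frac{\lambda}{2m}\sum_{j\ge 1}\theta_j^2
$$

$$
\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)} + \begin{cases}0, & j = 0\\[2pt] \dfrac{\lambda}{m}\theta_j, & j \ge 1\end{cases}
$$

In vector form, the first term of the gradient is `X.T.dot(sigmoid(X.dot(theta)) - y) / m`.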

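def gradient(theta, X, y, lamda):
    m = X.shape[0]
    part1 = X.T.dot(sigmoid(X.dot(theta)) - y) / m
    part2 = (lamda / m) * theta
    part2[0] = 0  # do not regularize the bias term, consistent with cost()
    return part1 + part2

5. Convert y into one-hot vectors that represent the digits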

def convert(y):
    # One-hot encode labels: column i stands for digit i (label 10 -> column 0)
    n = len(np.unique(y))
    res = False
    for i in y:
        temp = np.zeros((1, n))
        temp[0][i[0] % 10] = 1
        if type(res) == bool:
            res = temp
        else:
            res = np.concatenate((res, temp), axis=0)
    return res  # 5000x10 array

6. Prediction

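def predict(theta, X):
    # theta: (10, n) matrix, one row of parameters per digit classifier
    p = sigmoid(X.dot(theta.T))  # (m, 10) probabilities
    res = False
    for i in p:
        index = np.argmax(i)  # the classifier with the highest probability wins
        temp = np.zeros((1, 10))
        temp[0][index] = 1
        if type(res) == bool:
            res = temp
        else:
            res = np.concatenate((res, temp), axis=0)
    return res  # one-hot predictions, same column layout as convert()

7. Training and evaluation

import scipy.optimize as opt
from sklearn.metrics import classification_report

y = convert(Y)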

X = np.insert(X, 0, 1, axis=1)  # prepend a column of ones for the bias term
m, n = X.shape[0], X.shape[1]
theta = np.zeros((n,))
trained_theta = False
# One-vs-all: train one regularized logistic regression (lambda = 1) per digit column of y
for i in range(y.shape[-1]):
    res = opt.minimize(fun=cost, x0=theta, args=(X, y[:, i], 1), method="TNC", jac=gradient)
    if type(trained_theta) == bool:
        trained_theta = res.x.reshape(1, n)
    else:
        trained_theta = np.concatenate((trained_theta, res.x.reshape(1, n)), axis=0)
print(classification_report(y, predict(trained_theta, X), target_names=[str(i) for i in range(10)], digits=4))
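The sigmoid is strictly increasing, so the classifier with the largest probability is also the one with the largest raw score Xθ. That means digit predictions and accuracy can be computed without the one-hot loop. The following is a minimal sketch. It assumes `X` already has the bias column and `all_theta` is a (10, n) matrix like `trained_theta`, with column i standing for digit i as in `convert`. `predict_digits` and `accuracy` are hypothetical helpers.

```python
import numpy as np

def predict_digits(all_theta, X):
    """Predicted digit for each row of X; X must already contain the bias column."""
    scores = X @ all_theta.T          # (m, k) raw scores; sigmoid is monotonic, so argmax is unchanged
    return np.argmax(scores, axis=1)  # column index == digit, as in convert()

def accuracy(all_theta, X, labels):
    """labels: raw dataset labels 1-10, where 10 stands for the digit 0."""
    digits = np.asarray(labels).ravel() % 10
    return np.mean(predict_digits(all_theta, X) == digits)
```

`accuracy(trained_theta, X, Y)` should essentially agree with the micro average in the report. The only possible difference comes from ties that arise when the sigmoid saturates to exactly 1.0.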

8. Results (evaluated on the training set)

class         precision  recall  f1-score  support
0             0.9725     0.9920  0.9822    500
1             0.9553     0.9840  0.9695    500
2             0.9444     0.9180  0.9310    500
3             0.9418     0.9060  0.9235    500
4             0.9465     0.9560  0.9512    500
5             0.9212     0.9120  0.9166    500
6             0.9683     0.9760  0.9721    500
7             0.9499     0.9480  0.9489    500
8             0.9205     0.9260  0.9232    500
9             0.9183     0.9220  0.9202    500
micro avg     0.9440     0.9440  0.9440    5000
macro avg     0.9439     0.9440  0.9438    5000
weighted avg  0.9439     0.9440  0.9438    5000
samples avg   0.9440     0.9440  0.9440    5000
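In the report above, every image has exactly one true label and exactly one predicted label. Micro-averaged precision, recall and F1 therefore all reduce to plain accuracy: 0.9440 means 4720 of the 5000 training images are classified correctly. Below is a tiny check of that identity with scikit-learn, using made-up one-hot arrays.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Hypothetical one-hot labels and predictions: 4 samples, 3 classes
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_pred = np.array([[1, 0, 0], [0, 0, 1], [0, 0, 1], [0, 1, 0]])

micro_p = precision_score(y_true, y_pred, average="micro")
micro_r = recall_score(y_true, y_pred, average="micro")
# fraction of rows whose predicted class matches the true class
acc = np.mean(y_true.argmax(axis=1) == y_pred.argmax(axis=1))
print(micro_p, micro_r, acc)  # each of the three equals 0.75
```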

Feedforward neural network

1. As in the previous part, one-hot encode y and write a prediction function

import numpy as np

def convert(y):
    n = len(np.unique(y))
    res = False
    for i in y:
        temp = np.zeros((1, n))
        temp[0][i[0] % 10] = 1  # label 10 (the digit 0) goes to column 0
        if type(res) == bool:
            res = temp
        else:
            res = np.concatenate((res, temp), axis=0)
    return res  # 5000x10 array
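The row-by-row `np.concatenate` in `convert` copies the growing array on every iteration. The vectorized sketch below builds the same kind of 5000x10 matrix by indexing rows of an identity matrix. `convert_fast` is a hypothetical name. It assumes labels 1-10 with 10 meaning the digit 0, as in this dataset. It also uses a fixed class count instead of `len(np.unique(y))`.

```python
import numpy as np

def convert_fast(y, num_classes=10):
    """One-hot encode labels; label 10 (digit 0) maps to column 0, like convert()."""
    digits = np.asarray(y).ravel() % 10  # 1..9 stay as they are, 10 -> 0
    return np.eye(num_classes)[digits]   # row d of the identity is the one-hot vector for d
```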

def sigmoid(z):
    return 1 / (1 + np.exp(-z))
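The `predict` function that follows is the standard forward pass of a three-layer network. With `Theta1` of shape (25, 401) and `Theta2` of shape (10, 26), the network has 400 inputs, 25 hidden units and 10 outputs. For one input row x, with g the sigmoid:

$$
a^{(1)} = \begin{bmatrix}1 \\ x\end{bmatrix},\qquad
a^{(2)} = \begin{bmatrix}1 \\ g\big(\Theta^{(1)} a^{(1)}\big)\end{bmatrix},\qquad
h_\Theta(x) = g\big(\Theta^{(2)} a^{(2)}\big),\qquad
\hat{k} = \arg\max_k \, h_\Theta(x)_k
$$

In the code, each `np.insert(X, 0, 1, axis=1)` prepends the bias 1. `X.dot(t.T)` then evaluates Θa for every row at once.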

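def predict(theta, X):
    # theta: sequence of layer weight matrices, e.g. (Theta1, Theta2)
    labels = theta[-1].shape[0]  # number of output units (10)
    for t in theta:
        X = np.insert(X, 0, 1, axis=1)  # add the bias unit
        X = sigmoid(X.dot(t.T))         # activations of the next layer
    p = X
    res = np.zeros((1, labels))  # placeholder row, dropped at the end
    for i in p:
        index = np.argmax(i)
        temp = np.zeros((1, labels))
        temp[0][index] = 1
        res = np.concatenate((res, temp), axis=0)
    return res[1:]

2. Testing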

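import scipy.io as sio
from sklearn.metrics import classification_report

theta = sio.loadmat(weights_file_path)  # path to the .mat file with the pretrained weights
data = sio.loadmat(data_file_path)      # path to the .mat file with the digit images and labels
# print(theta.keys())
theta1 = theta["Theta1"]  # shape (25, 401)
theta2 = theta["Theta2"]  # shape (10, 26)
y = convert(data['y'])
# The network's output units are ordered for the digits 1 2 3 ... 9 0 (the dataset's labels 1-10),
# while convert() orders its columns 0 1 2 ... 9, so move column 0 to the end to make them agree
y0 = y[:, 0].reshape(y.shape[0], 1)
y = np.concatenate((y[:, 1:], y0), axis=1)
X = data["X"]
print(classification_report(y, predict((theta1, theta2), X), digits=3))

3. Results (row k of the report is column k of y, i.e. the digit k+1; row 9 is the digit 0)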

class         precision  recall  f1-score  support
0             0.968      0.982   0.975     500
1             0.982      0.970   0.976     500
2             0.978      0.960   0.969     500
3             0.970      0.968   0.969     500
4             0.972      0.984   0.978     500
5             0.978      0.986   0.982     500
6             0.978      0.970   0.974     500
7             0.978      0.982   0.980     500
8             0.966      0.958   0.962     500
9             0.982      0.992   0.987     500
micro avg     0.975      0.975   0.975     5000
macro avg     0.975      0.975   0.975     5000
weighted avg  0.975      0.975   0.975     5000
samples avg   0.975      0.975   0.975     5000
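Below is a compact sketch of the same forward pass that returns digit labels directly instead of one-hot rows. It assumes the network's output unit k (0-based) stands for the digit k+1, with the last unit meaning 0, as the column shuffle in the test code implies. `feedforward_digits` is a hypothetical helper. The weights in the usage lines are random, not the trained ones.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def feedforward_digits(weights, X):
    """Forward-propagate X through the layer matrices in `weights`; return digits 0-9."""
    a = X
    for W in weights:
        # prepend the bias column, then apply the layer and the sigmoid
        a = sigmoid(np.hstack([np.ones((a.shape[0], 1)), a]) @ W.T)
    unit = np.argmax(a, axis=1)  # 0-based output unit with the highest activation
    return (unit + 1) % 10       # unit k -> digit k+1; unit 9 -> digit 0

# Usage with random weights of the same shapes as Theta1 (25, 401) and Theta2 (10, 26)
rng = np.random.default_rng(0)
Theta1 = rng.normal(scale=0.1, size=(25, 401))
Theta2 = rng.normal(scale=0.1, size=(10, 26))
X_demo = rng.random((5, 400))
print(feedforward_digits((Theta1, Theta2), X_demo))  # five digits in the range 0-9
```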

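Finally

That is all of 爱撒娇衬衫's recently collected notes on implementing exercise 3 of Andrew Ng's machine learning course in Python: multi-class classification and a feedforward neural network.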
