Following the earlier post "[OpenCV] Calling Caffe, TensorFlow, and Darknet models", we now walk through a concrete example.

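1. Download the model

Darknet is a lesser-known neural network framework written in C. It is similar to TensorFlow, Keras, and Caffe, but not as well known. Because it is written in C, Darknet has some advantages of its own: it is quick and easy to install, and it supports both CPU and GPU computation. The source code is hosted on GitHub, and the project also has an official website.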

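Here is a brief introduction to the YOLO algorithm.

The YOLO algorithm

YOLO (You Only Look Once) is the core object detection algorithm that Joseph Redmon developed on this framework. The author treats object detection as a regression problem: a single convolutional neural network predicts bounding boxes and class probabilities directly from the input image.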

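Advantages of YOLO:

1. YOLO is very fast. It runs at 45 fps (frames per second) on a Titan X GPU, and the fast version reaches roughly 150 fps.

2. YOLO predicts from the global information in the whole image. This differs from detectors based on sliding windows or region proposals. Compared with Fast R-CNN, YOLO cuts the rate of background errors (background detected as an object) by more than half.

3. It learns generalizable representations of objects, which can be understood as strong generalization ability.

4. High accuracy, which is supported by the experiments reported for the algorithm.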

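In fact, object detection is at its core a regression problem, so a CNN that performs regression does not need a complicated design. Rather than training on sliding windows or extracted proposals, YOLO trains on the whole image. The benefit is better separation of objects from background; by comparison, Fast R-CNN, which trains on proposals, often mistakes background regions for objects. That said, YOLO gives up some accuracy in exchange for its detection speed.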
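To make the regression view concrete, here is a minimal sketch of how one row of network output can be turned into a box and a class. It assumes the row layout used by the listing later in this post (normalized center x, center y, width, height, one confidence value, then one score per class). The `Box` and `Detection` types and the `decodeRow` function are hypothetical names introduced for illustration; they are not part of OpenCV or Darknet.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical helper types for illustration; not part of OpenCV.
struct Box { int left, top, width, height; };
struct Detection { Box box; int classId; float score; };

// Decode one output row laid out as
// [centerX, centerY, width, height, confidence, classScore0, classScore1, ...],
// where the first four values are normalized to the range [0, 1].
// Returns false if the row has no class scores or if the best class score
// does not exceed the threshold.
bool decodeRow(const std::vector<float>& row, int frameWidth, int frameHeight,
               float threshold, Detection& out)
{
    if (row.size() <= 5) return false;

    // The class with the highest score is taken as the predicted class.
    auto best = std::max_element(row.begin() + 5, row.end());
    if (*best <= threshold) return false;

    // Scale the normalized box back to pixel coordinates.
    const int centerX = static_cast<int>(row[0] * frameWidth);
    const int centerY = static_cast<int>(row[1] * frameHeight);
    const int width   = static_cast<int>(row[2] * frameWidth);
    const int height  = static_cast<int>(row[3] * frameHeight);

    out.box = { centerX - width / 2, centerY - height / 2, width, height };
    out.classId = static_cast<int>(best - (row.begin() + 5));
    out.score = *best;
    return true;
}
```

For example, a row starting with 0.5, 0.5, 0.25, 0.5 on a 400x200 frame decodes to a 100x100 box whose top-left corner is at (150, 50).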

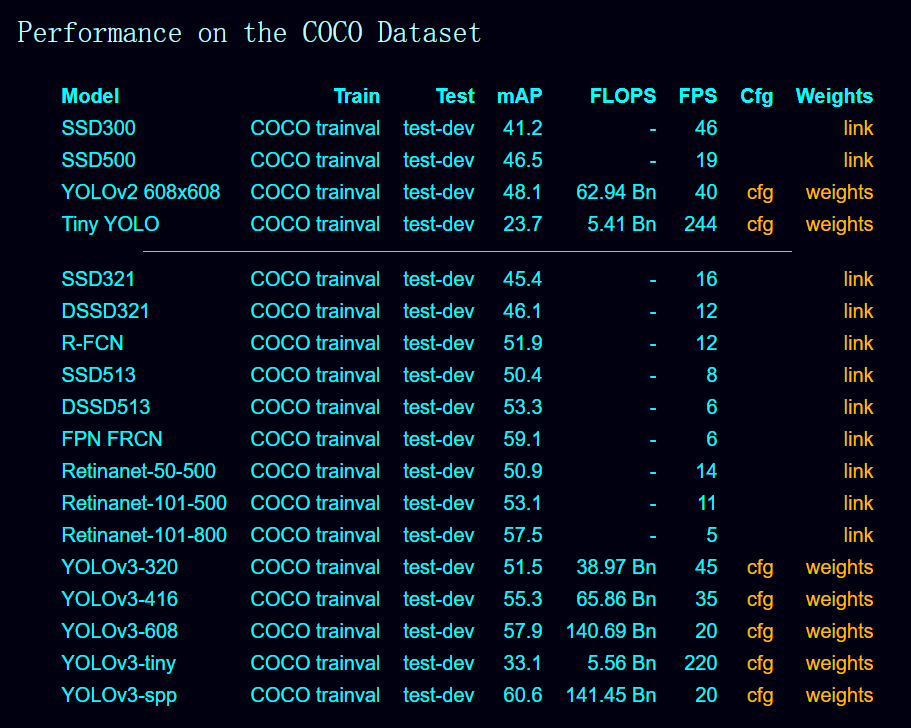

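To download the model, go to the YOLO page on the Darknet website, find the table shown above, locate the YOLOv3-tiny row, save the corresponding cfg configuration file, and download the weights file. Note that the listing in the next section loads yolov3.cfg and yolov3.weights, so point those two paths at whichever files you actually downloaded.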

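2. Write the example

This post borrows the OpenCV sample from "opencv_deeplearning in practice 3: OpenCV object detection with YOLOv3 (CPU)", with minor modifications. The program runs object detection on images, videos, and camera input.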
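The listing that follows passes its candidate boxes to OpenCV's NMSBoxes to drop overlapping duplicates. As background, here is a stdlib-only sketch of greedy non-maximum suppression. It is not OpenCV's implementation and may differ from it in details; `PlainRect`, `iou`, and `greedyNms` are hypothetical names used only for this illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical rectangle type for illustration; not cv::Rect.
struct PlainRect { int x, y, width, height; };

// Intersection over union of two axis-aligned rectangles.
float iou(const PlainRect& a, const PlainRect& b)
{
    const int x1 = std::max(a.x, b.x);
    const int y1 = std::max(a.y, b.y);
    const int x2 = std::min(a.x + a.width, b.x + b.width);
    const int y2 = std::min(a.y + a.height, b.y + b.height);
    const int inter = std::max(0, x2 - x1) * std::max(0, y2 - y1);
    const int uni = a.width * a.height + b.width * b.height - inter;
    return uni > 0 ? static_cast<float>(inter) / uni : 0.0f;
}

// Greedy NMS: drop boxes at or below scoreThreshold, visit the rest in
// descending score order, and keep a box only if its IoU with every box
// already kept is at most nmsThreshold. Returns indices into `boxes`.
std::vector<int> greedyNms(const std::vector<PlainRect>& boxes,
                           const std::vector<float>& scores,
                           float scoreThreshold, float nmsThreshold)
{
    std::vector<int> order;
    for (std::size_t i = 0; i < boxes.size(); ++i)
        if (scores[i] > scoreThreshold) order.push_back(static_cast<int>(i));

    std::stable_sort(order.begin(), order.end(),
                     [&scores](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> kept;
    for (int idx : order) {
        bool keep = true;
        for (int k : kept) {
            if (iou(boxes[idx], boxes[k]) > nmsThreshold) { keep = false; break; }
        }
        if (keep) kept.push_back(idx);
    }
    return kept;
}
```

With the thresholds used in this post (0.5 for confidence, 0.4 for overlap), two 10x10 boxes offset by one pixel have an IoU of 81/119, so only the higher-scoring one survives.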

// This code is written at BigVision LLC. It is based on the OpenCV project. It is subject to the license terms in the LICENSE file found in this distribution and at http://opencv.org/license.html

// Usage example: ./object_detection_yolo.out --video=run.mp4
//                ./object_detection_yolo.out --image=bird.jpg
#include <fstream>
#include <sstream>
#include <iostream>

#include <opencv2/dnn.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>

const char* keys =
    "{help h usage ? | | Usage examples: \n\t\t./object_detection_yolo.out --image=dog.jpg \n\t\t./object_detection_yolo.out --video=run_sm.mp4}"
    "{image i |<none>| input image }"
    "{video v |<none>| input video }"
    "{device d |<none>| device no }"
;

using namespace cv;
using namespace dnn;
using namespace std;

// Initialize the parameters
float confThreshold = 0.5; // Confidence threshold
float nmsThreshold = 0.4;  // Non-maximum suppression threshold
int inpWidth = 416;        // Width of network's input image
int inpHeight = 416;       // Height of network's input image
vector<string> classes;

// Remove the bounding boxes with low confidence using non-maxima suppression
void postprocess(Mat& frame, const vector<Mat>& out);

// Draw the predicted bounding box
void drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat& frame);

// Get the names of the output layers
vector<String> getOutputsNames(const Net& net);

int main(int argc, char** argv)
{
    CommandLineParser parser(argc, argv, keys);
    parser.about("Use this script to run object detection using YOLO3 in OpenCV.");
    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }

    // Load names of classes
    string classesFile = "coco.names";
    // ifstream reads the file classesFile from disk into the in-memory stream ifs
    ifstream ifs(classesFile);
    string line;
    while (getline(ifs, line))
    {
        classes.push_back(line);
    }

    // Give the configuration and weight files for the model
    // (backslashes in Windows paths must be escaped inside a string literal)
    String modelConfiguration = "C:\\Users\\Administrator\\Desktop\\darknet-master\\darknet-master\\cfg\\yolov3.cfg";
    String modelWeights = "C:\\Users\\Administrator\\Desktop\\darknet-master\\darknet-master\\yolov3.weights";

    // Load the network
    Net net = readNetFromDarknet(modelConfiguration, modelWeights);
    net.setPreferableBackend(DNN_BACKEND_OPENCV);
    net.setPreferableTarget(DNN_TARGET_CPU);

    // Open a video file or an image file or a camera stream.
    string str, outputFile;
    VideoCapture cap;
    VideoWriter video;
    Mat frame, blob;
    // Frame rate. cap has not been opened yet, so querying CAP_PROP_FPS here would
    // not give a valid value; 30 matches the value passed to the VideoWriter below.
    int rate = 30;

    try {
        outputFile = "yolo_out_cpp.avi";
        if (parser.has("image"))
        {
            // Open the image file
            str = parser.get<String>("image");
            ifstream ifile(str);
            if (!ifile) throw("error");
            cap.open(str);
            str.replace(str.end() - 4, str.end(), "_yolo_out_cpp.jpg");
            outputFile = str;
        }
        else if (parser.has("video"))
        {
            // Open the video file
            str = parser.get<String>("video");
            ifstream ifile(str);
            if (!ifile) throw("error");
            cap.open(str);
            str.replace(str.end() - 4, str.end(), "_yolo_out_cpp.avi");
            outputFile = str;
        }
        // Open the webcam
        else cap.open(parser.get<int>("device"));
    }
    catch (...) {
        cout << "Could not open the input image/video stream" << endl;
        return 0;
    }

    // Get the video writer initialized to save the output video
    if (!parser.has("image")) {
        // The FOURCC selects the codec (MJPG here); choose the one that suits your setup
        video.open(outputFile, VideoWriter::fourcc('M', 'J', 'P', 'G'), 30, Size((int)cap.get(CAP_PROP_FRAME_WIDTH), (int)cap.get(CAP_PROP_FRAME_HEIGHT)));
    }

    // Create a window
    static const string kWinName = "Deep learning object detection in OpenCV";
    namedWindow(kWinName, WINDOW_NORMAL);

    // Process frames.
    while (1)
    {
        // Exit when Esc is pressed
        if (waitKey(10) == 27)
        {
            break;
        }

        // get frame from the video
        cap >> frame;

        // Stop the program if reached end of video
        if (frame.empty()) {
            cout << "Done processing. " << endl;
            cout << "Output file is stored as " << outputFile << endl;
            waitKey(3000);
            break;
        }

        // Create a 4D blob from a frame.
        // cv::Size replaces the legacy C-API cvSize used in the original sample.
        blobFromImage(frame, blob, 1 / 255.0, Size(inpWidth, inpHeight), Scalar(0, 0, 0), true, false);

        //Sets the input to the network
        net.setInput(blob);

        // Runs the forward pass to get output of the output layers
        vector<Mat> outs;
        net.forward(outs, getOutputsNames(net));

        // Remove the bounding boxes with low confidence
        postprocess(frame, outs);

        // Put efficiency information. The function getPerfProfile returns the overall time for inference(t) and the timings for each of the layers(in layersTimes)
        vector<double> layersTimes;
        double freq = getTickFrequency() / 1000;
        double t = net.getPerfProfile(layersTimes) / freq;
        string label = format("Inference time for a frame : %.2f ms", t);
        putText(frame, label, Point(0, 15), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255));

        // Write the frame with the detection boxes
        Mat detectedFrame;
        frame.convertTo(detectedFrame, CV_8U);
        if (parser.has("image"))
        {
            imwrite(outputFile, detectedFrame);
        }
        else
        {
            video.write(detectedFrame);
        }

        // Show the frame
        imshow(kWinName, frame);
    }

    if (!parser.has("image"))
    {
        video.release();
    }
    cap.release();
    return 0;
}

// Remove the bounding boxes with low confidence using non-maxima suppression
void postprocess(Mat& frame, const vector<Mat>& outs)
{
    vector<int> classIds;
    vector<float> confidences;
    vector<Rect> boxes;

    for (size_t i = 0; i < outs.size(); ++i)
    {
        // Scan through all the bounding boxes output from the network and keep only the
        // ones with high confidence scores. Assign the box's class label as the class
        // with the highest score for the box.
        float* data = (float*)outs[i].data;
        for (int j = 0; j < outs[i].rows; ++j, data += outs[i].cols)
        {
            int a = outs[i].cols; // Values per box: center (2) + width/height (2) + confidence (1) + class scores (80) = 85
            int b = outs[i].rows; // Number of candidate boxes in this output, e.g. 507
            // Columns 6 through the last hold this box's score for each of the 80 classes
            Mat scores = outs[i].row(j).colRange(5, outs[i].cols);
            Point classIdPoint;
            double confidence;
            // Get the value and location of the maximum score (the best-scoring class)
            minMaxLoc(scores, 0, &confidence, 0, &classIdPoint);
            if (confidence > confThreshold) // Confidence threshold
            {
                int centerX = (int)(data[0] * frame.cols);
                int centerY = (int)(data[1] * frame.rows);
                int width = (int)(data[2] * frame.cols);
                int height = (int)(data[3] * frame.rows);
                int left = centerX - width / 2;
                int top = centerY - height / 2;

                classIds.push_back(classIdPoint.x);
                confidences.push_back((float)confidence);
                boxes.push_back(Rect(left, top, width, height));
            }
        }
    }

    // Perform non maximum suppression to eliminate redundant overlapping boxes with
    // lower confidences
    vector<int> indices;
    // Arguments: boxes, confidences, confidence threshold, NMS threshold, indices of the kept boxes (output)
    NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, indices);
    for (size_t i = 0; i < indices.size(); ++i)
    {
        int idx = indices[i];  // Index of the kept box
        Rect box = boxes[idx]; // Box coordinates (rectangular region)
        drawPred(classIds[idx], confidences[idx], box.x, box.y,
                 box.x + box.width, box.y + box.height, frame);
    }
}

// Draw the predicted bounding box
void drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat& frame)
{
    //Draw a rectangle displaying the bounding box
    rectangle(frame, Point(left, top), Point(right, bottom), Scalar(0, 0, 255));

    //Get the label for the class name and its confidence
    string label = format("%.2f", conf);
    //If class names were loaded, prepend the matching name
    if (!classes.empty())
    {
        CV_Assert(classId < (int)classes.size());
        label = classes[classId] + ":" + label;
    }

    //Display the label at the top of the bounding box
    int baseLine;
    Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);
    top = max(top, labelSize.height);
    //Draw the label text on the box
    putText(frame, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255, 255, 255));
}

// Get the names of the output layers
vector<String> getOutputsNames(const Net& net)
{
    static vector<String> names;
    if (names.empty())
    {
        //Get the indices of the output layers, i.e. the layers with unconnected outputs
        vector<int> outLayers = net.getUnconnectedOutLayers();

        //get the names of all the layers in the network
        vector<String> layersNames = net.getLayerNames();

        // Get the names of the output layers in names
        names.resize(outLayers.size());
        for (size_t i = 0; i < outLayers.size(); ++i)
            names[i] = layersNames[outLayers[i] - 1];
    }
    return names;
}

3. Run the example

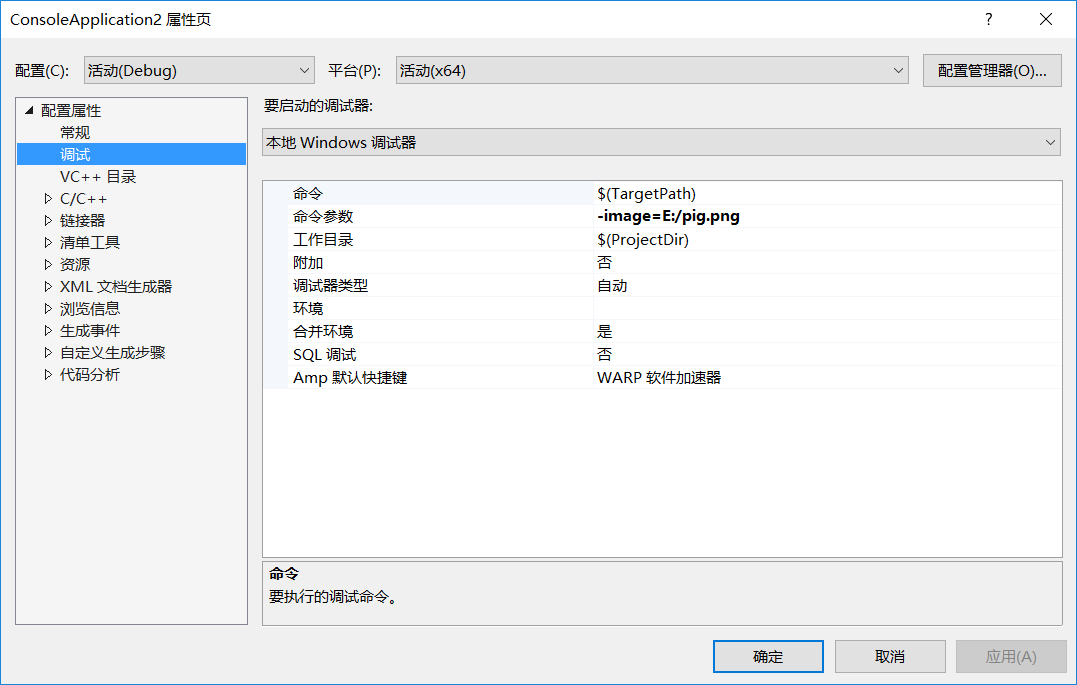

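Because the example uses CommandLineParser to parse its arguments, you need to set the command arguments when debugging. To test detection on the camera, an image, and a video, use -device=0, -image=E:/pig.png, and -video=E:test.mp4 respectively.

Running from the command line works the same way; the only difference is that the program name comes first.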
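One detail to watch when choosing input files: the listing builds the output file name by replacing the last four characters of the input path, which assumes a three-letter extension such as .png or .mp4. A path like clip.jpeg would become clip._yolo_out_cpp.jpg. The sketch below shows one way to strip whatever extension is present instead; `makeOutputName` is a hypothetical helper, not something the original sample provides.

```cpp
#include <string>

// Hypothetical helper: drop the extension of `path` (if any) and append `suffix`.
// A dot only counts as the start of an extension when it appears after the
// last path separator, so directory names containing dots are left alone.
std::string makeOutputName(const std::string& path, const std::string& suffix)
{
    const std::size_t slash = path.find_last_of("/\\");
    const std::size_t dot = path.find_last_of('.');
    const bool hasExt = dot != std::string::npos &&
                        (slash == std::string::npos || dot > slash);
    return (hasExt ? path.substr(0, dot) : path) + suffix;
}
```

Calling makeOutputName("E:/pig.png", "_yolo_out_cpp.jpg") yields E:/pig_yolo_out_cpp.jpg, the same name the listing produces for that input.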

Detecting an image
