1. Major changes in the Kubernetes 1.24 release

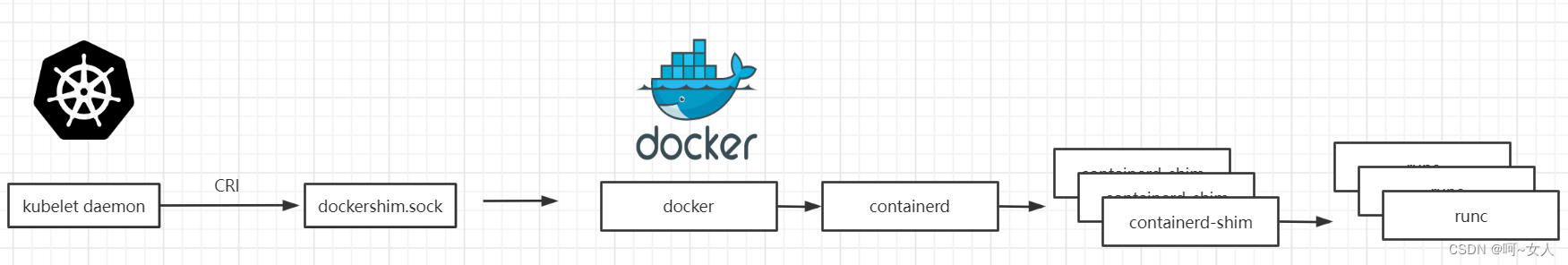

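In this release, 14 enhancements graduated to stable, and many practical features were introduced, such as StatefulSets rolling out updates to several replicas at once (maxUnavailable) and a new NetworkPolicyStatus field on NetworkPolicy to make troubleshooting easier.

Kubernetes formally removed support for Dockershim, finally closing the long-running "deprecate Dockershim" discussion.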

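The main changes include:

1. maxUnavailable for StatefulSets

2. Avoiding collisions when allocating IP addresses to Services

3. Preventing unauthorized volume mode conversion

4. Volume populators graduate to beta

5. gRPC container probes graduate to beta

6. Storage capacity tracking graduates to GA

7. Volume expansion is now stable

and more.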

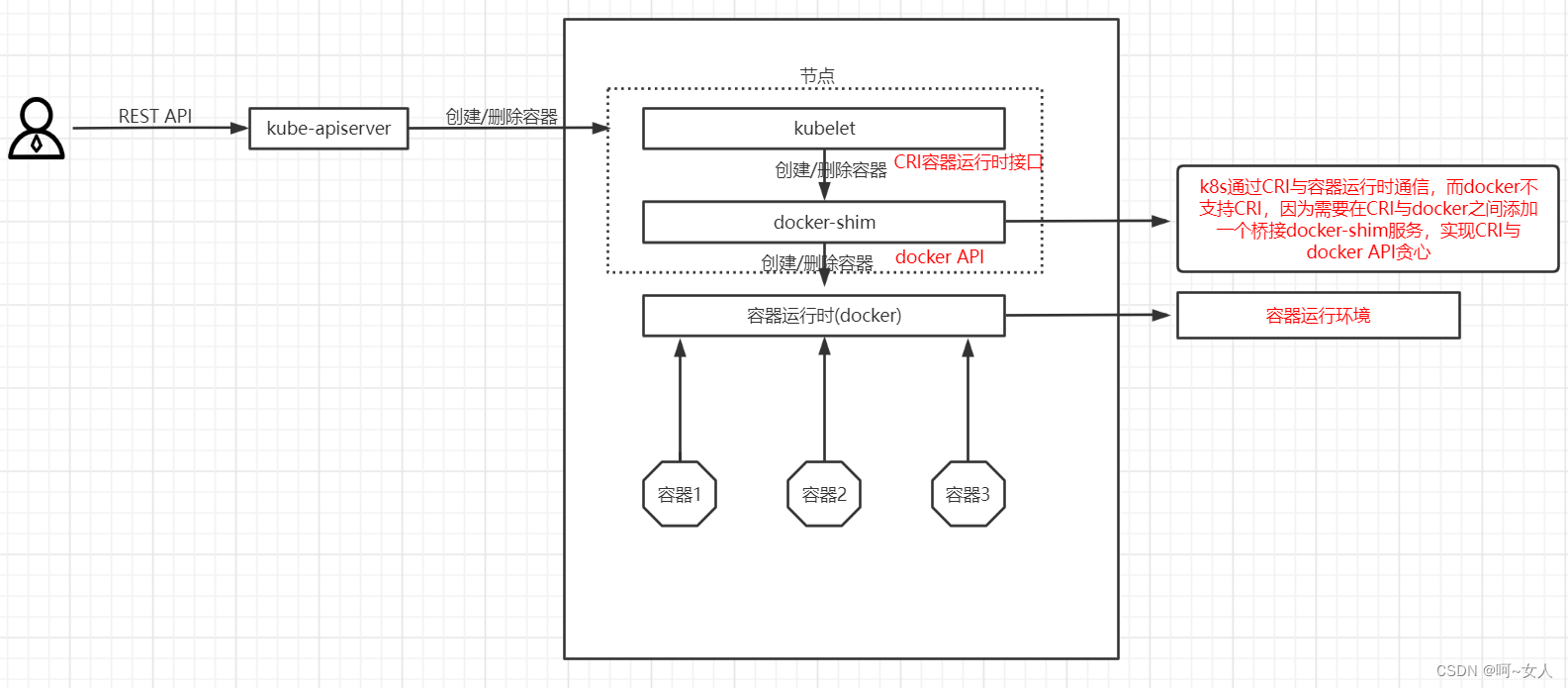

Before Kubernetes 1.24

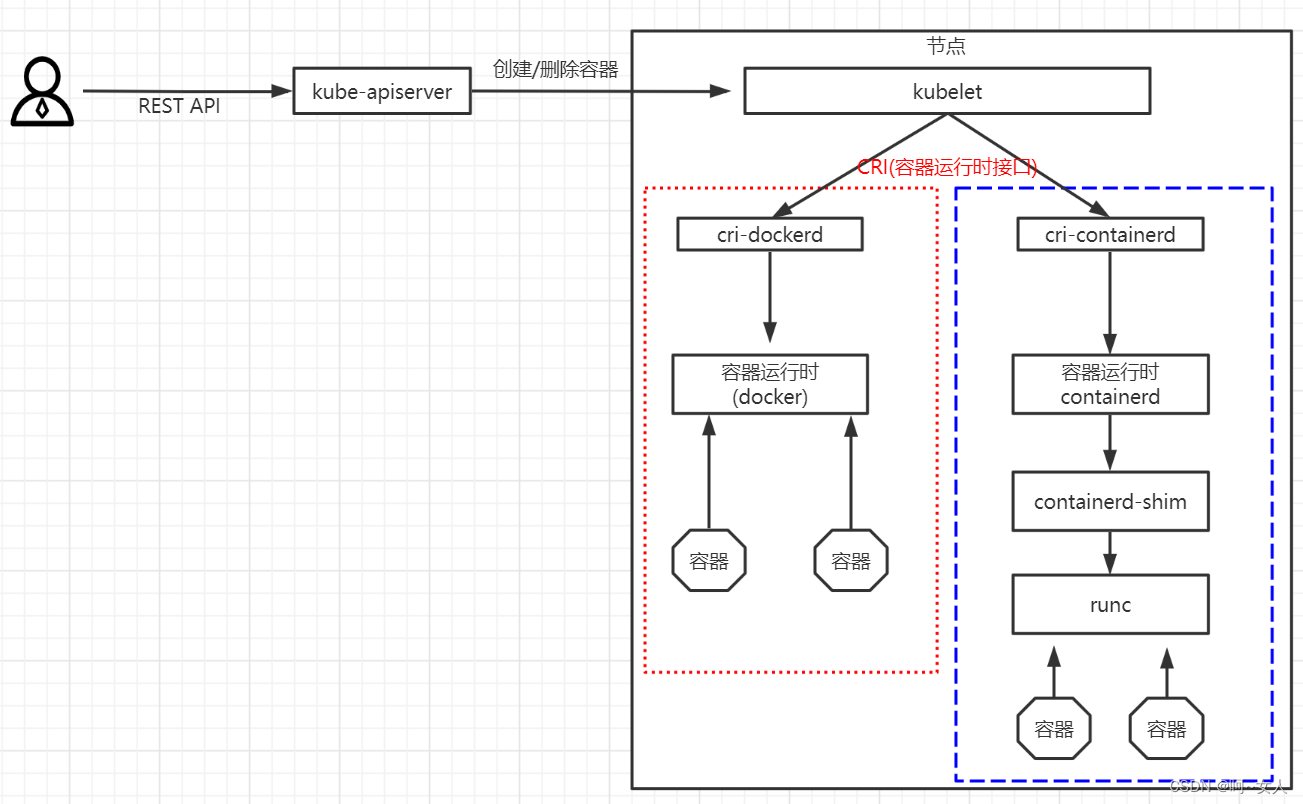

After Kubernetes 1.24


2. Software versions

docker-ce-20.10.17

kubernetes 1.24.2

CentOS 7.4

kernel 5.18.10

3. Node plan

192.168.253.95 master

192.168.253.96 node1

192.168.253.97 node2

4. Base configuration

4.1 Set the hostname (run the matching command on each node)

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

4.2 Configure /etc/hosts (every node)

vim /etc/hosts

192.168.253.95 master

192.168.253.96 node1

192.168.253.97 node2

4.3 Disable the firewall and SELinux (every node)

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

4.4 Disable swap (every node)

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
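A minimal sketch of the same step as reusable functions, assuming a standard /etc/fstab and /proc/swaps; `disable_swap_in_fstab` and `swap_is_off` are hypothetical helper names, not part of any tool. The first reuses the sed expression above; the second checks that the kernel reports no active swap devices.

```shell
#!/usr/bin/env bash
# Sketch: disable swap persistently and verify it.
# Function names are hypothetical helpers, not part of any package.

# Comment out every non-comment fstab line that mentions swap
# (same sed expression as in 4.4). Defaults to /etc/fstab.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -ri '/^[^#]*swap/s@^@#@' "$fstab"
}

# Succeed if no swap device is active. /proc/swaps always has a
# header line, so more than one line means swap is still on.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

For example: `swapoff -a && disable_swap_in_fstab && swap_is_off && echo "swap is off"`. Running the sed twice is safe, because already-commented lines no longer match `^[^#]*swap`.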

4.5 Synchronize the time (every node)

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

-----------------------------------------------------------------------------------------------

Add a cron job that syncs the time every five minutes:

crontab -e

*/5 * * * * ntpdate time2.aliyun.com

4.6 Upgrade the kernel (every node)

Import the ELRepo GPG key (used to verify that the installed packages are genuine)

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

-----------------------------------------------------------------------------------------------

Install the ELRepo yum repository

yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

-----------------------------------------------------------------------------------------------

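Install the kernel-ml package. kernel-ml is the mainline kernel (the latest upstream stable release); kernel-lt is the long-term support kernel.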

yum --enablerepo="elrepo-kernel" -y install kernel-ml.x86_64

-----------------------------------------------------------------------------------------------

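Check that the new kernel is available, then set grub2's default boot entry to 0 (the newest kernel is normally listed first)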

grub2-set-default 0

-----------------------------------------------------------------------------------------------

Regenerate the grub2 configuration file

grub2-mkconfig -o /boot/grub2/grub.cfg
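Before rebooting, it is worth confirming that entry 0 really is the new kernel. A minimal sketch, assuming a BIOS machine where the file generated above lives at /boot/grub2/grub.cfg (UEFI installs of CentOS 7 use /boot/efi/EFI/centos/grub.cfg instead); `list_grub_entries` is a hypothetical helper that prints each top-level menuentry title with its index.

```shell
#!/usr/bin/env bash
# Sketch: list top-level GRUB2 menu entries with their index, so you can
# confirm that index 0 is the newly installed kernel-ml before rebooting.
# Hypothetical helper; pass the grub.cfg path on UEFI systems.
list_grub_entries() {
  local cfg="${1:-/boot/grub2/grub.cfg}"
  # Split on single quotes: field 2 of a "menuentry '<title>' ..." line
  # is the title. Indented (submenu) entries are not matched.
  awk -F"'" 'BEGIN { i = 0 } $1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}
```

After `grub2-set-default 0`, `grubby --default-kernel` should report the 5.18 kernel image, and after the reboot `uname -r` shows which kernel is actually running.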

-----------------------------------------------------------------------------------------------

Reboot (every node)

reboot

-----------------------------------------------------------------------------------------------

Configure IP forwarding and bridge filtering (every node). Create /etc/sysctl.d/k8s.conf with the following content:

[root@master ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

-----------------------------------------------------------------------------------------------

Load the br_netfilter module

modprobe br_netfilter

-----------------------------------------------------------------------------------------------

Check that it is loaded

lsmod|grep br_netfilter

-----------------------------------------------------------------------------------------------

Apply the bridge-filtering and IP-forwarding settings

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1
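The modprobe above only lasts until the next reboot, and the net.bridge.* keys do not exist until br_netfilter is loaded again. A sketch, assuming systemd's modules-load.d mechanism (available on CentOS 7), that writes both the sysctl file and a module list loaded at boot; `write_k8s_net_config` is a hypothetical name, and both directories are parameters so it can be tried on a scratch directory first.

```shell
#!/usr/bin/env bash
# Sketch: write the sysctl settings above and make br_netfilter load on
# every boot via systemd's modules-load.d. Hypothetical helper; the
# directory arguments default to the real system locations.
write_k8s_net_config() {
  local sysctl_dir="${1:-/etc/sysctl.d}" modules_dir="${2:-/etc/modules-load.d}"
  mkdir -p "$sysctl_dir" "$modules_dir" || return 1
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
  # One module name per line; systemd-modules-load.service reads it at boot.
  echo br_netfilter > "$modules_dir/k8s.conf"
}
```

On a real node: `write_k8s_net_config && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf`.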

4.7 Install ipset and ipvsadm (every node)

yum install ipvsadm ipset sysstat conntrack libseccomp -y

4.8 Configure how the IPVS modules are loaded

Create /etc/sysconfig/modules/ipvs.modules with the following content:

[root@master ~]# cat /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

-----------------------------------------------------------------------------------------------

Make the script executable, run it, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod |grep -e ip_vs -e nf_conntrack
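`lsmod | grep` shows what is loaded but not what is missing. A sketch that checks the five modules from ipvs.modules against /proc/modules (the file lsmod reads) and prints the missing ones; `missing_ipvs_modules` is a hypothetical name. A module compiled directly into the kernel does not appear in /proc/modules, so it would be reported as missing even though it is available.

```shell
#!/usr/bin/env bash
# Sketch: report which IPVS-related modules from ipvs.modules are not loaded.
# Hypothetical helper. Prints each missing module name; returns 1 if any
# are missing, 0 if all are present.
missing_ipvs_modules() {
  local modules_file="${1:-/proc/modules}" mod missing=0
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    # The first field of each /proc/modules line is the module name.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$mod"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `missing_ipvs_modules || echo "some IPVS modules are not loaded"`.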

5. Install Docker (every node)

5.1 Set up the yum repository

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum clean all && yum makecache

5.2 List the available Docker versions

yum list docker-ce --showduplicates|sort -r

5.3 Install docker-ce (choose your own version)

yum -y install docker-ce-20.10.17-3.el7

5.4 Start Docker and enable it at boot

systemctl enable docker && systemctl start docker

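5.5 Configure a registry mirror and switch Docker's cgroup driver to systemd

Create /etc/docker/daemon.json with the following content:

cat /etc/docker/daemon.json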

{

"registry-mirrors": ["https://jzngeu7d.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

5.6 Restart Docker

systemctl restart docker

5.7 Check that the cgroup driver was changed

docker info

Cgroup Driver: systemd   (this line means the change took effect)
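A sketch of 5.5 and 5.7 as functions, assuming the same file content as above (the registry mirror is this guide's own Aliyun accelerator address; use yours). `write_docker_daemon_json` writes the file into a directory given as a parameter, and `cgroup_driver_is_systemd` reads `docker info` output on stdin; both names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write daemon.json (5.5) and check the cgroup driver (5.7).
# Hypothetical helpers; replace the mirror URL with your own accelerator.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir" || return 1
  cat > "$dir/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://jzngeu7d.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Reads `docker info` output on stdin; succeeds only if the
# "Cgroup Driver:" line reports systemd.
cgroup_driver_is_systemd() {
  grep -Eq '^[[:space:]]*Cgroup Driver:[[:space:]]*systemd[[:space:]]*$'
}
```

For example: `write_docker_daemon_json && systemctl restart docker && docker info 2>/dev/null | cgroup_driver_is_systemd && echo "cgroup driver: systemd"`. Docker refuses to start when daemon.json is not valid JSON, so writing it from a heredoc avoids stray characters picked up by copy and paste.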

6. Install cri-dockerd (every node), building from source as upstream documents

6.1 Prepare the Go environment

Download page: https://golang.google.cn/dl/

-----------------------------------------------------------------------------------------------

Extract the Go archive

tar -xf go1.18.3.linux-amd64.tar.gz -C /usr/local/

-----------------------------------------------------------------------------------------------

Add the environment variables

vim /etc/profile

export GOROOT=/usr/local/go

export GOPATH=$HOME/go

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

[root@master ~]# source /etc/profile

-----------------------------------------------------------------------------------------------

Check that Go is installed

go version

-----------------------------------------------------------------------------------------------

Create the workspace directories

mkdir -p ~/go/bin ~/go/src ~/go/pkg

6.2 Build and install cri-dockerd

Get the source: git clone https://github.com/Mirantis/cri-dockerd.git

cd cri-dockerd

mkdir bin

go get && go build -o bin/cri-dockerd

mkdir -p /usr/local/bin

install -o root -g root -m 0755 bin/cri-dockerd /usr/local/bin/cri-dockerd

cp -a packaging/systemd/* /etc/systemd/system

sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service

systemctl daemon-reload

systemctl enable cri-docker.service

systemctl enable --now cri-docker.socket

-----------------------------------------------------------------------------------------------

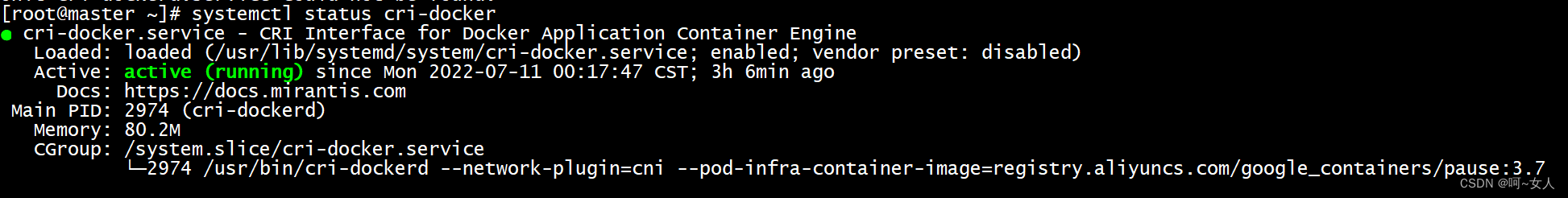

Check that it is running (the unit installed above is cri-docker.service)

systemctl status cri-docker

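7. Alternative: install the prebuilt cri-dockerd binary. Building from source needs a fast network connection (go get downloads all of the Go dependencies), so the binary installation is recommended.

The binary installation goes as follows:

>Download the cri-dockerd binary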

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.3/cri-dockerd-0.2.3.amd64.tgz

-----------------------------------------------------------------------------------------------

>Install

[root@master ~]# tar -xf cri-dockerd-0.2.3.amd64.tgz

[root@master ~]# cp cri-dockerd/cri-dockerd /usr/bin/

[root@master ~]# chmod +x /usr/bin/cri-dockerd

-----------------------------------------------------------------------------------------------

>Create the systemd service unit

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

-----------------------------------------------------------------------------------------------

>Create the socket unit

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

-----------------------------------------------------------------------------------------------

>Start cri-docker

systemctl daemon-reload

systemctl start cri-docker

systemctl enable cri-docker

systemctl status cri-docker
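kubeadm reaches cri-dockerd through unix:///var/run/cri-dockerd.sock. In cri-docker.socket, `%t` expands to /run for system units, and on CentOS 7 /var/run is a symlink to /run, so both paths name the same socket. A sketch of a wait-until-ready check to run before kubeadm init or join; `wait_for_socket` is a hypothetical helper and the 30-second default timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: wait until cri-dockerd's socket exists before running kubeadm.
# Hypothetical helper. Returns 0 once the path is a socket, 1 on timeout.
wait_for_socket() {
  local sock="${1:-/var/run/cri-dockerd.sock}" timeout="${2:-30}" waited=0
  until [ -S "$sock" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $sock" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

For example: `wait_for_socket && kubeadm init --config=/root/yaml/init/kubeadm.yaml`.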

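8. Install Kubernetes

8.1 Set up the Kubernetes yum repository (every node)

Create /etc/yum.repos.d/kubernetes.repo with the following content:

[root@master ~]# cat /etc/yum.repos.d/kubernetes.repo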

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

8.2 Install the Kubernetes components (every node)

>List the available versions

[root@master ~]# yum list kubeadm kubelet kubectl --showduplicates | sort -r

-----------------------------------------------------------------------------------------------

>Install a specific version

[root@master ~]# yum -y install kubeadm-1.24.2 kubectl-1.24.2 kubelet-1.24.2

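8.3 Enable kubelet at boot (every node). kubelet keeps restarting until kubeadm init or kubeadm join has run; that is expected.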

systemctl enable kubelet

8.4 Configure kubelet to use the same cgroup driver as Docker (systemd) by editing the following file (every node)

vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

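8.5 Initialize the control plane (master node)

8.5.1 Option 1: initialize with command-line flags

kubeadm init --kubernetes-version=1.24.2 --pod-network-cidr=10.224.0.0/16 --apiserver-advertise-address=192.168.253.95 --image-repository registry.aliyuncs.com/google_containers --cri-socket unix:///var/run/cri-dockerd.sock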

8.5.2 Option 2: initialize from a configuration file. First print the defaults:

kubeadm config print init-defaults > kubeadm.yaml
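The edits shown in 8.5.3 can also be scripted. A sketch, assuming the file is the unmodified output of `kubeadm config print init-defaults`, that it contains a single `name:` key (the node name under nodeRegistration) and typically no podSubnet line; `patch_kubeadm_config` is a hypothetical helper, and GNU sed is assumed for `-i` and for `\n` in the replacement.

```shell
#!/usr/bin/env bash
# Sketch: apply this guide's edits to `kubeadm config print init-defaults`
# output. Hypothetical helper.
# Arguments: file, master IP, node name, Kubernetes version, pod CIDR.
patch_kubeadm_config() {
  local file="$1" addr="$2" node="$3" version="$4" pod_cidr="$5"
  local cri="unix:///var/run/cri-dockerd.sock"
  local repo="registry.aliyuncs.com/google_containers"
  # Replace the value after each key while keeping the key's indentation.
  sed -i -E \
    -e "s#^( *advertiseAddress:).*#\1 ${addr}#" \
    -e "s#^( *criSocket:).*#\1 ${cri}#" \
    -e "s#^( *name:).*#\1 ${node}#" \
    -e "s#^( *imageRepository:).*#\1 ${repo}#" \
    -e "s#^( *kubernetesVersion:).*#\1 ${version}#" \
    "$file" || return 1
  # Update podSubnet if present, otherwise add it right under serviceSubnet.
  if grep -Eq '^ *podSubnet:' "$file"; then
    sed -i -E "s#^( *podSubnet:).*#\1 ${pod_cidr}#" "$file"
  else
    sed -i -E "s#^( *)serviceSubnet:.*#&\n\1podSubnet: ${pod_cidr}#" "$file"
  fi
}
```

For example: `patch_kubeadm_config kubeadm.yaml 192.168.253.95 master 1.24.2 10.224.0.0/16`, then compare the result with the file in 8.5.3 before running kubeadm init.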

8.5.3 Edit the configuration file

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.253.95   # master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock   # cri-dockerd's socket; this must be changed
  imagePullPolicy: IfNotPresent
  name: master   # master node name
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # domestic mirror; the default registry is overseas
kind: ClusterConfiguration
kubernetesVersion: 1.24.2   # target version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.224.0.0/16   # must match the Calico pool CIDR used later
scheduler: {}
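Section 4.8 loads the IPVS modules, but kube-proxy runs in iptables mode unless told otherwise, and the KUBELET_EXTRA_ARGS flag from 8.4 can also be expressed in kubelet's own config file. A sketch that appends the corresponding KubeletConfiguration and KubeProxyConfiguration documents to kubeadm.yaml before kubeadm init; `append_component_configs` is a hypothetical helper, and switching to IPVS mode is an assumption about the author's intent rather than a step this guide states.

```shell
#!/usr/bin/env bash
# Sketch: append kubelet and kube-proxy component configs to kubeadm.yaml.
# cgroupDriver: systemd matches Docker's setting from 5.5;
# mode: ipvs makes kube-proxy use the IPVS modules loaded in 4.8.
# Hypothetical helper; safe to run twice.
append_component_configs() {
  local file="${1:-kubeadm.yaml}"
  # Skip if already appended, so a second run does not duplicate documents.
  grep -q '^kind: KubeProxyConfiguration' "$file" && return 0
  cat >> "$file" <<'EOF'
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

After kubeadm init, `ipvsadm -Ln` should list virtual servers for Service IPs such as 10.96.0.1 if kube-proxy picked up IPVS mode.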

8.6 Prepare the cluster images

8.6.1 List the images that will be used

[root@master init]# kubeadm config images list --config kubeadm.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.2

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.2

registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.2

registry.aliyuncs.com/google_containers/kube-proxy:v1.24.2

registry.aliyuncs.com/google_containers/pause:3.7

registry.aliyuncs.com/google_containers/etcd:3.5.3-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

8.6.2 Pull the images in advance

[root@master init]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.24.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.7

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

8.7 Initialize the cluster (run on the master)

kubeadm init --config=/root/yaml/init/kubeadm.yaml   # path to the kubeadm.yaml prepared above

8.7.1 Output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.253.95:6443 --token el6kcg.mq8x98tv25gih30p \
    --discovery-token-ca-cert-hash sha256:916306a63c8d6cac21cd97f7077097b7ddcc6479e51681a86cfc64aefa36cf0b

8.8 Set up kubectl access on the master node

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

8.9 Join the worker nodes (run on each worker node)

kubeadm join 192.168.253.95:6443 --token el6kcg.mq8x98tv25gih30p \
    --discovery-token-ca-cert-hash sha256:916306a63c8d6cac21cd97f7077097b7ddcc6479e51681a86cfc64aefa36cf0b --cri-socket unix:///var/run/cri-dockerd.sock

8.10 If the token has expired when a node joins, print a new join command (run on the master)

[root@master ~]# kubeadm token create --print-join-command
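The command printed by `kubeadm token create --print-join-command` does not include the cri-dockerd socket, so running it unchanged on a node hits the same --cri-socket problem as a bare kubeadm join. A sketch that appends the flag; `add_cri_socket` is a hypothetical helper that reads the join command on stdin.

```shell
#!/usr/bin/env bash
# Sketch: append cri-dockerd's socket to a kubeadm join command read on stdin.
# Hypothetical helper; the socket path is the one used throughout this guide.
add_cri_socket() {
  local sock="${1:-unix:///var/run/cri-dockerd.sock}" cmd
  # Strip any trailing whitespace left at the end of the printed command.
  cmd="$(sed 's/[[:space:]]*$//')"
  case "$cmd" in
    *--cri-socket*) printf '%s\n' "$cmd" ;;  # flag already present: unchanged
    *) printf '%s --cri-socket %s\n' "$cmd" "$sock" ;;
  esac
}
```

On the master: `kubeadm token create --print-join-command | add_cri_socket`, then run the printed line as root on the new node.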

8.11 Enable kubectl bash completion (run on the master; requires the bash-completion package)

[root@master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

8.12 Check that the nodes joined (run on the master). The nodes stay NotReady until a pod network is installed in section 9.

[root@master ~]# kubectl get no

NAME     STATUS     ROLES           AGE    VERSION
master   NotReady   control-plane   4m2s   v1.24.2
node1    NotReady   <none>          20s    v1.24.2
node2    NotReady   <none>          10s    v1.24.2

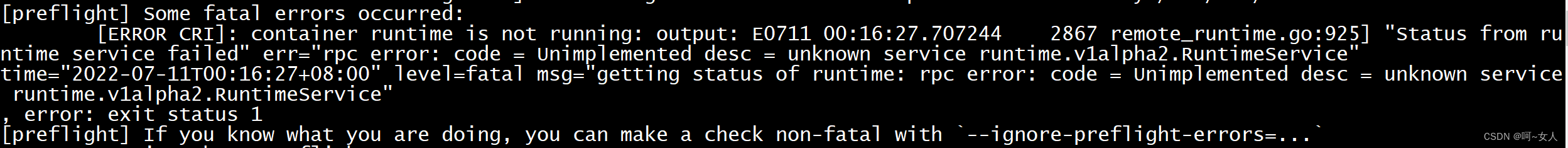

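Note: kubeadm join must be given --cri-socket unix:///var/run/cri-dockerd.sock. Otherwise it fails, typically because it finds more than one CRI socket on the host (containerd's and cri-dockerd's) and will not choose one on its own.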

9. Prepare the cluster network (this cluster uses Calico; run on the master only)

9.1 Download the manifests

wget https://docs.projectcalico.org/manifests/tigera-operator.yaml

wget https://docs.projectcalico.org/manifests/custom-resources.yaml

9.2 Edit custom-resources.yaml

# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/v3.23/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 10.224.0.0/16   # must match podSubnet in the kubeadm init file
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://projectcalico.docs.tigera.io/v3.23/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
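Calico's ipPools cannot be changed after installation, so it is worth checking that the two files agree before applying them. A sketch using plain text matching (not a YAML parser), assuming one podSubnet line in kubeadm.yaml and one cidr line in custom-resources.yaml as in the files above; `yaml_value` and `check_pod_cidr` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: compare podSubnet (kubeadm.yaml) with the Calico pool cidr.
# Hypothetical helpers; simple line matching, not real YAML parsing.

# Print the value of the first "key: value" line (list dash and trailing
# "# comment" ignored).
yaml_value() {
  sed -nE "s/^[[:space:]-]*$1:[[:space:]]*([^[:space:]#]+).*/\1/p" "$2" | head -n1
}

check_pod_cidr() {
  local kubeadm_yaml="${1:-kubeadm.yaml}" calico_yaml="${2:-custom-resources.yaml}"
  local pod cidr
  pod="$(yaml_value podSubnet "$kubeadm_yaml")"
  cidr="$(yaml_value cidr "$calico_yaml")"
  if [ -n "$pod" ] && [ "$pod" = "$cidr" ]; then
    echo "OK: $pod"
  else
    echo "mismatch: podSubnet=${pod:-<none>} calico cidr=${cidr:-<none>}" >&2
    return 1
  fi
}
```

With the files in this guide, `check_pod_cidr /root/yaml/init/kubeadm.yaml custom-resources.yaml` prints `OK: 10.224.0.0/16`.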

9.3 Apply the manifests

kubectl apply -f tigera-operator.yaml

kubectl apply -f custom-resources.yaml

9.4 Remove the master taint (so ordinary Pods can also be scheduled on the master)

[root@master ~]# kubectl taint node master node-role.kubernetes.io/master:NoSchedule-
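In Kubernetes 1.24, kubeadm can put node-role.kubernetes.io/control-plane:NoSchedule on control-plane nodes in addition to the legacy master taint, so removing only the master taint may not be enough. A sketch that tries to remove both and then prints the remaining taints; `untaint_control_plane` is a hypothetical helper, and the errors kubectl prints for a taint that is not present are discarded.

```shell
#!/usr/bin/env bash
# Sketch: remove both control-plane NoSchedule taints from a node.
# Hypothetical helper; node name defaults to this guide's "master".
untaint_control_plane() {
  local node="${1:-master}" key
  for key in node-role.kubernetes.io/master node-role.kubernetes.io/control-plane; do
    # The trailing "-" removes the taint; ignore the error for absent keys.
    kubectl taint node "$node" "${key}:NoSchedule-" 2>/dev/null || true
  done
  # Print the remaining taints (empty output means none are left).
  kubectl get node "$node" -o jsonpath='{.spec.taints}'
  echo
}
```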

9.5 Check the node status and that the Calico Pods are running

[root@master ~]# kubectl get no

NAME     STATUS   ROLES           AGE   VERSION
master   Ready    control-plane   18h   v1.24.2
node1    Ready    <none>          18h   v1.24.2
node2    Ready    <none>          18h   v1.24.2

[root@master ~]# kubectl get po -A

NAMESPACE          NAME                                       READY   STATUS    RESTARTS      AGE
calico-apiserver   calico-apiserver-6d4f897744-96526          1/1     Running   1 (23m ago)   16h
calico-apiserver   calico-apiserver-6d4f897744-qw7gb          1/1     Running   1 (23m ago)   16h
calico-system      calico-kube-controllers-86dff98c45-64cwj   1/1     Running   1 (23m ago)   16h
calico-system      calico-node-5g4z5                          1/1     Running   1 (23m ago)   16h
calico-system      calico-node-glhds                          1/1     Running   1 (23m ago)   16h
calico-system      calico-node-hcc8x                          1/1     Running   1 (23m ago)   16h
calico-system      calico-typha-55fb9c49b8-gqwf6              1/1     Running   2 (23m ago)   16h
calico-system      calico-typha-55fb9c49b8-n7kfq              1/1     Running   2 (23m ago)   16h
kube-system        coredns-74586cf9b6-c7wcw                   1/1     Running   1 (23m ago)   18h
kube-system        coredns-74586cf9b6-sz2gw                   1/1     Running   1 (23m ago)   18h
kube-system        etcd-master                                1/1     Running   3 (23m ago)   18h
kube-system        kube-apiserver-master                      1/1     Running   3 (23m ago)   18h
kube-system        kube-controller-manager-master             1/1     Running   2 (23m ago)   18h
kube-system        kube-proxy-46mll                           1/1     Running   2 (23m ago)   18h
kube-system        kube-proxy-6t2mf                           1/1     Running   2 (23m ago)   18h
kube-system        kube-proxy-czqsq                           1/1     Running   2 (23m ago)   18h
kube-system        kube-scheduler-master                      1/1     Running   3 (23m ago)   18h
tigera-operator    tigera-operator-5dc8b759d9-bwkj5           1/1     Running   5 (23m ago)   16h

9.6 Install the calicoctl client

9.6.1 Download the binary

[root@master ~]# curl -L https://github.com/projectcalico/calico/releases/download/v3.23.1/calicoctl-linux-amd64 -o calicoctl

9.6.2 Install calicoctl

mv calicoctl /usr/bin/

Make it executable

chmod +x /usr/bin/calicoctl

Check the calicoctl version

[root@master ~]# calicoctl version

Client Version: v3.23.1

Git commit: 967e24543

Cluster Version: v3.23.2

Cluster Type: typha,kdd,k8s,operator,bgp,kubeadm

9.6.3 List the nodes running Calico. The client (v3.23.1) and cluster (v3.23.2) versions differ, hence --allow-version-mismatch.

[root@master ~]# DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl get nodes --allow-version-mismatch

NAME

master

node1

node2
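Instead of prefixing every command with DATASTORE_TYPE and KUBECONFIG, calicoctl can read the same settings from its config file, /etc/calico/calicoctl.cfg by default. A sketch that writes that file; `write_calicoctl_cfg` is a hypothetical helper, and the kubeconfig path defaults to the one used above.

```shell
#!/usr/bin/env bash
# Sketch: write calicoctl's config so the env vars are no longer needed.
# Hypothetical helper; calicoctl reads /etc/calico/calicoctl.cfg by default.
write_calicoctl_cfg() {
  local dir="${1:-/etc/calico}" kubeconfig="${2:-$HOME/.kube/config}"
  mkdir -p "$dir" || return 1
  # Unquoted heredoc so ${kubeconfig} is expanded into the file.
  cat > "$dir/calicoctl.cfg" <<EOF
apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "kubernetes"
  kubeconfig: "${kubeconfig}"
EOF
}
```

For example: `write_calicoctl_cfg && calicoctl get nodes --allow-version-mismatch`.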

10. Verify the cluster

10.1 Check component health

[root@master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME                 STATUS    MESSAGE                         ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true","reason":""}

10.2 Verify in-cluster networking (DNS)

[root@master ~]# kubectl get svc -n kube-system

NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   18h

[root@master ~]# dig a www.baidu.com @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.9 <<>> a www.baidu.com @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 6725

;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.baidu.com. IN A

;; ANSWER SECTION:

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN A 39.156.66.14

www.a.shifen.com. 5 IN A 39.156.66.18

;; Query time: 66 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Mon Jul 11 18:54:00 CST 2022

;; MSG SIZE rcvd: 149
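The dig check above hard-codes 10.96.0.10. A sketch that looks up the kube-dns ClusterIP with kubectl and succeeds only if at least one IPv4 address comes back, assuming dig (from bind-utils) is installed on the master; `cluster_dns_resolves` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: resolve a name through the cluster DNS Service (kube-dns).
# Hypothetical helper; needs kubectl access and dig (bind-utils).
cluster_dns_resolves() {
  local name="${1:-www.baidu.com}" server
  # ClusterIP of the kube-dns Service (10.96.0.10 in this cluster).
  server="$(kubectl -n kube-system get svc kube-dns -o jsonpath='{.spec.clusterIP}')" || return 1
  [ -n "$server" ] || return 1
  # +short prints CNAME targets and addresses one per line; require an IPv4 address.
  dig +short A "$name" "@$server" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}
```

For example: `cluster_dns_resolves www.baidu.com && echo "cluster DNS OK"`.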

Note: in this version, resetting a node before re-initializing also requires the socket to be specified:

kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock
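`kubeadm reset` itself reports that it does not remove the CNI configuration in /etc/cni/net.d, IPVS tables, iptables rules, or kubeconfig files. A sketch of a fuller reset, assuming the paths used in this guide; `reset_node` and the CNI_DIR and KUBECONFIG_FILE overrides are hypothetical, and iptables rules are deliberately left alone. It is destructive, so run it only on a node you intend to rebuild.

```shell
#!/usr/bin/env bash
# Sketch: reset this node and clean up what `kubeadm reset` leaves behind.
# DESTRUCTIVE. CNI_DIR and KUBECONFIG_FILE are hypothetical overrides for
# trying it against a scratch directory; the defaults are the real paths.
reset_node() {
  local cni_dir="${CNI_DIR:-/etc/cni/net.d}"
  local kubeconfig="${KUBECONFIG_FILE:-$HOME/.kube/config}"
  # -f skips the confirmation prompt; the socket is required with cri-dockerd.
  kubeadm reset -f --cri-socket unix:///var/run/cri-dockerd.sock || return 1
  rm -rf "${cni_dir:?}"/*      # CNI config written by Calico
  rm -f "$kubeconfig"          # stale admin kubeconfig
  # Clear IPVS virtual servers if ipvsadm is installed (4.7).
  if command -v ipvsadm >/dev/null; then
    ipvsadm --clear
  fi
  return 0
}
```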

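11. Troubleshooting

If you hit this problem, first check that the socket file in the initialization config file is correct.

Then run:

rm -rf /etc/containerd/config.toml

[root@master ~]# systemctl restart containerd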
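The fix above works because the config.toml shipped with Docker's containerd.io package typically disables containerd's CRI plugin (`disabled_plugins = ["cri"]`), so when kubeadm ends up talking to containerd's socket instead of cri-dockerd's it reports the runtime as unavailable. A sketch that detects that setting before anything is deleted; `containerd_cri_disabled` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if containerd's config disables the CRI plugin.
# Hypothetical helper; returns 1 if the file is missing or CRI is enabled.
containerd_cri_disabled() {
  local cfg="${1:-/etc/containerd/config.toml}"
  [ -f "$cfg" ] || return 1
  # Matches e.g.: disabled_plugins = ["cri"]
  grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=[[:space:]]*\[[^]]*"cri"' "$cfg"
}
```

For example: `containerd_cri_disabled && mv /etc/containerd/config.toml /etc/containerd/config.toml.bak && systemctl restart containerd`. Moving the file instead of deleting it keeps a copy; with no config file, containerd falls back to its built-in defaults, which enable CRI.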

Finally

That is everything 无语灰狼 has recently collected on deploying Kubernetes 1.24.2 with kubeadm.

This content was contributed by users or collected from the web for learning and reference; copyright belongs to the original author.
