Part One: Basis for Nonlinear Regression

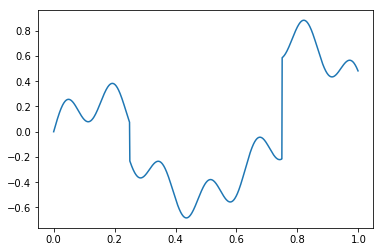

In this part we’ll investigate how to use linear regression to approximate and estimate complicated functions. For example, suppose we want to fit the following function on the interval $[0,1]$:

Import Modules

    import numpy as np
    import matplotlib.pyplot as plt
    import pandas as pd

Create a problem

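    def y(x):
        # Accept lists as well as NumPy arrays
        x = np.asarray(x, dtype=float)
        # Two sinusoids of different frequency
        ret = 0.15 * np.sin(40 * x)
        ret = ret + 0.25 * np.sin(10 * x)
        # Unit steps at x = 0.25 and x = 0.75
        step_fn1 = np.zeros_like(x)
        step_fn1[x >= 0.25] = 1
        step_fn2 = np.zeros_like(x)
        step_fn2[x >= 0.75] = 1
        ret = ret - 0.3 * step_fn1 + 0.8 * step_fn2
        return ret

    # Evaluate on a fine grid over [0, 1) and plot
    x = np.arange(0.0, 1.0, 0.001)
    plt.plot(x, y(x))
    plt.show()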

Plot the graph to see the function

Here the input space, shown on the x-axis, is very simple: it’s just the interval $[0,1]$. The output space is the reals ($\mathcal{R}$), and a graph of the function $\{(x, y(x)) \mid x \in [0,1]\}$ is shown above. Clearly a linear function of the input will not give a good approximation to the function. There are many ways to construct nonlinear functions of the input. Some popular approaches in machine learning are regression trees, neural networks, and local regression (e.g. LOESS). However, our approach here will be to map the input space $[0,1]$ into a “feature space” $\mathcal{R}^d$, and then run standard linear regression in the feature space.
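To make the claim about linear functions concrete, here is a minimal sketch that fits a straight line to the target by least squares and compares its error with that of a constant predictor. The name `target` is an assumption introduced here (it restates `y(x)` so the block runs on its own), and `np.polyfit` with degree 1 stands in for "a linear function of the input".

```python
import numpy as np

def target(x):
    """Restates y(x) from above so this block is self-contained."""
    x = np.asarray(x, dtype=float)
    ret = 0.15 * np.sin(40 * x) + 0.25 * np.sin(10 * x)
    # Boolean masks act as the two unit step functions
    ret = ret - 0.3 * (x >= 0.25) + 0.8 * (x >= 0.75)
    return ret

x = np.arange(0.0, 1.0, 0.001)
t = target(x)

# Least-squares straight line: t ≈ slope * x + intercept
slope, intercept = np.polyfit(x, t, deg=1)
linear_pred = slope * x + intercept

# Mean squared error of the line vs. always predicting the mean
mse_linear = np.mean((t - linear_pred) ** 2)
mse_mean = np.mean((t - t.mean()) ** 2)
print(f"MSE of straight-line fit:   {mse_linear:.4f}")
print(f"MSE of constant (mean) fit: {mse_mean:.4f}")
```

The straight line can never do worse than the constant predictor, but a large residual remains because neither the oscillations nor the jumps can be captured by a single slope and intercept.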

Feature extraction, or “featurization”, maps an input from some input space $\mathcal{X}$ to a vector in $\mathcal{R}^d$. Here our input space is $\mathcal{X} = [0,1]$, so we could write our feature mapping as a function $\Phi : [0,1] \to \mathcal{R}^d$. The vector $\Phi(x)$ is called a feature vector, and each entry of the vector is called a feature. Our feature mapping is typically defined in terms of a set of functions, each computing a single entry of the feature vector. For example, let’s define a feature function $\phi_1(x) = 1(x \ge 0.25)$. The $1(\cdot)$ denotes an indicator function, which is 1 if the expression in the parentheses is true, and 0 otherwise. So $\phi_1(x)$ is $1$ if $x \ge 0.25$ and $0$ otherwise. This function produces a “feature” of $x$. Let’s define two more features: $\phi_2(x) = 1(x \ge 0.5)$ and $\phi_3(x) = 1(x \ge 0.75)$. Now we can define a feature mapping into $\mathcal{R}^3$ as:

$$\Phi(x) = (\phi_1(x), \phi_2(x), \phi_3(x)).$$
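The feature mapping above can be sketched in a few lines of NumPy. The names `THRESHOLDS` and `featurize` are assumptions made for this illustration; the thresholds 0.25, 0.5 and 0.75 come from the three indicator features just defined. The last lines show that a linear function of the features is a piecewise-constant function of $x$: with the hand-picked weights $(-0.3, 0, 0.8)$ it reproduces the step part of the target function defined earlier.

```python
import numpy as np

# Thresholds of the indicator features phi_1, phi_2, phi_3
THRESHOLDS = np.array([0.25, 0.5, 0.75])

def featurize(x):
    """Map inputs in [0, 1] to Phi(x) = (phi_1(x), phi_2(x), phi_3(x)).

    Returns an array of shape (n, 3), one feature vector per input.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    # Broadcasting compares every input against every threshold
    return (x[:, None] >= THRESHOLDS[None, :]).astype(float)

print(featurize([0.1, 0.25, 0.6, 0.9]))
# [[0. 0. 0.]
#  [1. 0. 0.]
#  [1. 1. 0.]
#  [1. 1. 1.]]

# A linear function of the features is piecewise constant in x.
# These weights are chosen by hand to match the steps in y(x).
w = np.array([-0.3, 0.0, 0.8])
xs = np.array([0.1, 0.3, 0.6, 0.9])
steps = featurize(xs) @ w
print(steps)  # values 0, -0.3, -0.3, 0.5 (up to floating-point rounding)
```

Stacking the feature vectors as rows gives the design matrix on which ordinary linear regression can then be run.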
