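Why not also look at the correlation between features? As I understand it, decision-tree models can handle most of this kind of work on their own, but doing it anyway does no harm. Many people skip it because the code is rather tedious and has to treat numeric and string columns differently. Keeping things concise is not a bad thing either.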
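As a rough illustration of the correlation step mentioned above, here is a minimal sketch that is not part of the original code. It assumes pandas and NumPy are available; the helper name `drop_correlated_features` and the 0.9 threshold are hypothetical choices. Pearson correlation is only computed for numeric columns, and string columns are simply left alone, since they would need to be encoded or compared with a different measure first.

```python
import numpy as np
import pandas as pd


def drop_correlated_features(df, threshold=0.9):
    """Drop one column from each pair of numeric columns whose absolute
    Pearson correlation exceeds `threshold` (hypothetical default).
    Non-numeric columns are kept untouched."""
    numeric = df.select_dtypes(include=[np.number])
    corr = numeric.corr().abs()
    # Keep only the strict upper triangle so each pair is examined once.
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    upper = corr.where(mask)
    # A column is dropped if it is highly correlated with any earlier column.
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop), to_drop


demo = pd.DataFrame({
    'a': [1.0, 2.0, 3.0, 4.0, 5.0],
    'b': [2.0, 4.0, 6.0, 8.0, 10.0],    # exactly 2 * a
    'c': [5.0, 1.0, 4.0, 2.0, 3.0],     # only weakly correlated with a and b
    'city': ['x', 'y', 'x', 'z', 'y'],  # string column, ignored by the filter
})
reduced, dropped = drop_correlated_features(demo, threshold=0.9)
print(dropped)
print(list(reduced.columns))
```

The filter is greedy: of two correlated columns it keeps the one that appears first, which may or may not be the more useful one for a given model.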

# coding=utf-8
import numpy as np
import pandas as pd
# Univariate feature selection
from sklearn.feature_selection import SelectKBest, chi2
# Removal of low-variance features
from sklearn.feature_selection import VarianceThreshold
# Recursive feature elimination (RFE)
from sklearn.svm import SVC
from sklearn.feature_selection import RFE
# Tree-based feature importance
from sklearn.ensemble import ExtraTreesClassifier

class FeatureSelection(object):

    def __init__(self, feature_num):
        self.feature_num = feature_num  # number of features each selector keeps
        self.train_test, self.label, self.test = self.read_data()  # features, labels, test set
        self.feature_name = list(self.train_test.columns)  # feature names

    def read_data(self):
        test = pd.read_csv(r'test_feature.csv', encoding='utf-8')
        train_test = pd.read_csv(r'train_test_feature.csv', encoding='utf-8')
        print('Finished reading data...')
        label = train_test[['target']]
        # Drop the leading non-feature columns (presumably an id column,
        # plus 'target' in the training file)
        test = test.iloc[:, 1:]
        train_test = train_test.iloc[:, 2:]
        return train_test, label, test

    def variance_threshold(self):
        sel = VarianceThreshold()
        sel.fit(self.train_test)
        feature_var = list(sel.variances_)  # variance of each feature
        features = dict(zip(self.feature_name, feature_var))
        # Sort by variance (ascending) and keep the feature_num largest
        features = [name for name, _ in sorted(features.items(), key=lambda d: d[1])][-self.feature_num:]
        return set(features)  # return a set of feature names

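    def select_k_best(self):
        # Note: chi2 requires all feature values to be non-negative
        ch2 = SelectKBest(chi2, k=self.feature_num)
        # label is a one-column DataFrame; flatten it to a 1-D array
        ch2.fit(self.train_test, self.label.values.ravel())
        feature_var = list(ch2.scores_)  # chi-squared score of each feature
        features = dict(zip(self.feature_name, feature_var))
        # Sort by score (ascending) and keep the feature_num largest
        features = [name for name, _ in sorted(features.items(), key=lambda d: d[1])][-self.feature_num:]
        return set(features)  # return a set of feature names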

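    def svc_select(self):
        # RFE needs an estimator that exposes coef_ or feature_importances_.
        # An RBF-kernel SVC provides neither, so a linear kernel is used.
        svc = SVC(kernel='linear', C=1, random_state=2018)
        rfe = RFE(estimator=svc, n_features_to_select=self.feature_num, step=1)
        # label is a one-column DataFrame (no .ravel()); flatten its values instead
        rfe.fit(self.train_test, self.label.values.ravel())
        print(rfe.ranking_)
        # Return the selected feature names as a set, like the other selectors,
        # so the result can be intersected in return_feature_set
        return set(name for name, keep in zip(self.feature_name, rfe.support_) if keep)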

    def tree_select(self):
        clf = ExtraTreesClassifier(n_estimators=300, max_depth=7, n_jobs=4)
        # label is a one-column DataFrame; flatten it to a 1-D array
        clf.fit(self.train_test, self.label.values.ravel())
        feature_var = list(clf.feature_importances_)  # importance of each feature
        features = dict(zip(self.feature_name, feature_var))
        # Sort by importance (ascending) and keep the feature_num largest
        features = [name for name, _ in sorted(features.items(), key=lambda d: d[1])][-self.feature_num:]
        return set(features)  # return a set of feature names

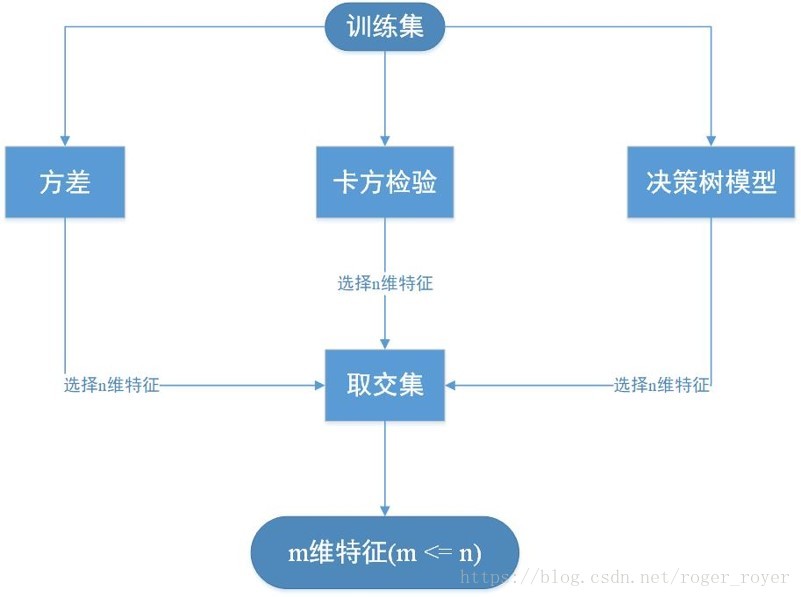

    def return_feature_set(self, variance_threshold=False, select_k_best=False, svc_select=False, tree_select=False):
        # Collect the name set from every enabled selector, then intersect them.
        # (Starting from an empty set and calling intersection() would always
        # give an empty result when variance_threshold is disabled.)
        selected = []
        if variance_threshold:
            selected.append(self.variance_threshold())
        if select_k_best:
            selected.append(self.select_k_best())
        if svc_select:
            selected.append(self.svc_select())
        if tree_select:
            selected.append(self.tree_select())
        names = set.intersection(*map(set, selected)) if selected else set()
        print(names)
        return list(names)

selection = FeatureSelection(100)
selection.return_feature_set(variance_threshold=True, select_k_best=True, svc_select=False, tree_select=True)
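The merging step in `return_feature_set` can also be looked at in isolation. The sketch below is an illustration only and not part of the original class: the helper `combine_feature_sets`, its `how` parameter, and the feature names are all hypothetical. It shows that the intersection keeps only the features every selector agrees on, while a union keeps anything chosen by at least one selector.

```python
def combine_feature_sets(feature_sets, how='intersection'):
    """Merge the name collections returned by several selectors.

    how='intersection' keeps features chosen by every selector;
    how='union' keeps features chosen by at least one selector.
    """
    feature_sets = [set(s) for s in feature_sets]
    if not feature_sets:
        return []  # no selector enabled, nothing to combine
    if how == 'intersection':
        combined = set.intersection(*feature_sets)
    elif how == 'union':
        combined = set.union(*feature_sets)
    else:
        raise ValueError("how must be 'intersection' or 'union'")
    return sorted(combined)  # sorted for a stable, reproducible order


# Hypothetical outputs of three selectors
by_variance = {'age', 'income', 'clicks'}
by_chi2 = {'income', 'clicks', 'region'}
by_tree = {'clicks', 'income', 'tenure'}

common = combine_feature_sets([by_variance, by_chi2, by_tree])
either = combine_feature_sets([by_variance, by_chi2, by_tree], how='union')
print(common)
print(either)
```

With many selectors and a small `feature_num`, the intersection can become very small or even empty, so it is worth checking its size before training on it.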
