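Contents

1. Redis configuration file explained

2. RDB and AOF explained, with pros and cons

3. Redis master-replica replication

4. Redis Cluster scale-out and scale-in

Environment preparation

redis-cluster initialization, scale-out and scale-in

Scale-out

Scale-in

5. LVS scheduling algorithms summary

6. Implementing the LVS NAT and DR models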

1. Redis configuration file explained

[root@master1 ~]# egrep -v '^#|^$' /etc/redis.conf

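bind 127.0.0.1 -::1 ## listen addresses; separate multiple IPs with spaces

port 6379 # listening port

tcp-keepalive 300 # interval in seconds between TCP keepalive probes sent to clients

databases 16 # number of logical databases; the default is 16

save 3600 1 # trigger a snapshot if at least 1 key changed within 3600 seconds

save 300 100 # trigger a snapshot if at least 100 keys changed within 300 seconds

save 60 10000 # trigger a snapshot if at least 10000 keys changed within 60 seconds

stop-writes-on-bgsave-error yes # reject writes when a background snapshot fails

dbfilename dump.rdb # snapshot file name

dir /app/redis/data/ # directory where the RDB snapshot is saved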

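appendonly yes # enable AOF

appendfilename "appendonly.aof" # AOF file name

appendfsync everysec # one of no / always / everysec

no: Redis never calls fsync itself; the operating system decides when to flush data to disk

always: fsync after every write

everysec: fsync once per second

auto-aof-rewrite-percentage 100 # rewrite the AOF once it has grown by this percentage over its size after the last rewrite

auto-aof-rewrite-min-size 64mb # minimum AOF size before an automatic rewrite is triggered

aof-load-truncated yes # whether to load an AOF file whose tail is truncated (for example after the main process was killed or power was lost)

aof-use-rdb-preamble no # hybrid RDB-AOF persistence format added in Redis 4.0. When enabled, the file produced by an AOF rewrite contains both RDB and AOF content: the RDB part records the existing data and the AOF part records the most recent changes. Redis thus combines the strengths of both (the rewrite file is generated quickly, and data loads quickly after a failure)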

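slowlog-log-slower-than 10000 # slow-query threshold in microseconds (10 ms)

slowlog-max-len 128 # maximum number of entries kept in the slow log

replica-serve-stale-data yes # when a replica loses its connection to the master, or a sync is still in progress, the replica can behave in two ways:

#1. If replica-serve-stale-data is yes (the default), the replica keeps answering client read requests, possibly with stale data.

#2. If replica-serve-stale-data is no, every request except a small set of designated commands gets the error "SYNC with master in progress"

replica-read-only yes # make replicas read-only

repl-backlog-size 512mb # size of the replication backlog buffer; memory is allocated only once a replica has connected

repl-backlog-ttl 3600 # how many seconds the master waits with no replica connected before freeing the backlog

replica-priority 100 # when the master becomes unavailable, Sentinel promotes a replica based on this priority; the replica with the lowest value wins, and a value of 0 means the replica is never promoted

rename-command # rename dangerous commands

maxclients 10000 # maximum number of simultaneous client connections

maxmemory # memory limit in bytes; for 8 GB that is 8 * 1024 * 1024 * 1024. Note that replica output buffers are not counted toward maxmemory.

2. RDB and AOF explained, with pros and cons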

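By default Redis persists with RDB, which is good enough for many applications. If Redis crashes, however, several minutes of data may be lost, depending on the interval between dumps as set by the save rules. AOF is the other persistence mode and offers better durability: Redis appends every write it receives to the appendonly.aof file. When AOF is enabled, Redis loads this file into memory at startup and ignores the RDB file.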
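As a rough illustration of how the save rules from section 1 combine, the sketch below checks whether any rule would trigger a background snapshot. The function name should_bgsave and the hard-coded rule list are assumptions made for this example; Redis evaluates the rules internally, and the exact comparison may differ between versions.

```shell
#!/bin/bash
# Hypothetical helper: returns 0 (true) when any "save <seconds> <changes>" rule
# is satisfied, i.e. at least <changes> writes happened and more than <seconds>
# seconds have passed since the last successful snapshot.
SAVE_RULES="3600 1 300 100 60 10000"   # mirrors the save lines shown in section 1

should_bgsave() {
    local elapsed=$1 dirty=$2
    set -- $SAVE_RULES                 # walk the rules as (seconds, changes) pairs
    while [ $# -ge 2 ]; do
        if [ "$dirty" -ge "$2" ] && [ "$elapsed" -gt "$1" ]; then
            return 0
        fi
        shift 2
    done
    return 1
}

should_bgsave 61 10000 && echo "snapshot"      # heavy write load: the 60/10000 rule fires
should_bgsave 61 50    || echo "no snapshot"   # too few changes for any rule so far
```

The second call shows the data-loss window mentioned above: 50 changes stay only in memory until 300 seconds have passed and at least 100 changes have accumulated, or until the 3600-second rule fires.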

##

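RDB: the bgsave command (runs in the background, non-blocking) or the save command (blocks reads and writes while it runs) stores a snapshot of the data at a point in time. Several versions can be kept as backups from different points in time.

Pros:

1. Better performance: to save an RDB file the parent process only forks a child, so the snapshot does not block the parent's I/O

2. Restoring a large dataset from RDB is faster than from AOF

Cons:

1. Data is not saved in real time; writes made between the last snapshot and a crash are lost

2. With a very large dataset, the fork itself can take noticeable time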

##

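AOF: records every write operation in a log file. It is safer, but even repeated operations are all logged.

An AOF file is therefore usually larger than the RDB file. Syncing follows the fsync policy (no/always/everysec); by default AOF syncs once per second.

Pros:

1. Safer than RDB: writes are recorded almost in real time

Cons:

1. The AOF file is usually larger than the equivalent RDB file

3. Redis master-replica replication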

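Every Redis instance starts as a master. Turning one instance into a replica of another is all it takes to build a master-replica setup.

## From the command line

- redis-cli -a 123456 replicaof 10.0.0.8 6379 ## IP and port of the master

- redis-cli -a 123456 config set masterauth 123456 ## password used to authenticate to the master

## Persisting in the configuration file

- vim /etc/redis.conf

replicaof 10.0.0.8 6379

masterauth 123456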
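After pointing a replica at its master, it helps to confirm that the link actually came up. The sketch below parses the text of INFO replication and prints the role plus the link status. The helper name replication_summary is hypothetical; the role and master_link_status fields are the ones INFO replication reports on a replica.

```shell
#!/bin/bash
# Hypothetical helper: reads `INFO replication` text on stdin and prints
# "<role> <link-status>"; the link status is "-" when the field is absent,
# as it is on a master.
replication_summary() {
    tr -d '\r' | awk -F: '
        $1 == "role"               { role = $2 }
        $1 == "master_link_status" { link = $2 }
        END { print role, (link == "" ? "-" : link) }'
}

# Live usage (assumes the replica is reachable and REDISCLI_AUTH holds the password):
#   redis-cli info replication | replication_summary

# Offline demo with canned output:
printf 'role:slave\r\nmaster_host:10.0.0.8\r\nmaster_link_status:up\r\n' | replication_summary
```

A result of "slave down" right after replicaof usually means the initial sync has not finished yet or masterauth is wrong.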

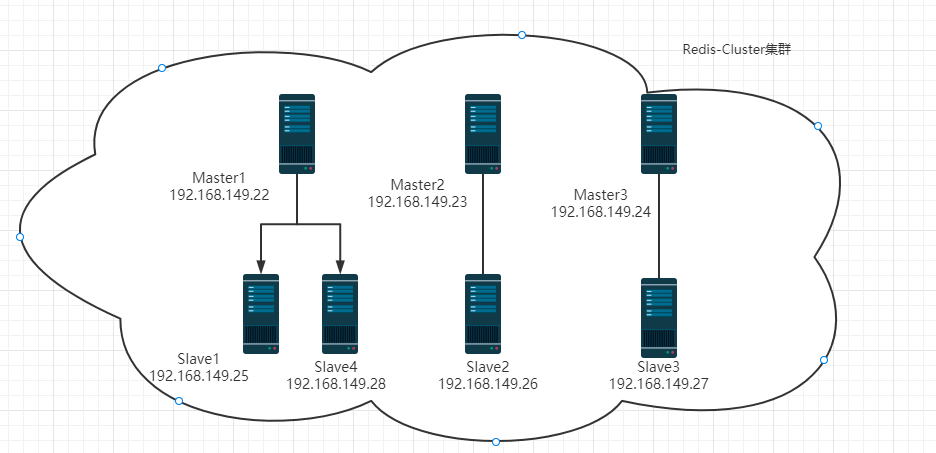

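4. Redis Cluster scale-out and scale-in

Tooling differs between Redis 4 and earlier and Redis 5 and later: up to Redis 4 a cluster is managed with the redis-trib.rb command (requires a Ruby environment); from Redis 5 on, redis-cli --cluster manages the cluster.

Redis Cluster mainly targets large environments that need more performance: multiple writable masters raise write throughput.

Environment preparation:

# Redis version used here: redis-6.2.1

# Installed with the binary deployment script from part two of this series

##

# Any node in a redis-cluster may become a master at any time, so every node uses the same Redis configuration

## Script that modifies redis.conf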

[root@master2 redis-test]# cat redis_cluster_conf.sh

#!/bin/bash

dir=/app/redis/etc

sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e '/masterauth/a masterauth 123456' -e '/# requirepass/a requirepass 123456' -e '/# cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full-coverage yes/c cluster-require-full-coverage no' ${dir}/redis.conf

systemctl restart redis

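## Main settings in redis.conf

[root@master2 ~]# vim /app/redis/etc/redis.conf

bind 0.0.0.0

cluster-enabled yes ## enable Redis Cluster mode

cluster-config-file nodes-6379.conf ## records the cluster's master/replica relationships and slot ranges

masterauth 123456 ## must be set on both masters and replicas

requirepass 123456 ## password clients must supply

cluster-require-full-coverage no ## keeps one unavailable node from taking the whole cluster down

## The cluster bus port 16379 is now listening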

[root@etcd3 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 511 *:6379 *:*

LISTEN 0 32768 192.168.149.28:2379 *:*

LISTEN 0 32768 127.0.0.1:2379 *:*

LISTEN 0 32768 192.168.149.28:2380 *:*

LISTEN 0 128 *:111 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 511 *:16379 *:*

LISTEN 0 511 [::1]:6379 [::]:*

LISTEN 0 128 [::]:111 [::]:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 511 [::1]:16379 [::]:*

## The redis process now shows the [cluster] tag

[root@etcd3 ~]# ps -ef |grep redis

redis 8398 1 0 00:27 ? 00:00:00 /app/redis/bin/redis-server 0.0.0.0:6379 [cluster]

root 8411 3994 0 00:28 pts/0 00:00:00 grep --color=auto redisredis-cluster初始化、扩容与缩容

##创建集群需要将所有节点写上

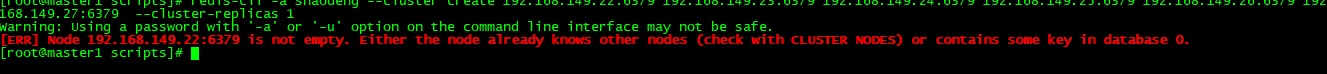

[root@master1 scripts]# redis-cli -a shaodeng --cluster create 192.168.149.22:6379 192.168.149.23:6379 192.168.149.24:6379 192.168.149.25:6379 192.168.149.26:6379 192.168.149.27:6379 --cluster-replicas 1 ##初始化redis-cluster,--cluster-replicas 1 指定一个master有一个slave节点

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.149.26:6379 to 192.168.149.22:6379

Adding replica 192.168.149.27:6379 to 192.168.149.23:6379

Adding replica 192.168.149.25:6379 to 192.168.149.24:6379

...

...

...

M: 4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379

slots:[5461-10922] (5462 slots) master

M: e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379

slots:[10923-16383] (5461 slots) master

S: ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379

replicates e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9

S: 564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379

replicates 9be691e08fc398c86e19bc0e62e30457fa20e5d8

S: 71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379

replicates 4c12e05e09597e4ca0ae35294894cd6184ccc1df

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 192.168.149.22:6379)

M: e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379

slots: (0 slots) slave

replicates e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9

S: 71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379

slots: (0 slots) slave

replicates 4c12e05e09597e4ca0ae35294894cd6184ccc1df

S: 564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379

slots: (0 slots) slave

replicates 9be691e08fc398c86e19bc0e62e30457fa20e5d8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
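The slot ranges printed at the top of the create output follow from splitting the 16384 hash slots evenly across the masters. The sketch below reproduces those ranges; slot_ranges is a hypothetical helper, and the rounding rule is an assumption that happens to match the output above, not a copy of the redis-cli source.

```shell
#!/bin/bash
# Hypothetical helper: split the 16384 hash slots into contiguous ranges,
# one per master, rounding each upper boundary to the nearest slot.
slot_ranges() {
    awk -v n="$1" 'BEGIN {
        total = 16384; per = total / n; first = 0; cursor = 0
        for (i = 0; i < n; i++) {
            last = int(cursor + per - 1 + 0.5)                   # round to nearest
            if (i == n - 1 || last > total - 1) last = total - 1 # last master takes the rest
            printf "Master[%d] -> Slots %d - %d\n", i, first, last
            first = last + 1; cursor += per
        }
    }'
}

slot_ranges 3
# Master[0] -> Slots 0 - 5460
# Master[1] -> Slots 5461 - 10922
# Master[2] -> Slots 10923 - 16383
```

Running slot_ranges 4 gives a feel for how the ranges would shrink if a fourth master were to take an equal share.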

[root@master1 scripts]# redis-cli -a 123456 cluster nodes

e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379@16379 master - 0 1633280408933 7 connected 12768-16383

4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379@16379 master - 0 1633280408000 8 connected 7478-10922

9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379@16379 myself,master - 0 1633280407000 9 connected 0-7477 10923-12767

ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379@16379 slave e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 0 1633280409934 7 connected

71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379@16379 slave 4c12e05e09597e4ca0ae35294894cd6184ccc1df 0 1633280406000 8 connected

564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379@16379 slave 9be691e08fc398c86e19bc0e62e30457fa20e5d8 0 1633280406000 9 connected
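The cluster nodes output above shows an uneven distribution, which is easier to see once the ranges are turned into counts. The sketch below sums the slots owned by each master; slots_per_master is a hypothetical helper that assumes the standard CLUSTER NODES layout, where slot ranges start at the ninth field.

```shell
#!/bin/bash
# Hypothetical helper: reads `CLUSTER NODES` output on stdin and prints
# "<address> <slot count>" for every master.
slots_per_master() {
    awk '$3 ~ /master/ {
        count = 0
        for (i = 9; i <= NF; i++) {
            if ($i ~ /^\[/) continue                 # skip slots that are mid-migration
            n = split($i, r, "-")
            count += (n == 2) ? r[2] - r[1] + 1 : 1  # range "a-b" or a single slot
        }
        sub(/@.*/, "", $2)                           # drop the cluster bus port
        print $2, count
    }'
}

# Offline demo using the three master lines from the output above:
slots_per_master <<'EOF'
e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379@16379 master - 0 1633280408933 7 connected 12768-16383
4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379@16379 master - 0 1633280408000 8 connected 7478-10922
9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379@16379 myself,master - 0 1633280407000 9 connected 0-7477 10923-12767
EOF
# 192.168.149.24:6379 3616
# 192.168.149.23:6379 3445
# 192.168.149.22:6379 9323
```

Node 192.168.149.22 holds 9323 of the 16384 slots here, which is the imbalance the rebalance step evens out.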

Rebalance the slot distribution

[root@master1 ~]# redis-cli -a 123456 --cluster rebalance 192.168.149.22:6379

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 192.168.149.22:6379)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Rebalancing across 3 nodes. Total weight = 3.00

Moving 2016 slots from 192.168.149.22:6379 to 192.168.149.23:6379

######## (progress bar truncated)

Moving 1846 slots from 192.168.149.22:6379 to 192.168.149.24:6379

######## (progress bar truncated)

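Scale-out

Method 1 for adding a node: 1. add the node (a new node joins as a master by default) -> 2. either turn the new node into a replica, or assign slots to the new master

Method 2 for adding a node: redis-cli -a 123456 --cluster add-node <new node:6379> <existing cluster node IP:6379> --cluster-slave --cluster-master-id <ID of the target master>

Add slave4 (192.168.149.28) to the cluster as a replica of Master1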

1. Copy the configuration files and start the service

scp redis.conf 192.168.149.28:/app/redis/etc/

scp redis_6379.conf 192.168.149.28:/app/redis/data/

systemctl enable --now redis

2. Join the Redis cluster

[root@master1 ~]# redis-cli -a 123456 --cluster add-node 192.168.149.28:6379 192.168.149.22:6379

>>> Adding node 192.168.149.28:6379 to cluster 192.168.149.22:6379

>>> Performing Cluster Check (using node 192.168.149.22:6379)

M: 9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379

slots:[3862-7477],[10923-12767] (5461 slots) master

1 additional replica(s)

M: e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379

slots:[2016-3861],[12768-16383] (5462 slots) master

1 additional replica(s)

M: 4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379

slots:[0-2015],[7478-10922] (5461 slots) master

1 additional replica(s)

S: ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379

slots: (0 slots) slave

replicates e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9

S: 71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379

slots: (0 slots) slave

replicates 4c12e05e09597e4ca0ae35294894cd6184ccc1df

S: 564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379

slots: (0 slots) slave

replicates 9be691e08fc398c86e19bc0e62e30457fa20e5d8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.149.28:6379 to make it join the cluster.

[OK] New node added correctly

[root@master1 ~]# redis-cli -a 123456 --cluster check 192.168.149.22:6379

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

192.168.149.22:6379 (9be691e0...) -> 0 keys | 5461 slots | 1 slaves.

192.168.149.28:6379 (ff4c14aa...) -> 0 keys | 0 slots | 0 slaves.

192.168.149.24:6379 (e6142fa5...) -> 0 keys | 5462 slots | 1 slaves.

192.168.149.23:6379 (4c12e05e...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.149.22:6379)

M: 9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379

slots:[3862-7477],[10923-12767] (5461 slots) master

1 additional replica(s)

M: ff4c14aa1c26653edba376586e49e6ad133de24e 192.168.149.28:6379

slots: (0 slots) master

M: e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379

slots:[2016-3861],[12768-16383] (5462 slots) master

1 additional replica(s)

M: 4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379

slots:[0-2015],[7478-10922] (5461 slots) master

1 additional replica(s)

S: ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379

slots: (0 slots) slave

replicates e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9

S: 71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379

slots: (0 slots) slave

replicates 4c12e05e09597e4ca0ae35294894cd6184ccc1df

S: 564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379

slots: (0 slots) slave

replicates 9be691e08fc398c86e19bc0e62e30457fa20e5d8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

3. Turn the newly added node into a replica

On the new node, run cluster replicate <master-id>

[root@etcd3 ~]# redis-cli

127.0.0.1:6379> auth 123456

OK

127.0.0.1:6379> CLUSTER REPLICATE 9be691e08fc398c86e19bc0e62e30457fa20e5d8

OK

4. Check the status

[root@master1 ~]# redis-cli -a 123456 info Replication

# Replication

role:master

connected_slaves:2

slave0:ip=192.168.149.26,port=6379,state=online,offset=321972,lag=1

slave1:ip=192.168.149.28,port=6379,state=online,offset=321972,lag=0

master_failover_state:no-failover

master_replid:2a6ab7e880390cd0dad3784ee558b9f6d561fde1

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:321972

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:321972

[root@master1 ~]# redis-cli -a 123456 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

ff4c14aa1c26653edba376586e49e6ad133de24e 192.168.149.28:6379@16379 slave 9be691e08fc398c86e19bc0e62e30457fa20e5d8 0 1633510304694 9 connected

e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379@16379 master - 0 1633510306000 11 connected 2016-3861 12768-16383

4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379@16379 master - 0 1633510307198 10 connected 0-2015 7478-10922

9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379@16379 myself,master - 0 1633510300000 9 connected 3862-7477 10923-12767

ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379@16379 slave e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 0 1633510308200 11 connected

71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379@16379 slave 4c12e05e09597e4ca0ae35294894cd6184ccc1df 0 1633510308000 10 connected

564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379@16379 slave 9be691e08fc398c86e19bc0e62e30457fa20e5d8 0 1633510305195 9 connected

------------------------------------------ [divider] ----------------------------------

Alternatively, do it all in one command

[root@master1 ~]# redis-cli -a 123456 --cluster add-node 192.168.149.28:6379 192.168.149.22:6379 --cluster-slave --cluster-master-id 9be691e08fc398c86e19bc0e62e30457fa20e5d8

>>> Adding node 192.168.149.28:6379 to cluster 192.168.149.22:6379

>>> Performing Cluster Check (using node 192.168.149.22:6379)

M: 9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379

slots:[3862-7477],[10923-12767] (5461 slots) master

1 additional replica(s)

M: e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379

slots:[2016-3861],[12768-16383] (5462 slots) master

1 additional replica(s)

M: 4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379

slots:[0-2015],[7478-10922] (5461 slots) master

1 additional replica(s)

S: ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379

slots: (0 slots) slave

replicates e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9

S: 71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379

slots: (0 slots) slave

replicates 4c12e05e09597e4ca0ae35294894cd6184ccc1df

S: 564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379

slots: (0 slots) slave

replicates 9be691e08fc398c86e19bc0e62e30457fa20e5d8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.149.28:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 192.168.149.22:6379.

[OK] New node added correctly.

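Scale-in

1. The procedure depends on whether a master or a replica is being removed.

Removing a master: 1. migrate the master's slots to other masters (reshard) -> 2. delete the node (del-node) -> 3. delete nodes-6379.conf (so the node does not rejoin the cluster automatically after a machine or service restart)

Removing a replica: 1. delete the node (del-node) -> 2. delete nodes-6379.conf (for the same reason)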
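The master-removal flow can be sketched as a dry run that only prints the commands, so they can be reviewed before anything touches the cluster. The helper name plan_remove_master and its argument order are assumptions for this example; the --cluster-from, --cluster-to, --cluster-slots and --cluster-yes options belong to redis-cli's reshard subcommand. The password is expected in the REDISCLI_AUTH environment variable, which avoids the -a warning.

```shell
#!/bin/bash
# Hypothetical dry-run helper: prints the commands for removing a master.
# Arguments: <any cluster node host:port> <ID of the master to remove>
#            <ID of the master receiving the slots> <number of slots to move>
plan_remove_master() {
    local entry=$1 victim=$2 target=$3 slots=$4
    # Step 1: move every slot off the master that is going away.
    echo "redis-cli --cluster reshard $entry --cluster-from $victim --cluster-to $target --cluster-slots $slots --cluster-yes"
    # Step 2: only an empty master can be removed from the cluster.
    echo "redis-cli --cluster del-node $entry $victim"
}

# Example: move the 5462 slots of 192.168.149.24 to 192.168.149.22, then delete it.
plan_remove_master 192.168.149.22:6379 \
    e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 \
    9be691e08fc398c86e19bc0e62e30457fa20e5d8 \
    5462
```

Once the printed commands have run, nodes-6379.conf still has to be deleted on the removed host, as described in step 3. The replica of the removed master needs separate handling, which this sketch leaves out.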

## del-node <cluster node:port> <ID of the node to delete>

[root@master1 ~]# redis-cli -a 123456 --cluster del-node 192.168.149.22:6379 ff4c14aa1c26653edba376586e49e6ad133de24e

>>> Removing node ff4c14aa1c26653edba376586e49e6ad133de24e from cluster 192.168.149.22:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

## Check the cluster information

[root@master1 ~]# redis-cli -a 123456 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 192.168.149.24:6379@16379 master - 0 1633510877189 11 connected 2016-3861 12768-16383

4c12e05e09597e4ca0ae35294894cd6184ccc1df 192.168.149.23:6379@16379 master - 0 1633510873181 10 connected 0-2015 7478-10922

9be691e08fc398c86e19bc0e62e30457fa20e5d8 192.168.149.22:6379@16379 myself,master - 0 1633510872000 9 connected 3862-7477 10923-12767

ee6313f94d268b03baa54e7868aa20f14ec3f7ca 192.168.149.25:6379@16379 slave e6142fa5af3f5f0ceeacb5d00398cd6c6a3910c9 0 1633510874183 11 connected

71282c9fbf6d1a66f54b7882a0d2c23976765e3d 192.168.149.27:6379@16379 slave 4c12e05e09597e4ca0ae35294894cd6184ccc1df 0 1633510876187 10 connected

564b0ce14ca1ac1d8971e5bc5b97debd95e5f258 192.168.149.26:6379@16379 slave 9be691e08fc398c86e19bc0e62e30457fa20e5d8 0 1633510876000 9 connected

[root@master1 ~]# redis-cli -a 123456 cluster info

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:11

cluster_my_epoch:9

cluster_stats_messages_ping_sent:233317

cluster_stats_messages_pong_sent:262000

cluster_stats_messages_update_sent:5

cluster_stats_messages_sent:495322

cluster_stats_messages_ping_received:228364

cluster_stats_messages_pong_received:237179

cluster_stats_messages_meet_received:6

cluster_stats_messages_update_received:4

cluster_stats_messages_received:465553

## Delete the generated nodes-6379.conf

[root@etcd3 ~]# rm -f /app/redis/data/nodes-6379.conf

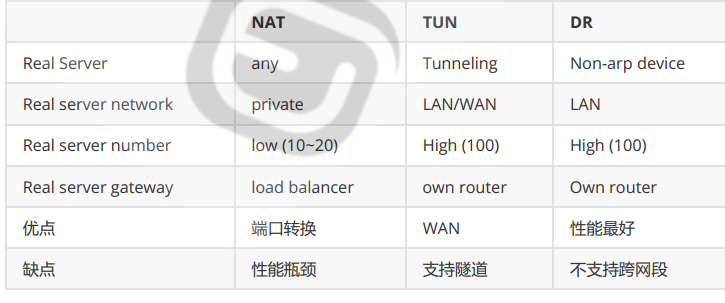

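5. LVS scheduling algorithms summary

There are 12 in total, including the two newer algorithms FO and OVF. The classic ones fall into static (RR, WRR, SH, DH) and dynamic (LC, WLC, SED, NQ, LBLC, LBLCR).

Static

- RR: round robin

- WRR: weighted round robin

- SH: source hashing; gives session stickiness by always sending requests from the same client IP to the RS chosen the first time

- DH: destination hashing; typically used to load-balance forward-proxy caches such as web caches

Dynamic (scheduling based on each RS's current load, its overhead)

- LC: least connections; suited to long-lived connections

- WLC: weighted least connections (the default scheduler)

- SED: shortest expected delay; unlike WLC it ignores inactive connections and counts only active ones

- NQ: never queue; the first round is spread evenly, after that it behaves like SED

- LBLC: locality-based least connections; a dynamic variant of DH, also for forward proxies and web caches

- LBLCR: LBLC with replication; fixes LBLC's uneven load, for web cache scenarios and the like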
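To make the difference between WLC and SED concrete, the sketch below picks a real server using the commonly documented overhead formulas. The helper name lvs_pick and the input format are assumptions for this example, weights are assumed to be greater than zero, and ties go to the first line, which is a simplification of what the kernel scheduler does.

```shell
#!/bin/bash
# Hypothetical helper: pick the real server with the lowest overhead.
# Input lines on stdin: "<name> <active> <inactive> <weight>".
#   wlc: (active * 256 + inactive) / weight
#   sed: (active + 1) * 256 / weight
lvs_pick() {
    awk -v algo="$1" '{
        cost = (algo == "sed") ? ($2 + 1) * 256 / $4 : ($2 * 256 + $3) / $4
        if (NR == 1 || cost < best) { best = cost; pick = $1 }
    }
    END { print pick }'
}

# Two idle servers, rs2 with three times the weight of rs1.
servers='rs1 0 0 1
rs2 0 0 3'
echo "$servers" | lvs_pick wlc   # rs1: every overhead is 0, so the first server wins
echo "$servers" | lvs_pick sed   # rs2: 256/1 versus 256/3, so the heavier server wins
```

With no connections yet, WLC cannot tell the servers apart and may hand the first request to the weakest one; SED's "+1" lets the weight decide from the very first request.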

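6. Implementing the LVS NAT and DR models

LVS has three models: NAT, DR (the common choice), and TUN

NAT (requests and replies both pass through LVS, which makes it a bottleneck; can span network segments)

LVS

- vim /etc/sysctl.conf

- net.ipv4.ip_forward = 1 # NAT mode requires ip_forward; apply with sysctl -p

- ipvsadm -A -t 10.0.0.8:80 -s wlc

- ipvsadm -a -t 10.0.0.8:80 -r 10.0.0.9:8080 -m -w 3

- ipvsadm -a -t 10.0.0.8:80 -r 10.0.0.10:8080 -m

- ipvsadm-save > /etc/sysconfig/ipvsadm ## optional: persist the rules

RS: no LVS-specific settings, but the default gateway must point to the LVS host so that replies return through it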

DR (common, good performance, requires the same network segment)

LVS:

- ipvsadm -A -t 10.0.0.8:80 -s wlc

- ipvsadm -a -t 10.0.0.8:80 -r 10.0.0.9:80 -g -w 10

- ipvsadm -a -t 10.0.0.8:80 -r 10.0.0.10:80 -g

RS:

- echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore ## restrict ARP replies: answer only when the target IP is configured on the receiving interface

- echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

- echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce ## restrict ARP announcements so the VIP is not advertised to the LAN

- echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

lvs-DR mode lab scripts:

## Do not run these scripts on a machine that runs k8s: the stop action deletes rules from the IPVS table, and k8s Services may rely on IPVS for load balancing

--------[lvs_dr.sh]---------

#!/bin/bash

PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin

vip='10.0.0.8'

ifname='lo:1'

mask='255.255.255.255'

port='80'

rs1='10.0.0.9'

rs2='10.0.0.10'

scheduler='wlc'

type='-g'

rpm -q ipvsadm &> /dev/null || yum -y install ipvsadm &> /dev/null

case $1 in

start)

ifconfig $ifname $vip netmask $mask

ipvsadm -A -t ${vip}:${port} -s $scheduler

ipvsadm -a -t ${vip}:${port} -r ${rs1} $type -w 1

ipvsadm -a -t ${vip}:${port} -r ${rs2} $type -w 1

echo "ipvsadm is set completely!"

;;

stop)

ipvsadm -D -t ${vip}:${port}

ifconfig $ifname down

echo "ipvsadm is canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac

-------------[lvs_rs.sh]--------------

#!/bin/bash

vip=10.0.0.8

mask='255.255.255.255'

dev=lo:1

case $1 in

start)

echo 1 >/proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 >/proc/sys/net/ipv4/conf/all/arp_announce

echo 2 >/proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $dev $vip netmask $mask

echo "Rs is Ready"

;;

stop)

ifconfig $dev down

echo 0 >/proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 >/proc/sys/net/ipv4/conf/all/arp_announce

echo 0 >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "Rs is canceled"

;;

*)

echo "Usage: $(basename $0) start | stop"

exit 1

;;

esac
