Contents

- Abstract
- Methodology
- Experiments
  - Datasets
  - Models used in the experiments
  - Evaluation metrics

Paper link

- This paper is related to my research direction, so here is a brief record.

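Abstract

Summary of the abstract:

Scene text recognition (STR) has advanced rapidly with deep neural networks (DNNs), yet DNN models for non-sequential tasks such as classification, segmentation, and retrieval are known to be easily fooled by adversarial examples. STR is a security-sensitive application, but the safety and reliability of STR models that make sequential predictions have received little study. The paper makes the first attempt to attack state-of-the-art DNN-based STR models, proposing an efficient optimization-based method that integrates naturally with both connectionist temporal classification (CTC) and attention-based prediction. Applied to five state-of-the-art STR models in targeted and untargeted modes, it causes a significant performance drop on 7 real-world and 2 synthetic datasets, and it is also tested against the real-world Baidu OCR STR engine.

Original text: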

The research on scene text recognition (STR) has made remarkable progress in recent years with the development of deep neural networks (DNNs). Recent studies on adversarial attack have verified that a DNN model designed for non-sequential tasks (e.g., classification, segmentation and retrieval) can be easily fooled by adversarial examples. Actually, STR is an application highly related to security issues. However, there are few studies considering the safety and reliability of STR models that make sequential prediction. In this paper, we make the first attempt in attacking the state-of-the-art DNN-based STR models. Specifically, we propose a novel and efficient optimization-based method that can be naturally integrated to different sequential prediction schemes, i.e., connectionist temporal classification (CTC) and attention mechanism. We apply our proposed method to five state-of-the-art STR models with both targeted and untargeted attack modes, the comprehensive results on 7 real-world datasets and 2 synthetic datasets consistently show the vulnerability of these STR models with a significant performance drop. Finally, we also test our attack method on a real-world STR engine of Baidu OCR, which demonstrates the practical potentials of our method.

Methodology

Optimization objective:

$$
\begin{array}{l}
\min_{x^{\prime}} \; L\left(x^{\prime}, l^{\prime}\right) + \lambda D\left(x, x^{\prime}\right) \\
\text{s.t. } x^{\prime} = x + \delta, \quad x^{\prime} \in [-1, 1]^{|n|}
\end{array}
$$

- The symbols used in the objective above are:

| Symbol | Description |
|---|---|
| $x \in [-1,1]^{\vert n \vert}$ | input scene text image with $n$ normalized pixels |
| $x^{\prime}$ | adversarial example |
| $\delta$ | perturbation |
| $L(\cdot)$ | attack loss function |
| $D(\cdot)$ | $L_2$ distance metric |
| $\lambda$ | pre-specified hyper-parameter |

- The attack procedure is similar to PGD; the main change is in the attack loss $L(\cdot)$.
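To make the objective concrete, here is a minimal sketch of the optimization loop in Python. It is not the paper's implementation: the two-class linear `toy_attack_loss` is a stand-in for the STR attack loss $L(x^{\prime}, l^{\prime})$, gradients are estimated by finite differences rather than backpropagation, plain gradient descent is assumed as the optimizer, and the names `attack`, `predict`, `weights`, `lam`, and `step` are hypothetical. What it keeps from the objective is the penalty $\lambda D(x, x^{\prime})$ and the clipping of $x^{\prime}$ into $[-1, 1]$.

```python
import math


def logits(x_adv, weights):
    """Scores of a toy linear classifier (one weight row per class)."""
    return [sum(w * p for w, p in zip(row, x_adv)) for row in weights]


def predict(x_adv, weights):
    """Index of the highest-scoring class."""
    scores = logits(x_adv, weights)
    return max(range(len(scores)), key=scores.__getitem__)


def toy_attack_loss(x_adv, target, weights):
    """Stand-in for L(x', l'): cross-entropy toward the target class."""
    scores = logits(x_adv, weights)
    top = max(scores)
    log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
    return log_norm - scores[target]


def l2_distance(x, x_adv):
    """D(x, x'): L2 distance between the clean and perturbed inputs."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_adv)))


def numeric_grad(f, point, eps=1e-5):
    """Central finite-difference gradient (assumption: keeps the sketch stdlib-only)."""
    grad = []
    for i in range(len(point)):
        up = point[:i] + [point[i] + eps] + point[i + 1:]
        down = point[:i] + [point[i] - eps] + point[i + 1:]
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def attack(x, target, weights, lam=0.1, step=0.05, max_iter=200):
    """Minimize L(x', l') + lam * D(x, x') while keeping x' in [-1, 1]."""

    def objective(x_adv):
        return toy_attack_loss(x_adv, target, weights) + lam * l2_distance(x, x_adv)

    x_adv = list(x)
    for iteration in range(1, max_iter + 1):
        grad = numeric_grad(objective, x_adv)
        # Gradient step, then clip every pixel back into the valid range.
        x_adv = [min(1.0, max(-1.0, p - step * g)) for p, g in zip(x_adv, grad)]
        if predict(x_adv, weights) == target:
            return x_adv, iteration  # successful attack
    return x_adv, None  # failed within the iteration budget


# Hypothetical 4-pixel input and 2-class weights, for illustration only.
weights = [[1.0, 0.5, -0.5, 0.2], [-1.0, 0.3, 0.8, -0.4]]
x = [0.5, 0.2, -0.3, 0.1]
x_adv, iterations = attack(x, target=1, weights=weights)
print(predict(x, weights), predict(x_adv, weights), iterations)
```

A larger `lam` keeps $x^{\prime}$ closer to $x$ at the cost of more iterations (or failure); swapping `toy_attack_loss` for a sequence-level loss is where an STR attack would differ from this toy.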

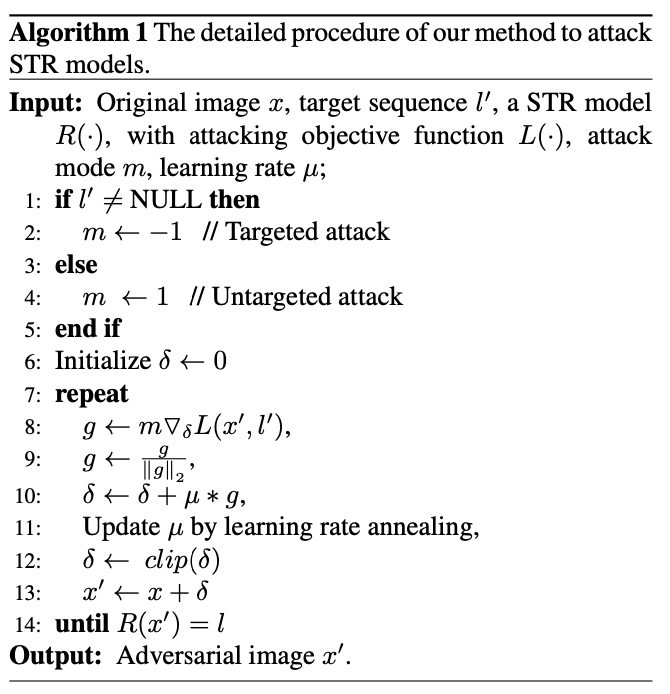

Pseudocode:

- For CTC-based and attention-based models, each combined with the targeted and untargeted settings, the paper proposes four attack objective functions $L(\cdot)$.
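These notes do not reproduce the four objective functions. As background for the CTC-based case, the sketch below computes the standard CTC negative log-likelihood with the forward algorithm, then wraps it in a targeted and an untargeted loss. The two wrappers are an illustrative assumption about how such losses are commonly built, not the paper's exact formulas, and all function names are hypothetical.

```python
import math


def ctc_neg_log_likelihood(probs, label, blank=0):
    """Standard CTC loss: -log p(label | probs), via the forward algorithm.

    probs[t][k] is the per-frame probability of symbol k at time step t;
    label is a list of symbol indices that does not contain `blank`.
    """
    # Interleave blanks around the label: [a, b] -> [blank, a, blank, b, blank].
    ext = [blank]
    for symbol in label:
        ext += [symbol, blank]
    num_steps, num_states = len(probs), len(ext)

    # alpha[s]: total probability of all partial alignments ending in state s.
    alpha = [0.0] * num_states
    alpha[0] = probs[0][ext[0]]
    if num_states > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, num_steps):
        prev = alpha
        alpha = [0.0] * num_states
        for s in range(num_states):
            total = prev[s]
            if s >= 1:
                total += prev[s - 1]
            # Skipping the blank is only allowed between two different symbols.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += prev[s - 2]
            alpha[s] = total * probs[t][ext[s]]

    # Valid alignments end in the last symbol or the trailing blank.
    likelihood = alpha[-1] + (alpha[-2] if num_states > 1 else 0.0)
    return -math.log(likelihood) if likelihood > 0 else math.inf


def targeted_ctc_loss(probs, target_label):
    """Assumed form: minimizing this pulls the output toward target_label."""
    return ctc_neg_log_likelihood(probs, target_label)


def untargeted_ctc_loss(probs, true_label):
    """Assumed form: minimizing this pushes the output away from true_label."""
    return -ctc_neg_log_likelihood(probs, true_label)


# Two frames over the alphabet {0: blank, 1: 'a'}; values are made up.
frame_probs = [[0.4, 0.6], [0.3, 0.7]]
print(targeted_ctc_loss(frame_probs, [1]))
```

For the label `[1]` the valid alignments are `a-`, `-a`, and `aa`, so the likelihood is 0.18 + 0.28 + 0.42 = 0.88. An attention-based counterpart would typically sum a per-step cross-entropy over the decoded characters instead.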

Experiments

Datasets

- Training data: following Baek et al., the MJSynth (MJ) and SynthText (ST) datasets are combined.

- Test data: CUTE80, ICDAR2003, ICDAR2013, ICDAR2015, IIIT5K-Words (IIIT5K), Street View Text (SVT), SVT Perspective (SP).

Models used in the experiments

- CTC-based model: CRNN, Rosetta, STAR-Net.

- Attention-based model: RARE, TRBA.

Evaluation metrics

- success rate (SR), the ratio of successful generation of adversarial examples under the perturbation bound within the limited number of iterations;

- the averaged L2 distance (Dist) between the input images and the generated adversarial examples;

- average number of iterations (Iter), required for a successful attack (excluding failed attacks).

- My personal impression is that this is essentially the PGD attack transferred to STR.
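The three metrics above can be computed from per-image attack logs roughly as follows. This is a sketch under assumptions: the `AttackRecord` fields and the `summarize` function are hypothetical names, and Dist is averaged over successful attacks only, which is one reading of "generated adversarial examples".

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AttackRecord:
    """Outcome of attacking one image (hypothetical log format)."""
    success: bool      # adversarial example found within the iteration limit
    l2_dist: float     # L2 distance between the input and the final x'
    iterations: int    # iterations actually run


def summarize(records: List[AttackRecord]) -> Dict[str, Optional[float]]:
    """Return SR, Dist, and Iter for a batch of attack records."""
    successes = [r for r in records if r.success]
    if not records:
        return {"SR": None, "Dist": None, "Iter": None}
    if not successes:
        return {"SR": 0.0, "Dist": None, "Iter": None}
    return {
        # SR: fraction of inputs for which the attack succeeded.
        "SR": len(successes) / len(records),
        # Dist: mean L2 distance over successful adversarial examples (assumption).
        "Dist": sum(r.l2_dist for r in successes) / len(successes),
        # Iter: mean iteration count, excluding failed attacks.
        "Iter": sum(r.iterations for r in successes) / len(successes),
    }


# Made-up records, for illustration only.
demo = [
    AttackRecord(True, 1.0, 10),
    AttackRecord(True, 3.0, 30),
    AttackRecord(False, 5.0, 100),
    AttackRecord(True, 2.0, 20),
]
print(summarize(demo))
```

Excluding failures from Iter matters: a failed attack always runs to the iteration limit, so including it would mostly measure the limit rather than the attack's speed.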
