This article covers face detection, face localization, face alignment, MTCNN, and face recognition, that is, measuring how similar or different two faces are with softmax, Triplet Loss, Center Loss, and ArcFace.

日萌社

Artificial Intelligence (AI): Keras, PyTorch, MXNet, TensorFlow, PaddlePaddle deep learning in practice (updated irregularly)

# ArcFace

import math

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.nn import Parameter


class ArcMarginProduct(nn.Module):
    r"""Implementation of the large-margin arc distance.

    Args:
        in_features: size of each input sample
        out_features: size of each output sample
        s: norm of input feature
        m: margin

    Computes cos(theta + m).
    """

    def __init__(self, in_features, out_features, s=30.0, m=0.50, easy_margin=False):
        super(ArcMarginProduct, self).__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.s = s
        self.m = m
        # Initialize the weights
        self.weight = Parameter(torch.FloatTensor(out_features, in_features))
        nn.init.xavier_uniform_(self.weight)

        self.easy_margin = easy_margin
        self.cos_m = math.cos(m)
        self.sin_m = math.sin(m)
        self.th = math.cos(math.pi - m)
        self.mm = math.sin(math.pi - m) * m

    def forward(self, input, label):
        # cos(theta) & phi(theta)
        # torch.nn.functional.linear(input, weight, bias=None)
        # y=x*W^T+b
        cosine = F.linear(F.normalize(input), F.normalize(self.weight))
        # clamp guards against tiny negative values caused by rounding
        sine = torch.sqrt((1.0 - torch.pow(cosine, 2)).clamp(0, 1))
        # cos(a+b)=cos(a)*cos(b)-sin(a)*sin(b)
        phi = cosine * self.cos_m - sine * self.sin_m
        if self.easy_margin:
            # torch.where(condition, x, y) → Tensor
            # condition (BoolTensor) – When True, yield x, otherwise yield y
            # x (Tensor) – values selected at indices where condition is True
            # y (Tensor) – values selected at indices where condition is False
            # return:
            # A tensor of shape equal to the broadcasted shape of condition, x, y
            # cosine>0 means two class is similar, thus use the phi which make it
            phi = torch.where(cosine > 0, phi, cosine)
        else:
            phi = torch.where(cosine > self.th, phi, cosine - self.mm)
        # convert label to one-hot
        # one_hot = torch.zeros(cosine.size(), requires_grad=True, device='cuda')
        # Write cos(theta + m) into the target-class positions of the tensor
        # (allocated on the same device as cosine instead of a hard-coded 'cuda')
        one_hot = torch.zeros_like(cosine)
        # scatter_(dim, index, src)
        one_hot.scatter_(1, label.view(-1, 1).long(), 1)
        # same effect as torch.where: out_i = x_i if condition_i else y_i
        output = (one_hot * phi) + ((1.0 - one_hot) * cosine)
        output *= self.s
        return output
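To make the margin logic in `forward` concrete, here is a minimal scalar sketch in plain Python. It assumes the same defaults as above (`s=30.0`, `m=0.50`) and mirrors the arithmetic for a single target-class cosine only. The helper name `arc_margin_logit` is hypothetical and is not part of the original code.

```python
import math


def arc_margin_logit(cosine, s=30.0, m=0.50, easy_margin=False):
    """Scalar version of the target-class logit in ArcMarginProduct.forward.

    Hypothetical helper for illustration: it handles one cosine value
    instead of a batch tensor.
    """
    sine = math.sqrt(max(0.0, 1.0 - cosine * cosine))
    # cos(theta + m) = cos(theta) * cos(m) - sin(theta) * sin(m)
    phi = cosine * math.cos(m) - sine * math.sin(m)
    if easy_margin:
        # apply the margin only when theta is below 90 degrees
        phi = phi if cosine > 0 else cosine
    else:
        th = math.cos(math.pi - m)       # below this, theta + m would pass pi
        mm = math.sin(math.pi - m) * m   # penalty subtracted in that region
        phi = phi if cosine > th else cosine - mm
    return s * phi


# theta = 60 degrees, so cos(theta) = 0.5
with_margin = arc_margin_logit(0.5)
without_margin = 30.0 * 0.5
print(with_margin, without_margin)
```

The target-class logit with the margin is much smaller than the plain scaled cosine, so the network has to pull the embedding closer to its class weight to reach the same score.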

from PIL import Image

from detector import detect_faces

from visualization_utils import show_results

img = Image.open('some_img.jpg') # modify the image path to yours

bounding_boxes, landmarks = detect_faces(img) # detect bboxes and landmarks for all faces in the image

show_results(img, bounding_boxes, landmarks) # visualize the results

from PIL import Image

from face_dect_recong.align.detector import detect_faces

from face_dect_recong.align.visualization_utils import show_results

%matplotlib inline

img = Image.open('./data/other_my_face/my/my/myf112.jpg')

bounding_boxes, landmarks = detect_faces(img) # detect bboxes and landmarks for all faces in the image

show_results(img, bounding_boxes, landmarks) # visualize the results

from PIL import Image

from face_dect_recong.align.detector import detect_faces

from face_dect_recong.align.visualization_utils import show_results

%matplotlib inline

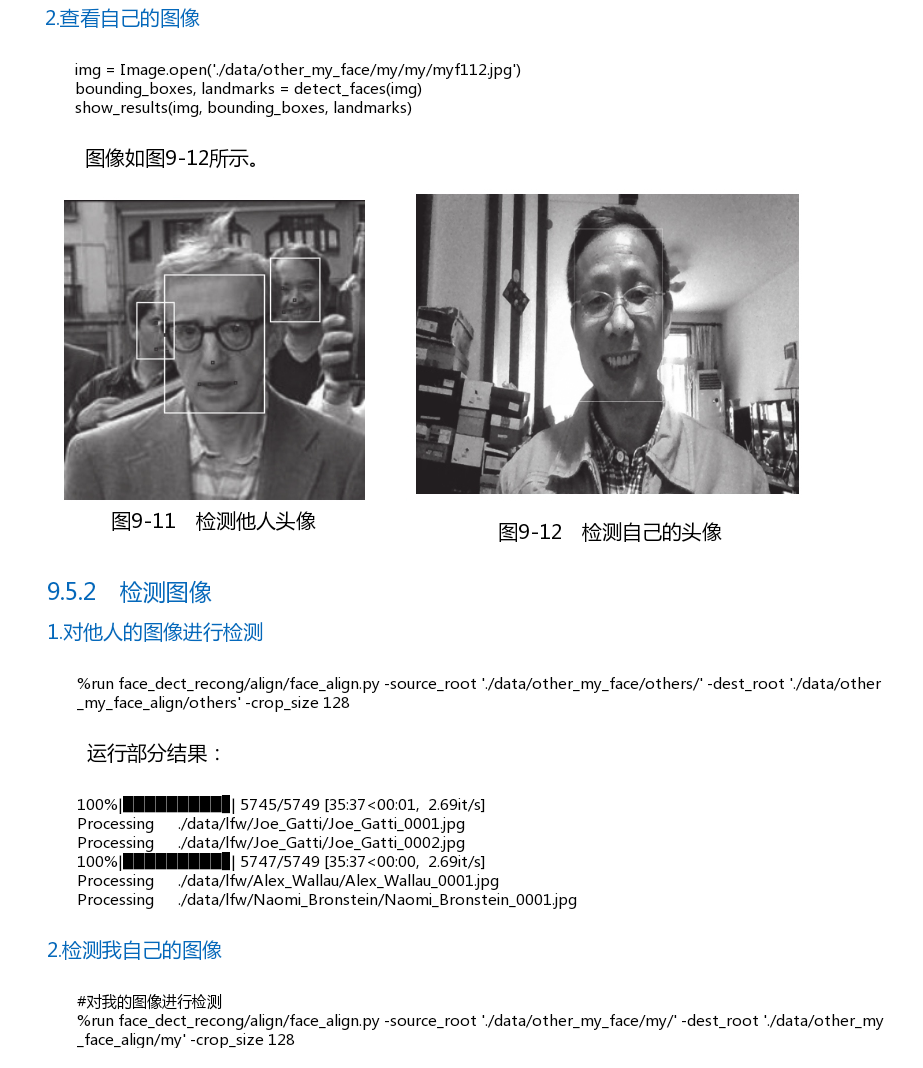

img = Image.open('./data/other_my_face/others/Woody_Allen/Woody_Allen_0001.jpg')

bounding_boxes, landmarks = detect_faces(img)

show_results(img, bounding_boxes, landmarks)

#对其他人的图像进行检测

%run face_dect_recong/align/face_align.py -source_root './data/other_my_face/others/' -dest_root './data/other_my_face_align/others' -crop_size 128

#对我的图像进行检测

%run face_dect_recong/align/face_align.py -source_root './data/other_my_face/my/' -dest_root './data/other_my_face_align/others/' -crop_size 128

import matplotlib.pyplot as plt

from matplotlib.image import imread

%matplotlib inline

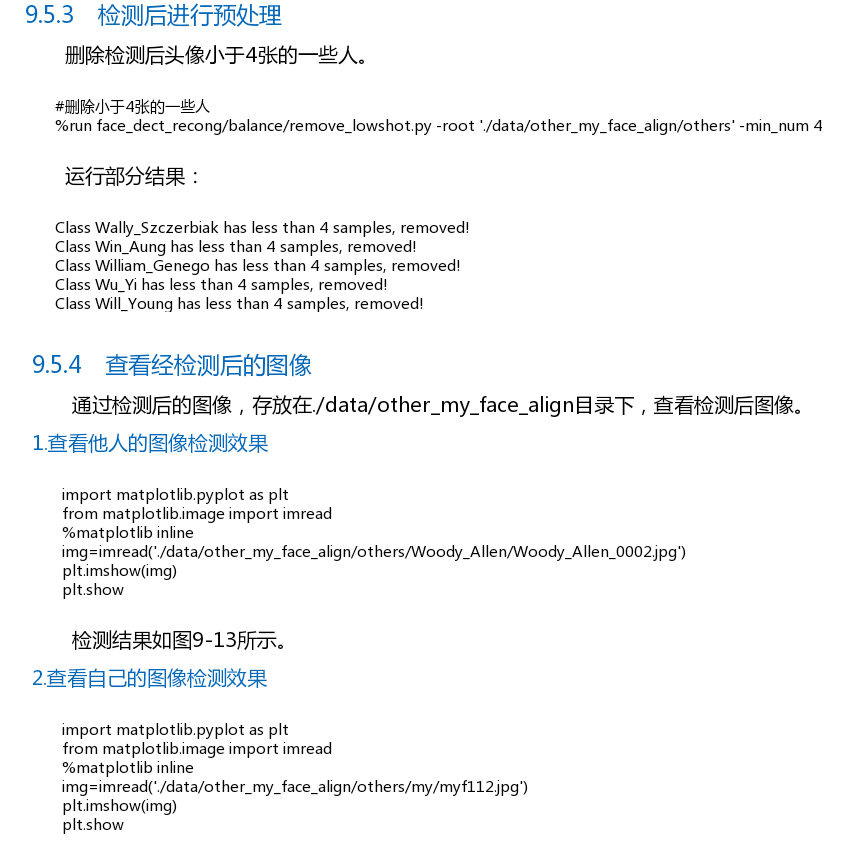

img=imread('./data/other_my_face_align/others/my/myf112.jpg')

plt.imshow(img)

plt.show

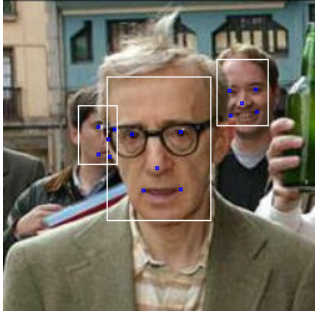

img=imread('./data/other_my_face_align/others/Woody_Allen/Woody_Allen_0002.jpg')

plt.imshow(img)

plt.show

#删除小于4张的一些人

%run face_dect_recong/balance/remove_lowshot.py -root './data/other_my_face_align/others' -min_num 4

img=imread('./data/dataset/lfw/lfw-align-128/Zico/Zico_0001.jpg')

plt.imshow(img)

plt.show

class Config(object):
    env = 'default'
    backbone = 'resnet18'
    classify = 'softmax'
    metric = 'arc_margin'
    easy_margin = False
    # whether to use Squeeze-and-Excitation (SE) blocks
    use_se = False
    loss = 'focal_loss'

    display = False
    finetune = False

    lfw_root = '/home/wumg/data/data/other_my_face_align/others'
    lfw_test_list = '/home/wumg/data/data/other_my_face_align/others_test_pair.txt'
    test_model_path = '/home/wumg/data/data/dataset/lfw/resnet18_110.pth'
    save_interval = 10

    train_batch_size = 16  # batch size
    test_batch_size = 60

    input_shape = (1, 128, 128)

    optimizer = 'sgd'

    use_gpu = True  # use GPU or not
    gpu_id = '0, 1'
    num_workers = 4  # how many workers for loading data

    max_epoch = 2
    lr = 1e-1  # initial learning rate
    lr_step = 10
    lr_decay = 0.95  # when val_loss increase, lr = lr*lr_decay
    weight_decay = 5e-4
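The Config above does not show how `lr`, `lr_step`, and `lr_decay` interact, and the training loop is not included here. One common reading, which is only an assumption, is a step schedule that multiplies the learning rate by `lr_decay` once every `lr_step` epochs (the behaviour of PyTorch's `StepLR`). The comment on `lr_decay` hints at a validation-loss rule instead, so treat this as a sketch of one possibility. The function name `lr_at_epoch` is hypothetical.

```python
def lr_at_epoch(epoch, lr=1e-1, lr_step=10, lr_decay=0.95):
    """Step schedule: multiply lr by lr_decay once every lr_step epochs.

    Assumed interpretation of the Config fields, not taken from the
    original training code.
    """
    return lr * (lr_decay ** (epoch // lr_step))


for epoch in (0, 9, 10, 25):
    print(epoch, lr_at_epoch(epoch))
```

Under this reading, `max_epoch = 2` ends training before the first decay step, so the rate would stay at its initial value for the whole run.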

from __future__ import print_function
import os
import cv2
from models import *
import torch
import torch.nn as nn
import numpy as np
import time
from torch.nn import DataParallel
import torch.utils.model_zoo as model_zoo
import torch.nn.functional as F

model_urls = {
    'resnet18': 'https://download.pytorch.org/models/resnet18-5c106cde.pth',
    'resnet34': 'https://download.pytorch.org/models/resnet34-333f7ec4.pth'
}

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


def conv3x3(in_planes, out_planes, stride=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=1, bias=False)


class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = nn.BatchNorm2d(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out

class IRBlock(nn.Module):
    expansion = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None, use_se=True):
        super(IRBlock, self).__init__()
        self.bn0 = nn.BatchNorm2d(inplanes)
        self.conv1 = conv3x3(inplanes, inplanes)
        self.bn1 = nn.BatchNorm2d(inplanes)
        self.prelu = nn.PReLU()
        self.conv2 = conv3x3(inplanes, planes, stride)
        self.bn2 = nn.BatchNorm2d(planes)
        self.downsample = downsample
        self.stride = stride
        self.use_se = use_se
        if self.use_se:
            self.se = SEBlock(planes)

    def forward(self, x):
        residual = x
        out = self.bn0(x)
        out = self.conv1(out)
        out = self.bn1(out)
        out = self.prelu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        if self.use_se:
            out = self.se(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.prelu(out)

        return out

class ResNetFace(nn.Module):
    def __init__(self, block, layers, use_se=True):
        self.inplanes = 64
        self.use_se = use_se
        super(ResNetFace, self).__init__()
        self.conv1 = nn.Conv2d(1, 64, kernel_size=3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.prelu = nn.PReLU()
        self.maxpool = nn.MaxPool2d(kernel_size=2, stride=2)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.bn4 = nn.BatchNorm2d(512)
        self.dropout = nn.Dropout()
        self.fc5 = nn.Linear(512 * 8 * 8, 512)
        self.bn5 = nn.BatchNorm1d(512)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.xavier_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d) or isinstance(m, nn.BatchNorm1d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.xavier_normal_(m.weight)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )
        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample, use_se=self.use_se))
        self.inplanes = planes
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes, use_se=self.use_se))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.prelu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.bn4(x)
        x = self.dropout(x)
        x = x.view(x.size(0), -1)
        x = self.fc5(x)
        x = self.bn5(x)

        return x
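The `512 * 8 * 8` input size of `fc5` follows from the `(1, 128, 128)` input shape set in `Config`: the max-pool and the three stride-2 stages each halve the resolution. The sketch below walks through that arithmetic with the standard convolution output-size formula. The helper `conv_out` is hypothetical, and only the strided 3x3 convolution of each stage is modelled, since the other convolutions keep the size unchanged.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a conv or pooling layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


size = 128                                             # input_shape = (1, 128, 128)
size = conv_out(size, kernel=3, stride=1, padding=1)   # conv1 keeps 128
size = conv_out(size, kernel=2, stride=2)              # maxpool halves to 64
# layer1 uses stride 1; layer2, layer3 and layer4 use stride 2.
for stride in (1, 2, 2, 2):
    size = conv_out(size, kernel=3, stride=stride, padding=1)

flat_features = 512 * size * size
print(size, flat_features)
```

A different crop size changes this number, so `fc5` would have to be resized if the aligned faces were not 128x128.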

class ResNet(nn.Module):

    def __init__(self, block, layers):
        self.inplanes = 64
        super(ResNet, self).__init__()
        # self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
        #                        bias=False)
        self.conv1 = nn.Conv2d(1, 64, kernel_size=3, stride=1, padding=1,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        # self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0], stride=2)
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.fc5 = nn.Linear(512 * 8 * 8, 512)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        # x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        # x = nn.AvgPool2d(kernel_size=x.size()[2:])(x)
        # x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc5(x)

        return x

def resnet18(pretrained=False, **kwargs):
    """Constructs a ResNet-18 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = ResNet(BasicBlock, [2, 2, 2, 2], **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['resnet18']))
    return model


def resnet_face18(use_se=True, **kwargs):
    model = ResNetFace(IRBlock, [2, 2, 2, 2], use_se=use_se, **kwargs)
    return model

def get_lfw_list(pair_list):
    with open(pair_list, 'r') as fd:
        pairs = fd.readlines()
    data_list = []
    for pair in pairs:
        splits = pair.split()

        if splits[0] not in data_list:
            data_list.append(splits[0])

        if splits[1] not in data_list:
            data_list.append(splits[1])
    return data_list


def load_image(img_path):
    image = cv2.imread(img_path, 0)  # 0 = read as grayscale
    if image is None:
        return None
    # stack the image with its horizontally flipped copy
    image = np.dstack((image, np.fliplr(image)))
    image = image.transpose((2, 0, 1))
    image = image[:, np.newaxis, :, :]
    image = image.astype(np.float32, copy=False)
    # scale pixel values from [0, 255] to [-1, 1]
    image -= 127.5
    image /= 127.5
    return image


def get_featurs(model, test_list, batch_size=10):
    images = None
    features = None
    cnt = 0
    for i, img_path in enumerate(test_list):
        image = load_image(img_path)
        if image is None:
            # fail early: a skipped image would misalign features and test_list
            raise IOError('read {} error'.format(img_path))

        if images is None:
            images = image
        else:
            images = np.concatenate((images, image), axis=0)

        if images.shape[0] % batch_size == 0 or i == len(test_list) - 1:
            cnt += 1

            data = torch.from_numpy(images)
            data = data.to(device)
            with torch.no_grad():  # inference only, no gradients needed
                output = model(data)
            output = output.cpu().numpy()

            # even rows: original images, odd rows: flipped copies
            fe_1 = output[::2]
            fe_2 = output[1::2]
            feature = np.hstack((fe_1, fe_2))
            # print(feature.shape)

            if features is None:
                features = feature
            else:
                features = np.vstack((features, feature))

            images = None

    return features, cnt
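`load_image` stacks every image with its horizontal mirror, so the model output holds two rows per image: the original at even indices and the mirrored copy at odd indices. `get_featurs` then joins the two rows side by side. Here is a toy sketch of that slicing with plain lists; the 3-value feature rows are made up for illustration, whereas `ResNetFace` produces 512 values per row.

```python
# Rows alternate: image A, mirrored A, image B, mirrored B.
output = [
    [1, 2, 3],     # image A
    [10, 20, 30],  # image A, mirrored
    [4, 5, 6],     # image B
    [40, 50, 60],  # image B, mirrored
]

fe_1 = output[::2]   # features of the original images
fe_2 = output[1::2]  # features of the mirrored images

# List equivalent of np.hstack((fe_1, fe_2)): one concatenated row per image
features = [a + b for a, b in zip(fe_1, fe_2)]
print(features)
```

With a 512-dimensional model output, each image therefore ends up with a 1024-dimensional descriptor.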

def load_model(model, model_path):
    model_dict = model.state_dict()
    # map_location lets a GPU-trained checkpoint load on a CPU-only machine
    pretrained_dict = torch.load(model_path, map_location=device)
    # keep only the entries whose names exist in the current model
    pretrained_dict = {k: v for k, v in pretrained_dict.items() if k in model_dict}
    model_dict.update(pretrained_dict)
    model.load_state_dict(model_dict)


def get_feature_dict(test_list, features):
    fe_dict = {}
    for i, each in enumerate(test_list):
        # key = each.split('/')[1]
        fe_dict[each] = features[i]
    return fe_dict


def cosin_metric(x1, x2):
    # cosine similarity of two feature vectors
    return np.dot(x1, x2) / (np.linalg.norm(x1) * np.linalg.norm(x2))


def cal_accuracy(y_score, y_true):
    y_score = np.asarray(y_score)
    y_true = np.asarray(y_true)
    best_acc = 0
    best_th = 0
    # try every observed score as the decision threshold
    for i in range(len(y_score)):
        th = y_score[i]
        y_test = (y_score >= th)
        acc = np.mean((y_test == y_true).astype(int))
        if acc > best_acc:
            best_acc = acc
            best_th = th

    return (best_acc, best_th)
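`cal_accuracy` picks the threshold by brute force: every similarity score is tried as the cut-off, and the one that classifies the most pairs correctly wins. The sketch below reproduces that sweep with plain lists on a handful of invented scores. The name `best_threshold` and the toy data are illustrative only.

```python
def best_threshold(scores, labels):
    """List-based mirror of cal_accuracy: try every score as the threshold."""
    best_acc, best_th = 0.0, 0.0
    for th in scores:
        predictions = [score >= th for score in scores]
        correct = sum(p == bool(y) for p, y in zip(predictions, labels))
        acc = correct / len(scores)
        if acc > best_acc:
            best_acc, best_th = acc, th
    return best_acc, best_th


# Toy similarity scores; label 1 = same person, 0 = different people
scores = [0.82, 0.75, 0.40, 0.31, 0.66]
labels = [1, 1, 0, 0, 0]
acc, th = best_threshold(scores, labels)
print(acc, th)
```

Because the threshold is chosen on the same pairs it is scored on, the reported accuracy is an optimistic estimate; a held-out set of pairs would give a fairer number.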

def test_performance(fe_dict, pair_list):
    with open(pair_list, 'r') as fd:
        pairs = fd.readlines()

    sims = []
    labels = []
    for pair in pairs:
        splits = pair.split()
        fe_1 = fe_dict[splits[0]]
        fe_2 = fe_dict[splits[1]]
        label = int(splits[2])
        sim = cosin_metric(fe_1, fe_2)

        sims.append(sim)
        labels.append(label)

    acc, th = cal_accuracy(sims, labels)
    return acc, th
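Both `get_lfw_list` and `test_performance` read the pair file as whitespace-separated lines of the form `path_a path_b label`, as implied by `splits[0]`, `splits[1]`, and `int(splits[2])`. The sketch below parses such a file from an in-memory string. The file contents are invented for illustration and are not taken from the real `others_test_pair.txt`.

```python
import io

# Hypothetical pair file: two relative image paths and a 0/1 label per line
pair_file = io.StringIO(
    "my/myf112.jpg my/myf241.jpg 1\n"
    "my/myf112.jpg Woody_Allen/Woody_Allen_0001.jpg 0\n"
)

pairs = []
unique_images = []
for line in pair_file:
    path_a, path_b, label = line.split()
    pairs.append((path_a, path_b, int(label)))
    for path in (path_a, path_b):
        if path not in unique_images:  # same de-duplication as get_lfw_list
            unique_images.append(path)

print(unique_images)
print(pairs)
```

Each unique path is embedded once, and the pairs are then scored by looking the two embeddings up by path.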

def lfw_test(model, img_paths, identity_list, compair_list, batch_size):
    s = time.time()
    features, cnt = get_featurs(model, img_paths, batch_size=batch_size)
    #print(features.shape)
    t = time.time() - s
    #print('total time is {}, average time is {}'.format(t, t / cnt))
    fe_dict = get_feature_dict(identity_list, features)
    acc, th = test_performance(fe_dict, compair_list)
    print('accuracy: ', acc, 'threshold: ', th)
    return acc


opt = Config()
model = resnet_face18(opt.use_se)
# wrap the model for multi-GPU data parallelism
model = DataParallel(model)
# load the pretrained weights (map_location keeps this working without a GPU)
model.load_state_dict(torch.load(opt.test_model_path, map_location=device))
model.to(device)

identity_list = get_lfw_list(opt.lfw_test_list)
img_paths = [os.path.join(opt.lfw_root, each) for each in identity_list]

model.eval()
lfw_test(model, img_paths, identity_list, opt.lfw_test_list, opt.test_batch_size)

#img_path='/home/wumg/data/data/other_my_face_align/others/Wen_Jiabao/Wen_Jiabao_0002.jpg'
img_path='/home/wumg/data/data/other_my_face_align/others/my/myf241.jpg'
#img_path='/home/wumg/data/data/dataset/lfw/lfw-align-128/Wen_Jiabao/Wen_Jiabao_0002.jpg'
image = cv2.imread(img_path, 0)
if image is None:
    # cv2.imread returns None when the file cannot be read
    raise IOError('failed to read {}'.format(img_path))
image = np.dstack((image, np.fliplr(image)))
image = image.transpose((2, 0, 1))
image = image[:, np.newaxis, :, :]
image = image.astype(np.float32, copy=False)
image -= 127.5
image /= 127.5
image.shape  # (2, 1, H, W): the original image and its mirrored copy



def lfw_test(model, img_paths, identity_list, compair_list, batch_size):

s = time.time()

features, cnt = get_featurs(model, img_paths, batch_size=batch_size)

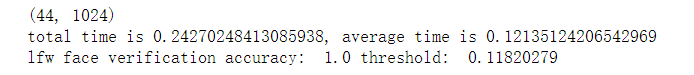

print(features.shape)

t = time.time() - s

print('total time is {}, average time is {}'.format(t, t / cnt))

fe_dict = get_feature_dict(identity_list, features)

acc, th = test_performance(fe_dict, compair_list)

print('lfw face verification accuracy: ', acc, 'threshold: ', th)

return acc

if __name__ == '__main__':

opt = Config()

if opt.backbone == 'resnet18':

model = resnet_face18(opt.use_se)

elif opt.backbone == 'resnet34':

model = resnet34()

elif opt.backbone == 'resnet50':

model = resnet50()

model = DataParallel(model)

# load_model(model, opt.test_model_path)

model.load_state_dict(torch.load(opt.test_model_path))

model.to(torch.device("cuda"))

identity_list = get_lfw_list(opt.lfw_test_list)

img_paths = [os.path.join(opt.lfw_root, each) for each in identity_list]

model.eval()

lfw_test(model, img_paths, identity_list, opt.lfw_test_list, opt.test_batch_size)
