Apache Sqoop

传统的应用程序管理系统,即应用程序与使用RDBMS的关系数据库的交互,是产生大数据的来源之一。这样的大数据由RDBMS生成,存储在关系数据库结构中的关系数据库服务器中。

当Hadoop生态系统中的MapReduce、Hive、HBase、Cassandra、Pig等大数据存储和分析工具出现时,它们需要一个工具来与关系数据库服务器交互,以便导入和导出驻留在其中的大数据。

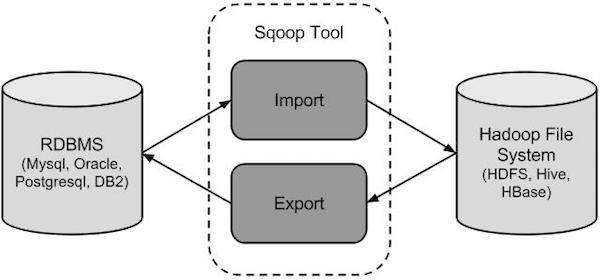

此时,Sqoop在Hadoop生态系统中占有一席之地,它提供关系数据库服务器和Hadoop的HDFS之间可行的交互。

Sqoop − “SQL to Hadoop 和 Hadoop to SQL”

Sqoop是一个用于在Hadoop和关系数据库服务器之间传输数据的工具。用于从MySQL、Oracle等关系数据库导入数据到Hadoop HDFS,从Hadoop文件系统导出数据到关系数据库。

[工作机制]

[Sqoop 导入]

导入工具将各个表从RDBMS导入到HDFS。表中的每一行都被视为HDFS中的一条记录。所有记录都存储为文本文件中的文本数据或Avro和序列文件中的二进制数据。

[Sqoop 导出]

导出工具将一组文件从HDFS导出到RDBMS。作为输入提供给Sqoop的文件包含记录,这些记录被称为表中的行。它们被读取和解析为一组记录,并使用用户指定的分隔符分隔。

Installation

下载Sqoop

tar

环境变量

配置 sqoop-env.sh

[root@master /opt]#cd sqoop/conf/ [root@master /opt/sqoop/conf]#cp sqoop-env-template.sh sqoop-env.sh#Set path to where bin/hadoop is available export HADOOP_COMMON_HOME=/opt/hadoop #Set path to where hadoop-*-core.jar is available export HADOOP_MAPRED_HOME=/opt/hadoop #set the path to where bin/hbase is available #export HBASE_HOME=/opt/hbase #Set the path to where bin/hive is available #export HIVE_HOME=/opt/hive #Set the path for where zookeper config dir is #export ZOOCFGDIR=/opt/zookeeper

添加mysql-connection-java-xxx-bin.jar

测试

[root@master /opt/sqoop/conf]#sqoop-version Warning: /opt/sqoop/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /opt/sqoop/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. 20/06/24 09:20:11 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Sqoop 1.4.7 git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8 Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

Run

[Import]

# 1. Importing a Table

[root@master /opt/sqoop/conf]#sqoop import --connect jdbc:mysql://192.168.132.1/dbms_test --username root --password 123456 --table department --m 1

# 注:--m 就是 --num-mappers

------ result ------

[root@slave5 /root]#hdfs dfs -cat /user/root/department/part-m-*

1,Los Angeles Lakers,manager,2020-03-03

2,Houston Rockets ,manager,2020-03-05

# 2. Importing into Target Directory

[root@master /opt/sqoop/conf]#sqoop import --connect jdbc:mysql://192.168.132.1/dbms_test --username root --password 123456 --table department --m 1 --target-dir /user/root/queryresult

------ logger ------

Warning: /opt/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

20/06/24 09:52:24 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

20/06/24 09:52:24 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

20/06/24 09:52:25 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

20/06/24 09:52:25 INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hbase-1.4.13/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Wed Jun 24 09:52:25 PDT 2020 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

20/06/24 09:52:25 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `department` AS t LIMIT 1

20/06/24 09:52:25 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `department` AS t LIMIT 1

20/06/24 09:52:25 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop

Note: /tmp/sqoop-root/compile/fcffef5112ac053d3ddb3ad259850d76/department.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

20/06/24 09:52:27 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/fcffef5112ac053d3ddb3ad259850d76/department.jar

20/06/24 09:52:27 WARN manager.MySQLManager: It looks like you are importing from mysql.

20/06/24 09:52:27 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

20/06/24 09:52:27 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

20/06/24 09:52:27 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

20/06/24 09:52:27 INFO mapreduce.ImportJobBase: Beginning import of department

20/06/24 09:52:27 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

20/06/24 09:52:38 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

20/06/24 09:52:38 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

Wed Jun 24 09:52:41 PDT 2020 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

20/06/24 09:52:41 INFO db.DBInputFormat: Using read commited transaction isolation

20/06/24 09:52:42 INFO mapreduce.JobSubmitter: number of splits:1

20/06/24 09:52:42 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1593016932618_0002

20/06/24 09:52:43 INFO impl.YarnClientImpl: Submitted application application_1593016932618_0002

20/06/24 09:52:43 INFO mapreduce.Job: The url to track the job: http://slave5:8088/proxy/application_1593016932618_0002/

20/06/24 09:52:43 INFO mapreduce.Job: Running job: job_1593016932618_0002

20/06/24 09:52:51 INFO mapreduce.Job: Job job_1593016932618_0002 running in uber mode : false

20/06/24 09:52:51 INFO mapreduce.Job: map 0% reduce 0%

20/06/24 09:52:59 INFO mapreduce.Job: map 100% reduce 0%

20/06/24 09:52:59 INFO mapreduce.Job: Job job_1593016932618_0002 completed successfully

20/06/24 09:52:59 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=139605

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=79

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=5167

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=5167

Total vcore-milliseconds taken by all map tasks=5167

Total megabyte-milliseconds taken by all map tasks=5291008

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=59

CPU time spent (ms)=720

Physical memory (bytes) snapshot=109760512

Virtual memory (bytes) snapshot=2104303616

Total committed heap usage (bytes)=30474240

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=79

20/06/24 09:52:59 INFO mapreduce.ImportJobBase: Transferred 79 bytes in 21.64 seconds (3.6506 bytes/sec)

20/06/24 09:52:59 INFO mapreduce.ImportJobBase: Retrieved 2 records.

------ result ------

[root@slave5 /root]#hdfs dfs -cat /user/root/queryresult/part-m-*

1,Los Angeles Lakers,manager,2020-03-03

2,Houston Rockets ,manager,2020-03-05

# 3. Import Subset of Table Data

[root@master /opt/sqoop/conf]#sqoop import --connect jdbc:mysql://192.168.132.1/dbms_test --username root --password 123456 --table department --m 1 --target-dir /user/root/whereresult --where "mgrstartdate = '2020-03-03'"

------ result ------

[root@slave5 /root]#hdfs dfs -cat /user/root/whereresult/part-m-*

1,Los Angeles Lakers,manager,2020-03-03

# 4. Import All Tables

[root@master /opt/sqoop/conf]#sqoop import-all-tables --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456

------ result ------

[root@slave5 /root]#hdfs dfs -ls /user/root

Found 5 items

drwxr-xr-x - root supergroup 0 2020-06-24 10:57 /user/root/classes

drwxr-xr-x - root supergroup 0 2020-06-24 10:58 /user/root/players

drwxr-xr-x - root supergroup 0 2020-06-24 09:52 /user/root/queryresult

drwxr-xr-x - root supergroup 0 2020-06-24 10:58 /user/root/student

drwxr-xr-x - root supergroup 0 2020-06-24 10:01 /user/root/whereresult

[root@slave5 /root]#hdfs dfs -cat /user/root/players/part-m-*

1,player_1

2,player_2

3,player_3

[Export]

[root@master /opt/sqoop/conf]#sqoop import --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --target-dir /user/root/emp_data --m 1

[root@master /opt/sqoop/conf]#sqoop export --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --export-dir /user/root/emp_data

------ result ------

mysql> select * from employee;

+------+----------+---------+--------+------+

| id | name | deg | salary | dept |

+------+----------+---------+--------+------+

| 1201 | gopal | manager | 50000 | TP |

| 1202 | manisha | preader | 50000 | TP |

| 1203 | kalil | php dev | 30000 | AC |

| 1204 | prasanth | php dev | 30000 | AC |

| 1205 | kranthi | admin | 20000 | TP |

| 1206 | satish p | grp des | 20000 | GR |

+------+----------+---------+--------+------+

6 rows in set (0.00 sec)

[Job]

# Create Job (--create)

[root@master /opt/sqoop/conf]#sqoop job --create myjob -- import --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --table employee --m 1 --target-dir /user/root/emp_data

# Verify Job (--list)

[root@master /opt/sqoop/conf]#sqoop job --list

------ result ------

Available jobs:

myjob

# Inspect Job (--show)

[root@master /opt/sqoop/conf]#sqoop job --show myjob

Enter password:

------ result ------

Job: myjob

Tool: import

Options:

----------------------------

verbose = false

hcatalog.drop.and.create.table = false

db.connect.string = jdbc:mysql://192.168.132.1/db1?useSSL=true

codegen.output.delimiters.escape = 0

codegen.output.delimiters.enclose.required = false

codegen.input.delimiters.field = 0

mainframe.input.dataset.type = p

split.limit = null

hbase.create.table = false

db.require.password = true

skip.dist.cache = false

hdfs.append.dir = false

db.table = employee

codegen.input.delimiters.escape = 0

accumulo.create.table = false

import.fetch.size = null

codegen.input.delimiters.enclose.required = false

db.username = root

reset.onemapper = false

codegen.output.delimiters.record = 10

import.max.inline.lob.size = 16777216

sqoop.throwOnError = false

hbase.bulk.load.enabled = false

hcatalog.create.table = false

db.clear.staging.table = false

codegen.input.delimiters.record = 0

enable.compression = false

hive.overwrite.table = false

hive.import = false

codegen.input.delimiters.enclose = 0

accumulo.batch.size = 10240000

hive.drop.delims = false

customtool.options.jsonmap = {}

codegen.output.delimiters.enclose = 0

hdfs.delete-target.dir = false

codegen.output.dir = .

codegen.auto.compile.dir = true

relaxed.isolation = false

mapreduce.num.mappers = 1

accumulo.max.latency = 5000

import.direct.split.size = 0

sqlconnection.metadata.transaction.isolation.level = 2

codegen.output.delimiters.field = 44

export.new.update = UpdateOnly

incremental.mode = None

hdfs.file.format = TextFile

sqoop.oracle.escaping.disabled = true

codegen.compile.dir = /tmp/sqoop-root/compile/165f19ef24d49a202ccc6d22c5d3f879

direct.import = false

temporary.dirRoot = _sqoop

hdfs.target.dir = /user/root/emp_data

hive.fail.table.exists = false

jdbc.driver.class = com.mysql.jdbc.Driver

db.batch = false

# Execute Job (--exec)

[root@master /opt/sqoop/conf]#sqoop job --exec myjob

Enter password:

# Delete Job (--delete)

[root@master /opt/sqoop/conf]#sqoop job --delete myjob

[Codegen]

从面向对象应用程序的观点来看,每个数据库表都有一个DAO类,其中包含用于初始化对象的“getter”和“setter”方法。这个工具(-codegen)自动生成DAO类。它基于表模式结构生成Java中的DAO类。

Java定义作为导入过程的一部分被实施。这个工具的主要用途是检查Java是否丢失了Java代码。如果是,它将创建一个新版本的Java,在字段之间使用默认分隔符。

[root@master /opt/sqoop/conf]#sqoop codegen --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --bindir /user/root/out_jar

------ logger ------

20/06/24 13:37:11 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop

Note: /user/root/out_jar/employee.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

20/06/24 13:37:13 INFO orm.CompilationManager: Writing jar file: /user/root/out_jar/employee.jar

------ result ------

[root@master /opt/sqoop/conf]#ls -al /user/root/out_jar

total 64

drwxr-xr-x. 2 root root 230 Jun 24 13:42 .

drwxr-xr-x. 3 root root 21 Jun 24 13:42 ..

-rw-r--r--. 1 root root 592 Jun 24 13:42 employee$1.class

-rw-r--r--. 1 root root 589 Jun 24 13:42 employee$2.class

-rw-r--r--. 1 root root 589 Jun 24 13:42 employee$3.class

-rw-r--r--. 1 root root 592 Jun 24 13:42 employee$4.class

-rw-r--r--. 1 root root 589 Jun 24 13:42 employee$5.class

-rw-r--r--. 1 root root 12249 Jun 24 13:42 employee.class

-rw-r--r--. 1 root root 225 Jun 24 13:42 employee$FieldSetterCommand.class

-rw-r--r--. 1 root root 7578 Jun 24 13:42 employee.jar

-rw-r--r--. 1 root root 17143 Jun 24 13:42 employee.java

[Eval]

它允许用户对各自的数据库服务器执行用户定义的查询,并在控制台中预览结果。使用eval,我们可以计算任何类型的SQL查询,可以是DDL或DML语句。

[root@master /opt/sqoop/conf]#sqoop eval --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --query 'SELECT * FROM employee LIMIT 3'

------ result ------

--------------------------------------------------------

| id | name | deg | salary | dept |

--------------------------------------------------------

| 1201 | gopal | manager | 50000 | TP |

| 1202 | manisha | preader | 50000 | TP |

| 1203 | kalil | php dev | 30000 | AC |

--------------------------------------------------------

[List Databases]

Sqoop list-databases工具对数据库服务器解析并执行SHOW DATABASES查询。 此后,列出当前服务器中的数据库。

[root@master /opt/sqoop/conf]#sqoop list-databases --connect jdbc:mysql://192.168.132.1/?useSSL=true --username root --password 123456

------ result ------

information_schema

ch

clouddb01

clouddb02

clouddb03

db1

dbms

dbms_test

......

[List Tables]

Sqoop list-tables工具对特定数据库解析并执行SHOW TABLES查询,此后,列出当前数据库中的表。

[root@master /opt/sqoop/conf]#sqoop list-tables --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456

------ result ------

classes

employee

players

student

[Import To Hive]

[root@master /root]#sqoop import --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --hive-import --hive-overwrite --hive-database mydb2 --hive-table employee

------ logger ------

20/06/25 04:04:21 INFO hive.HiveImport: Loading uploaded data into Hive

20/06/25 04:04:21 INFO conf.HiveConf: Found configuration file null

20/06/25 04:04:26 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.

20/06/25 04:04:26 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/opt/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

20/06/25 04:04:26 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/opt/hbase-1.4.13/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

20/06/25 04:04:26 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

20/06/25 04:04:26 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

20/06/25 04:04:27 INFO hive.HiveImport:

20/06/25 04:04:27 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/opt/apache-hive-2.1.1-bin/lib/hive-common-2.1.1.jar!/hive-log4j2.properties Async: true

20/06/25 04:04:34 INFO hive.HiveImport: OK

20/06/25 04:04:34 INFO hive.HiveImport: Time taken: 1.529 seconds

20/06/25 04:04:34 INFO hive.HiveImport: Loading data to table mydb2.employee

20/06/25 04:04:34 INFO hive.HiveImport: OK

20/06/25 04:04:34 INFO hive.HiveImport: Time taken: 0.683 seconds

20/06/25 04:04:35 INFO hive.HiveImport: Hive import complete.

------ result ------

0: jdbc:hive2://localhost:10000/mydb2> select * from employee;

OK

+------+----------+---------+--------+------+

| id | name | deg | salary | dept |

+------+----------+---------+--------+------+

| 1201 | gopal | manager | 50000 | TP |

| 1202 | manisha | preader | 50000 | TP |

| 1203 | kalil | php dev | 30000 | AC |

| 1204 | prasanth | php dev | 30000 | AC |

| 1205 | kranthi | admin | 20000 | TP |

| 1206 | satish p | grp des | 20000 | GR |

+------+----------+---------+--------+------+

6 rows selected (1.222 seconds)

[Import To HBase]

[root@master /root]#sqoop import --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --hbase-create-table --hbase-table ns2:hbase_emp --column-family cf1 --hbase-row-key id

hbase(main):014:0> list_namespace_tables 'ns2'

TABLE

hbase_emp

t2

2 row(s) in 0.0080 seconds

hbase(main):015:0> scan 'ns2:hbase_emp'

+------+---------------------------------------------------------+

| ROW | COLUMN+CELL |

+------+---------------------------------------------------------+

| 1201 | column=cf1:deg, timestamp=1593084511041, value=manager |

| 1201 | column=cf1:dept, timestamp=1593084511041, value=TP |

| 1201 | column=cf1:name, timestamp=1593084511041, value=gopal |

| 1201 | column=cf1:salary, timestamp=1593084511041, value=50000 |

| 1202 | column=cf1:deg, timestamp=1593084511041, value=preader |

| 1202 | column=cf1:dept, timestamp=1593084511041, value=TP |

| 1202 | column=cf1:name, timestamp=1593084511041, value=manisha |

| 1202 | column=cf1:salary, timestamp=1593084511041, value=50000 |

| 1203 | column=cf1:deg, timestamp=1593084509869, value=php |

| 1203 | column=cf1:dept, timestamp=1593084509869, value=AC |

| 1203 | column=cf1:name, timestamp=1593084509869, value=kalil |

| 1203 | column=cf1:salary, timestamp=1593084509869, value=30000 |

| 1204 | column=cf1:deg, timestamp=1593084509633, value=php |

| 1204 | column=cf1:dept, timestamp=1593084509633, value=AC |

| 1204 | column=cf1:name, timestamp=1593084509633, value=prasanth|

| 1204 | column=cf1:salary, timestamp=1593084509633, value=30000 |

| 1205 | column=cf1:deg, timestamp=1593084512406, value=admin |

| 1205 | column=cf1:dept, timestamp=1593084512406, value=TP |

| 1205 | column=cf1:name, timestamp=1593084512406, value=kranthi |

| 1205 | column=cf1:salary, timestamp=1593084512406, value=20000 |

| 1206 | column=cf1:deg, timestamp=1593084512406, value=grp |

| 1206 | column=cf1:dept, timestamp=1593084512406, value=GR |

| 1206 | column=cf1:name, timestamp=1593084512406, value=satish |

| 1206 | column=cf1:salary, timestamp=1593084512406, value=20000 |

+------+---------------------------------------------------------+

6 row(s) in 0.0280 seconds

[Incremental Imports]

Sqoop提供了一种增量导入模式,能被用于只检索一些比之前导入的行集更新的行。

| Argument | Description |

|---|---|

--check-column (col) | 指定在确定要导入哪些行时要检查的列。该列不应该是(CHAR/NCHAR/VARCHAR/VARNCHAR/ LONGVARCHAR/LONGNVARCHAR等)字符串类型的 |

--incremental (mode) | 指定Sqoop如何确定哪些行是新的。 mode合法值包括append和lastmodified。 |

--last-value (value) | Specifies the maximum value of the check column from the previous import.指定先前导入的检查列的最大值。 |

在导入一个随着row id值不断增加,新行不断添加的表时,您应该使用append 模式。使用 --check-column指定包含row id的列。Sqoop会导入检查列的拥有值大于--last-value指定值的行。

Sqoop支持的另一种表更新策略称为lastmodified 模式。当源表的行可能被更新时应该使用这种模式,之后每次这样更新都会将last-modified列的值设置为当前时间戳。Sqoop会导入检查列持有时间戳比--last-value指定时间戳较新的行。

在增量导入结束时,应该为后续导入而被指定的 --last-value 的值会被打印到屏幕上。在运行后续导入时,你用这种方式应该指定--last-value以确保只导入新的或更新的数据。通过创建增量导入作为保存的作业来自动处理,是执行循环增量导入的首选机制。

[root@master /root]#sqoop import --connect jdbc:mysql://192.168.132.1/db1?useSSL=true --username root --password 123456 --table employee --hbase-table ns2:hbase_emp --column-family cf1 --hbase-row-key id --check-column id --incremental append --last-value 1202

------ logger ------

20/06/25 05:23:27 WARN util.AppendUtils: Cannot append files to target dir; no such directory: _sqoop/997b41edf8d648599f52f37102349766_employee

20/06/25 05:23:27 INFO tool.ImportTool: Incremental import complete! To run another incremental import of all data following this import, supply the following arguments:

20/06/25 05:23:27 INFO tool.ImportTool: --incremental append

20/06/25 05:23:27 INFO tool.ImportTool: --check-column id

20/06/25 05:23:27 INFO tool.ImportTool: --last-value 1206

20/06/25 05:23:27 INFO tool.ImportTool: (Consider saving this with 'sqoop job --create')

Exception

- 导入数据时出现,ERROR manager.SqlManager: Error executing statement: java.sql.SQLException: Access denied for user ‘root’@‘master’ (using password: YES)

原因: 数据库权限错误

解决方法:

mysql> show grants for root; +------------------------------------------------+ | Grants for root@% | +------------------------------------------------+ | GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' WITH GRANT OPTION | +------------------------------------------------+ 1 row in set (0.00 sec) mysql> show grants for root@master; ERROR 1141 (42000): There is no such grant defined for user 'root' on host 'master' mysql> grant all privileges on *.* to root@master identified by '123456'; Query OK, 0 rows affected, 1 warning (0.02 sec) mysql> show grants for root@master; +------------------------------------------------+ | Grants for root@master | +------------------------------------------------+ | GRANT ALL PRIVILEGES ON *.* TO 'root'@'master' | +------------------------------------------------+ 1 row in set (0.00 sec)

- 导入全部表时,ERROR tool.ImportAllTablesTool: Encountered IOException running import job: java.io.IOException: Generating splits for a textual index column allowed only in case of “-Dorg.apache.sqoop.splitter.allow_text_splitter=true” property passed as a parameter

**原因:**如果使用的是import-all-tables,数据库中的每个表都必须有一个主键字段。

解决方法:- 暂时只能使用较简单的表 -

- 创建Job时,Exception in thread “main” java.lang.NoClassDefFoundError: org/json/JSONObject

**原因:**sqoop缺少java-json.jar包

**解决方法:**下载java-json.jar

- 导入Hive时,ERROR tool.ImportTool: Import failed: java.io.IOException: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

**原因:**sqoop缺少hive-common-xxx.jar包

**解决方法:**该jar包在hive的lib目录下,拷贝到sqoop的lib目录下即可

最后

以上就是傲娇小馒头最近收集整理的关于Apache Sqoop 学习笔记的全部内容,更多相关Apache内容请搜索靠谱客的其他文章。

发表评论 取消回复