
Sung's Blog


My Personal Robotic Companion

SLAM and autonomous navigation with ROS + kinect + arduino + android

https://github.com/sungjik/my_personal_robotic_companion

Video: https://www.youtube.com/embed/uMZ-9CB7mtQ

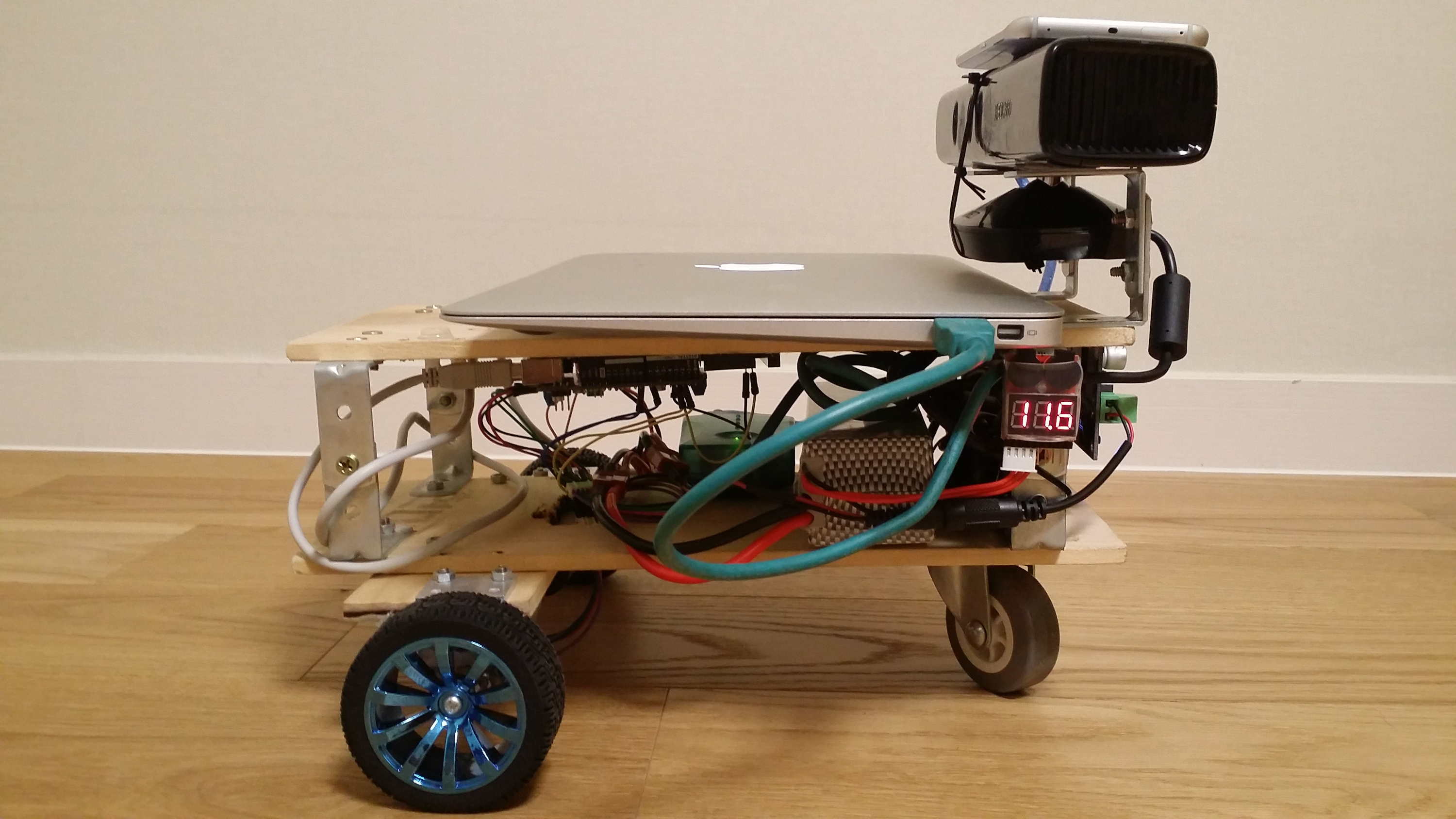

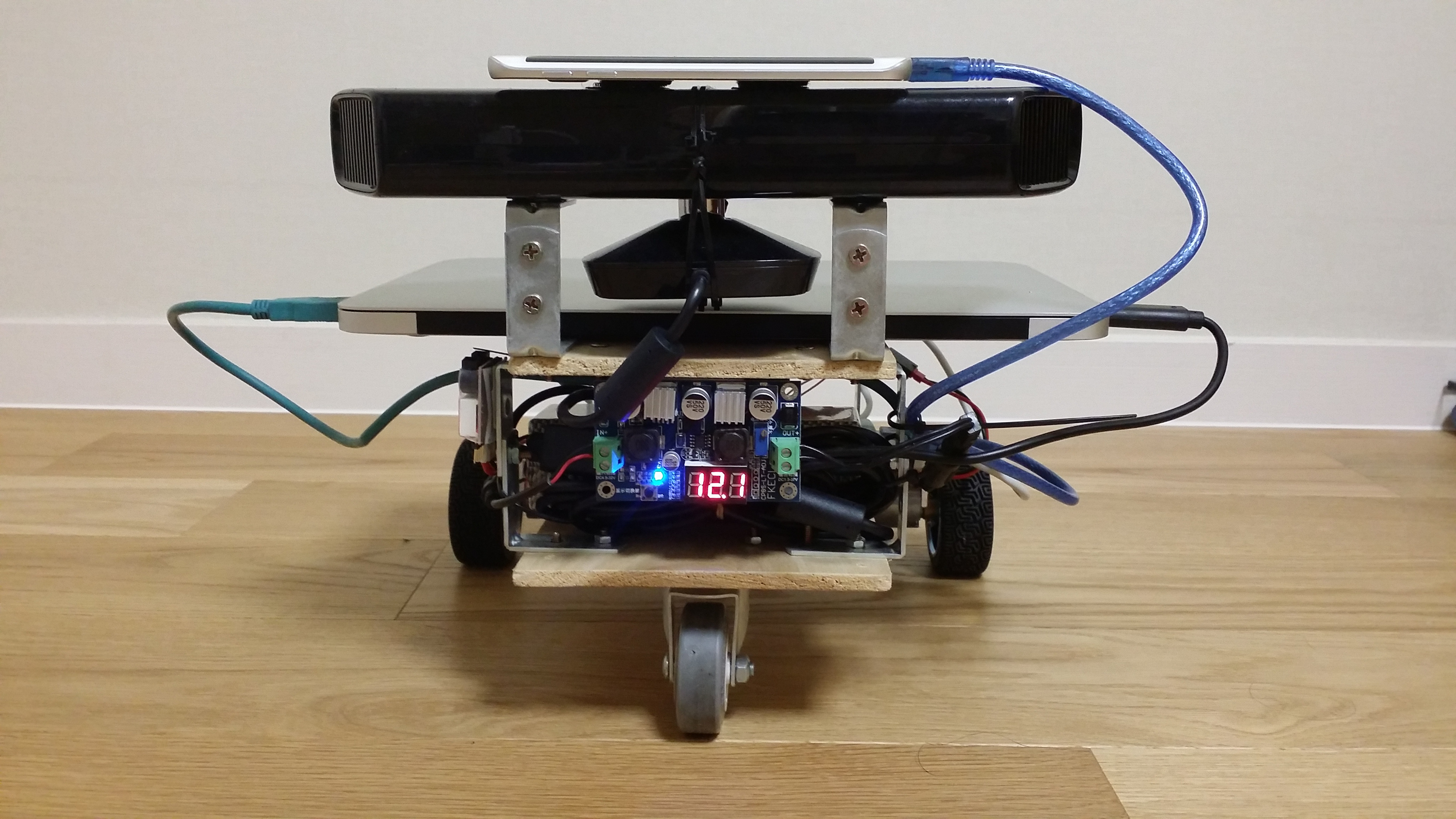

- The Hardware

– two geared DC motors with integrated encoders (RB30-MM7W-D W/EC 26P 12V)

{gear ratio: 1/120, rated speed: 49.5 rpm, torque: 4 kgf·cm, encoder resolution: 26}

– Xbox 360 Kinect

– MacBook Air 11 inch running Lubuntu 14.04 and ROS Indigo

– Galaxy S6 edge w/ Android

– Arduino Mega 2560

– Adafruit Motor Shield v2

– 6800mAh 3S LiPo battery (and balance charger)

– DC to DC step up and step down converter (used for connecting the kinect to the battery)

– LiPo alarm (to prevent completely discharging the battery)

– Wood planks, metal connectors, nuts, bolts, wires, cables, etc.

- Arduino(motor_controller.ino)

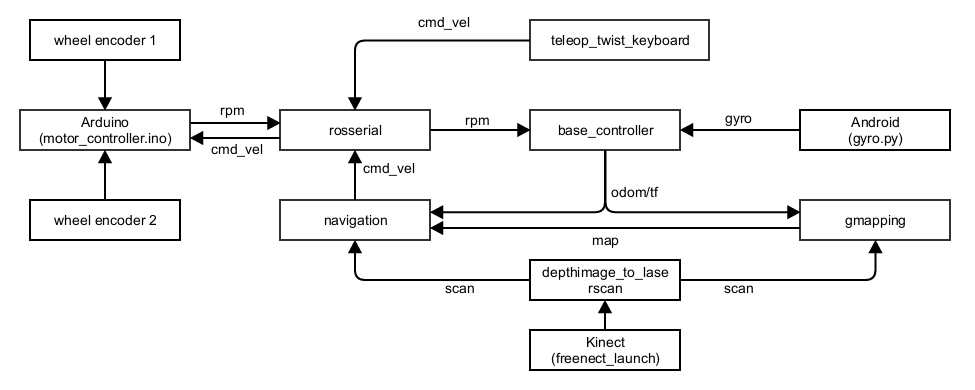

The main loop in motor_controller.ino converts encoder tick counts into the current rpm of each motor and regulates the motor speeds with PID control. The rosserial library conveniently lets the program communicate with the rest of the ROS nodes on the PC over USB. The program subscribes to the cmd_vel topic, which sets the desired speed of the motors, and publishes the measured speeds on the rpm topic. I used two interrupt pins on the Mega, one for each encoder. The PID control and rpm publishing are both done in the same loop cycle, at a target rate of 10 Hz (every 100 ms). I modified the Adafruit Motor Shield v2 library so that setSpeed for DC motors uses the full 12-bit PWM range (4096 levels, 0 to 4095), i.e. in Adafruit_MotorShield.cpp:

```cpp
// Accept a 16-bit value so the caller can pass the full 12-bit PWM range.
void Adafruit_DCMotor::setSpeed(uint16_t speed) {
  MC->setPWM(PWMpin, speed);
}
```

The uint8_t parameter in the corresponding declaration in Adafruit_MotorShield.h also needs to be changed to uint16_t. The Adafruit Motor Shield v2 has its own on-board PWM chip with 12-bit resolution, but for some strange reason the DC motor library only exposes 8 bits.
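To make the tick-to-rpm conversion and the PID step concrete, here is a minimal Python sketch of the same logic. It is not the code in motor_controller.ino. It assumes one count per encoder pulse, so 26 pulses times the 120:1 gear reduction gives 3120 ticks per output-shaft revolution (the real figure depends on how the interrupts are configured), and the `PID` class, its gains, and the function names are hypothetical.

```python
# Assumption: one count per encoder pulse, measured on the motor shaft.
TICKS_PER_REV = 26 * 120  # encoder resolution x gear reduction = 3120
PWM_MAX = 4095            # top of the 12-bit PWM range


def ticks_to_rpm(delta_ticks, dt_s):
    """Convert ticks counted during dt_s seconds into output-shaft rpm."""
    revolutions = delta_ticks / TICKS_PER_REV
    return revolutions / dt_s * 60.0


class PID:
    """Textbook PID controller with output clamping (hypothetical gains)."""

    def __init__(self, kp, ki, kd, out_min=-PWM_MAX, out_max=PWM_MAX):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, target_rpm, measured_rpm, dt_s):
        """Return a clamped control output for one loop cycle."""
        error = target_rpm - measured_rpm
        self.integral += error * dt_s
        derivative = (error - self.prev_error) / dt_s
        self.prev_error = error
        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)
        # Keep the command inside the range the PWM chip can represent.
        return max(self.out_min, min(self.out_max, output))
```

At the 10 Hz loop rate, each cycle would call `ticks_to_rpm(delta_ticks, 0.1)` for each wheel and feed the result to that wheel's `PID.update`; the sign of the output would select the motor direction and its magnitude the PWM value.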

References :

– http://forum.arduino.cc/index.php?topic=8652.0

– http://ctms.engin.umich.edu/CTMS/index.php?example=Introduction&section=ControlPID

– http://playground.arduino.cc/Main/RotaryEncoders

- base_controller.cpp

The base_controller subscribes to the rpm topic from the Arduino and converts it into linear velocity along x, angular velocity (theta), x-y position, and yaw. It also subscribes to the gyro topic from the Android phone and combines the gyro readings with the rpm information to produce a fused yaw estimate. The base_controller then publishes this information as odometry and tf messages.

Subscribed Topics : rpm(geometry_msgs::Vector3Stamped), gyro(geometry_msgs::Vector3)

Published Topics : odom(nav_msgs::Odometry)

Parameters:

– publish_tf : whether to publish tf. Set to false if you want to use robot_pose_ekf

– publish_rate : rate in Hz for publishing odom and tf messages

– linear_scale_positive : amount to scale translational velocity in the positive x direction

– linear_scale_negative : amount to scale translational velocity in the negative x direction

– angular_scale_positive : amount to scale rotational velocity counterclockwise

– angular_scale_negative : amount to scale rotational velocity clockwise

– alpha : value between 0 and 1, how much weight to give wheel encoder yaw relative to gyro yaw
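The conversion from wheel rpm to odometry, and the role of alpha, can be sketched with standard differential-drive kinematics. This is an illustration in Python, not the code in base_controller.cpp. The wheel diameter and track width below are placeholder values (the post does not state them), the function names are hypothetical, and blending yaw rates rather than yaw angles is an assumption about how the weighting is applied.

```python
import math

# Placeholder geometry: the real values are not given in the post.
WHEEL_DIAMETER_M = 0.10
TRACK_WIDTH_M = 0.30


def rpm_to_twist(rpm_left, rpm_right):
    """Return (linear m/s, angular rad/s) from the two wheel speeds."""
    v_left = rpm_left * math.pi * WHEEL_DIAMETER_M / 60.0
    v_right = rpm_right * math.pi * WHEEL_DIAMETER_M / 60.0
    linear = (v_left + v_right) / 2.0
    angular = (v_right - v_left) / TRACK_WIDTH_M  # counterclockwise positive
    return linear, angular


def fuse_yaw_rate(encoder_rate, gyro_rate, alpha):
    """Weighted average: alpha weights the encoders, (1 - alpha) the gyro."""
    return alpha * encoder_rate + (1.0 - alpha) * gyro_rate


def integrate_pose(x, y, yaw, linear, angular, dt_s):
    """Advance the pose by one time step using simple Euler integration."""
    x += linear * math.cos(yaw) * dt_s
    y += linear * math.sin(yaw) * dt_s
    yaw += angular * dt_s
    return x, y, yaw
```

With alpha = 1 the yaw comes from the wheel encoders alone, and with alpha = 0 from the gyro alone. The linear and angular scale parameters would be applied to the outputs of `rpm_to_twist` before integration, using the positive or negative factor depending on the sign of the velocity.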

References :

– https://github.com/Arkapravo/turtlebot

– http://wiki.ros.org/turtlebot

– http://wiki.ros.org/ros_arduino_bridge

- Android(gyro.py)

I found it necessary to add an IMU to the robot to improve odometry, since odometry from wheel encoders alone was prone to error from voltage changes, wheel diameter and track width miscalculations, etc. I used an app called HyperIMU from the Android app market, which publishes gyroscope, accelerometer, magnetometer, and other useful sensor readings onto a UDP stream. I connected my Android phone to the PC via USB and set up USB tethering on the phone to get an IP address for the UDP stream.

Getting the odometry to work with reasonable accuracy was one of the toughest parts of the project. There was always a discrepancy between what the software thought the robot was doing and what the robot was doing in real life. At first I tried fusing magnetometer, accelerometer, and gyroscope data using a complementary filter and a Kalman filter, but in the end I found that a simple weighted average of the gyroscope data and wheel encoder data worked best. To test whether the odometry was reasonably accurate, I followed the instructions in http://wiki.ros.org/navigation/Tutorials/Navigation%20Tuning%20Guide.
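Reading the phone's UDP stream can be sketched as follows. This is not the code in gyro.py. It assumes each datagram is a single line of comma-separated numbers and that the three gyroscope axes are adjacent fields; the actual packet layout depends on which sensors are enabled in the app, so the field offset, the port, and both function names are hypothetical.

```python
import socket


def parse_gyro_packet(payload, first_index=0):
    """Return (x, y, z) gyro rates from a comma-separated datagram.

    first_index is the position of the gyro x value within the packet;
    it is an assumption and must match the app's sensor configuration.
    """
    fields = payload.decode("ascii").strip().split(",")
    x, y, z = (float(f) for f in fields[first_index:first_index + 3])
    return x, y, z


def read_gyro_once(port, first_index=0, timeout_s=1.0):
    """Block until one datagram arrives on the port and parse it."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout_s)
    sock.bind(("", port))  # listen on all interfaces, including the tether
    try:
        payload, _sender = sock.recvfrom(1024)
        return parse_gyro_packet(payload, first_index)
    finally:
        sock.close()
```

A node built on this would call `read_gyro_once` (or keep the socket open in a loop) and republish the three values as a geometry_msgs Vector3 on the gyro topic.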

- Kinect

I followed the instructions in http://wiki.ros.org/kinect/Tutorials/Adding%20a%20Kinect%20to%20an%20iRobot%20Create to connect my 11.1 V LiPo battery to the Kinect. I used an automatic step up/down DC converter instead of a voltage regulator to ensure a steady 12 V supply to the Kinect. I then installed the freenect library :

sudo apt-get install ros-indigo-freenect-stack

and used the depthimage_to_laserscan ROS package to publish a fake laser scan derived from the depth image produced by the Kinect's IR sensor.
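The idea behind turning a depth image into a laser scan can be illustrated with a simplified pinhole-camera model: each pixel in one horizontal row of the depth image becomes a bearing and a range. The Python sketch below is not the depthimage_to_laserscan implementation, and the function name and the intrinsics `fx` and `cx` (focal length and principal point, in pixels) are illustrative inputs.

```python
import math


def depth_row_to_scan(depth_row_m, fx, cx):
    """Convert one row of depth values (meters) into (angle, range) pairs.

    Angles are in radians, positive to the left of the optical axis,
    which is why the lateral offset is negated (image columns grow to
    the right). Invalid depths (NaN, inf, or non-positive) are skipped.
    """
    scan = []
    for u, depth in enumerate(depth_row_m):
        if not math.isfinite(depth) or depth <= 0.0:
            continue
        lateral = (u - cx) * depth / fx   # offset from the optical axis
        angle = math.atan2(-lateral, depth)
        distance = math.hypot(lateral, depth)
        scan.append((angle, distance))
    return scan
```

Because only a narrow band of the image is used and the Kinect's horizontal field of view is limited, the resulting scan covers a much smaller arc than a real laser scanner would.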

References :

– https://github.com/turtlebot/turtlebot/blob/indigo/turtlebot_bringup/launch/3dsensor.launch

- teleop_twist_keyboard

The teleop_twist_keyboard ROS package takes in keyboard input and publishes cmd_vel messages.

- gmapping

sudo apt-get install ros-indigo-slam-gmapping ros-indigo-gmapping

The ROS gmapping package uses Simultaneous Localization and Mapping (SLAM) to produce a 2D map from laser scan data. I experimented with the linearUpdate, angularUpdate, and particles parameters to get a reasonably accurate map. I largely followed the directions in http://www.hessmer.org/blog/2011/04/10/2d-slam-with-ros-and-kinect/ and referenced the parameter settings in the turtlebot stack (http://wiki.ros.org/turtlebot).

- navigation

sudo apt-get install ros-indigo-navigation

I used the amcl, base_local_planner, and costmap_2d packages from the ROS navigation stack. I largely followed the instructions in http://wiki.ros.org/navigation/Tutorials. One thing I had to do differently was adding map topics for the global and local costmaps in rviz instead of a grid topic. I also modified and used https://code.google.com/p/drh-robotics-ros/source/browse/trunk/ros/ardros/nodes/velocityLogger.py to calculate the acceleration limits of the robot. After hours of tuning amcl, base_local_planner, and costmap_2d parameters, I finally got everything to work.
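One way to estimate an acceleration limit from logged velocities is to take finite differences between consecutive samples and keep the largest magnitude. The Python sketch below shows that idea only; it is not the linked velocityLogger.py script, and the function name and the sample format are assumptions.

```python
def max_acceleration(samples):
    """Return the largest |dv/dt| between consecutive samples.

    samples is a list of (time_s, velocity) tuples in increasing time
    order. Pairs with a non-positive time step are ignored.
    """
    best = 0.0
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0.0:
            continue
        best = max(best, abs(v1 - v0) / dt)
    return best
```

Running this over a log recorded while commanding the robot from rest to full speed gives an upper bound that can guide the planner's acceleration limits, such as acc_lim_x in base_local_planner. Raw differences amplify sensor noise, so smoothing the log first is probably worthwhile.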

by Sung Jik Cha
