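Contents

- Preparation

- application.yml

- Shared configuration classes

- MySQL configuration class

- Hive configuration class

- DAO-layer Mapper interfaces

- Mapper.xml

- Service layer

Preparation

Maven dependencies: MyBatis, Spring Boot, the big-data (Hive/Hadoop) connectors, the MySQL driver, Druid, and so on.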

<!-- Configure the CDH repository URL -->

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web-services</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-webflux</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<!-- Spring bean management -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

</dependency>

<!-- spring -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

<version>8.0.13</version>

</dependency>

<!-- Big-data (Hive/Hadoop) connectivity -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>1.1.0</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty.aggregate</groupId>

<artifactId>*</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.15.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0-cdh5.15.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>2.6.0-cdh5.15.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0-cdh5.15.2</version>

</dependency>

<!-- Database connection pool -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.0.29</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.10</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.12.3</version>

<configuration>

<groups>unit</groups>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.0</version>

<configuration>

<verbose>true</verbose>

<fork>true</fork>

<compilerVersion>1.5</compilerVersion>

</configuration>

</plugin>

</plugins>

<!-- Resources -->

<resources>

<resource>

<directory>src/main/resources</directory>

<!-- Include only the listed resource types under src/main/resources -->

<includes>

<include>**/*.properties</include>

<include>**/*.yml</include>

<include>**/*.xml</include>

</includes>

<filtering>false</filtering>

</resource>

</resources>

<defaultGoal>compile</defaultGoal>

</build>

application.yml

spring:
  datasource:
    mysqlMain: # data source 1: MySQL
      type: com.alibaba.druid.pool.DruidDataSource
      jdbc-url: jdbc:mysql://0.0.0.0:3306/analysis?characterEncoding=UTF-8&useUnicode=true&serverTimezone=GMT%2B8
      username: root
      password: root
      driver-class-name: com.mysql.cj.jdbc.Driver
    hive: # data source 2: Hive
      jdbc-url: jdbc:hive2://0.0.0.0:10000/iot
      username: hive
      password: hive
      driver-class-name: org.apache.hive.jdbc.HiveDriver
      type: com.alibaba.druid.pool.DruidDataSource
    common-config: # connection pool settings shared by all data sources
      initialSize: 1
      minIdle: 1
      maxIdle: 5
      maxActive: 50
      maxWait: 10000
      timeBetweenEvictionRunsMillis: 10000
      minEvictableIdleTimeMillis: 300000
      validationQuery: select 'x'
      testWhileIdle: true
      testOnBorrow: false
      testOnReturn: false
      poolPreparedStatements: true
      maxOpenPreparedStatements: 20
      filters: stat

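Two data sources are used (Hive and MySQL):

- Configure the JDBC connection strings; note that the Hive URL scheme is hive2.

- Configure the Druid connection pool settings, which the pool configuration classes below read.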
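Because a wrong URL scheme is easy to miss, a small startup check can help. The following is a minimal sketch, not part of the original project: `JdbcDriverLookup` and `driverFor` are hypothetical names, and the prefix-to-driver table simply mirrors the two data sources configured in application.yml.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: maps a JDBC URL prefix to the driver class named in application.yml. */
final class JdbcDriverLookup {

    // Prefix -> driver class, copied from the two data sources configured above.
    private static final Map<String, String> DRIVERS = new LinkedHashMap<>();

    static {
        DRIVERS.put("jdbc:mysql:", "com.mysql.cj.jdbc.Driver");
        DRIVERS.put("jdbc:hive2:", "org.apache.hive.jdbc.HiveDriver");
    }

    /** Returns the driver class for the URL, or throws if the prefix is not in the table. */
    static String driverFor(String jdbcUrl) {
        for (Map.Entry<String, String> entry : DRIVERS.entrySet()) {
            if (jdbcUrl.startsWith(entry.getKey())) {
                return entry.getValue();
            }
        }
        throw new IllegalArgumentException("Unsupported JDBC URL: " + jdbcUrl);
    }

    public static void main(String[] args) {
        System.out.println(driverFor("jdbc:hive2://0.0.0.0:10000/iot"));
        System.out.println(driverFor("jdbc:mysql://0.0.0.0:3306/analysis"));
    }
}
```

In this sketch a URL that starts with `jdbc:hive:` instead of `jdbc:hive2:` is rejected immediately rather than failing later when the pool opens its first connection.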

Shared configuration classes

This class reads the Hive and MySQL connection settings.

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

import java.util.Map;

@Data

@ConfigurationProperties(prefix = DataSourceProperties.DS, ignoreUnknownFields = false)

public class DataSourceProperties {

final static String DS = "spring.datasource";

private Map<String,String> mysqlMain;

private Map<String,String> hive;

private Map<String,String> commonConfig;

}
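The MySQL and Hive configuration classes later read entries from these maps with calls such as `get("jdbc-url")`, which return null when a key is missing or misspelled in application.yml. A minimal sketch of a guard that fails with a clear message instead is shown here; `RequiredKeys` and `require` are hypothetical names, not part of the original code.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical guard for looking up mandatory keys in a data source settings map. */
final class RequiredKeys {

    /** Returns the value for the key, or throws a descriptive error if it is absent or blank. */
    static String require(Map<String, String> section, String sectionName, String key) {
        String value = (section == null) ? null : section.get(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException(
                    "Missing '" + key + "' under spring.datasource." + sectionName);
        }
        return value;
    }

    public static void main(String[] args) {
        // Stand-in for dataSourceProperties.getHive().
        Map<String, String> hive = new HashMap<>();
        hive.put("jdbc-url", "jdbc:hive2://0.0.0.0:10000/iot");
        System.out.println(require(hive, "hive", "jdbc-url"));
    }
}
```

With such a guard, a call like `datasource.setUrl(require(dataSourceProperties.getHive(), "hive", "jdbc-url"))` would report the missing key by name at startup.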

This class reads the Druid connection pool settings.

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

@Data

@ConfigurationProperties(prefix = DataSourceCommonProperties.DS, ignoreUnknownFields = false)

public class DataSourceCommonProperties {

final static String DS = "spring.datasource.common-config";

private int initialSize = 10;

private int minIdle;

private int maxIdle;

private int maxActive;

private int maxWait;

private int timeBetweenEvictionRunsMillis;

private int minEvictableIdleTimeMillis;

private String validationQuery;

private boolean testWhileIdle;

private boolean testOnBorrow;

private boolean testOnReturn;

private boolean poolPreparedStatements;

private int maxOpenPreparedStatements;

private String filters;

private String mapperLocations;

private String typeAliasPackage;

}

MySQL configuration class

The @MapperScan path is explained further below.

import com.alibaba.druid.pool.DruidDataSource;

import com.xxxx.xxxx.Config.DataSourceCommonProperties;

import com.xxxx.xxxx.Config.DataSourceProperties;

import lombok.extern.log4j.Log4j2;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;

import java.sql.SQLException;

@Configuration

@MapperScan(basePackages = "com.xxxx.xxxx.Dao.db1", sqlSessionFactoryRef = "db1SqlSessionFactory")

@Log4j2

@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class})

public class MysqlConfig {

@Autowired

private DataSourceProperties dataSourceProperties;

@Autowired

private DataSourceCommonProperties dataSourceCommonProperties;

// Mark this as the primary data source

@Primary

@Bean("db1DataSource")

public DataSource getDb1DataSource(){

DruidDataSource datasource = new DruidDataSource();

// Data-source-specific properties

datasource.setUrl(dataSourceProperties.getMysqlMain().get("jdbc-url"));

datasource.setUsername(dataSourceProperties.getMysqlMain().get("username"));

datasource.setPassword(dataSourceProperties.getMysqlMain().get("password"));

datasource.setDriverClassName(dataSourceProperties.getMysqlMain().get("driver-class-name"));

// Shared pool properties

datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());

datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());

datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());

datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());

datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());

datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());

datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());

datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());

datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());

datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());

datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());

try {

datasource.setFilters(dataSourceCommonProperties.getFilters());

} catch (SQLException e) {

log.error("Druid configuration initialization filter error.", e);

}

return datasource;

}

// Create the SqlSessionFactory bean

@Primary

@Bean("db1SqlSessionFactory")

public SqlSessionFactory db1SqlSessionFactory(@Qualifier("db1DataSource") DataSource dataSource) throws Exception {

SqlSessionFactoryBean bean = new SqlSessionFactoryBean();

bean.setDataSource(dataSource);

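// The location of the mapper XML files must be configured; otherwise MyBatis fails with a "no statement" error (the same error can also occur when the namespace in a mapper XML does not match the mapper interface's package path)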

bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath*:Mapper/db1/*.xml"));

return bean.getObject();

}

// Create the SqlSessionTemplate bean

@Primary

@Bean("db1SqlSessionTemplate")

public SqlSessionTemplate db1SqlSessionTemplate(@Qualifier("db1SqlSessionFactory") SqlSessionFactory sqlSessionFactory){

return new SqlSessionTemplate(sqlSessionFactory);

}

}

Hive configuration class

import com.alibaba.druid.pool.DruidDataSource;

import com.xxxx.xxxx.Config.DataSourceCommonProperties;

import com.xxxx.xxxx.Config.DataSourceProperties;

import lombok.extern.log4j.Log4j2;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;

import java.sql.SQLException;

@Configuration

@MapperScan(basePackages = "com.xxxx.xxxx.Dao.db2", sqlSessionFactoryRef = "db2SqlSessionFactory")

@Log4j2

@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class})

public class HiveConfig {

@Autowired

private DataSourceProperties dataSourceProperties;

@Autowired

private DataSourceCommonProperties dataSourceCommonProperties;

@Bean("db2DataSource")

public DataSource getDb2DataSource(){

DruidDataSource datasource = new DruidDataSource();

// Data-source-specific properties

datasource.setUrl(dataSourceProperties.getHive().get("jdbc-url"));

datasource.setUsername(dataSourceProperties.getHive().get("username"));

datasource.setDriverClassName(dataSourceProperties.getHive().get("driver-class-name"));

// Shared pool properties

datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());

datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());

datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());

datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());

datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());

datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());

datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());

datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());

datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());

datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());

datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());

try {

datasource.setFilters(dataSourceCommonProperties.getFilters());

} catch (SQLException e) {

log.error("Druid configuration initialization filter error.", e);

}

return datasource;

}

@Bean("db2SqlSessionFactory")

public SqlSessionFactory db2SqlSessionFactory(@Qualifier("db2DataSource") DataSource dataSource) throws Exception {

SqlSessionFactoryBean bean = new SqlSessionFactoryBean();

bean.setDataSource(dataSource);

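// The location of the mapper XML files must be configured; otherwise MyBatis fails with a "no statement" error (the same error can also occur when the namespace in a mapper XML does not match the mapper interface's package path)

// Set the mapper.xml path; the classpath pattern must not contain spaces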

bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath*:Mapper/db2/*.xml"));

return bean.getObject();

}

@Bean("db2SqlSessionTemplate")

public SqlSessionTemplate db2SqlSessionTemplate(@Qualifier("db2SqlSessionFactory") SqlSessionFactory sqlSessionFactory){

return new SqlSessionTemplate(sqlSessionFactory);

}

}

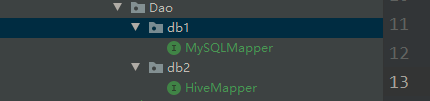

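The mapper interfaces are laid out as shown in the figure above. These are the packages that @MapperScan scans and registers in the Spring container.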

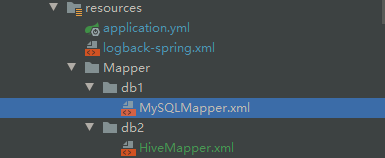

The mapper.xml files are laid out as shown in the figure above.

DAO-layer Mapper interfaces

@Mapper

public interface MySQLMapper {

List<Float> findByStartEndTime(@Param("startTime") String startTime, @Param("endTime") String endTime);

}

@Mapper

public interface HiveMapper {

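/**
 * Reads data from Hive.
 *
 * @param startTime lower bound of receive_time (inclusive)
 * @param endTime upper bound of receive_time (inclusive)
 * @return the matching values
 */
// @Param names the arguments so that #{startTime} and #{endTime} resolve in the mapper XML
List<Float> findByHive(@Param("startTime") String startTime, @Param("endTime") String endTime);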

}

Mapper.xml

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >

<mapper namespace="com.xxxx.xxxx.Dao.db1.MySQLMapper">

<sql id="Base_Column_List" >

xxxx

</sql>

<select id="findByStartEndTime" parameterType="java.lang.String" resultType="java.lang.Float">

select <include refid="Base_Column_List" />

from xxxx

where flag = 1 and

DATE_FORMAT(STR_TO_DATE(time, '%Y-%m-%d %H:%i:%s'), '%Y%m%d%H%i%s')

between #{startTime}

and #{endTime}

order by time desc

</select>

</mapper>
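The MySQL query above reformats the time column with the pattern '%Y%m%d%H%i%s' before comparing it, so startTime and endTime need to arrive as 14-digit strings in the same layout. A minimal sketch of producing such bounds follows; `QueryTimeBounds` and `toBound` are hypothetical names, and the assumption is that callers start from `LocalDateTime` values.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper that formats query bounds as yyyyMMddHHmmss strings. */
final class QueryTimeBounds {

    // Java counterpart of the MySQL pattern '%Y%m%d%H%i%s' used in the mapper.
    private static final DateTimeFormatter COMPACT = DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    /** Formats a timestamp in the fixed-width layout that the BETWEEN clause compares against. */
    static String toBound(LocalDateTime time) {
        return time.format(COMPACT);
    }

    public static void main(String[] args) {
        String start = toBound(LocalDateTime.of(2020, 1, 1, 0, 0, 0));
        String end = toBound(LocalDateTime.of(2020, 1, 2, 0, 0, 0));
        // These two strings would be passed as startTime and endTime.
        System.out.println(start + " .. " + end);
    }
}
```

Because every field is zero-padded to a fixed width, comparing these strings gives the same ordering as comparing the timestamps themselves.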

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >

<mapper namespace="com.xxxx.xxxx.Dao.db2.HiveMapper">

<sql id="Base_Column_List" >

xxxx

</sql>

<select id="findByHive" parameterType="java.lang.String" resultType="java.lang.Float">

select <include refid="Base_Column_List" />

from xxxx

where receive_time

between #{startTime}

and #{endTime}

order by receive_time asc

limit 10

</select>

</mapper>
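Each mapper XML must declare as its namespace the fully qualified name of the mapper interface it belongs to, and a one-character slip there is easy to overlook. The sketch below shows one way such a mismatch could be caught in a unit test. `MapperNamespaceCheck` is a hypothetical helper, and it extracts the namespace with a regular expression rather than a full XML parser.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical check that a mapper XML namespace equals the mapper interface name. */
final class MapperNamespaceCheck {

    // Captures the value of the namespace attribute on the <mapper> element.
    private static final Pattern NAMESPACE =
            Pattern.compile("<mapper\\s+namespace\\s*=\\s*\"([^\"]+)\"");

    /** Extracts the namespace from the XML text, if a <mapper namespace="..."> tag is present. */
    static Optional<String> namespaceOf(String mapperXml) {
        Matcher matcher = NAMESPACE.matcher(mapperXml);
        return matcher.find() ? Optional.of(matcher.group(1)) : Optional.empty();
    }

    /** True only when the XML declares exactly the given fully qualified interface name. */
    static boolean matches(String mapperXml, String mapperInterfaceName) {
        return namespaceOf(mapperXml).map(mapperInterfaceName::equals).orElse(false);
    }

    public static void main(String[] args) {
        String xml = "<mapper namespace=\"com.xxxx.xxxx.Dao.db2.HiveMapper\">";
        System.out.println(matches(xml, "com.xxxx.xxxx.Dao.db2.HiveMapper"));
    }
}
```

In a real test the second argument would typically come from `HiveMapper.class.getName()`, so that renaming the interface or moving its package makes the check fail.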

Service layer

Interface

public interface SectionTempAlarmService {

List<Float> findByStartEndTime(String startTime, String endTime);

List<Float> findByHive(String startTime, String endTime);

}

Implementation class

@Service

@Slf4j

@SuppressWarnings("all")

public class SectionTempAlarmServiceImpl implements SectionTempAlarmService {

@Autowired

private MySQLMapper mySQLMapper;

@Autowired

private HiveMapper hiveMapper;

@Override

public List<Float> findByStartEndTime(String startTime, String endTime) {

List<Float> data= mySQLMapper.findByStartEndTime(startTime, endTime);

return data;

}

@Override

public List<Float> findByHive(String startTime, String endTime) {

long currentTimeMillis = System.currentTimeMillis();

List<Float> allHiveData = hiveMapper.findByHive(startTime, endTime);

long queryTimeMills = System.currentTimeMillis();

log.info("select from hive cost: {} s", (queryTimeMills - currentTimeMillis) / 1000);

return allHiveData;

}

}
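The Hive method above measures elapsed time with two `System.currentTimeMillis()` calls and reports whole seconds, so any query faster than one second shows up as 0. A minimal sketch of a reusable timer that keeps millisecond resolution is given here; `QueryTimer` and `Timed` are hypothetical names, and the lambda in `main` merely stands in for the mapper call.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical helper that runs a query and reports how long it took. */
final class QueryTimer {

    /** Pairs a query result with its elapsed time in milliseconds. */
    static final class Timed<T> {
        final T value;
        final long elapsedMillis;

        Timed(T value, long elapsedMillis) {
            this.value = value;
            this.elapsedMillis = elapsedMillis;
        }
    }

    /** Runs the supplier once and measures it with the monotonic nanoTime clock. */
    static <T> Timed<T> time(Supplier<T> query) {
        long start = System.nanoTime();
        T value = query.get();
        long elapsedMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        return new Timed<>(value, elapsedMillis);
    }

    public static void main(String[] args) {
        // Stand-in for hiveMapper.findByHive(startTime, endTime).
        Timed<List<Float>> result = time(() -> Arrays.asList(1.5f, 2.5f));
        System.out.println("rows=" + result.value.size() + ", cost=" + result.elapsedMillis + " ms");
    }
}
```

`System.nanoTime()` is used here because it is unaffected by wall-clock adjustments, which makes it the usual choice for measuring durations.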

Testing confirms that packaging with mvn package is sufficient.
