我们都知道Spark比Hadoop的MR计算速度更快。到底快多少呢?我一直比较疑惑,会有官网说的那么夸张吗。

今天就拿基于Spark的Spark SQL和基于MR的Hive比较一下,因为Spark SQL也兼容了HiveQL,我们就可以通过运行相同的HiveQL语句,比较直观的看出到底快多少了。

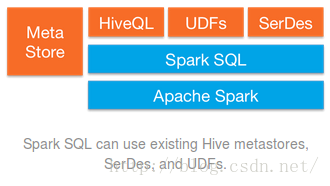

Spark SQL只要在编译的时候引入Hive支持,就可以支持Hive表访问,UDF,SerDe,以及HiveQL/HQL--引自《Spark快速大数据分析》

Hive一般在工作站上运行,它把SQL查询转化为一系列在Hadoop集群上运行的MR作业--引自《Hadoop权威指南》

用的文件系统都是HDFS,比较的是第二条sql语句

1.运行spark-sql shell

guo@drguo:/opt/spark-1.6.1-bin-hadoop2.6/bin$ spark-sql

spark-sql> create external table cn(x bigint, y bigint, z bigint, k bigint)

> row format delimited fields terminated by ','

> location '/cn';

OK

Time taken: 0.876 seconds

spark-sql> create table p as select y, z, sum(k) as t

> from cn where x>=20141228 and x<=20150110 group by y, z;

Time taken: 20.658 seconds 查看jobs,用了15s,为什么上面显示花了接近21s?我也不清楚,先放下不管。

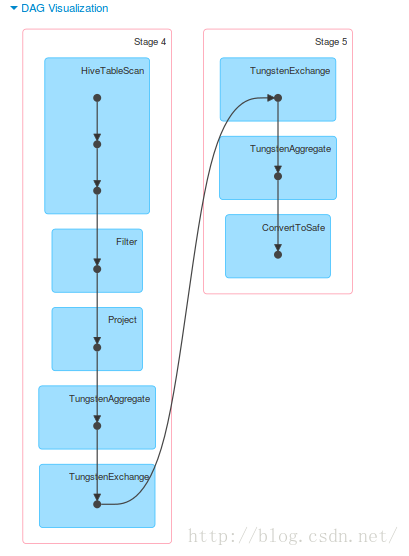

再看一下它的DAG图

2.运行Hive

guo@drguo:~$ hive

hive> create external table cn(x bigint, y bigint, z bigint, k bigint)

> row format delimited fields terminated by ','

> location '/cn';

OK

Time taken: 0.752 seconds

hive> create table p as select y, z, sum(k) as t

> from cn where x>=20141228 and x<=20150110 group by y, z;

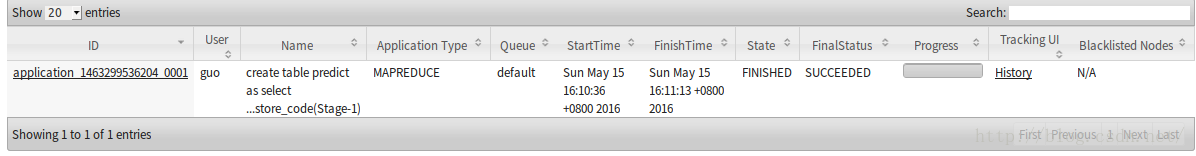

Query ID = guo_20160515161032_2e035fc2-6214-402a-90dd-7acda7d638bf

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1463299536204_0001, Tracking URL = http://drguo:8088/proxy/application_1463299536204_0001/

Kill Command = /opt/Hadoop/hadoop-2.7.2/bin/hadoop job -kill job_1463299536204_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-05-15 16:10:52,353 Stage-1 map = 0%, reduce = 0%

2016-05-15 16:11:03,418 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 8.65 sec

2016-05-15 16:11:13,257 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 13.99 sec

MapReduce Total cumulative CPU time: 13 seconds 990 msec

Ended Job = job_1463299536204_0001

Moving data to: hdfs://drguo:9000/user/hive/warehouse/predict

Table default.predict stats: [numFiles=1, numRows=1486, totalSize=15256, rawDataSize=13770]

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 13.99 sec HDFS Read: 16551670 HDFS Write: 15332 SUCCESS

Total MapReduce CPU Time Spent: 13 seconds 990 msec

OK

Time taken: 42.887 seconds

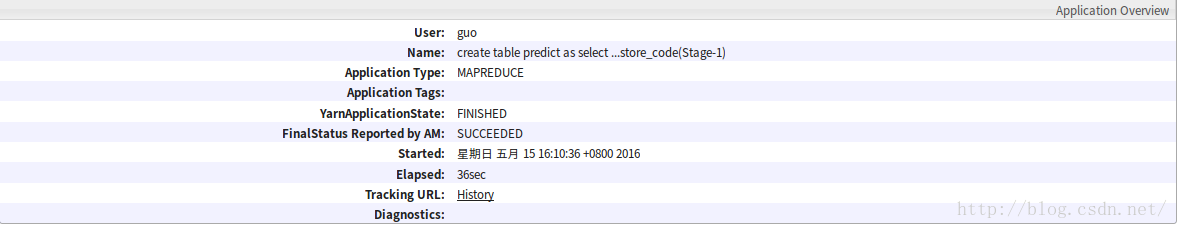

花了接近43s。再去RM看一下,花了36s

结果非常明显

如果按shell里显示花的时间算,21:43

按网页里显示花的时间算,15:36

差不多一倍,据说即将发布的Spark2.0速度更快。

那么问题来了,Spark什么时候会完全取代MR?

后补:数据量550734,count时间为25秒比2.7秒

hive> show tables;

OK

t_log_2016

test

Time taken: 0.333 seconds, Fetched: 2 row(s)

hive> select count(1) from t_log_2016;

Query ID = hdfs_20161013143029_f605d459-878f-495f-95bf-3a3960537d00

Total jobs = 1

Launching Job 1 out of 1

Tez session was closed. Reopening...

Session re-established.

Status: Running (Executing on YARN cluster with App id application_1475896673093_0012)

--------------------------------------------------------------------------------

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

Map 1 .......... SUCCEEDED 12 12 0 0 0 0

Reducer 2 ...... SUCCEEDED 1 1 0 0 0 0

--------------------------------------------------------------------------------

VERTICES: 02/02 [==========================>>] 100% ELAPSED TIME: 16.56 s

--------------------------------------------------------------------------------

OK

550734

Time taken: 25.034 seconds, Fetched: 1 row(s)

spark-sql> show tables;

t_log_2016 false

test false

Time taken: 1.964 seconds, Fetched 2 row(s)

spark-sql> select count(*) from t_log_2016;

550734

Time taken: 2.715 seconds, Fetched 1 row(s)

————————————————

版权声明:本文为CSDN博主「光于前裕于后」的原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/dr_guo/article/details/52775070

最后

以上就是沉默鞋垫最近收集整理的关于Spark SQL与Hive On MapReduce速度比较的全部内容,更多相关Spark内容请搜索靠谱客的其他文章。

发表评论 取消回复