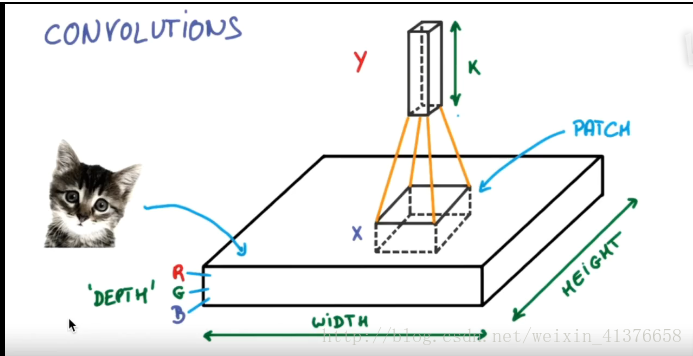

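First, a supplementary note on the output shapes of the layers in the previous CNN post. `X_train` has shape (-1, 1, 28, 28): -1 stands for the number of images (inferred at reshape time), 1 is the channel count (the images are grayscale), and 28x28 is the size of each image. After conv1 the output shape is (32, 28, 28). Leaving the number of images aside, 32 is the number of channels and the image size is unchanged: with 'same' padding, convolution does not change the width and height, it only increases the depth (the number of channels). After pooling the shape is (32, 14, 14): the channel count stays the same and the image gets smaller. The whole process makes the data deeper while shrinking it spatially. As the figure shows, the channel count k grows while width and height shrink.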
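
The shape bookkeeping above can be checked with a few lines of plain Python. This is a minimal sketch, not the code from the CNN post: the helper names `conv2d_output_shape` and `max_pool_output_shape` are made up for illustration, and the kernel size of 5 is an assumption (with 'same' padding it does not affect the result).

```python
def conv2d_output_shape(in_shape, filters, kernel_size, padding="same", stride=1):
    """Return (channels, height, width) after a 2D convolution (channels-first)."""
    _, height, width = in_shape
    if padding == "same":
        # 'same' padding keeps ceil(size / stride); with stride 1 the size is unchanged
        out_h = -(-height // stride)
        out_w = -(-width // stride)
    elif padding == "valid":
        # no padding: the kernel must fit entirely inside the image
        out_h = (height - kernel_size) // stride + 1
        out_w = (width - kernel_size) // stride + 1
    else:
        raise ValueError("padding must be 'same' or 'valid'")
    return (filters, out_h, out_w)


def max_pool_output_shape(in_shape, pool_size=2):
    """Return the shape after non-overlapping max pooling (stride == pool_size)."""
    channels, height, width = in_shape
    return (channels, height // pool_size, width // pool_size)


image = (1, 28, 28)  # one grayscale 28x28 image, batch dimension left out
after_conv1 = conv2d_output_shape(image, filters=32, kernel_size=5)  # kernel size assumed
after_pool1 = max_pool_output_shape(after_conv1)

print(after_conv1)  # (32, 28, 28)
print(after_pool1)  # (32, 14, 14)
```

Switching to `padding="valid"` in the same helper gives (32, 24, 24), which shows that the "size is unchanged" claim depends on the padding mode.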

**RNN_classifier**: Recurrent Neural Network (RNN)

First, get familiar with what an RNN is, and with LSTM (Long Short-Term Memory).

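a. First, add the RNN layer. Its input is the training data, and its output size is set by CELL_SIZE.

b. Then add the output layer, with softmax as the activation function.

c. Train and evaluate.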
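
To make steps a and b concrete, here is a rough NumPy sketch of what a simple RNN layer followed by a softmax output layer computes on one batch. It is an illustration under assumptions, not Keras internals: the weights are random and untrained, and the names `W_x`, `W_h`, `W_out`, and `rnn_classify` are invented for this example. Each image is read as a sequence of 28 rows (time steps) with 28 pixels each, and only the hidden state after the last row is passed to the output layer.

```python
import numpy as np

TIME_STEPS, INPUT_SIZE, CELL_SIZE, OUTPUT_SIZE = 28, 28, 50, 10

rng = np.random.default_rng(0)
# Random, untrained weights: for illustrating shapes and data flow only
W_x = rng.normal(scale=0.1, size=(INPUT_SIZE, CELL_SIZE))    # input -> hidden
W_h = rng.normal(scale=0.1, size=(CELL_SIZE, CELL_SIZE))     # hidden -> hidden
b_h = np.zeros(CELL_SIZE)
W_out = rng.normal(scale=0.1, size=(CELL_SIZE, OUTPUT_SIZE)) # hidden -> classes
b_out = np.zeros(OUTPUT_SIZE)


def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def rnn_classify(images):
    """Map (batch, TIME_STEPS, INPUT_SIZE) images to (batch, OUTPUT_SIZE) probabilities."""
    h = np.zeros((images.shape[0], CELL_SIZE))  # initial hidden state
    for t in range(TIME_STEPS):
        x_t = images[:, t, :]                   # one image row per time step
        h = np.tanh(x_t @ W_x + h @ W_h + b_h)  # recurrent update
    return softmax(h @ W_out + b_out)           # output layer on the last state


fake_images = rng.random((4, TIME_STEPS, INPUT_SIZE))  # stand-in for 4 normalized images
probs = rnn_classify(fake_images)
print(probs.shape)
```

The result has one row of 10 class probabilities per image, and each row sums to 1. Training (step c) is what adjusts the weights so that these probabilities become meaningful.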

    import numpy as np
    np.random.seed(1337)  # for reproducibility
    from keras.datasets import mnist
    from keras.utils import np_utils
    from keras.models import Sequential
    from keras.layers import SimpleRNN, Activation, Dense
    from keras.optimizers import Adam

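    TIME_STEPS = 28   # same as the height of the image (one row per time step)
    INPUT_SIZE = 28   # same as the width of the image (pixels per row)
    BATCH_SIZE = 50   # number of images per training step
    BATCH_INDEX = 0   # start position of the current batch in X_train
    OUTPUT_SIZE = 10  # number of classes (digits 0-9)
    CELL_SIZE = 50    # number of units in the RNN layer
    LR = 0.001        # learning rate for Adam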

    # download MNIST to '~/.keras/datasets/' the first time this is called
    # X_train shape (60000, 28, 28), y_train shape (60000,)
    # X_test shape (10000, 28, 28), y_test shape (10000,)
    (X_train, y_train), (X_test, y_test) = mnist.load_data()

    # data pre-processing
    X_train = X_train.reshape(-1, 28, 28) / 255.  # normalize to [0, 1]
    X_test = X_test.reshape(-1, 28, 28) / 255.    # normalize to [0, 1]
    y_train = np_utils.to_categorical(y_train, num_classes=10)  # one-hot labels
    y_test = np_utils.to_categorical(y_test, num_classes=10)

    # build RNN model
    model = Sequential()

    # RNN cell
    model.add(SimpleRNN(
        # for batch_input_shape, if using tensorflow as the backend, we have to put None for the batch_size.
        # Otherwise, model.evaluate() will get error.
        batch_input_shape=(None, TIME_STEPS, INPUT_SIZE),  # Or: input_dim=INPUT_SIZE, input_length=TIME_STEPS,
        units=CELL_SIZE,  # `units` is the Keras 2 name for the former `output_dim`
        unroll=True,
    ))

    # output layer
    model.add(Dense(OUTPUT_SIZE))
    model.add(Activation('softmax'))

    # optimizer
    adam = Adam(LR)
    model.compile(optimizer=adam,
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])

    # training
    for step in range(4001):
        # data shape = (batch_num, steps, inputs/outputs)
        X_batch = X_train[BATCH_INDEX: BATCH_INDEX+BATCH_SIZE, :, :]
        Y_batch = y_train[BATCH_INDEX: BATCH_INDEX+BATCH_SIZE, :]
        cost = model.train_on_batch(X_batch, Y_batch)
        BATCH_INDEX += BATCH_SIZE
        BATCH_INDEX = 0 if BATCH_INDEX >= X_train.shape[0] else BATCH_INDEX

        if step % 500 == 0:
            cost, accuracy = model.evaluate(X_test, y_test, batch_size=y_test.shape[0], verbose=False)
            print('test cost: ', cost, 'test accuracy: ', accuracy)
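
The training loop walks through `X_train` in slices of `BATCH_SIZE` and jumps back to the start once the end is reached. The sketch below reproduces only that index bookkeeping, without Keras, to show how many passes over the data 4001 steps amount to. The generator name `batch_starts` is hypothetical; the constants are the ones used in the post.

```python
def batch_starts(num_samples, batch_size, num_steps):
    """Yield the start index used at each training step, wrapping at the end of the data."""
    index = 0
    for _ in range(num_steps):
        yield index
        index += batch_size
        if index >= num_samples:
            index = 0  # same reset rule as BATCH_INDEX in the training loop


NUM_SAMPLES = 60000  # size of the MNIST training set
BATCH_SIZE = 50
NUM_STEPS = 4001

starts = list(batch_starts(NUM_SAMPLES, BATCH_SIZE, NUM_STEPS))
steps_per_epoch = NUM_SAMPLES // BATCH_SIZE
num_evaluations = sum(1 for step in range(NUM_STEPS) if step % 500 == 0)

print(steps_per_epoch)             # 1200 steps for one full pass
print(starts[:3])                  # [0, 50, 100]
print(starts[steps_per_epoch])     # 0, the index wraps after one pass
print(NUM_STEPS / steps_per_epoch) # a little over 3.3 passes in total
print(num_evaluations)             # 9 evaluations on the test set
```

So the model sees each training image three or four times, and the test set is evaluated at steps 0, 500, ..., 4000.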

Original source:
