ELK System

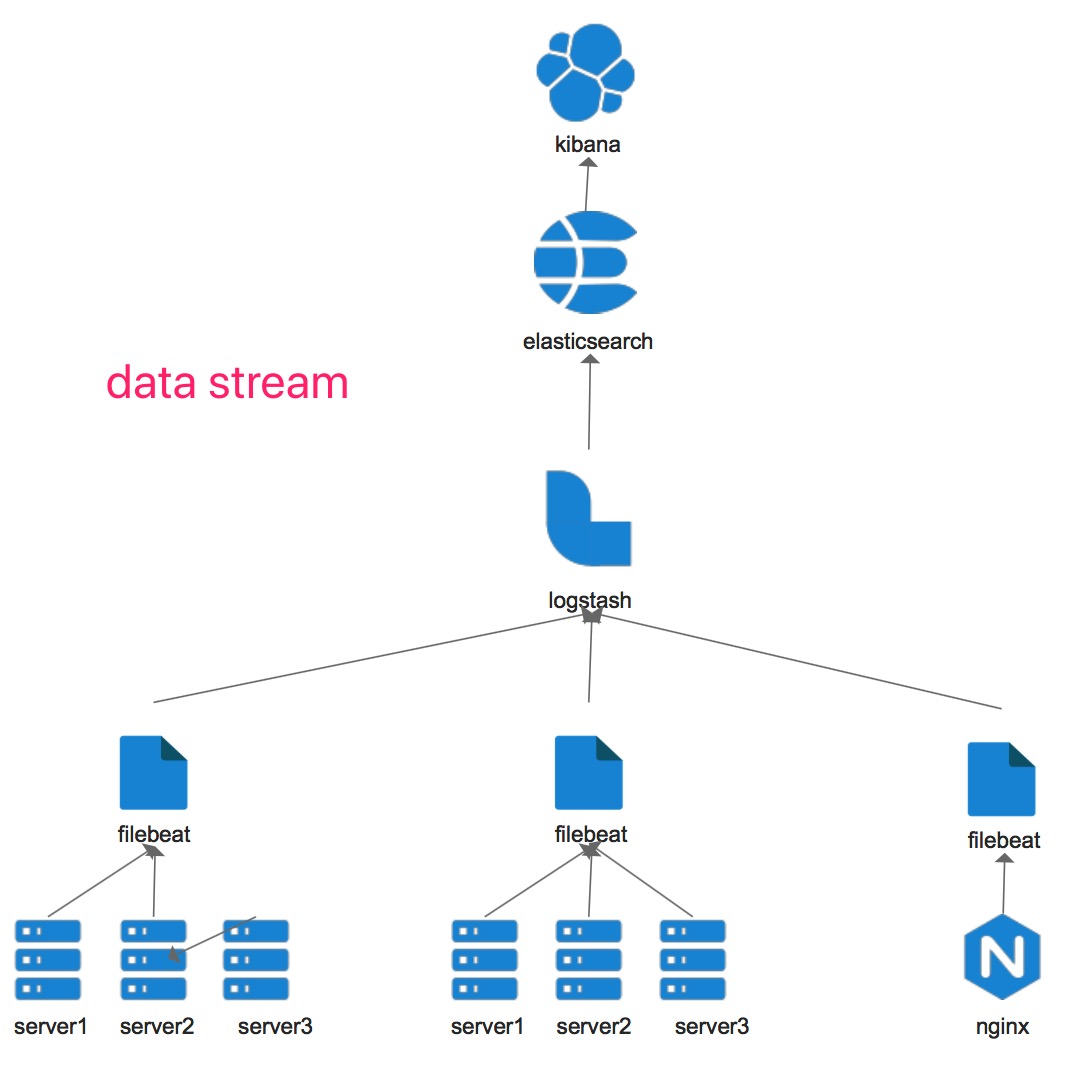

The ELK stack (Elastic Stack) is a popular open-source solution for analyzing web logs. The ELK stack runs on a server separate from your application, and Filebeat ships the application server logs and the Nginx logs to it. At the bank where I work, we use ELK to:

- receive the logs of every microservice

- receive Nginx logs

- process some of the collected logs

- provide alerting

How the three ELK components relate

```mermaid
graph TB
  a("Logstash: receive") --> b("Elasticsearch: store")
  b --> c("Kibana: display")
```

Elasticsearch

Elasticsearch is a full-text search engine. We use ES to index logs and text.
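Full-text search rests on an inverted index: a map from each term to the documents that contain it. The toy Python sketch below only illustrates that idea. Elasticsearch actually builds its indexes with Lucene. The tokenizer here (lowercase, then split on non-word characters) is a crude stand-in for a real analyzer, and `tokenize`, `build_index` and `search` are hypothetical names.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    # Crude analyzer stand-in: lowercase, split on non-word characters.
    return [t for t in re.split(r"\W+", text.lower()) if t]


def build_index(docs: Dict[str, str]) -> Dict[str, Set[str]]:
    # Inverted index: term -> set of document ids containing that term.
    index: Dict[str, Set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    # This toy uses AND semantics: return docs containing every query term.
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


if __name__ == "__main__":
    idx = build_index({
        "1": "POST /galaxy-index/user/data/storm 500",
        "2": "GET /index.html 200",
    })
    print(sorted(search(idx, "index")))
```

A lookup touches only the posting sets of the query terms rather than scanning every log line. That is why indexed log search stays fast as the volume grows.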

Logstash

Logstash is a data-processing engine. We use Logstash to receive the microservice logs and the Nginx access logs.

Kibana

We use Kibana to analyze the data and present it to users as charts.

Install ELK

My machines are on Aliyun (Alibaba Cloud) and run CentOS 7. ELK is installed on them with yum (the details are in another of my notes).

Configure ELK

Filebeat configuration

We use Filebeat to ship the server logs to Logstash. Filebeat does only light processing of the data.
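The main processing Filebeat does in this setup is multiline merging. With `negate: true` and `match: after`, a line that does not match the date pattern is appended to the event started by the last line that did. A Java stack trace therefore stays in one event. Below is a minimal Python sketch of that rule. It assumes a simplified date regex in place of the full one in filebeat.yml, and `merge_multiline` and `DATE` are hypothetical names.

```python
import re
from typing import Iterable, List

# Simplified version of the date regex in filebeat.yml (assumption):
# a line containing a yyyy-mm-dd date starts a new event.
DATE = re.compile(r"[0-9]{4}-(0[1-9]|1[0-2])-(0[1-9]|[12][0-9]|3[01])")


def merge_multiline(lines: Iterable[str], pattern: re.Pattern = DATE) -> List[str]:
    """Mimic multiline negate: true + match: after.

    A line that does not match `pattern` is appended (with a newline) to the
    event begun by the most recent matching line. The pattern is searched
    anywhere in the line, not anchored at the start.
    """
    events: List[str] = []
    for line in lines:
        if pattern.search(line) or not events:
            # Matching line (or the very first line): start a new event.
            events.append(line)
        else:
            # Continuation line, e.g. a stack-trace frame.
            events[-1] += "\n" + line
    return events


if __name__ == "__main__":
    raw = [
        "2018-07-09 14:31:37 ERROR [main] Foo --> boom",
        "java.lang.RuntimeException: boom",
        "\tat Foo.bar(Foo.java:10)",
        "2018-07-09 14:31:38 INFO [main] Foo --> ok",
    ]
    for event in merge_multiline(raw):
        print(repr(event))
```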

This is filebeat.yml:

```yaml
# filebeat.inputs in Filebeat 6.3 and later; prospectors is the older, deprecated name
filebeat.prospectors:
- type: log
  # Enable this prospector
  enabled: true
  # Paths to scan
  paths:
    - /var/app/logs/index-server/index-server-info.log
  # Multiline handling: lines that do not match the date pattern
  # are appended to the preceding line that does
  multiline:
    pattern: '([0-9]{3}[1-9]|[0-9]{2}[1-9][0-9]{1}|[0-9]{1}[1-9][0-9]{2}|[1-9][0-9]{3})-(((0[13578]|1[02])-(0[1-9]|[12][0-9]|3[01]))|((0[469]|11)-(0[1-9]|[12][0-9]|30))|(02-(0[1-9]|[1][0-9]|2[0-8])))'
    negate: true
    match: after
  # Custom fields
  fields:
    service_name: index-server
    log_type: info
    data_type: log

- type: log
  # Enable this prospector
  enabled: true
  # Paths to scan
  paths:
    - /var/app/logs/elastic-server/elastic-server-info.log
  # Multiline handling (same date pattern as above)
  multiline:
    pattern: '([0-9]{3}[1-9]|[0-9]{2}[1-9][0-9]{1}|[0-9]{1}[1-9][0-9]{2}|[1-9][0-9]{3})-(((0[13578]|1[02])-(0[1-9]|[12][0-9]|3[01]))|((0[469]|11)-(0[1-9]|[12][0-9]|30))|(02-(0[1-9]|[1][0-9]|2[0-8])))'
    negate: true
    match: after
  # Custom fields
  fields:
    service_name: elastic-server
    log_type: info
    data_type: log

- type: log
  # Enable this prospector
  enabled: true
  # Paths to scan
  paths:
    - /var/log/nginx/access.log
  # Custom fields
  fields:
    service_name: nginx-system
    log_type: info
    data_type: log

# Output
output.logstash:
  # Note: 9600 is also the default port of Logstash's monitoring API; Beats inputs conventionally use 5044
  hosts: ["172.17.70.224:9600"]
```

Logstash

Logstash processes the data with filter plugins.

Grok parses arbitrary text and gives it structure. The plugin ships with about 120 patterns for common log formats.
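Grok patterns such as `%{TIMESTAMP_ISO8601:logdate}` are named regular expressions, and the match is stored in the field named after the colon. The sketch below uses Python's `re` module to approximate the two grok patterns and the `service_name` routing in the filter that follows. It is a rough illustration only. The regexes standing in for TIMESTAMP_ISO8601, LOGLEVEL and COMBINEDAPACHELOG are simplified assumptions, not grok's real definitions, and `parse` is a hypothetical helper. Python also writes named groups as `(?P<name>...)` where grok uses `(?<name>...)`.

```python
import re
from typing import Optional

# Simplified stand-ins for grok's TIMESTAMP_ISO8601 and LOGLEVEL (assumptions).
TIMESTAMP = r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}(?::\d{2}(?:[.,]\d+)?)?(?:Z|[+-]\d{2}:?\d{2})?"
LOGLEVEL = r"TRACE|DEBUG|INFO|WARN(?:ING)?|ERROR|FATAL"

# Application log: "<timestamp> <level> [<thread>] <class> --> <message>"
# (no re.DOTALL, so the message capture stops at the first newline)
SERVER_LINE = re.compile(
    rf"(?P<logdate>{TIMESTAMP}) (?P<level>{LOGLEVEL}) "
    r"\[(?P<thread>\S+)\]\s(?P<class>\S+) --> (?P<message>.*)"
)

# Nginx "combined" access-log format plus the trailing quoted IP seen in the sample.
NGINX_LINE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) (?P<referrer>"[^"]*") (?P<agent>"[^"]*") '
    r'"(?P<host>[^"]*)"'
)


def parse(event: dict) -> Optional[dict]:
    """Pick a pattern by fields.service_name, mirroring the =~ conditions.

    Simplification: uses if/elif where the config has two independent ifs.
    Returns None for an unrouted service or a failed match; on a failed match
    Logstash would instead tag the event with _grokparsefailure.
    """
    name = event["fields"]["service_name"]
    if re.search(r".*system", name):
        pattern = NGINX_LINE
    elif re.search(r".*server", name):
        pattern = SERVER_LINE
    else:
        return None
    match = pattern.search(event["message"])
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = {
        "fields": {"service_name": "nginx-system"},
        "message": '100.116.224.83 - jbzm [09/Jul/2018:14:31:37 +0800] '
                   '"POST /galaxy-index/user/data/storm HTTP/1.1" 500 158 "-" '
                   '"Java/1.8.0_60" "123.103.86.50"',
    }
    print(parse(sample)["response"])  # 500
```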

input {

# 指定输入源-beats

beats {

host => "172.17.70.224"

port => "9600"

}

}

filter {

if [fields][service_name] =~ ".*system" {

grok {

match => { "message" => "%{COMBINEDAPACHELOG} "%{IPORHOST:host}"" }

}

}

if [field][service_name] =~ ".*server"{

grok {

match => { "message" => "%{TIMESTAMP_ISO8601:logdate} %{LOGLEVEL:level} [(?<thread>S+)]s(?<class>S+) --> (?<message>.*)" }

}

}

# 将 kibana 的查询时间改成日志的打印时间,方便之后查询,如果不改的话,kibana会有自己的时间,导致查询不方便

date {

match => ["logdate", "yyyy-MM-dd HH:mm:ss Z", "ISO8601"]

target => "@timestamp"

}

}

# 输出设置

output {

if [fields][data_type] == "log" {

# 输出到控制台

stdout {

codec => rubydebug

}

# 输出到elasticsearch,提供给kibana进行搜索

elasticsearch {

hosts => [ "172.17.70.224:9200" ]

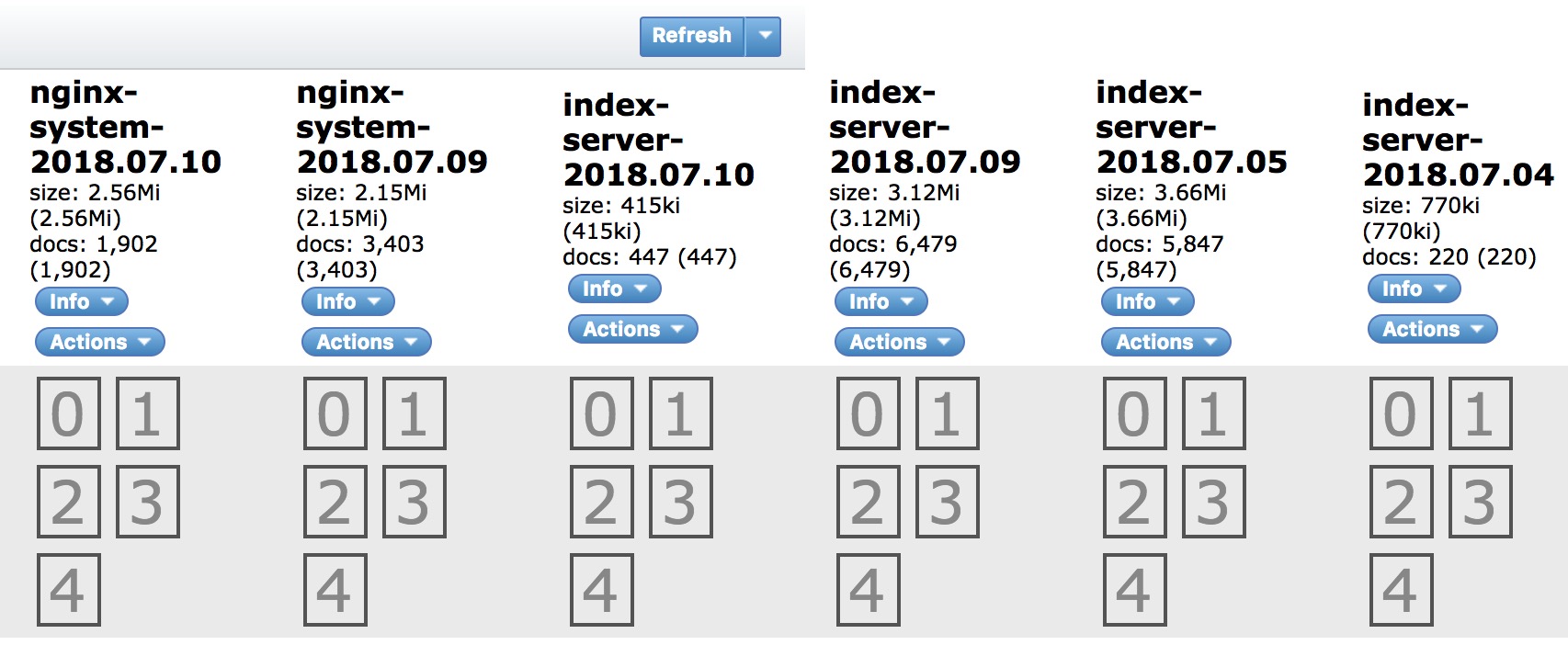

index => "%{[fields][service_name]}-%{+YYYY.MM.dd}" # 在es中存储的索引格式,按照“服务名-日期”进行索引

}

}

}Search log by kibana

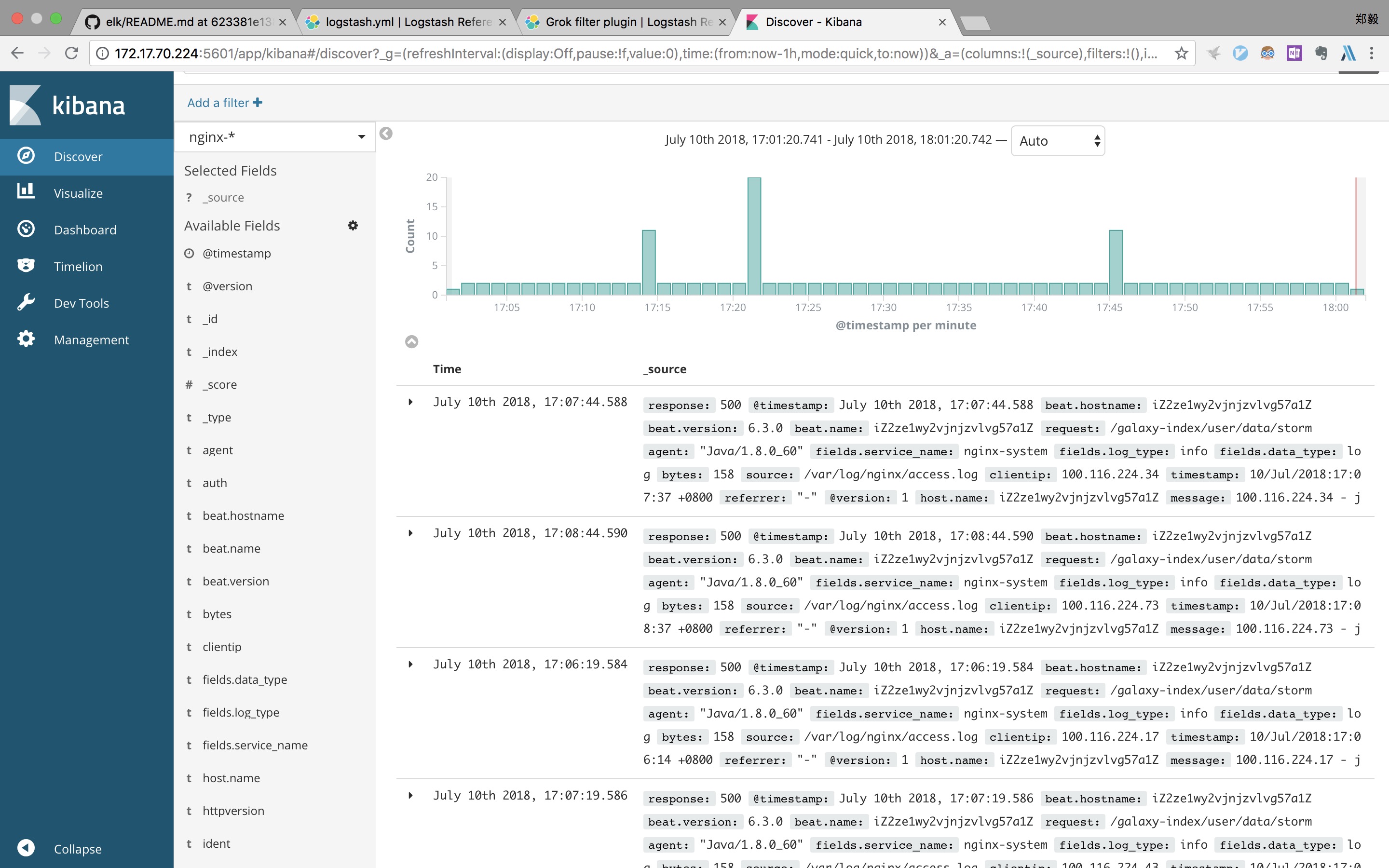

We use Logstash to turn the logs into JSON, Elasticsearch to index them, and finally Kibana to view them with queries.
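The `index` setting in the Logstash output creates one index per service per day. Logstash fills `%{+YYYY.MM.dd}` from the event's `@timestamp` in UTC. With servers on UTC+8, an event logged shortly after local midnight therefore lands in the previous day's index. Below is a minimal sketch of that naming rule. `daily_index` is a hypothetical helper, and it assumes `@timestamp` has the format shown in the sample Nginx document.

```python
from datetime import datetime, timezone


def daily_index(event: dict) -> str:
    """Approximate "%{[fields][service_name]}-%{+YYYY.MM.dd}" for one event."""
    service = event["fields"]["service_name"]
    # @timestamp looks like "2018-07-09T09:06:59.100Z" and is always UTC.
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    return f"{service}-{ts:%Y.%m.%d}"


if __name__ == "__main__":
    print(daily_index({"fields": {"service_name": "nginx-system"},
                       "@timestamp": "2018-07-09T09:06:59.100Z"}))
    # nginx-system-2018.07.09
```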

In the Kibana web UI, the log data can be shown on dashboards.
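Kibana filters and sorts on `@timestamp`, so it matters what ends up in that field. The `date` filter in the Logstash config reads only `logdate`. The application-log pattern produces that field, but the Nginx pattern does not, so Nginx events keep the time Filebeat read them. The sample document below shows this: `timestamp` is 14:31:37 +0800 (06:31:37 UTC), while `@timestamp` is 09:06:59 UTC. One option, not part of the original setup, would be a second pattern such as `match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]` in a `date` filter. The Python sketch below shows the same conversion. `nginx_ts_to_utc` is a hypothetical name, and `%b` assumes an English (C) locale.

```python
from datetime import datetime, timezone

# Nginx/Apache access-log time, e.g. "09/Jul/2018:14:31:37 +0800"
NGINX_TS_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def nginx_ts_to_utc(ts: str) -> str:
    """Convert an access-log timestamp to the UTC form used for @timestamp."""
    parsed = datetime.strptime(ts, NGINX_TS_FORMAT)  # timezone-aware because of %z
    utc = parsed.astimezone(timezone.utc)
    # Millisecond precision with a trailing "Z", like "2018-07-09T09:06:59.100Z"
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"


if __name__ == "__main__":
    print(nginx_ts_to_utc("09/Jul/2018:14:31:37 +0800"))
    # 2018-07-09T06:31:37.000Z
```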

Nginx data format

Here is a sample Nginx log document as stored in Elasticsearch. Grok has split the raw log line into separate fields.

```json
{
  "_index": "nginx-system-2018.07.09",
  "_type": "doc",
  "_id": "KoNVfmQBoNfeGOQ6f_ja",
  "_version": 1,
  "_score": 1,
  "_source": {
    "offset": 203925,
    "clientip": "100.116.224.83",
    "source": "/var/log/nginx/access.log",
    "verb": "POST",
    "auth": "jbzm",
    "response": "500",
    "fields": {
      "log_type": "info",
      "data_type": "log",
      "service_name": "nginx-system"
    },
    "beat": {
      "hostname": "iZ2ze1wy2vjnjzvlvg57a1Z",
      "name": "iZ2ze1wy2vjnjzvlvg57a1Z",
      "version": "6.3.0"
    },
    "input": {
      "type": "log"
    },
    "httpversion": "1.1",
    "@timestamp": "2018-07-09T09:06:59.100Z",
    "timestamp": "09/Jul/2018:14:31:37 +0800",
    "bytes": "158",
    "message": "100.116.224.83 - jbzm [09/Jul/2018:14:31:37 +0800] \"POST /galaxy-index/user/data/storm HTTP/1.1\" 500 158 \"-\" \"Java/1.8.0_60\" \"123.103.86.50\"",
    "ident": "-",
    "agent": "\"Java/1.8.0_60\"",
    "prospector": {
      "type": "log"
    },
    "request": "/galaxy-index/user/data/storm",
    "host": {
      "name": "iZ2ze1wy2vjnjzvlvg57a1Z"
    },
    "@version": "1",
    "referrer": "\"-\"",
    "tags": [
      "beats_input_codec_plain_applied"
    ]
  }
}
```

Finally

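That is all of 闪闪蜜粉's recently compiled notes on hands-on ELK: collecting Nginx system logs and centralizing microservice logs. For more on ELK, see the other articles on 靠谱客.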
