我是靠谱客的博主 雪白黑夜,这篇文章主要介绍clickhouse 测试数据集在线插入报错 Failed to read all the data from the reader, missing 10485760 bytes,现在分享给大家,希望可以做个参考。

今天在向 ClickHouse 测试表插入数据集时,报了以下错误:

ClickHouse.localdomain :) INSERT INTO hits_NoPrimaryKey SELECT

intHash32(c11::UInt64) AS UserID,

c15 AS URL,

c5 AS EventTime

FROM url('https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz')

WHERE URL != '';

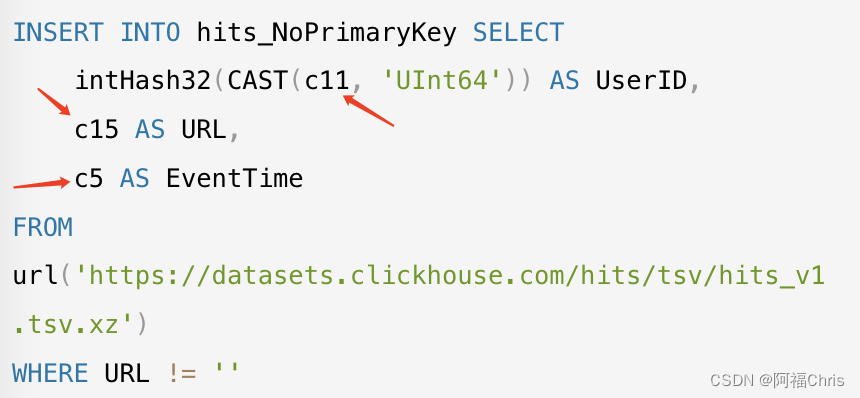

INSERT INTO hits_NoPrimaryKey SELECT

intHash32(CAST(c11, 'UInt64')) AS UserID,

c15 AS URL,

c5 AS EventTime

FROM url('https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz')

WHERE URL != ''

Query id: 40869efe-bbf8-41eb-82f9-4200cb72948a

→ Progress: 446.34 thousand rows, 843.07 MB (30.34 thousand rows/s., 57.31 MB/s.) (0.0 CPU, 117.94 MB RAM)

0 rows in set. Elapsed: 14.711 sec. Processed 446.34 thousand rows, 843.07 MB (30.34 thousand rows/s., 57.31 MB/s.)

Received exception from server (version 22.9.1):

Code: 49. DB::Exception: Received from localhost:9000. DB::Exception: Failed to read all the data from the reader, missing 10485760 bytes: While executing ParallelParsingBlockInputFormat: While executing URL. (LOGICAL_ERROR)

主要关注错误信息:DB::Exception: Failed to read all the data from the reader, missing 10485760 bytes。预测可能是因为网络不稳定,数据下载波动导致的。因为多次执行发现有 timeout 字眼:

Received exception from server (version 22.9.1):

Code: 636. DB::Exception: Received from localhost:9000. DB::Exception: Cannot extract table structure from TSV format file:

Poco::Exception. Code: 1000, e.code() = 0, Timeout: connect timed out: 172.66.43.7:443 (version 22.9.1.2514 (official build))

You can specify the structure manually. (CANNOT_EXTRACT_TABLE_STRUCTURE)

所以遇到这网络的问题,可以将数据 wget 到本地,然后采用本地文件入库的方式导入,例如:

wget https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz

xz -d https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz

clickhouse-client --query "INSERT INTO hits_NoPrimaryKey FORMAT TSV" --max_insert_block_size=100000 --password < hits_v1.tsv

通过上面的 clickhouse 客户端导入,肯定会报错,因为从上面的 url 函数可以看出,其实它只选择了 3 列,所以我们还需要做一些改变才能在本地导入:

这里我们对数据进行筛选,我使用 cut 语句选择出这三列的数据:

cut -f5,11,15 < hits_v1.tsv > result.tsv # 先选择出这 3 列数据

重建一下表,修改一下字段顺序:

CREATE TABLE hits_NoPrimaryKey

(

`EventTime` DateTime,

`UserID` UInt32,

`URL` String

)

ENGINE = MergeTree

PRIMARY KEY tuple();

使用客户端导入数据:

clickhouse-client --query "INSERT INTO hits_NoPrimaryKey FORMAT TSV" --max_insert_block_size=100000 --password < result.tsv

查询入库数据量:

ClickHouse.localdomain :) select count(*) from hits_NoPrimaryKey;

SELECT count(*)

FROM hits_NoPrimaryKey

Query id: 104a30dc-d281-463d-b4b2-3a9c9cc406a4

┌─count()─┐

│ 8873898 │

└─────────┘

1 row in set. Elapsed: 0.001 sec.

如果对您有用,麻烦动动小手【关注】、【点赞】、【收藏】,谢谢~

End~

最后

以上就是雪白黑夜最近收集整理的关于clickhouse 测试数据集在线插入报错 Failed to read all the data from the reader, missing 10485760 bytes的全部内容,更多相关clickhouse内容请搜索靠谱客的其他文章。

本图文内容来源于网友提供,作为学习参考使用,或来自网络收集整理,版权属于原作者所有。

发表评论 取消回复