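This post by 腼腆山水 explains why a Flink job started in yarn-per-job mode can fail with "Could not instantiate JobManager", "Connection refused", and "Failed to create checkpoint storage". It is shared here as a reference.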

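The environment is a four-node CDH cluster (one Cloudera Manager master node and three big-data service nodes). While running a Flink program on YARN, I carelessly ran into the following error: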

org.apache.flink.util.FlinkException: JobMaster for job 133fa98764016aa4f4da2bc2ebcbe657 failed.

at org.apache.flink.runtime.dispatcher.Dispatcher.jobMasterFailed(Dispatcher.java:790) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.dispatcherJobFailed(Dispatcher.java:439) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.handleDispatcherJobResult(Dispatcher.java:416) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$runJob$3(Dispatcher.java:397) ~[larkdata-dispose.jar:?]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_181]

at java.util.concurrent.CompletableFuture$UniHandle.tryFire(CompletableFuture.java:797) ~[?:1.8.0_181]

at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:442) ~[?:1.8.0_181]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:404) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:197) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:74) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:154) ~[larkdata-dispose.jar:?]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:26) [larkdata-dispose.jar:?]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:21) [larkdata-dispose.jar:?]

at scala.PartialFunction$class.applyOrElse(PartialFunction.scala:123) [larkdata-dispose.jar:?]

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:21) [larkdata-dispose.jar:?]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:170) [larkdata-dispose.jar:?]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) [larkdata-dispose.jar:?]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) [larkdata-dispose.jar:?]

at akka.actor.Actor$class.aroundReceive(Actor.scala:517) [larkdata-dispose.jar:?]

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:225) [larkdata-dispose.jar:?]

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:592) [larkdata-dispose.jar:?]

at akka.actor.ActorCell.invoke(ActorCell.scala:561) [larkdata-dispose.jar:?]

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:258) [larkdata-dispose.jar:?]

at akka.dispatch.Mailbox.run(Mailbox.scala:225) [larkdata-dispose.jar:?]

at akka.dispatch.Mailbox.exec(Mailbox.scala:235) [larkdata-dispose.jar:?]

at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260) [larkdata-dispose.jar:?]

at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339) [larkdata-dispose.jar:?]

at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979) [larkdata-dispose.jar:?]

at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107) [larkdata-dispose.jar:?]

Caused by: org.apache.flink.runtime.client.JobInitializationException: Could not instantiate JobManager.

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$5(Dispatcher.java:463) ~[larkdata-dispose.jar:?]

at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]

Caused by: org.apache.flink.util.FlinkRuntimeException: Failed to create checkpoint storage at checkpoint coordinator side.

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:316) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:231) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraph.enableCheckpointing(ExecutionGraph.java:495) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraphBuilder.buildGraph(ExecutionGraphBuilder.java:347) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createExecutionGraph(SchedulerBase.java:291) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:256) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:238) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:134) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:108) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:323) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:310) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:96) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:41) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobManagerRunnerImpl.<init>(JobManagerRunnerImpl.java:141) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.DefaultJobManagerRunnerFactory.createJobManagerRunner(DefaultJobManagerRunnerFactory.java:80) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$5(Dispatcher.java:450) ~[larkdata-dispose.jar:?]

at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]

Caused by: java.net.ConnectException: Call From test-03/192.168.10.33 to test-01:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_181]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_181]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_181]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_181]

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:824) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:754) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1495) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1437) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1347) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116) ~[larkdata-dispose.jar:?]

at com.sun.proxy.$Proxy40.mkdirs(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:639) ~[larkdata-dispose.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_181]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_181]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_181]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_181]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359) ~[larkdata-dispose.jar:?]

at com.sun.proxy.$Proxy41.mkdirs(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2376) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2352) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1243) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1240) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:1257) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:1232) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:2260) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.fs.hdfs.HadoopFileSystem.mkdirs(HadoopFileSystem.java:178) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.state.filesystem.FsCheckpointStorageAccess.initializeBaseLocations(FsCheckpointStorageAccess.java:111) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:314) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:231) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraph.enableCheckpointing(ExecutionGraph.java:495) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraphBuilder.buildGraph(ExecutionGraphBuilder.java:347) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createExecutionGraph(SchedulerBase.java:291) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:256) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:238) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:134) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:108) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:323) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:310) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:96) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:41) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobManagerRunnerImpl.<init>(JobManagerRunnerImpl.java:141) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.DefaultJobManagerRunnerFactory.createJobManagerRunner(DefaultJobManagerRunnerFactory.java:80) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$5(Dispatcher.java:450) ~[larkdata-dispose.jar:?]

at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[?:1.8.0_181]

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) ~[?:1.8.0_181]

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:685) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:788) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client$Connection.access$3500(Client.java:409) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1552) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1383) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1347) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116) ~[larkdata-dispose.jar:?]

at com.sun.proxy.$Proxy40.mkdirs(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:639) ~[larkdata-dispose.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_181]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_181]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_181]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_181]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359) ~[larkdata-dispose.jar:?]

at com.sun.proxy.$Proxy41.mkdirs(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2376) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2352) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1243) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1240) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:1257) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:1232) ~[larkdata-dispose.jar:?]

at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:2260) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.fs.hdfs.HadoopFileSystem.mkdirs(HadoopFileSystem.java:178) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.state.filesystem.FsCheckpointStorageAccess.initializeBaseLocations(FsCheckpointStorageAccess.java:111) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:314) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:231) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraph.enableCheckpointing(ExecutionGraph.java:495) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.executiongraph.ExecutionGraphBuilder.buildGraph(ExecutionGraphBuilder.java:347) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createExecutionGraph(SchedulerBase.java:291) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.createAndRestoreExecutionGraph(SchedulerBase.java:256) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.SchedulerBase.<init>(SchedulerBase.java:238) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultScheduler.<init>(DefaultScheduler.java:134) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.scheduler.DefaultSchedulerFactory.createInstance(DefaultSchedulerFactory.java:108) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.createScheduler(JobMaster.java:323) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobMaster.<init>(JobMaster.java:310) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:96) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.factories.DefaultJobMasterServiceFactory.createJobMasterService(DefaultJobMasterServiceFactory.java:41) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.jobmaster.JobManagerRunnerImpl.<init>(JobManagerRunnerImpl.java:141) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.DefaultJobManagerRunnerFactory.createJobManagerRunner(DefaultJobManagerRunnerFactory.java:80) ~[larkdata-dispose.jar:?]

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$5(Dispatcher.java:450) ~[larkdata-dispose.jar:?]

at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]

2022-06-09 22:02:01,494 INFO org.apache.flink.runtime.blob.BlobServer [] - Stopped BLOB server at 0.0.0.0:33730

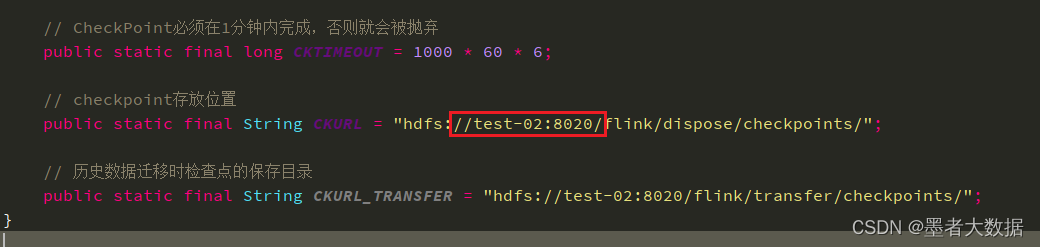

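After investigating, I found that the checkpoint address was wrong. test-01 is not an HDFS node, so nothing is listening on test-01:8020 (8020 is the default NameNode RPC port) and the `mkdirs` call for the checkpoint directory is refused. The checkpoint address has to point to one of the other three nodes instead, specifically the one where the NameNode runs.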
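A mistake like this can be caught before submitting the job by checking that the host and port in the checkpoint URI actually accept TCP connections. The following is a minimal sketch using only the Python standard library; the function names and the `/flink/checkpoints` path are hypothetical and not taken from the original job. It assumes a plain `hdfs://host[:port]/path` URI. With an HA nameservice URI the "host" is a logical name, so this check does not apply.

```python
import socket
from urllib.parse import urlparse


def namenode_endpoint(checkpoint_uri, default_port=8020):
    """Extract (host, port) from an hdfs://host[:port]/path checkpoint URI.

    8020 is used as the fallback because it is the default NameNode RPC port.
    """
    parsed = urlparse(checkpoint_uri)
    if parsed.scheme != "hdfs" or not parsed.hostname:
        raise ValueError(f"not an hdfs://host[:port]/path URI: {checkpoint_uri}")
    return parsed.hostname, parsed.port or default_port


def accepts_connections(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "Connection refused", timeouts, and unresolvable host names.
        return False


if __name__ == "__main__":
    # Hypothetical checkpoint directory on the host from the stack trace.
    uri = "hdfs://test-01:8020/flink/checkpoints"
    host, port = namenode_endpoint(uri)
    if accepts_connections(host, port):
        print(f"{host}:{port} accepts connections")
    else:
        print(f"{host}:{port} is not reachable; check the checkpoint address")
```

A successful connection only shows that something is listening on that port; it does not prove the service is a NameNode, so this is a quick sanity check rather than a full validation.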

Tip:

Be careful and double-check configured addresses!
