1. Create a Maven project and add the following dependencies (required):

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

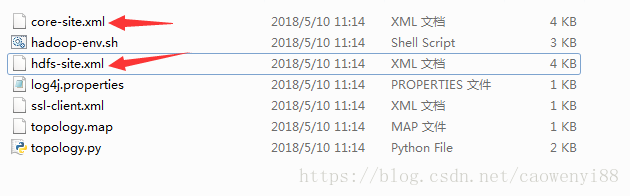

</dependency>2、获取客户端配置文件hdfs-site.xml及core-site.xml

文件目录:
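Before passing these files to Hadoop, it can be worth confirming that the copied core-site.xml really enables Kerberos and names the expected default file system. The following is a minimal sketch that uses only the JDK's built-in XML parser, assuming the standard Hadoop *-site.xml layout of `<property>` elements with `<name>` and `<value>` children. The class `ClientConfigCheck` and its methods are hypothetical, and the path simply reuses the D:/cdh_10 directory from the test program; Hadoop's own `Configuration` reads the same files at runtime, so this is only a fail-fast check.

```java
import java.io.File;
import java.util.HashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical checker for a client *-site.xml copied from the cluster. */
public final class ClientConfigCheck {

    private ClientConfigCheck() {
    }

    /** Reads every <property><name>/<value> pair from a Hadoop *-site.xml file. */
    public static Map<String, String> readProperties(File siteXml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(siteXml);
        Map<String, String> props = new HashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element property = (Element) nodes.item(i);
            NodeList names = property.getElementsByTagName("name");
            NodeList values = property.getElementsByTagName("value");
            // Skip malformed entries that lack a name or a value
            if (names.getLength() > 0 && values.getLength() > 0) {
                props.put(names.item(0).getTextContent().trim(),
                          values.item(0).getTextContent().trim());
            }
        }
        return props;
    }

    /** True if hadoop.security.authentication is set to kerberos (case-insensitive). */
    public static boolean kerberosEnabled(Map<String, String> props) {
        return "kerberos".equalsIgnoreCase(props.get("hadoop.security.authentication"));
    }

    public static void main(String[] args) throws Exception {
        // Assumed location, matching the test program; adjust to where the files were saved
        Map<String, String> props = readProperties(new File("D:/cdh_10/core-site.xml"));
        System.out.println("fs.defaultFS = " + props.get("fs.defaultFS"));
        System.out.println("Kerberos enabled: " + kerberosEnabled(props));
    }
}
```

If the second line prints `false`, the copied core-site.xml is probably not the client configuration of the Kerberos-secured cluster.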

3. Test program

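import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.security.UserGroupInformation;

public class CdhHdfsOpt {

    public static void test1(String user, String keytab, String dir) throws Exception {
        Configuration conf = new Configuration();
        // Client configuration files copied from the cluster
        conf.addResource(new Path("D:/cdh_10/hdfs-site.xml"));
        conf.addResource(new Path("D:/cdh_10/core-site.xml"));
        // Must be set before UserGroupInformation.setConfiguration(), otherwise "Can't get Kerberos realm" is thrown
        System.setProperty("java.security.krb5.conf", "D:/cdh_10/krb5.conf");
        UserGroupInformation.setConfiguration(conf);
        // Log in with the principal and its keytab file
        UserGroupInformation.loginUserFromKeytab(user, keytab);
        listDir(conf, dir);
    }

    // Prints the path of every entry directly under dir
    public static void listDir(Configuration conf, String dir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        FileStatus[] files = fs.listStatus(new Path(dir));
        for (FileStatus file : files) {
            System.out.println(file.getPath());
        }
    }

    public static void main(String[] args) {
        String user = "xxx";
        String keytab = "D:/cdh_10/xxx.keytab";
        String dir = "hdfs://ns1/data";
        try {
            test1(user, keytab, dir);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

Possible exceptions: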

1. When the following Kerberos configuration file setting is missing:

System.setProperty("java.security.krb5.conf", "D:/cdh_10/krb5.conf");

the following exception is thrown:

java.lang.IllegalArgumentException: Can't get Kerberos realm

at org.apache.hadoop.security.HadoopKerberosName.setConfiguration(HadoopKerberosName.java:65)

at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:263)

at org.apache.hadoop.security.UserGroupInformation.setConfiguration(UserGroupInformation.java:299)

at com.xxx.hdfs.CdhHdfsOpt.test1(CdhHdfsOpt.java:122)

at com.xxx.hdfs.CdhHdfsOpt.main(CdhHdfsOpt.java:141)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.security.authentication.util.KerberosUtil.getDefaultRealm(KerberosUtil.java:84)

at org.apache.hadoop.security.HadoopKerberosName.setConfiguration(HadoopKerberosName.java:63)

... 4 more

Caused by: KrbException: Cannot locate default realm

at sun.security.krb5.Config.getDefaultRealm(Config.java:1029)

... 10 more

A second example, which reads a file from Kerberos-secured HDFS and saves it to the local disk:

import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.security.UserGroupInformation;

public class HdfsKerberosTest {

    String hdfsUrl = "hdfs://192.168.10.12:8020";

    /**
     * Reads a file from HDFS.
     *
     * @param path path of the file in HDFS
     * @return an input stream for the file, or null if it could not be opened
     */
    public InputStream read(final String path) {
        try {
            Configuration conf = new Configuration();
            init(conf);
            FileSystem fs = FileSystem.get(URI.create(hdfsUrl), conf);
            return fs.open(new Path(path));
        } catch (IllegalArgumentException | IOException e) {
            System.out.println("Failed to read the file from HDFS: " + e);
        }
        return null;
    }

    public void init(final Configuration conf) throws IOException {
        // KDC host and realm; the JDK requires these two properties to be set together
        System.setProperty("java.security.krb5.kdc", "freeipa.com");
        System.setProperty("java.security.krb5.realm", "COM");
        // Alternatively, point to a krb5.conf file instead:
        // System.setProperty("java.security.krb5.conf", "D:/etl/krb5.conf");
        conf.set("hadoop.security.authentication", "kerberos");
        // Client configuration files downloaded from the HDFS cluster
        conf.addResource(new Path("D:/etl/core-site.xml"));
        conf.addResource(new Path("D:/etl/hdfs-site.xml"));
        UserGroupInformation.setConfiguration(conf);
        // Principal added in the KDC and the keytab generated for it
        UserGroupInformation.loginUserFromKeytab("duhai@COM", "D:/etl/duhai.keytab");
    }

    public static void main(final String[] args) throws IOException {
        HdfsKerberosTest test = new HdfsKerberosTest();
        // Path of the file to download
        //InputStream inStream = test.read("hdfs://10.121.8.11:8020/filesystem/etl/duhai/dw-etl-demo-1.0.0.jar");
        InputStream inStream = test.read("/etl/duhai/dw-etl-demo-1.0.0.jar!20190919-155416757");
        if (inStream == null) {
            return; // read() already reported the error
        }
        // Copy the stream to a local file in 1 KB chunks; both streams are closed automatically
        try (InputStream in = inStream;
             OutputStream outputStream = new FileOutputStream("D:/etl/aa.jar")) {
            byte[] b = new byte[1024];
            int len;
            while ((len = in.read(b)) != -1) {
                outputStream.write(b, 0, len);
            }
        }
    }
}
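The two examples configure the JDK's Kerberos settings in different ways: the first through `java.security.krb5.conf`, the second through `java.security.krb5.realm` plus `java.security.krb5.kdc`. The JDK refuses a setup in which only one of the realm and KDC properties is set, and if no default realm can be found at all, the "Cannot locate default realm" error above appears. The following is a minimal sketch of a helper that makes this choice explicit and fails fast before `UserGroupInformation.setConfiguration(conf)` runs. The class `KerberosEnv` and its method names are hypothetical, and it uses only the JDK.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper that sets the JDK Kerberos system properties before the Hadoop login. */
public final class KerberosEnv {

    private KerberosEnv() {
    }

    /** Option 1: point the JDK at a krb5.conf file, as in the first example. */
    public static void useKrb5Conf(String krb5ConfPath) {
        Path p = Paths.get(krb5ConfPath);
        // Fail early with a clear message instead of "Cannot locate default realm" later
        if (!Files.isRegularFile(p)) {
            throw new IllegalArgumentException("krb5.conf not found: " + p);
        }
        System.setProperty("java.security.krb5.conf", p.toString());
    }

    /** Option 2: set realm and KDC directly, as in the second example. */
    public static void useRealmAndKdc(String realm, String kdc) {
        // The JDK rejects a configuration where only one of the two properties is set
        if (isBlank(realm) || isBlank(kdc)) {
            throw new IllegalArgumentException(
                    "java.security.krb5.realm and java.security.krb5.kdc must both be set");
        }
        System.setProperty("java.security.krb5.realm", realm);
        System.setProperty("java.security.krb5.kdc", kdc);
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }
}
```

In `test1` this could replace the direct `System.setProperty` call with `KerberosEnv.useKrb5Conf("D:/cdh_10/krb5.conf")`, and in `init` with `KerberosEnv.useRealmAndKdc("COM", "freeipa.com")`.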

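Finally

That is all of 欢喜马里奥's recently compiled notes on accessing Kerberos-authenticated HDFS files from Java.

This content was contributed by users or collected from the web for learning and reference; copyright belongs to the original author.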
