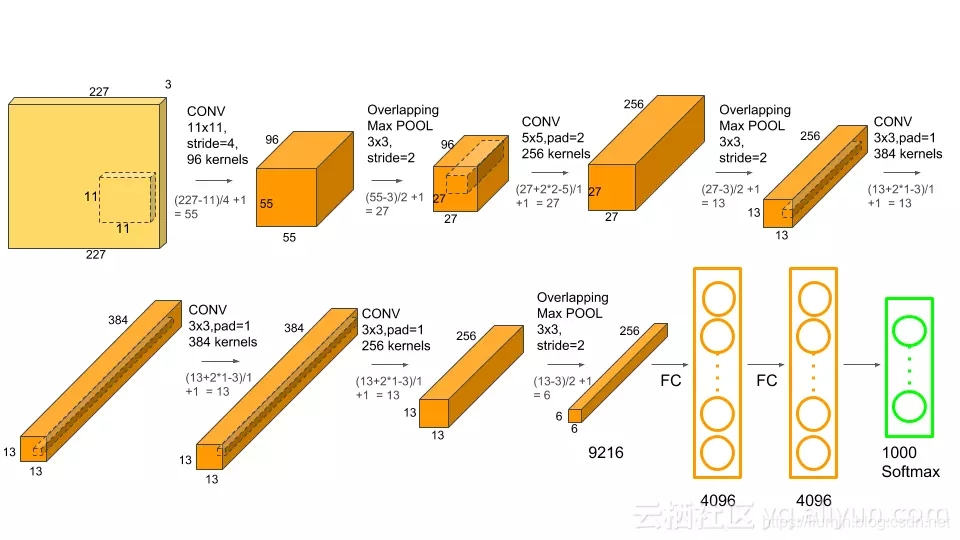

First, the figure above shows a schematic of the AlexNet network structure.

Next, write the AlexNet network by following the figure, mapping each layer one to one:

    import torch
    import torch.nn as nn
    # import torchvision


    class Alexnet(nn.Module):
        def __init__(self, class_num=1000):
            super(Alexnet, self).__init__()
            # Convolutional feature extractor: 3 x 227 x 227 -> 256 x 6 x 6.
            # bias=False disables the bias term in every convolution.
            self.featureExtraction = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4, bias=False),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2, padding=0),
                nn.Conv2d(in_channels=96, out_channels=256, kernel_size=5, stride=1, padding=2, bias=False),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
                nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1, bias=False),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=384, out_channels=384, kernel_size=3, stride=1, padding=1, bias=False),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1, bias=False),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            )
            # Classifier: three fully connected layers with dropout.
            self.fc = nn.Sequential(
                nn.Dropout(0.5),
                nn.Linear(in_features=256*6*6, out_features=4096),
                nn.ReLU(inplace=True),
                nn.Dropout(0.5),
                nn.Linear(in_features=4096, out_features=4096),
                nn.ReLU(inplace=True),
                nn.Linear(in_features=4096, out_features=class_num)
            )

        def forward(self, x):
            x = self.featureExtraction(x)
            # Flatten each sample to a vector of length 256*6*6 = 9216.
            x = x.view(x.size(0), 256*6*6)
            x = self.fc(x)
            return x


    if __name__ == '__main__':
        model = Alexnet()
        print(model)
        # A batch of 8 random RGB images of size 227 x 227.
        inputs = torch.randn(8, 3, 227, 227)
        out = model(inputs)
        print(out.shape)
        print(out)

Test output:

    Alexnet(
      (featureExtraction): Sequential(
        (0): Conv2d(3, 96, kernel_size=(11, 11), stride=(4, 4), bias=False)
        (1): ReLU(inplace=True)
        (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
        (3): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), bias=False)
        (4): ReLU(inplace=True)
        (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (7): ReLU(inplace=True)
        (8): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (9): ReLU(inplace=True)
        (10): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (11): ReLU(inplace=True)
        (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (fc): Sequential(
        (0): Dropout(p=0.5, inplace=False)
        (1): Linear(in_features=9216, out_features=4096, bias=True)
        (2): ReLU(inplace=True)
        (3): Dropout(p=0.5, inplace=False)
        (4): Linear(in_features=4096, out_features=4096, bias=True)
        (5): ReLU(inplace=True)
        (6): Linear(in_features=4096, out_features=1000, bias=True)
      )
    )
    torch.Size([8, 1000])
    tensor([[ 7.8992e-03, -5.0696e-03,  1.0371e-02,  ...,  1.2258e-02,
             -1.5194e-05, -1.0831e-02],
            [ 1.6226e-02, -1.3935e-02, -1.0737e-04,  ...,  1.4513e-02,
             -7.0582e-03, -2.1036e-02],
            [-1.6301e-03, -1.3431e-02, -3.7540e-04,  ..., -7.3956e-04,
             -8.8427e-03, -1.8173e-02],
            ...,
            [ 1.6233e-02, -4.9180e-03,  3.8799e-03,  ...,  7.6893e-03,
             -2.4209e-03, -1.8067e-02],
            [ 1.7316e-02, -1.5523e-02,  7.8454e-03,  ...,  9.2318e-03,
             -1.2605e-02, -1.2254e-02],
            [ 2.3515e-02, -1.6916e-02,  1.2861e-02,  ...,  1.5658e-02,
             -8.7848e-03, -2.1918e-02]], grad_fn=<AddmmBackward>)
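The `256*6*6` passed to the first `Linear` layer depends on the 227 x 227 input size used in the test. The sketch below checks that number by hand in plain Python, without PyTorch. It applies the standard output-size formula `floor((n + 2p - k) / s) + 1` to each convolution and pooling layer in the order they appear in `featureExtraction`. The helper names `conv_out`, `LAYERS`, and `trace_shapes` are made up for this illustration and are not part of the original code.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv or max-pool layer (floor mode, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, out_channels)
# out_channels is None for pooling layers, which keep the channel count.
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool3", 3, 2, 0, None),
]


def trace_shapes(size=227, channels=3):
    """Return (name, channels, height, width) after each layer for a square input."""
    shapes = []
    for name, kernel, stride, padding, out_channels in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        if out_channels is not None:
            channels = out_channels
        shapes.append((name, channels, size, size))
    return shapes


if __name__ == "__main__":
    for name, c, h, w in trace_shapes():
        print(f"{name}: {c} x {h} x {w}")
```

By this formula the side length goes 227, 55, 27, 27, 13, 13, 13, 13, 6, so the final feature map is 256 x 6 x 6 = 9216 values per image. Calling `trace_shapes(size=224)` gives a 5 x 5 final map instead, which suggests the hard-coded `view` in `forward` would not accept 224 x 224 inputs with these layer settings.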
