Talking About Thrift (4): Thrift Servers - TNonblockingServer

TNonblockingServer is the NIO-based server mode provided by Thrift. It handles requests without blocking on I/O and uses a single thread to process all RPC requests.

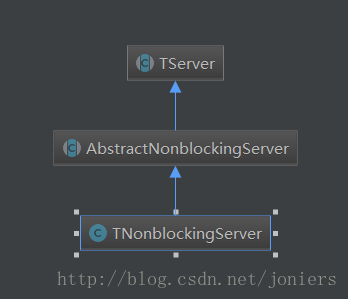

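The class relationships are as follows:

AbstractNonblockingServer is the parent class of both TNonblockingServer and TThreadedSelectorServer. TThreadedSelectorServer is the advanced non-blocking mode that the next post will cover.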

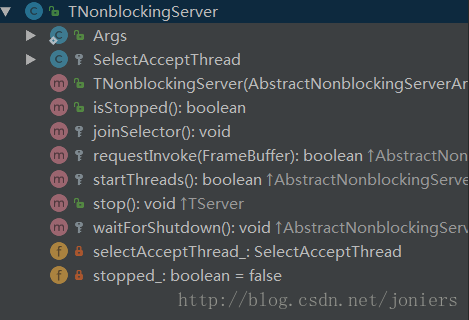

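The class structure is as follows:

Args is a static nested class used to pass parameters. Through Args you can set the read buffer size, the serialization protocol, the transport, and the RPC processor.

The serve method of AbstractNonblockingServer starts the server: it starts the I/O (accept) thread, starts listening, and then waits until the server is shut down: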

    /**
     * Begin accepting connections and processing invocations.
     */
    public void serve() {
        // start any IO threads
        if (!startThreads()) {
            return;
        }

        // start listening, or exit
        if (!startListening()) {
            return;
        }

        setServing(true);

        // this will block while we serve
        waitForShutdown();

        setServing(false);

        // do a little cleanup
        stopListening();
    }

The part to focus on is startThreads. Its implementation creates a SelectAcceptThread and starts it:

    protected boolean startThreads() {
        // start the selector
        try {
            selectAcceptThread_ = new SelectAcceptThread((TNonblockingServerTransport)serverTransport_);
            selectAcceptThread_.start();
            return true;
        } catch (IOException e) {
            LOGGER.error("Failed to start selector thread!", e);
            return false;
        }
    }

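SelectAcceptThread is the NIO thread. It accepts client connections and reads and writes requests.

The flow is: startThreads -> SelectAcceptThread -> select

select handles all I/O events. It waits for events with selector.select() and then dispatches each one to handleAccept, handleRead, or handleWrite: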

    if (key.isAcceptable()) {
        handleAccept();
    } else if (key.isReadable()) {
        // deal with reads
        handleRead(key);
    } else if (key.isWritable()) {
        // deal with writes
        handleWrite(key);
    } else {
        LOGGER.warn("Unexpected state in select! " + key.interestOps());
    }

Because TNonblockingServer uses a single SelectAcceptThread to handle every request, under concurrent load all other I/O events have to wait while that thread works through them one by one, so throughput is somewhat limited.
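To make the single-threaded select loop more concrete, here is a minimal sketch that uses only JDK classes and is not Thrift code. It assumes an in-process java.nio.channels.Pipe in place of a client socket, and the class and method names (SingleThreadSelectSketch, selectOnce) are made up for illustration. One thread registers a channel, waits in select, and handles the readable key, with a ByteBuffer attachment playing the role that FrameBuffer plays in the server.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

final class SingleThreadSelectSketch {
    /** Runs one pass of a select loop and returns the text the read branch saw. */
    static String selectOnce(String payload) {
        try {
            Pipe pipe = Pipe.open();
            try (Selector selector = Selector.open()) {
                // Register the readable end with a per-channel buffer attached
                // (assumes the payload fits in 64 bytes).
                pipe.source().configureBlocking(false);
                pipe.source().register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(64));

                // Simulate a client sending data.
                pipe.sink().write(ByteBuffer.wrap(payload.getBytes(StandardCharsets.UTF_8)));

                StringBuilder seen = new StringBuilder();
                selector.select(1000); // wait for I/O events, at most one second
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (key.isReadable()) {
                        // The same thread that selected also performs the read.
                        ByteBuffer buf = (ByteBuffer) key.attachment();
                        ((Pipe.SourceChannel) key.channel()).read(buf);
                        buf.flip();
                        seen.append(StandardCharsets.UTF_8.decode(buf));
                    }
                }
                return seen.toString();
            } finally {
                pipe.sink().close();
                pipe.source().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Since every key is handled inside the same loop, any slow handler delays all keys that come after it, which is the limitation described above.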

Here is how handleRead processes a read event:

    protected void handleRead(SelectionKey key) {
        FrameBuffer buffer = (FrameBuffer) key.attachment();
        // read the data available on the channel into the buffer
        if (!buffer.read()) {
            cleanupSelectionKey(key);
            return;
        }

        // if the buffer's frame read is complete, invoke the method,
        // i.e. perform the RPC call
        if (buffer.isFrameFullyRead()) {
            if (!requestInvoke(buffer)) {
                cleanupSelectionKey(key);
            }
        }
    }

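buffer.read() does the actual reading of the data. Depending on the state of the FrameBuffer, the read proceeds in stages:

FrameBufferState.READING_FRAME_SIZE: the initial state, in which the frame size is read. When this step completes, the state changes to FrameBufferState.READING_FRAME.

FrameBufferState.READING_FRAME: the actual frame content is read. When this step completes, the state changes to FrameBufferState.READ_FRAME_COMPLETE.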
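The two stages can be sketched in isolation with plain JDK types. This is a simplified illustration rather than the Thrift implementation: the class name FrameReaderSketch and its feed method are assumptions, bytes are handed in from a ByteBuffer instead of being read from a channel, and the size limit and memory accounting are left out. It only shows how a 4-byte size prefix drives the transition from READING_FRAME_SIZE to READING_FRAME to READ_FRAME_COMPLETE, even when the bytes arrive in arbitrary chunks.

```java
import java.nio.ByteBuffer;

final class FrameReaderSketch {
    enum State { READING_FRAME_SIZE, READING_FRAME, READ_FRAME_COMPLETE }

    private State state = State.READING_FRAME_SIZE;
    // Starts as a 4-byte buffer for the size prefix, later replaced by a frame-sized one.
    private ByteBuffer buffer = ByteBuffer.allocate(4);

    /** Consumes as many of the newly arrived bytes as the current state needs. */
    void feed(ByteBuffer in) {
        if (state == State.READING_FRAME_SIZE) {
            copy(in, buffer);
            if (buffer.remaining() > 0) {
                return; // size prefix still incomplete, wait for more data
            }
            int frameSize = buffer.getInt(0);
            if (frameSize <= 0) {
                throw new IllegalStateException("Invalid frame size " + frameSize);
            }
            // Simplification: this sketch keeps only the body, not the prefix.
            buffer = ByteBuffer.allocate(frameSize);
            state = State.READING_FRAME;
        }
        // Fall through: body bytes may already be available in the same chunk.
        if (state == State.READING_FRAME) {
            copy(in, buffer);
            if (buffer.remaining() == 0) {
                state = State.READ_FRAME_COMPLETE;
            }
        }
    }

    private static void copy(ByteBuffer src, ByteBuffer dst) {
        while (src.hasRemaining() && dst.hasRemaining()) {
            dst.put(src.get());
        }
    }

    State state() {
        return state;
    }

    byte[] frame() {
        return buffer.array();
    }
}
```

Feeding the bytes 0, 0 and then 0, 3, 'a' and then 'b', 'c' moves the reader through all three states and leaves "abc" as the frame body.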

    public boolean read() {
        // read the frame size
        if (state_ == FrameBufferState.READING_FRAME_SIZE) {
            // try to read the frame size completely
            if (!internalRead()) {
                return false;
            }

            // if the frame size has been read completely, then prepare to read the
            // actual frame.
            if (buffer_.remaining() == 0) {
                // pull out the frame size as an integer.
                int frameSize = buffer_.getInt(0);
                if (frameSize <= 0) {
                    LOGGER.error("Read an invalid frame size of " + frameSize
                        + ". Are you using TFramedTransport on the client side?");
                    return false;
                }

                // if this frame will always be too large for this server, log the
                // error and close the connection.
                if (frameSize > MAX_READ_BUFFER_BYTES) {
                    LOGGER.error("Read a frame size of " + frameSize
                        + ", which is bigger than the maximum allowable buffer size for ALL connections.");
                    return false;
                }

                // if this frame will push us over the memory limit, then return.
                // with luck, more memory will free up the next time around.
                if (readBufferBytesAllocated.get() + frameSize > MAX_READ_BUFFER_BYTES) {
                    return true;
                }

                // increment the amount of memory allocated to read buffers
                readBufferBytesAllocated.addAndGet(frameSize + 4);

                // reallocate the readbuffer as a frame-sized buffer
                buffer_ = ByteBuffer.allocate(frameSize + 4);
                buffer_.putInt(frameSize);

                state_ = FrameBufferState.READING_FRAME;
            } else {
                // this skips the check of READING_FRAME state below, since we can't
                // possibly go on to that state if there's data left to be read at
                // this one.
                return true;
            }
        }

        // it is possible to fall through from the READING_FRAME_SIZE section
        // to READING_FRAME if there's already some frame data available once
        // READING_FRAME_SIZE is complete.
        // the frame size has been read; now read the actual frame data
        if (state_ == FrameBufferState.READING_FRAME) {
            if (!internalRead()) {
                return false;
            }

            // since we're already in the select loop here for sure, we can just
            // modify our selection key directly.
            if (buffer_.remaining() == 0) {
                // get rid of the read select interests
                selectionKey_.interestOps(0);
                // mark the frame as fully read so the next step can proceed
                state_ = FrameBufferState.READ_FRAME_COMPLETE;
            }

            return true;
        }

        // if we fall through to this point, then the state must be invalid.
        LOGGER.error("Read was called but state is invalid (" + state_ + ")");
        return false;
    }

Once the frame has been read completely, requestInvoke is called. It in turn runs the FrameBuffer's invoke method, which performs the RPC call:

    /**
     * Actually invoke the method signified by this FrameBuffer.
     */
    public void invoke() {
        frameTrans_.reset(buffer_.array()); // point the transport at the frame buffer
        response_.reset();                  // clear the response buffer

        try {
            if (eventHandler_ != null) {
                eventHandler_.processContext(context_, inTrans_, outTrans_);
            }
            // The actual call: getProcessor returns the processor configured when the
            // server was started, i.e. the processor class inside the code generated
            // by compiling our *.thrift interface file.
            processorFactory_.getProcessor(inTrans_).process(inProt_, outProt_);
            // the response is ready to be sent
            responseReady();
            return;
        } catch (TException te) {
            LOGGER.warn("Exception while invoking!", te);
        } catch (Throwable t) {
            LOGGER.error("Unexpected throwable while invoking!", t);
        }
        // This will only be reached when there is a throwable.
        state_ = FrameBufferState.AWAITING_CLOSE;
        requestSelectInterestChange();
    }

processorFactory_.getProcessor(inTrans_) returns the processor we configured, and process then parses and handles the request. It first reads the message header to obtain msg, then uses msg.name to look up the corresponding handler in processMap. For the echo method, for example, the mapping is registered like this:

    processMap.put("echo", new echo());

Once the handler has been found, its process method is called, as in fn.process(msg.seqid, in, out, iface):

    @Override
    public boolean process(TProtocol in, TProtocol out) throws TException {
        // read the message header
        TMessage msg = in.readMessageBegin();
        // look up the actual handler for this method name
        ProcessFunction fn = processMap.get(msg.name);
        if (fn == null) {
            TProtocolUtil.skip(in, TType.STRUCT);
            in.readMessageEnd();
            TApplicationException x = new TApplicationException(TApplicationException.UNKNOWN_METHOD, "Invalid method name: '"+msg.name+"'");
            out.writeMessageBegin(new TMessage(msg.name, TMessageType.EXCEPTION, msg.seqid));
            x.write(out);
            out.writeMessageEnd();
            out.getTransport().flush();
            return true;
        }
        // call the handler to process the message
        fn.process(msg.seqid, in, out, iface);
        return true;
    }

The method that processes the message is shown below:

    public final void process(int seqid, TProtocol iprot, TProtocol oprot, I iface) throws TException {
        T args = getEmptyArgsInstance();
        try {
            args.read(iprot);
        } catch (TProtocolException e) {
            iprot.readMessageEnd();
            TApplicationException x = new TApplicationException(TApplicationException.PROTOCOL_ERROR, e.getMessage());
            oprot.writeMessageBegin(new TMessage(getMethodName(), TMessageType.EXCEPTION, seqid));
            x.write(oprot);
            oprot.writeMessageEnd();
            oprot.getTransport().flush();
            return;
        }
        iprot.readMessageEnd();
        TBase result = null;

        try {
            // the actual method call; this goes straight to the real implementation
            // of the RPC interface
            result = getResult(iface, args);
        } catch(TException tex) {
            LOGGER.error("Internal error processing " + getMethodName(), tex);
            TApplicationException x = new TApplicationException(TApplicationException.INTERNAL_ERROR,
                "Internal error processing " + getMethodName());
            oprot.writeMessageBegin(new TMessage(getMethodName(), TMessageType.EXCEPTION, seqid));
            x.write(oprot);
            oprot.writeMessageEnd();
            oprot.getTransport().flush();
            return;
        }

        // handle the return value: serialize the result through oprot so that
        // the client can parse it
        if(!isOneway()) {
            oprot.writeMessageBegin(new TMessage(getMethodName(), TMessageType.REPLY, seqid));
            result.write(oprot);
            oprot.writeMessageEnd();
            oprot.getTransport().flush();
        }
    }

Here is a brief look at getResult(iface, args), using the echo interface as an example:

    public static class echo<I extends Iface> extends org.apache.thrift.ProcessFunction<I, echo_args> {
        public echo() {
            super("echo");
        }

        public echo_args getEmptyArgsInstance() {
            return new echo_args();
        }

        protected boolean isOneway() {
            return false;
        }

        public echo_result getResult(I iface, echo_args args) throws org.apache.thrift.TException {
            echo_result result = new echo_result();
            // call the echo implementation
            result.success = iface.echo(args.reqId, args.caller, args.srcStr, args.ext);
            return result;
        }
    }

The call iface.echo(args.reqId, args.caller, args.srcStr, args.ext) invokes the actual implementation of the echo interface. The return value is then serialized through oprot and written to the buffer.
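The dispatch chain described above, from looking up the method name to invoking the implementation, can be sketched with plain JDK types. This is an illustrative stand-in, not the generated Thrift code: DispatchSketch, PROCESS_MAP, and the String-based handler signature are assumptions, and protocol reading and writing are omitted.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

final class DispatchSketch {
    // Hypothetical stand-in for the generated processMap: method name -> handler.
    private static final Map<String, UnaryOperator<String>> PROCESS_MAP = new HashMap<>();

    static {
        // Plays the role of processMap.put("echo", new echo());
        // the handler simply returns its argument.
        PROCESS_MAP.put("echo", arg -> arg);
    }

    /** Looks up the handler by method name and invokes it, or reports an unknown method. */
    static String process(String methodName, String arg) {
        UnaryOperator<String> fn = PROCESS_MAP.get(methodName);
        if (fn == null) {
            // Mirrors the UNKNOWN_METHOD branch: reply with an error instead of failing.
            return "Invalid method name: '" + methodName + "'";
        }
        return fn.apply(arg);
    }
}
```

In this sketch DispatchSketch.process("echo", "hi") returns "hi", while an unregistered name yields the error message, much as the real processor answers with a TApplicationException for an unknown method.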

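The responseReady method places the result in the NIO buffer and then registers interest in write events with selectionKey_.interestOps(SelectionKey.OP_WRITE). This triggers an NIO write, and handleWrite sends the result back to the client.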
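The effect of switching a key's interest set can be seen in a small JDK-only sketch. It is not Thrift code: it assumes the writable end of a java.nio.channels.Pipe in place of a client socket, and the names InterestOpsSketch and readyBeforeAndAfter are made up. With an interest set of 0 the selector reports nothing for the channel; after interestOps(SelectionKey.OP_WRITE) the next select reports the key as ready, which is when a write handler would run.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;

final class InterestOpsSketch {
    /** Returns {keys ready before the switch, keys ready after the switch}. */
    static int[] readyBeforeAndAfter() {
        try {
            Pipe pipe = Pipe.open();
            try (Selector selector = Selector.open()) {
                pipe.sink().configureBlocking(false);

                // No interest yet: comparable to interestOps(0) while the
                // request is still being processed.
                SelectionKey key = pipe.sink().register(selector, 0);
                int before = selector.selectNow();

                // The response is ready: ask the selector to report writability.
                key.interestOps(SelectionKey.OP_WRITE);
                int after = selector.select(1000);

                return new int[] {before, after};
            } finally {
                pipe.sink().close();
                pipe.source().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

An empty pipe is immediately writable, so the second select is expected to return without waiting for the timeout.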
