Hands-on: HBase Source Code Series | A First Look at ZGC: Compiling HBase 1.4.8 with OpenJDK 15

- Hands-on: HBase Source Code Series | Compiling HBase with OpenJDK 15 and Deploying It for Testing

- Preface

- Required environment

- Fixing the errors step by step

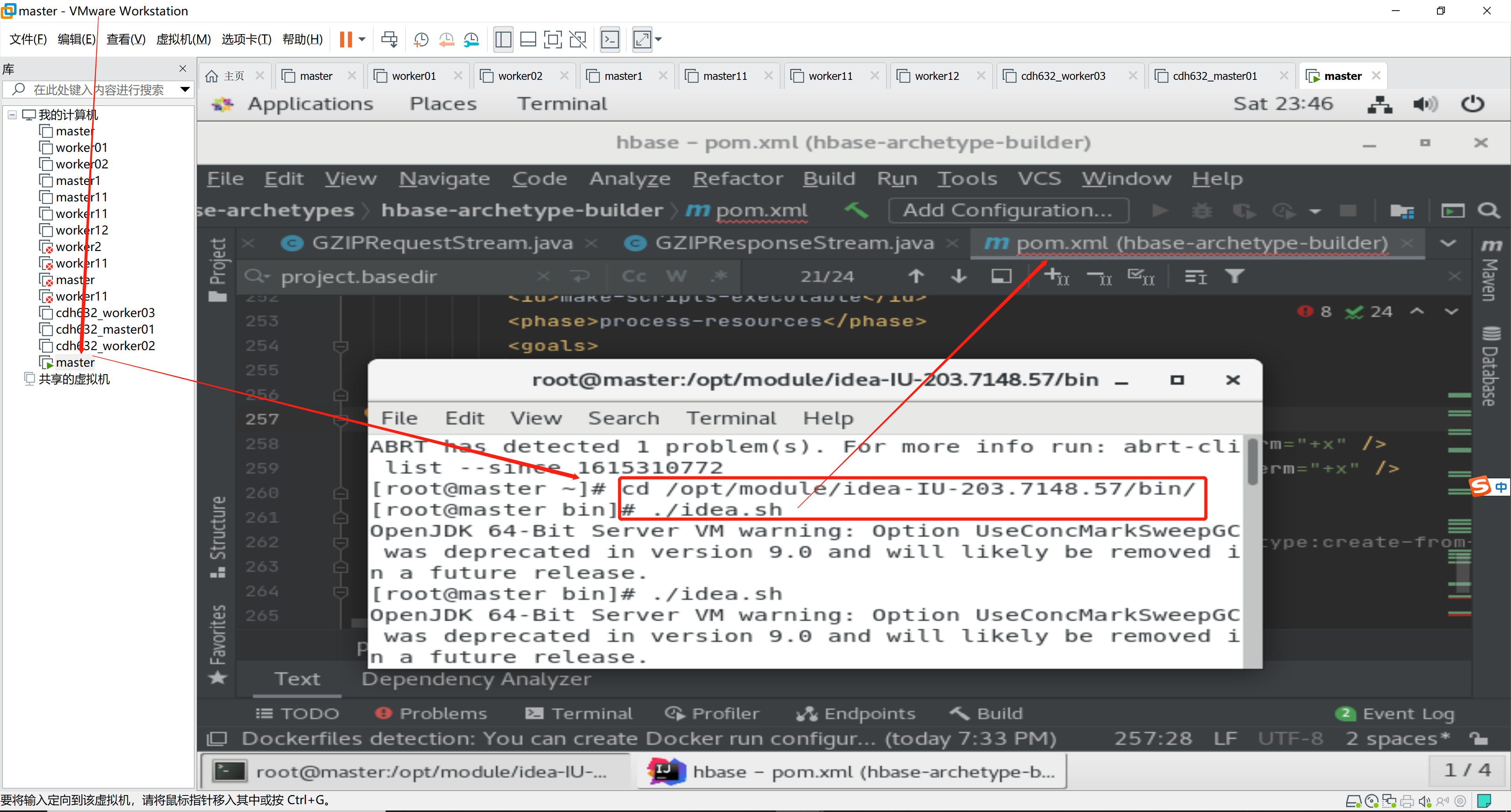

- Debugging the code in IDEA

- [Exploring HBase | Compiling and Deploying CDH HBase with OpenJDK 15](https://mp.weixin.qq.com/s/wp99-tguyy9lPtBsmPmVyA) (hbase-cdh6.3.2)

- Preparation

- Download the [source code](https://codeload.github.com/cloudera/hbase/tar.gz/cdh6.3.2-release) from Cloudera's GitHub

- Fixing the errors step by step

Hands-on: HBase Source Code Series | Compiling HBase with OpenJDK 15 and Deploying It for Testing

Original article: HBase Source Code Series | A First Look at ZGC: Compiling HBase 1.4.8 with OpenJDK 15

Preface

A look at the ZGC garbage collector in the JVM

Required environment

CentOS 7 desktop edition, which makes it easy to browse and debug the source with the Linux build of ideaIU-2020.3.2.

- /etc/profile, configured as follows. [Note] JDK 1.8.0 does not have to be configured exactly as shown here, but it must be installed (see below);

export JAVA_HOME1=/usr/java/jdk1.8.0_171

export CLASSPATH=.:${JAVA_HOME1}/jre/lib/rt.jar:${JAVA_HOME1}/lib/dt.jar:${JAVA_HOME1}/lib/tools.jar

export JAVA_HOME=/usr/java/jdk-15.0.2+7

export PATH=${JAVA_HOME}/bin:${PATH}

MAVEN_HOME=/opt/module/apache-maven-3.5.4

export PATH=${MAVEN_HOME}/bin:${PATH}

- OpenJDK 15: download OpenJDK15U-jdk_x64_linux_hotspot_15.0.2_7.tar.gz from the official site;

- Maven 3.5.4: download it with

wget http://mirror.bit.edu.cn/apache/maven/maven-3/3.5.4/binaries/apache-maven-3.5.4-bin.tar.gz

and configure it (the configuration in the original article is actually more concise):

vi /opt/module/apache-maven-3.5.4/conf/settings.xml

<mirror>

<id>aliyun</id>

<mirrorOf>*,!cloudera</mirrorOf>

<name>Nexus Release Repository</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>central</id>

<name>Maven Repository Switchboard</name>

<url>https://repo1.maven.org/maven2/</url>

<mirrorOf>central</mirrorOf>

</mirror>

<!-- Mirror of the central repository in China -->

<mirror>

<id>maven.net.cn</id>

<name>oneof the central mirrors in china</name>

<url>http://maven.net.cn/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>alimaven</id>

<mirrorOf>ali central</mirrorOf>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central/</url>

</mirror>

<mirror>

<id>repo2</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://repo2.maven.org/maven2/</url>

</mirror>

<mirror>

<id>ibiblio</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>

</mirror>

<mirror>

<id>jboss-public-repository-group</id>

<mirrorOf>central</mirrorOf>

<name>JBoss Public Repository Group</name>

<url>http://repository.jboss.org/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>google-maven-central</id>

<name>Google Maven Central</name>

<url>https://maven-central.storage.googleapis.com</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>nexus-hortonworks</id>

<mirrorOf>!central</mirrorOf>

<name>Nexus hortonworks</name>

<url>https://repo.hortonworks.com/content/groups/public/</url>

</mirror>

- HBase 1.4.8: download hbase-1.4.8-src.tar.gz and extract it to /opt/module/

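- Modify the root pom file. For why compilerArgs is needed, see the explanation in the original article. I added it at this point, which may be why I never hit the errors in the two classes described in its section 4.4.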

<!-- Bump the version of this plugin -->

<!-- <maven.compiler.version>3.6.1</maven.compiler.version> -->

<maven.compiler.version>3.8.1</maven.compiler.version>

<!-- Change compileSource to 15; the original 1.7 means the minimum JDK version for compiling this release is JDK 1.7 -->

<!-- <compileSource>1.7</compileSource> -->

<compileSource>15</compileSource>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>${maven.compiler.version}</version>

<configuration>

<source>${compileSource}</source>

<target>${compileSource}</target>

<showWarnings>true</showWarnings>

<showDeprecation>false</showDeprecation>

<!-- <compilerArgument>-Xlint:-options</compilerArgument> -->

<compilerArgs>

<arg>--add-exports=java.base/jdk.internal.access=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/jdk.internal=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/jdk.internal.misc=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/sun.security.pkcs=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/sun.nio.ch=ALL-UNNAMED</arg>

</compilerArgs>

</configuration>

</plugin>

- Build:

mvn clean package -DskipTests
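The --add-exports arguments in the compiler configuration above are needed because, since JDK 9, java.base no longer exports packages such as jdk.internal.misc or sun.nio.ch to code on the classpath. A minimal sketch that asks the module system about this at runtime; the class name ExportCheck is hypothetical, and it only reports visibility, it does not change it:

```java
// Sketch: ask the module system which java.base packages are visible to classpath code.
final class ExportCheck {

    /** Returns true if java.base exports the package to the module this class lives in. */
    static boolean exportedToCaller(String packageName) {
        Module javaBase = Object.class.getModule();
        // For code loaded from the classpath this is the unnamed module (ALL-UNNAMED).
        Module caller = ExportCheck.class.getModule();
        return javaBase.isExported(packageName, caller);
    }

    public static void main(String[] args) {
        String[] packages = {"java.lang", "jdk.internal.misc", "sun.nio.ch", "sun.security.pkcs"};
        for (String p : packages) {
            System.out.println(p + " exported to caller: " + exportedToCaller(p));
        }
    }
}
```

Run as plain classpath code, the internal packages should be reported as not exported; launching the same class with `--add-exports=java.base/jdk.internal.misc=ALL-UNNAMED` should flip that entry, which is the effect the pom arguments have for javac.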

Fixing the errors step by step

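Note: because I was standing on the shoulders of the original article, I did not hit the errors from its section 4.4. The following are only the errors I ran into, in order: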

1) Following the fixes given in sections 4.5 and 4.6 of the original article, add the following two dependencies to the root pom file:

<!-- Fixes the missing class javax.xml.ws.http.HTTPException in the hbase-it module -->

<dependency>

<groupId>jakarta.xml.ws</groupId>

<artifactId>jakarta.xml.ws-api</artifactId>

<version>2.3.3</version>

</dependency>

<!-- Fixes the missing class javax.annotation.Generated in hbase.thrift and hbase.thrift2 -->

<dependency>

<groupId>javax.annotation</groupId>

<artifactId>javax.annotation-api</artifactId>

<version>1.3.1</version>

</dependency>

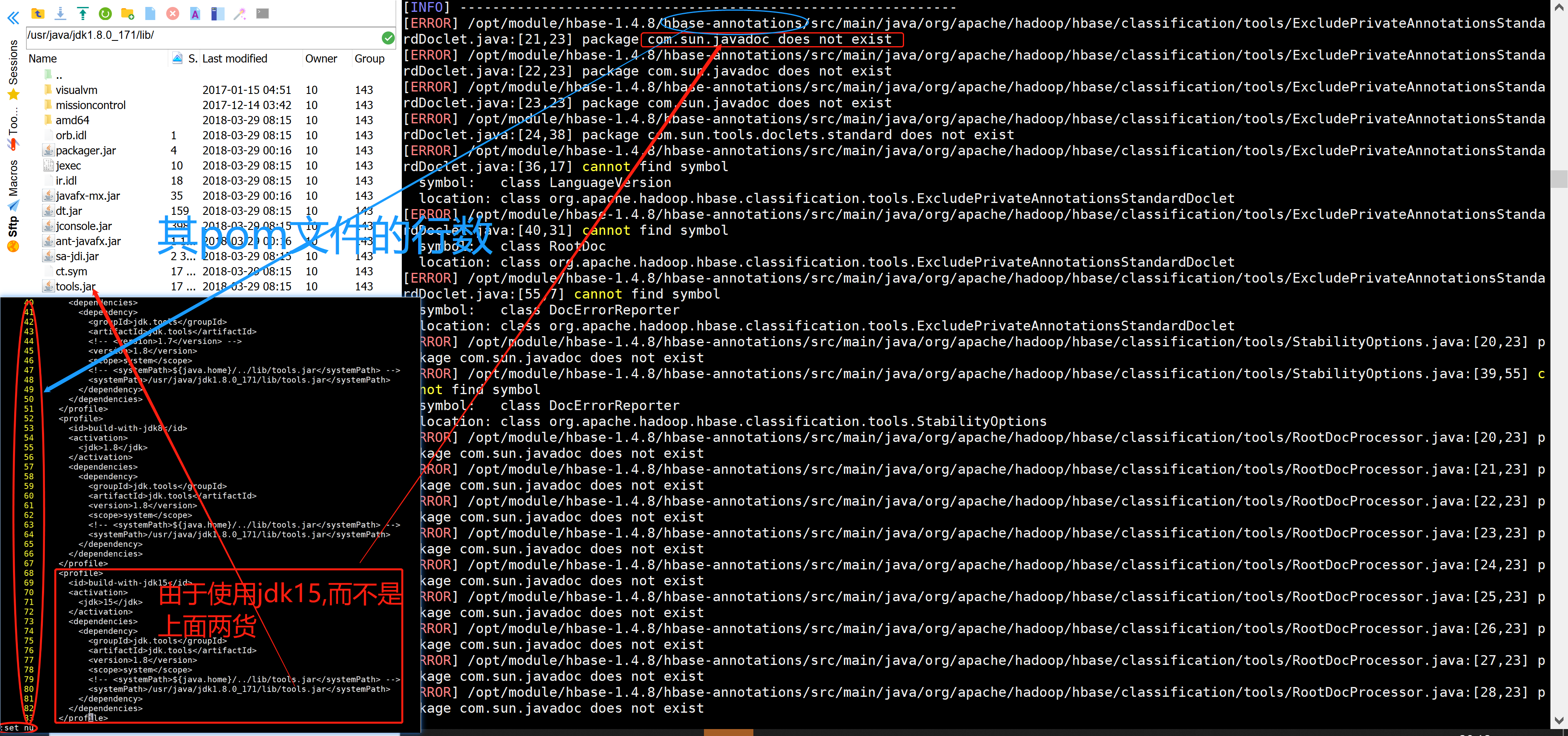

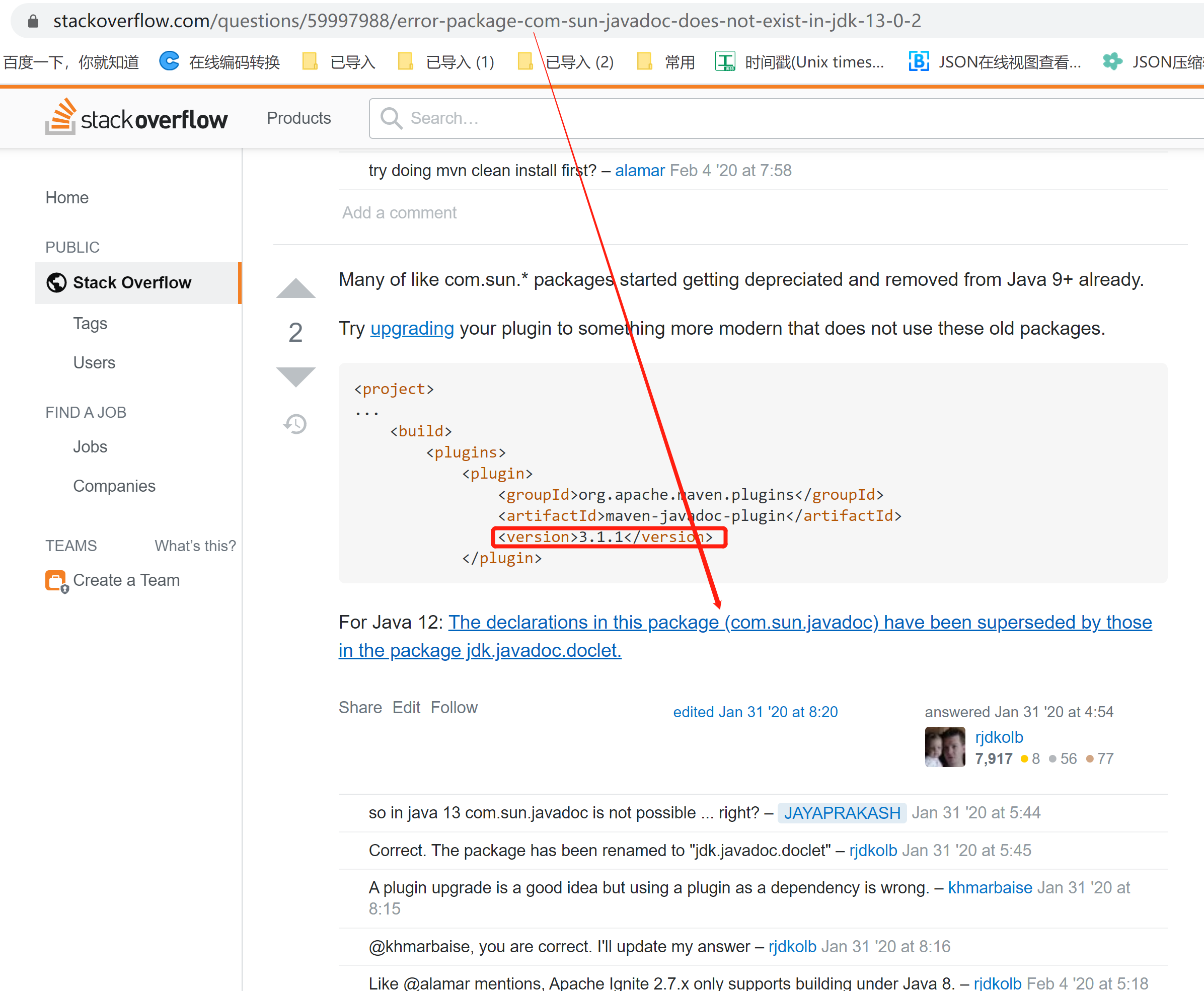

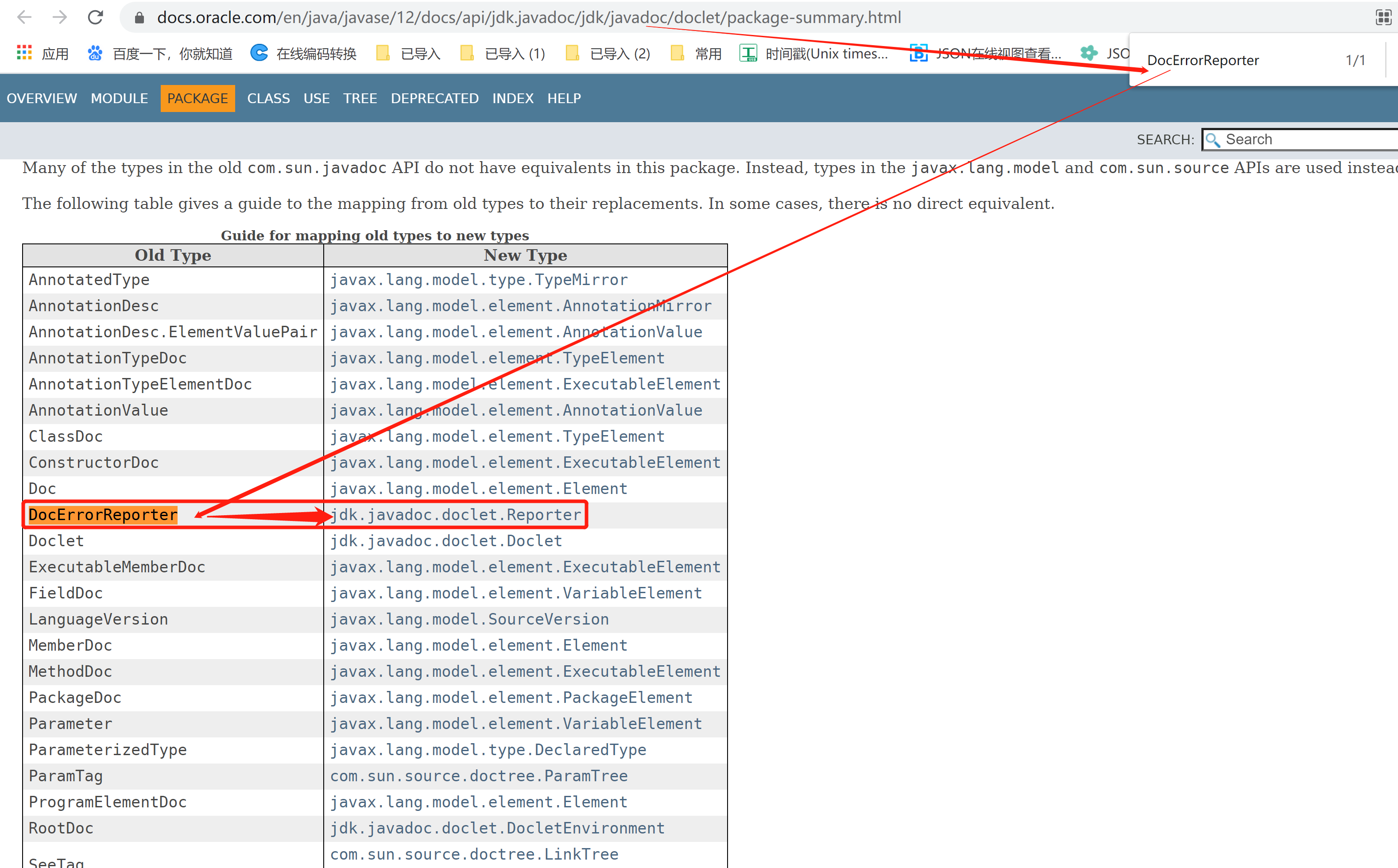

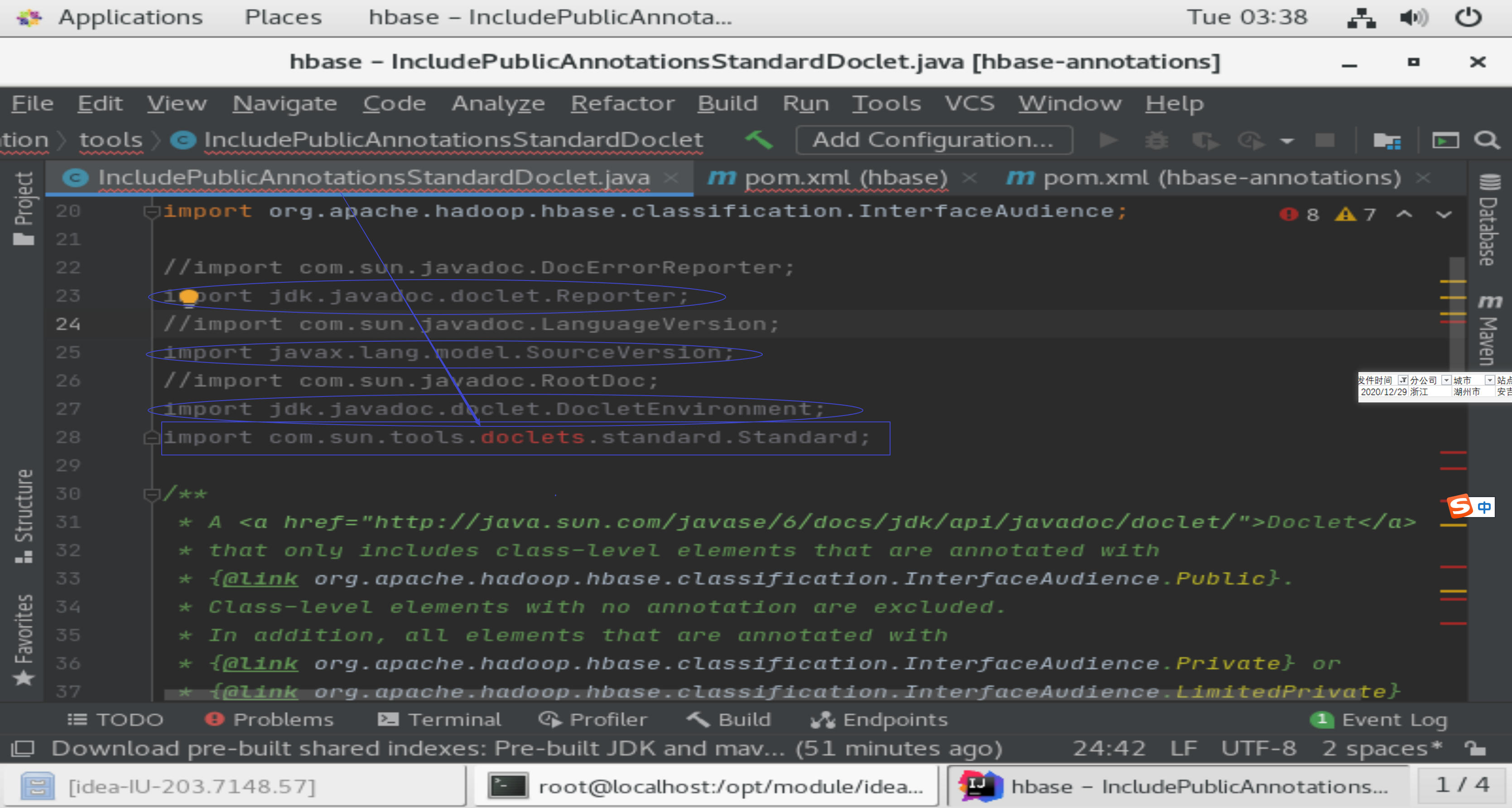

2) package com.sun.javadoc does not exist

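The final fix is shown in the figure below. [Note] Section 4.7 of the original article says "JDK 1.8 also works"; I tried JDK 1.7 and JDK 1.8 in turn and neither worked, whereas adding JDK 15 as shown in the figure below solved it: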

For reasons I could not pin down, I took many detours while exploring this step. For example:

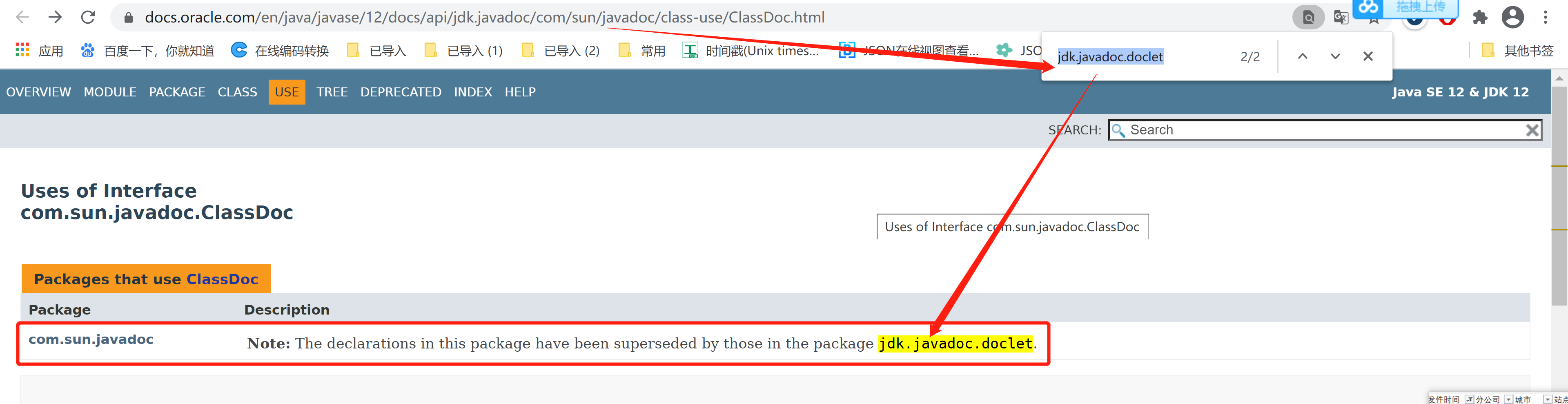

i. After looking into it, I eventually found that the API had been replaced.

The following attempts led nowhere:

Clicking the yellow text in the figure above leads to the figure below

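If you go the API-replacement route, three of the items in the figure above can be swapped, but no replacement class can be found for the last one.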

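ii. So I dropped the changes in the figure above and instead changed the imports of the two hbase-common classes mentioned in section 4.4 of the original article, still to no avail: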

The classes org.apache.hadoop.hbase.util.Bytes and org.apache.hadoop.hbase.util.UnsafeAccess:

//import sun.misc.Unsafe;

import jdk.internal.misc.Unsafe;

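iii. Following section 4.12 of the original article, skip the license check at build time: search the root pom file for check-aggregate-license to find the skip element below. Setting it as follows still did not solve the problem.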

<!-- <skip>${skip.license.check}</skip> -->

<skip>true</skip>

Finally, after a Baidu search, I thought of setting CLASSPATH in my /etc/profile shown above, which still had no effect.

3) This time the hbase-server module fails with: An Ant BuildException has occured: java.util.MissingResourceException: Can’t find com.sun.common.util.logging.LogStrings bundle from

Upgrade jetty following the approach in section 4.8 of the original article. [Note] Five pom files are modified in total:

[root@master hbase-1.4.8]# vi pom.xml

<!-- <jetty.version>6.1.26</jetty.version>

<jetty.jspapi.version>6.1.14</jetty.jspapi.version> -->

<jetty.version>9.3.28.v20191105</jetty.version>

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

<version>${jetty.version}</version>

<exclusions>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency> -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-sslengine</artifactId>

<version>${jetty.version}</version>

</dependency> -->

<!-- Fixes Can't find com.sun.common.util.logging.LogStrings -->

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-server</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-servlet</artifactId>

<version>${jetty.version}</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-security</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-http</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-io</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-jmx</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-webapp</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util-ajax</artifactId>

<version>${jetty.version}</version>

</dependency>

<dependency>

<!--This lib has JspC in it. Needed precompiling jsps in hbase-rest, etc.-->

<!-- This one matters: it is used to compile the JSPs -->

<groupId>org.glassfish.web</groupId>

<artifactId>javax.servlet.jsp</artifactId>

<version>2.3.2</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

<version>3.1.0</version>

</dependency>

<!-- The above upgrades org.mortbay.jetty to the now mainstream org.eclipse.jetty -->

[root@master hbase-1.4.8]# vi ./hbase-server/pom.xml

<!-- Comment out the old jetty dependencies -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-sslengine</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</dependency> -->

<!-- Add the new jetty dependencies -->

<dependency>

<!--For JspC used in ant task-->

<groupId>org.glassfish.web</groupId>

<artifactId>javax.servlet.jsp</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-server</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-servlet</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-http</artifactId>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<!-- Done -->

[root@master hbase-1.4.8]# vi ./hbase-thrift/pom.xml

<!-- Comment out the old jetty dependencies -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-sslengine</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

<scope>compile</scope>

</dependency> -->

<!-- Add the new jetty dependencies -->

<dependency>

<!--For JspC used in ant task-->

<groupId>org.glassfish.web</groupId>

<artifactId>javax.servlet.jsp</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-server</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-servlet</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-http</artifactId>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<!-- Done -->

[root@master hbase-1.4.8]# vi ./hbase-rest/pom.xml

<!-- Comment out the old jetty dependencies -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

<scope>compile</scope>

</dependency> -->

<!-- Comment out the old jetty dependencies -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-sslengine</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</dependency>

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</dependency> -->

<!-- Add the new jetty dependencies -->

<dependency>

<!--For JspC used in ant task-->

<groupId>org.glassfish.web</groupId>

<artifactId>javax.servlet.jsp</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-server</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-servlet</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-http</artifactId>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

<!-- Done -->

[root@master hbase-1.4.8]# vi ./hbase-common/pom.xml

<!-- Comment out the old jetty dependencies -->

<!-- <dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency> -->

<!-- Add the new jetty dependencies -->

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-util</artifactId>

</dependency>

4) This time the hbase-rest module fails:

[ERROR] /opt/module/hbase-1.4.8/hbase-rest/src/main/java/org/apache/hadoop/hbase/rest/filter/GZIPRequestStream.java:[31,8] org.apache.hadoop.hbase.rest.filter.GZIPRequestStream is not abstract and does not override abstract method setReadListener(javax.servlet.ReadListener) in javax.servlet.ServletInputStream

[ERROR] /opt/module/hbase-1.4.8/hbase-rest/src/main/java/org/apache/hadoop/hbase/rest/filter/GZIPResponseStream.java:[31,8] org.apache.hadoop.hbase.rest.filter.GZIPResponseStream is not abstract and does not override abstract method setWriteListener(javax.servlet.WriteListener) in javax.servlet.ServletOutputStream

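The fix is simple: open these two classes in IDEA and press Alt+Enter to generate the missing methods. There is no need to change the body of any existing method.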

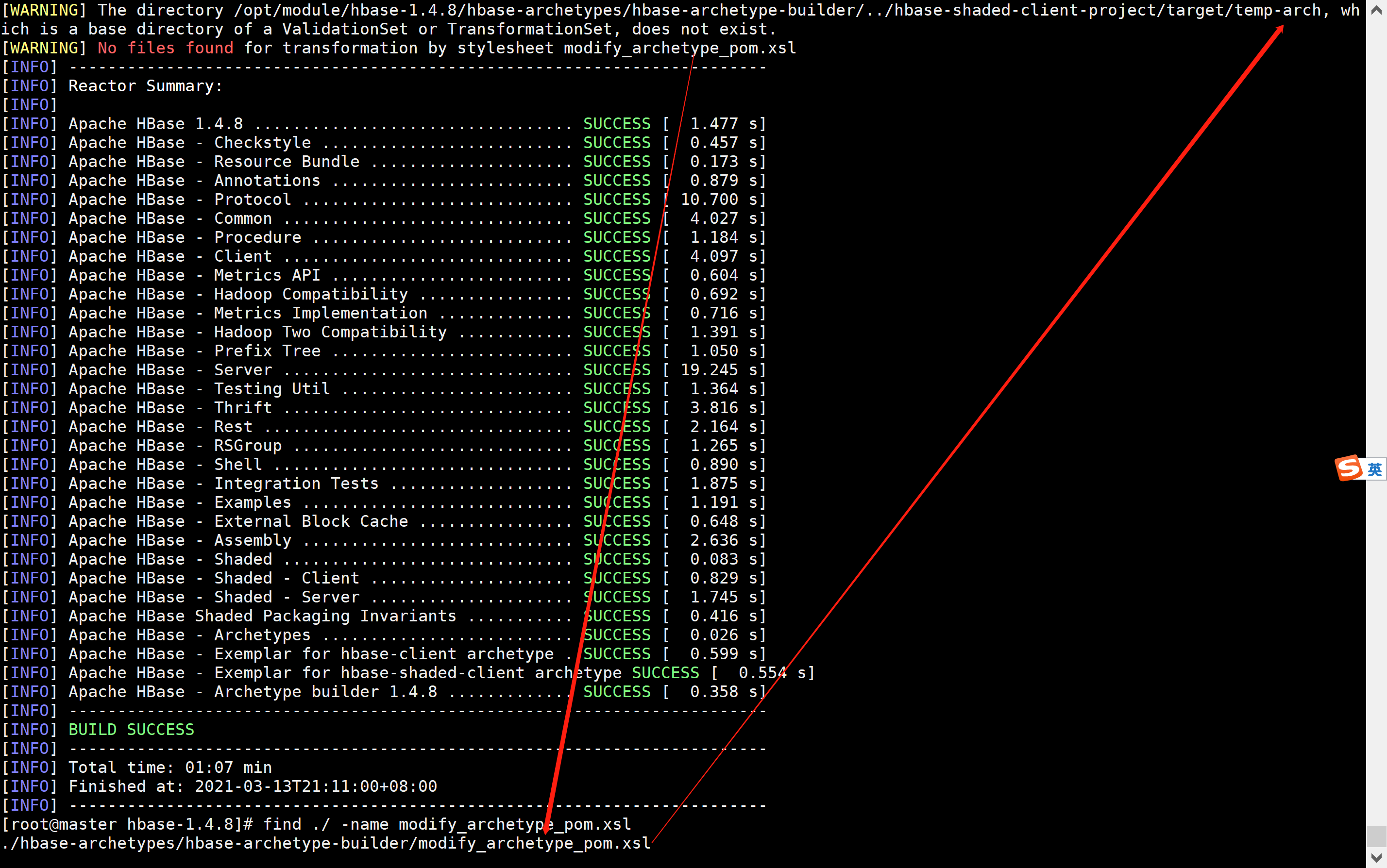
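For readers without the screenshot: Servlet 3.1 added the abstract methods isFinished(), isReady() and setReadListener(ReadListener) to ServletInputStream (and isReady() and setWriteListener(WriteListener) to ServletOutputStream), which is why the two classes stop compiling after the jetty upgrade. A rough sketch of what the added overrides can look like for a blocking stream. To keep it compilable without the servlet-api jar, ReadListenerStandIn and ServletInputStreamStandIn are stand-ins I made up for the real javax.servlet types, and BlockingRequestStreamSketch is a hypothetical class, not the actual GZIPRequestStream:

```java
import java.io.IOException;
import java.io.InputStream;

// Stand-ins for javax.servlet.ReadListener and javax.servlet.ServletInputStream (Servlet 3.1),
// declared here only so the sketch compiles on its own.
interface ReadListenerStandIn { }

abstract class ServletInputStreamStandIn extends InputStream {
    public abstract boolean isFinished();
    public abstract boolean isReady();
    public abstract void setReadListener(ReadListenerStandIn listener);
}

// Hypothetical blocking request stream that wraps another stream.
final class BlockingRequestStreamSketch extends ServletInputStreamStandIn {
    private final InputStream in;
    private boolean finished;

    BlockingRequestStreamSketch(InputStream in) {
        this.in = in;
    }

    @Override
    public int read() throws IOException {
        int b = in.read();
        if (b < 0) {
            finished = true; // remember that end of stream was reached
        }
        return b;
    }

    @Override
    public boolean isFinished() {
        return finished;
    }

    @Override
    public boolean isReady() {
        return true; // a blocking stream can always be read without registering a listener
    }

    @Override
    public void setReadListener(ReadListenerStandIn listener) {
        // Asynchronous reads are not used in this sketch.
        throw new UnsupportedOperationException("async reads are not supported");
    }
}
```

The stubs that IDEA generates are emptier than this (they simply return false or do nothing), which is enough to get the module compiling.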

5) Although the build succeeds, it warns that no files are found for modify_archetype_pom.xsl in two locations.

[INFO] --- xml-maven-plugin:1.0.1:transform (modify-archetype-pom-files-via-xslt) @ hbase-archetype-builder ---

[WARNING] The directory /opt/module/hbase-1.4.8/hbase-archetypes/hbase-archetype-builder/../hbase-client-project/target/temp-arch, which is a base directory of a ValidationSet or TransformationSet, does not exist.

[WARNING] No files found for transformation by stylesheet modify_archetype_pom.xsl

[WARNING] The directory /opt/module/hbase-1.4.8/hbase-archetypes/hbase-archetype-builder/../hbase-shaded-client-project/target/temp-arch, which is a base directory of a ValidationSet or TransformationSet, does not exist.

[WARNING] No files found for transformation by stylesheet modify_archetype_pom.xsl

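I looked at the pom file of the hbase-archetype-builder module. Apart from errors where Maven runs the shell scripts, I could not make sense of the parts involving modify_archetype_pom.xsl. Since it does not affect deploying the built package, I am leaving it alone for now.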

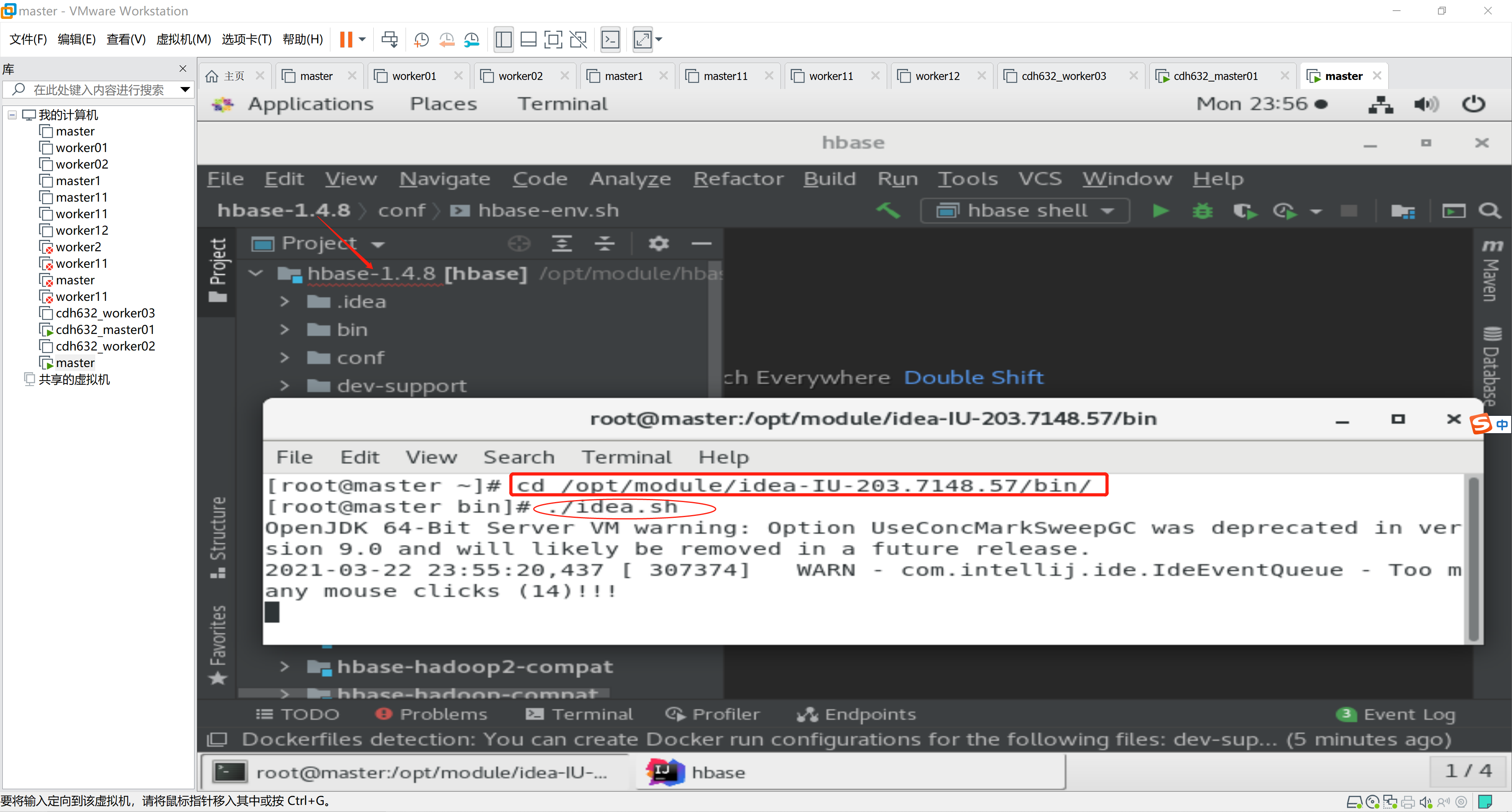

Debugging the code in IDEA

- Open the source

- Modify the source

package org.apache.hadoop.hbase.classification.tools;

import org.apache.hadoop.hbase.classification.InterfaceAudience;

//import com.sun.javadoc.DocErrorReporter;

import jdk.javadoc.doclet.Reporter;

//import com.sun.javadoc.LanguageVersion;

import javax.lang.model.SourceVersion;

//import com.sun.javadoc.RootDoc;

import jdk.javadoc.doclet.DocletEnvironment;

//import com.sun.tools.doclets.standard.Standard;

import jdk.javadoc.doclet.StandardDoclet;

import java.util.Locale;

/**

* A <a href="http://java.sun.com/javase/6/docs/jdk/api/javadoc/doclet/">Doclet</a>

* for excluding elements that are annotated with

* {@link org.apache.hadoop.hbase.classification.InterfaceAudience.Private} or

* {@link org.apache.hadoop.hbase.classification.InterfaceAudience.LimitedPrivate}.

* It delegates to the Standard Doclet, and takes the same options.

*/

@InterfaceAudience.Private

public class ExcludePrivateAnnotationsStandardDoclet {

/*public static LanguageVersion languageVersion() {

return LanguageVersion.JAVA_1_5;

}*/

public static SourceVersion languageVersion() {

return SourceVersion.RELEASE_15;

}

//public static boolean start(RootDoc root) {

public static boolean start(DocletEnvironment docEnv) {

System.out.println(

ExcludePrivateAnnotationsStandardDoclet.class.getSimpleName());

//return Standard.start(RootDocProcessor.process(root));

StandardDoclet standardDoclet = new StandardDoclet();

return standardDoclet.run(docEnv);

}

public static int optionLength(String option) {

Integer length = StabilityOptions.optionLength(option);

if (length != null) {

return length;

}

//return Standard.optionLength(option);

// Placeholder so the class compiles: StandardDoclet offers no optionLength equivalent, so report the option as unknown (0).

return 0;

}

//public static boolean validOptions(String[][] options, DocErrorReporter reporter) {

public static boolean validOptions(String[][] options, Reporter reporter) {

//StabilityOptions.validOptions(options, reporter);

String[][] filteredOptions = StabilityOptions.filterOptions(options);

//return Standard.validOptions(filteredOptions, reporter);

StandardDoclet standardDoclet = new StandardDoclet();

Locale locale = Locale.ENGLISH;

standardDoclet.init(locale, reporter);

return true;

}

}

package org.apache.hadoop.hbase.classification.tools;

import org.apache.hadoop.hbase.classification.InterfaceAudience;

//import com.sun.javadoc.DocErrorReporter;

import jdk.javadoc.doclet.Reporter;

//import com.sun.javadoc.LanguageVersion;

import javax.lang.model.SourceVersion;

//import com.sun.javadoc.RootDoc;

import jdk.javadoc.doclet.DocletEnvironment;

//import com.sun.tools.doclets.standard.Standard;

import jdk.javadoc.doclet.StandardDoclet;

import java.util.Locale;

/**

* A <a href="http://java.sun.com/javase/6/docs/jdk/api/javadoc/doclet/">Doclet</a>

* that only includes class-level elements that are annotated with

* {@link org.apache.hadoop.hbase.classification.InterfaceAudience.Public}.

* Class-level elements with no annotation are excluded.

* In addition, all elements that are annotated with

* {@link org.apache.hadoop.hbase.classification.InterfaceAudience.Private} or

* {@link org.apache.hadoop.hbase.classification.InterfaceAudience.LimitedPrivate}

* are also excluded.

* It delegates to the Standard Doclet, and takes the same options.

*/

@InterfaceAudience.Private

public class IncludePublicAnnotationsStandardDoclet {

/*public static LanguageVersion languageVersion() {

return LanguageVersion.JAVA_1_5;

}*/

public static SourceVersion languageVersion() {

return SourceVersion.RELEASE_15;

}

//public static boolean start(RootDoc root) {

public static boolean start(DocletEnvironment docEnv) {

System.out.println(

IncludePublicAnnotationsStandardDoclet.class.getSimpleName());

RootDocProcessor.treatUnannotatedClassesAsPrivate = true;

//return Standard.start(RootDocProcessor.process(root));

StandardDoclet standardDoclet = new StandardDoclet();

return standardDoclet.run(docEnv);

}

public static int optionLength(String option) {

Integer length = StabilityOptions.optionLength(option);

if (length != null) {

return length;

}

//return Standard.optionLength(option);

// Placeholder so the class compiles: StandardDoclet offers no optionLength equivalent, so report the option as unknown (0).

return 0;

}

//public static boolean validOptions(String[][] options, DocErrorReporter reporter) {

public static boolean validOptions(String[][] options, Reporter reporter) {

//StabilityOptions.validOptions(options, reporter);

String[][] filteredOptions = StabilityOptions.filterOptions(options);

//return Standard.validOptions(filteredOptions, reporter);

StandardDoclet standardDoclet = new StandardDoclet();

Locale locale = Locale.ENGLISH;

standardDoclet.init(locale, reporter);

return true;

}

}
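The static methods in the two classes above are only there to get the module compiling. As far as I understand, the replacement API in jdk.javadoc.doclet expects a doclet to implement the Doclet interface with instance methods instead of the old static entry points. A minimal sketch of that shape, delegating everything to StandardDoclet. The class name DelegatingDocletSketch is hypothetical, and the annotation-based filtering that RootDocProcessor performed with the old API is not reproduced here:

```java
import java.util.Locale;
import java.util.Set;
import javax.lang.model.SourceVersion;
import jdk.javadoc.doclet.Doclet;
import jdk.javadoc.doclet.DocletEnvironment;
import jdk.javadoc.doclet.Reporter;
import jdk.javadoc.doclet.StandardDoclet;

// Sketch of a new-style doclet that forwards every call to the standard doclet.
// To pass it to javadoc via -doclet, declare it public in its own source file.
final class DelegatingDocletSketch implements Doclet {

    private final StandardDoclet delegate = new StandardDoclet();

    @Override
    public void init(Locale locale, Reporter reporter) {
        delegate.init(locale, reporter);
    }

    @Override
    public String getName() {
        return "DelegatingDocletSketch";
    }

    @Override
    public Set<? extends Doclet.Option> getSupportedOptions() {
        // Accept exactly the options the standard doclet accepts.
        return delegate.getSupportedOptions();
    }

    @Override
    public SourceVersion getSupportedSourceVersion() {
        return delegate.getSupportedSourceVersion();
    }

    @Override
    public boolean run(DocletEnvironment environment) {
        // A filtering doclet would inspect environment.getIncludedElements() before delegating.
        return delegate.run(environment);
    }
}
```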

After upgrading jruby from 1.6.8 to 9.1.17.0 in the root pom, modify line 61 of AbstractTestShell.java

Do the same at line 86 of TestShellRSGroups.java

That is:

// After upgrading jruby

//jruby.getProvider().setLoadPaths(loadPaths);

jruby.setLoadPaths(loadPaths);

Other classes may need changes as well, but I have forgotten which.

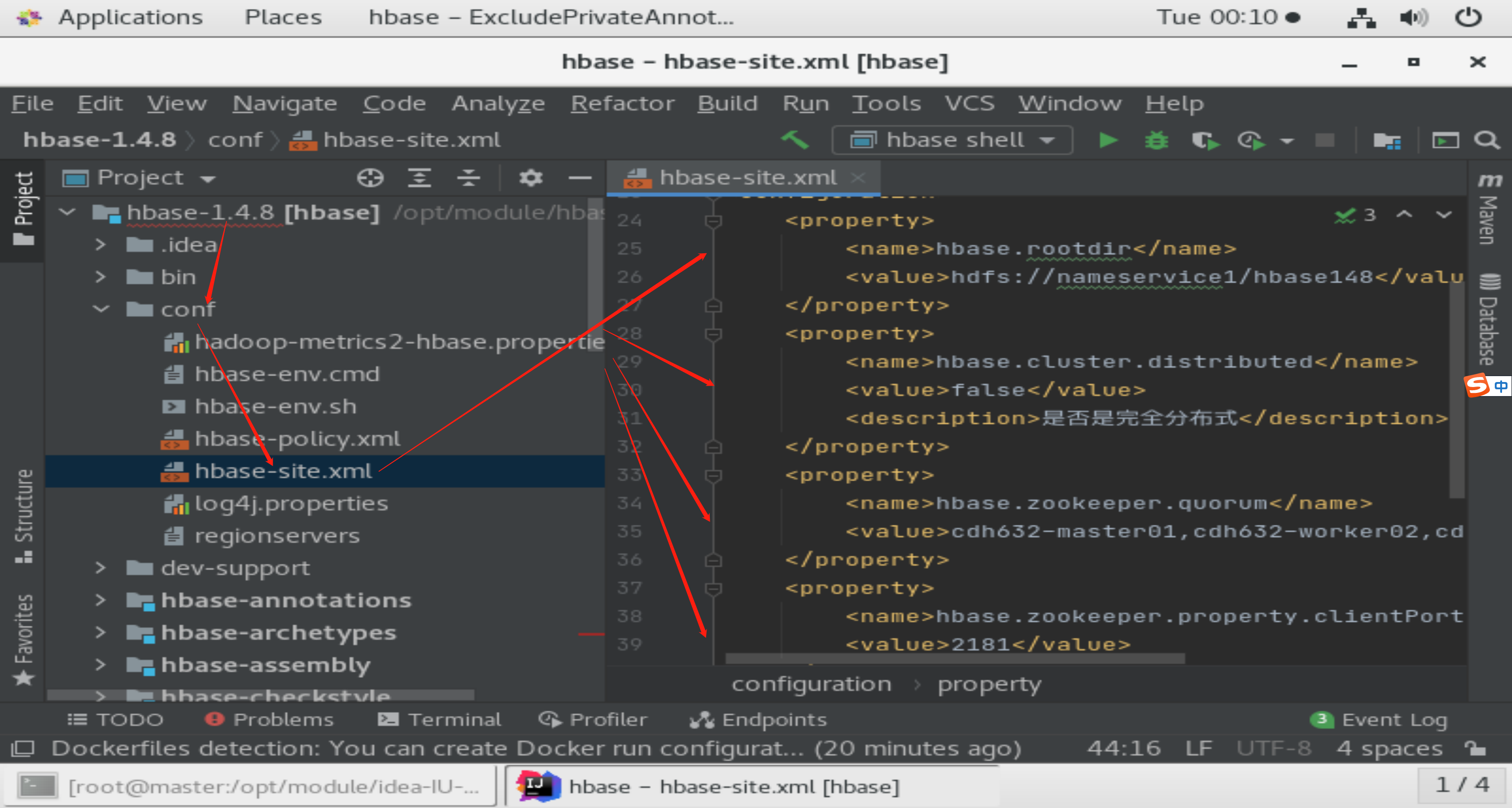

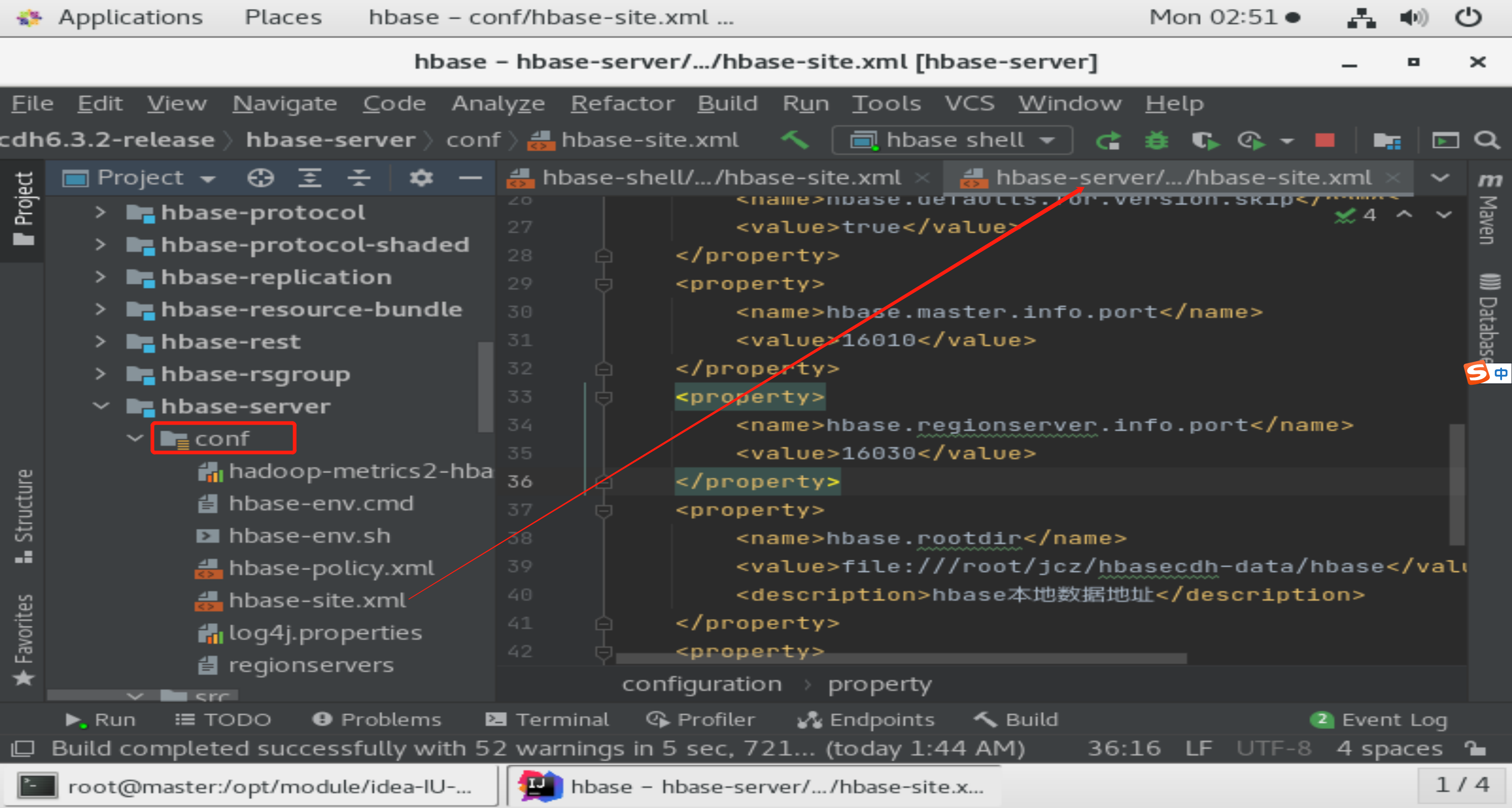

- Move the conf directory

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://nameservice1/hbase148</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>false</value>

<description>Whether to run in fully distributed mode</description>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>cdh632-master01,cdh632-worker02,cdh632-worker03</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase148</value>

</property>

</configuration>

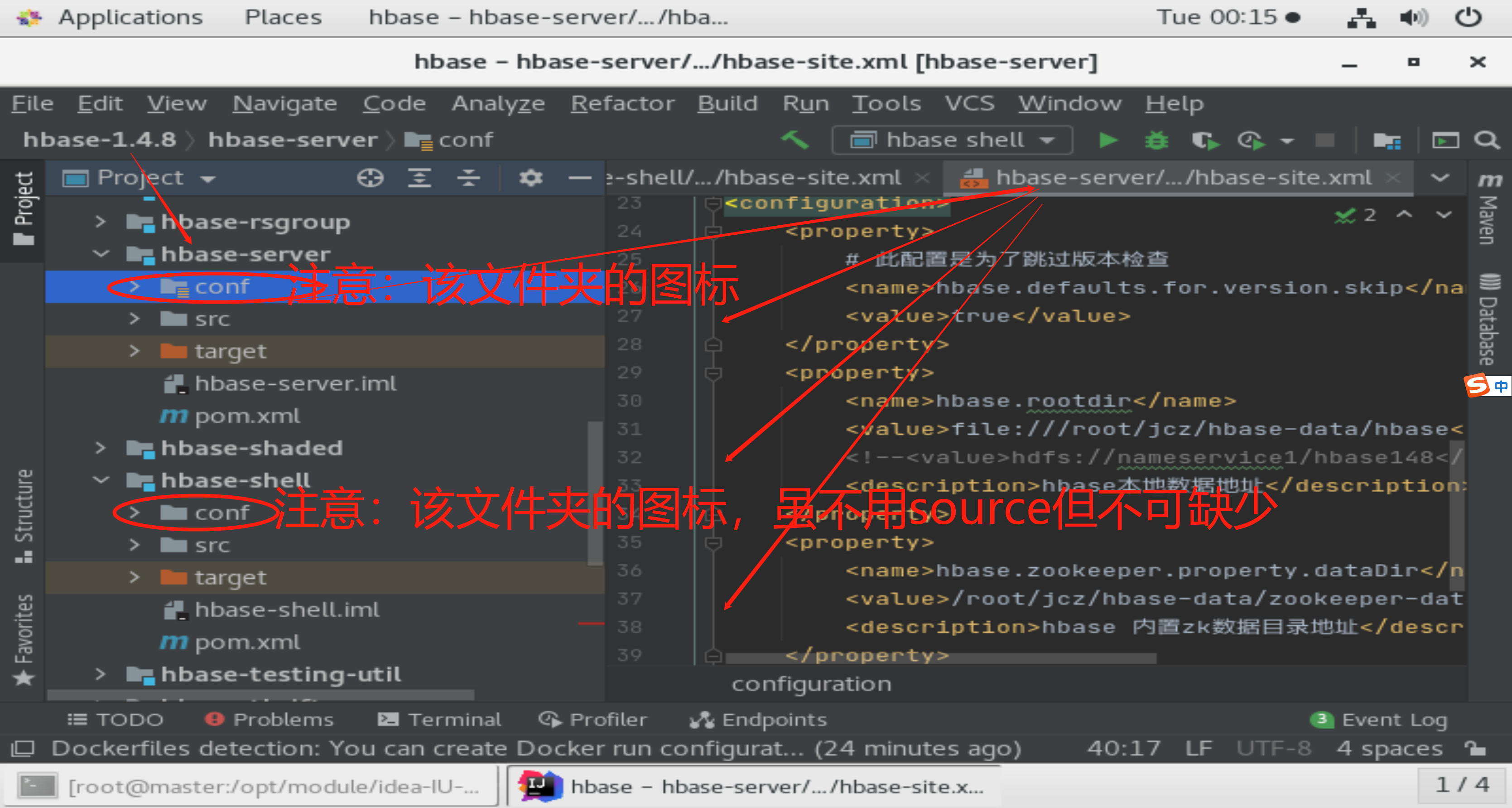

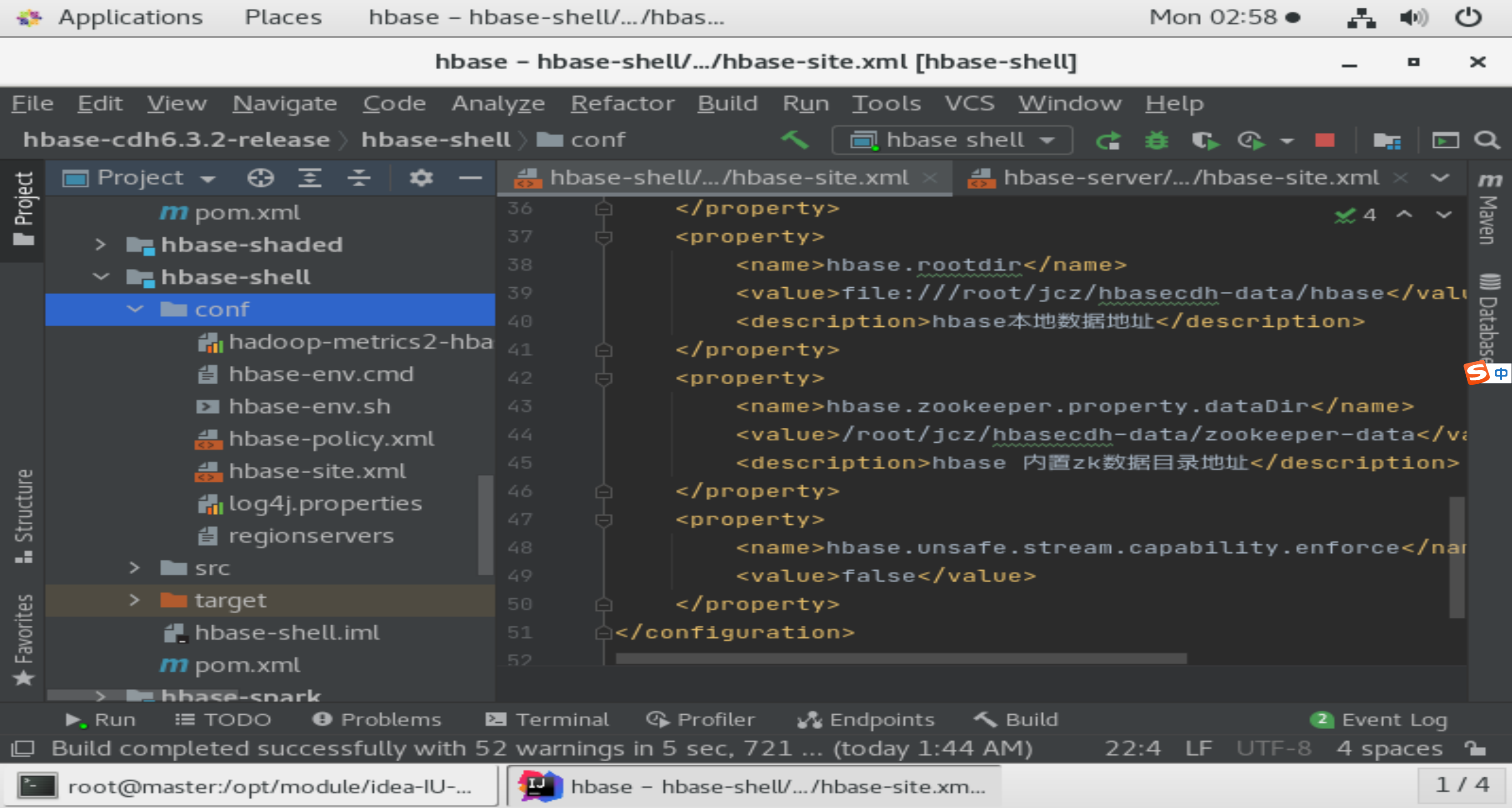

Copy the conf directory in the figure above to the following two locations and modify its contents as shown below:

<configuration>

<property>

<!-- This setting skips the version check -->

<name>hbase.defaults.for.version.skip</name>

<value>true</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>file:///root/jcz/hbase-data/hbase</value>

<!--<value>hdfs://nameservice1/hbase148</value>-->

<description>Local HBase data directory</description>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/root/jcz/hbase-data/zookeeper-data</value>

<description>Data directory of HBase's built-in ZooKeeper</description>

</property>

</configuration>

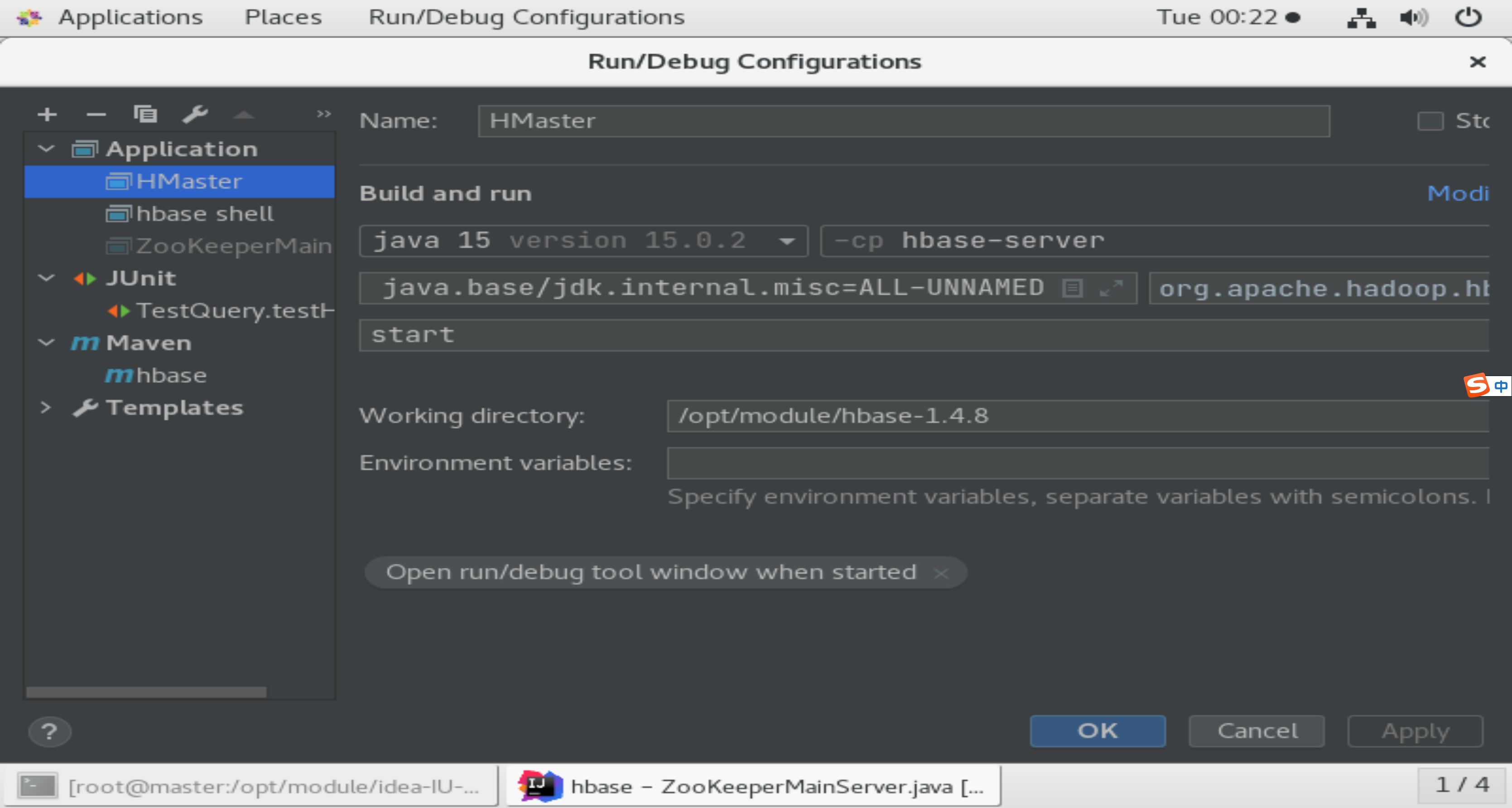

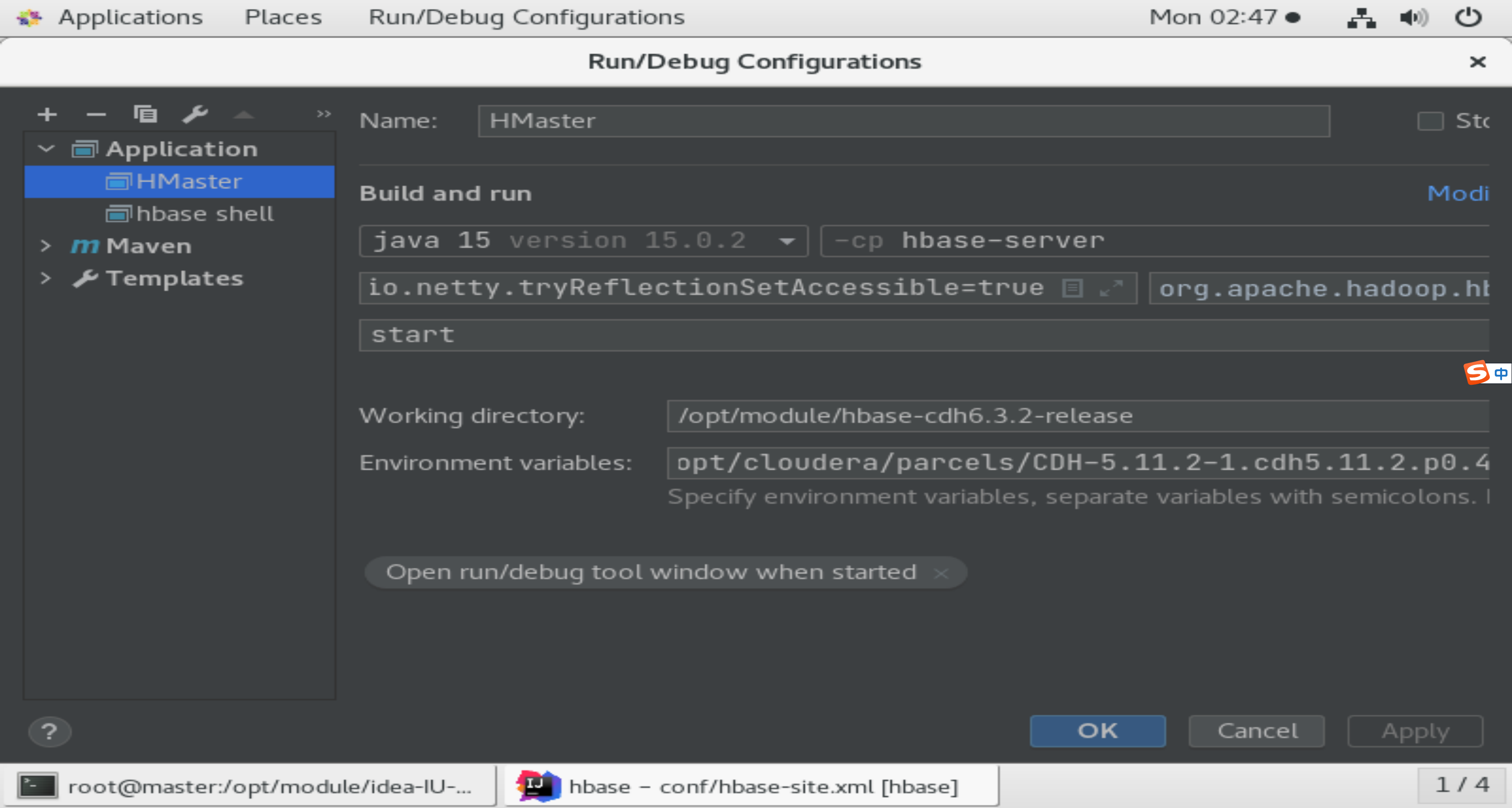

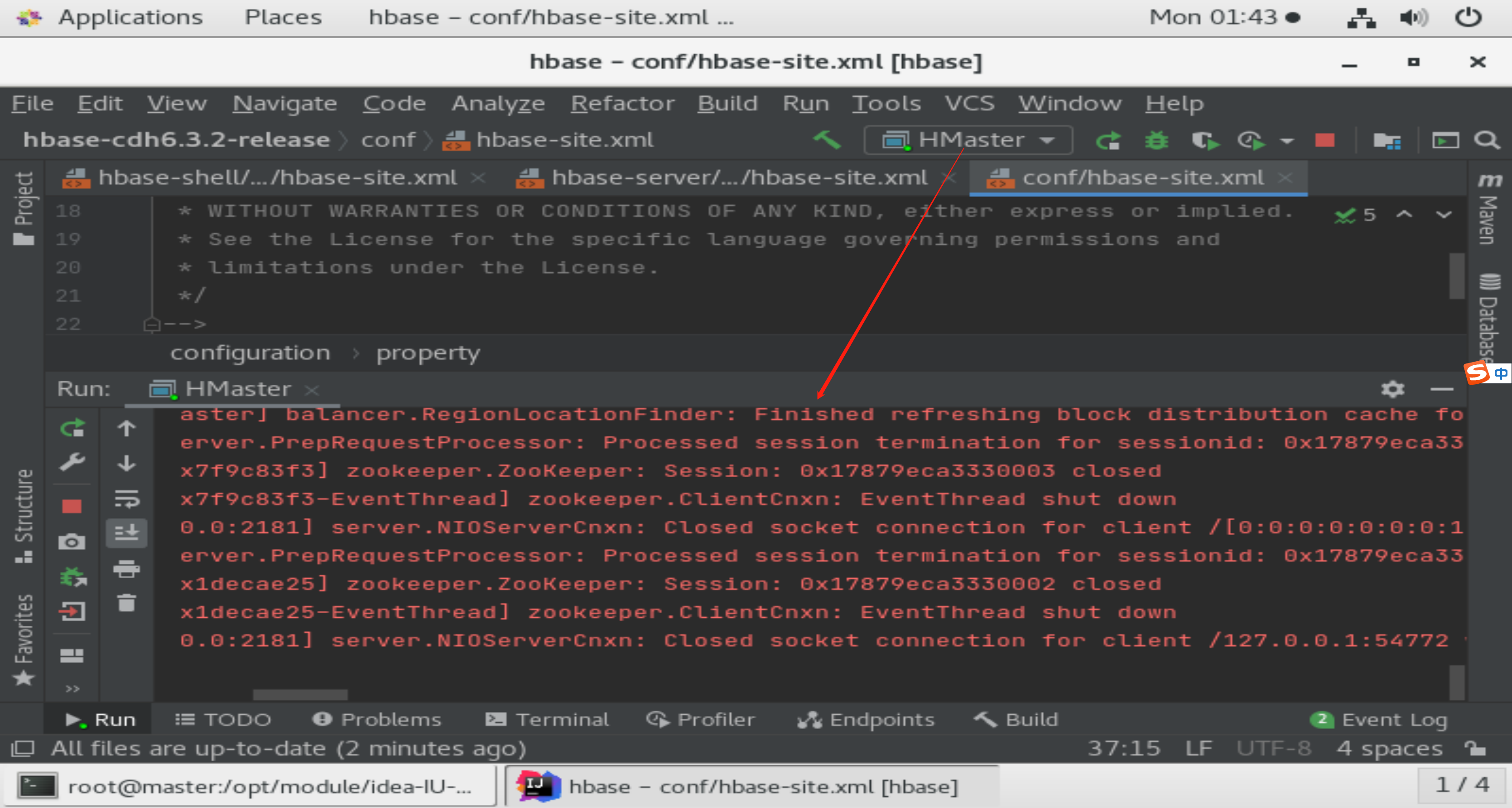

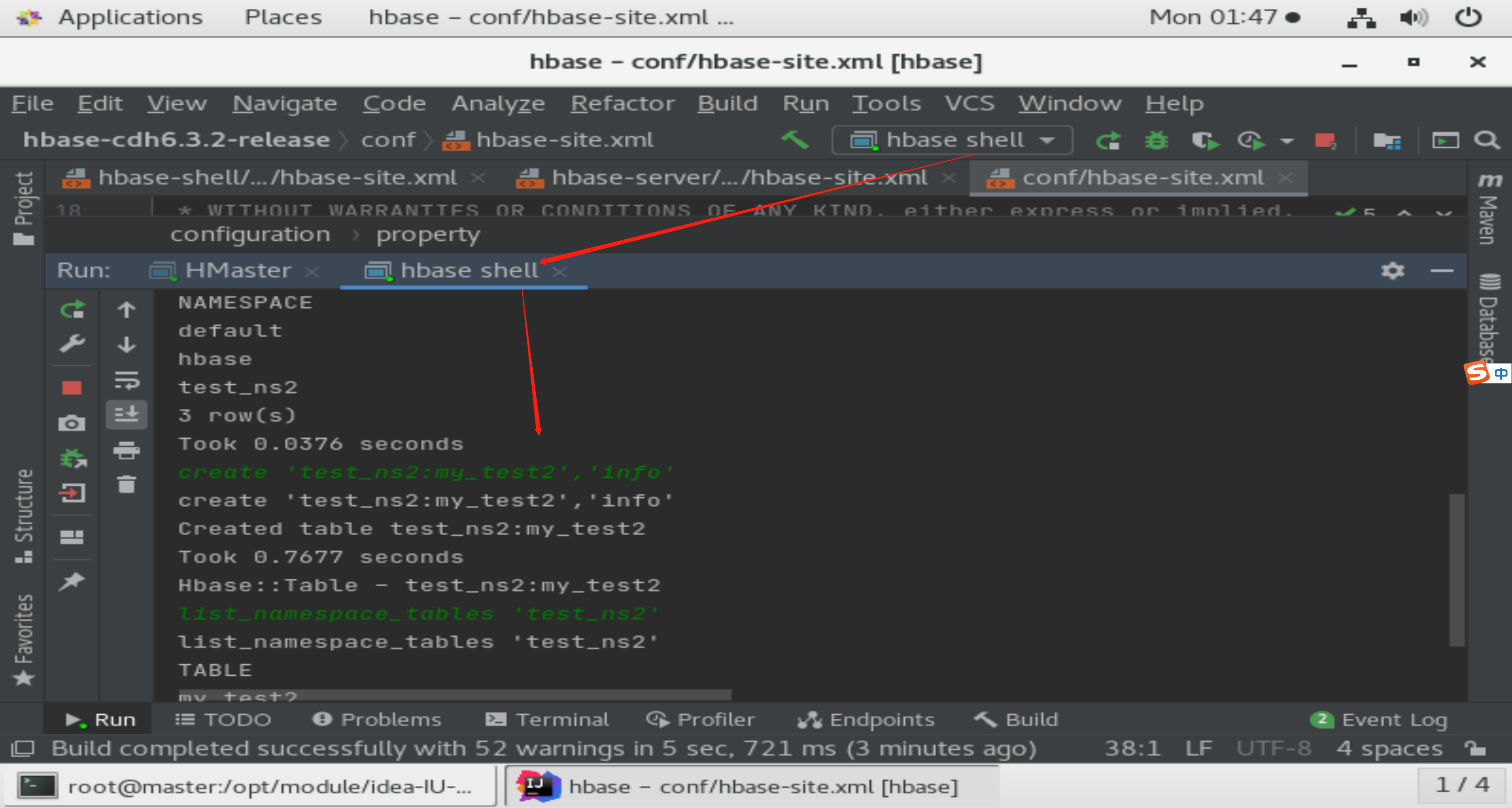

- Startup

1) Configure the HMaster run configuration

-Dhbase.home.dir=/opt/module/hbase-1.4.8 -Dhbase.id.str=root -Dlog4j.configuration=/opt/module/hbase-1.4.8/conf/log4j.properties -Dhbase.log.dir=/root/jcz/logs -Dhbase.log.file=hbase-root-master.log -Dhbase.root.logger=INFO,console,DRFA --add-exports=java.base/jdk.internal.access=ALL-UNNAMED --add-exports=java.base/jdk.internal=ALL-UNNAMED --add-exports=java.base/jdk.internal.misc=ALL-UNNAMED --add-exports=java.base/sun.security.pkcs=ALL-UNNAMED --add-exports=java.base/sun.nio.ch=ALL-UNNAMED --add-opens java.base/jdk.internal.misc=ALL-UNNAMED

org.apache.hadoop.hbase.master.HMaster

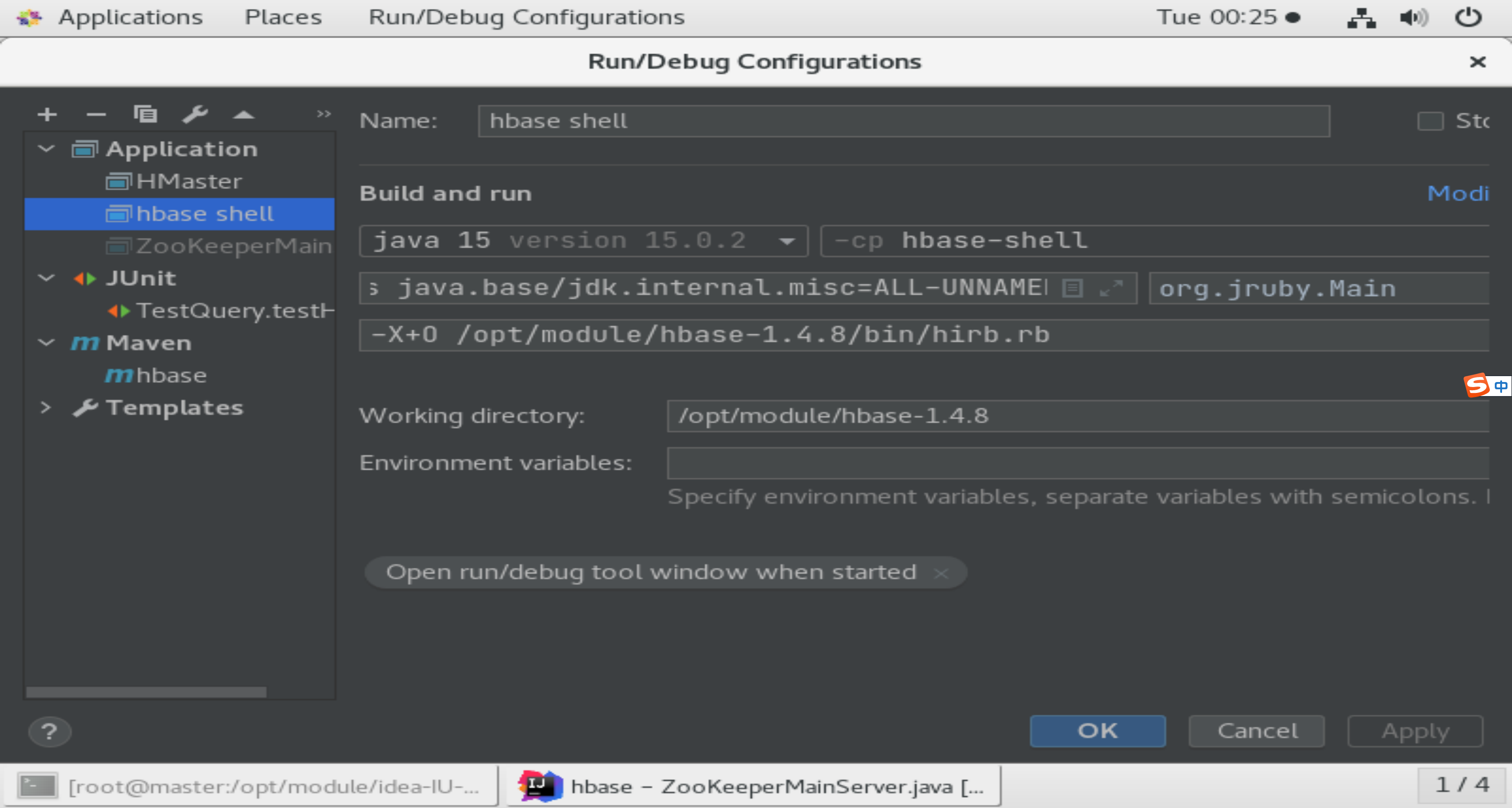

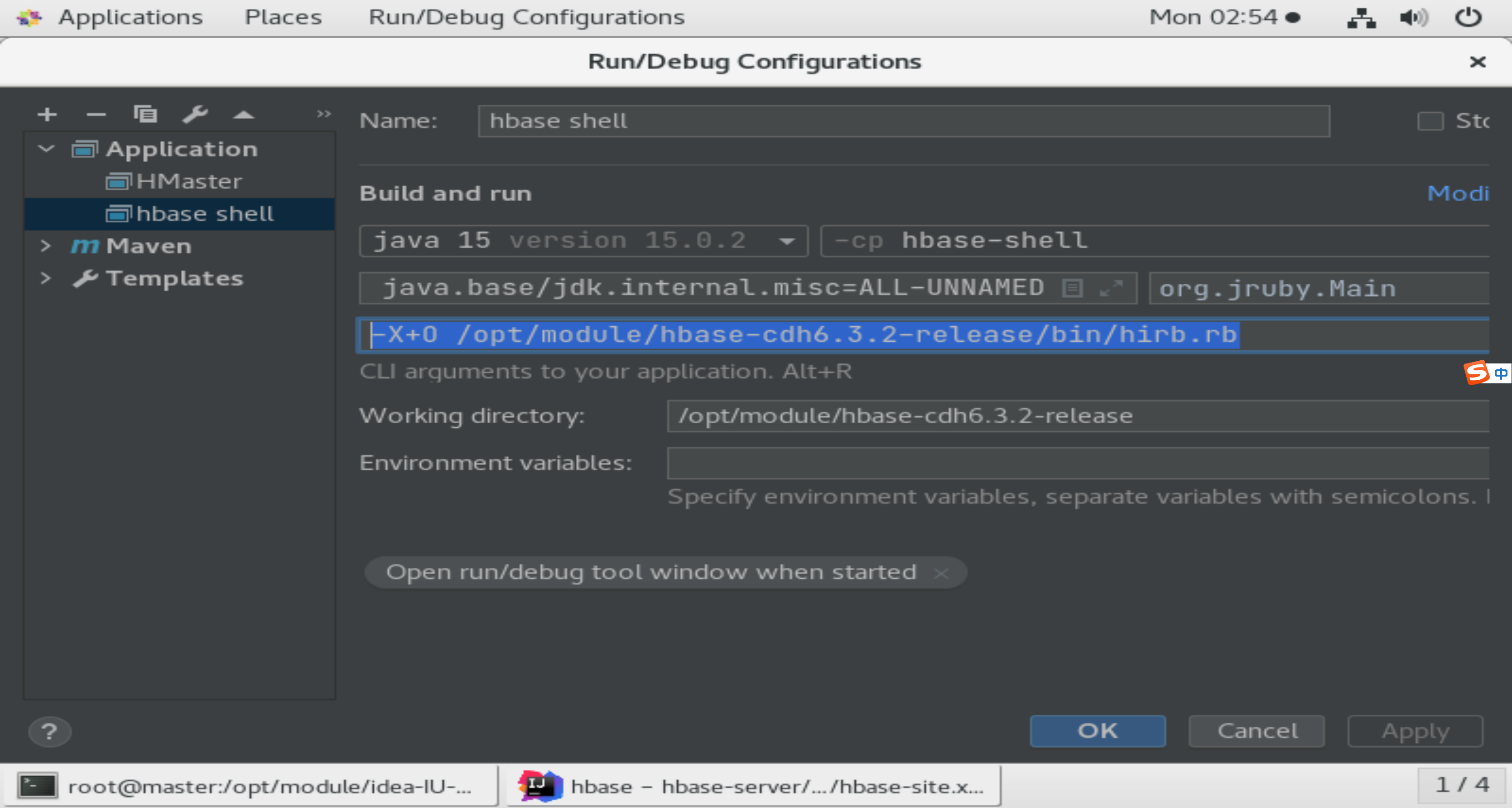

2) Configure the hbase shell run configuration

-Dhbase.ruby.sources=/opt/module/hbase-1.4.8/hbase-shell/src/main/ruby --add-exports=java.base/jdk.internal.access=ALL-UNNAMED --add-exports=java.base/jdk.internal=ALL-UNNAMED --add-exports=java.base/jdk.internal.misc=ALL-UNNAMED --add-exports=java.base/sun.security.pkcs=ALL-UNNAMED --add-exports=java.base/sun.nio.ch=ALL-UNNAMED --add-opens java.base/jdk.internal.misc=ALL-UNNAMED

org.jruby.Main

-X+O /opt/module/hbase-1.4.8/bin/hirb.rb

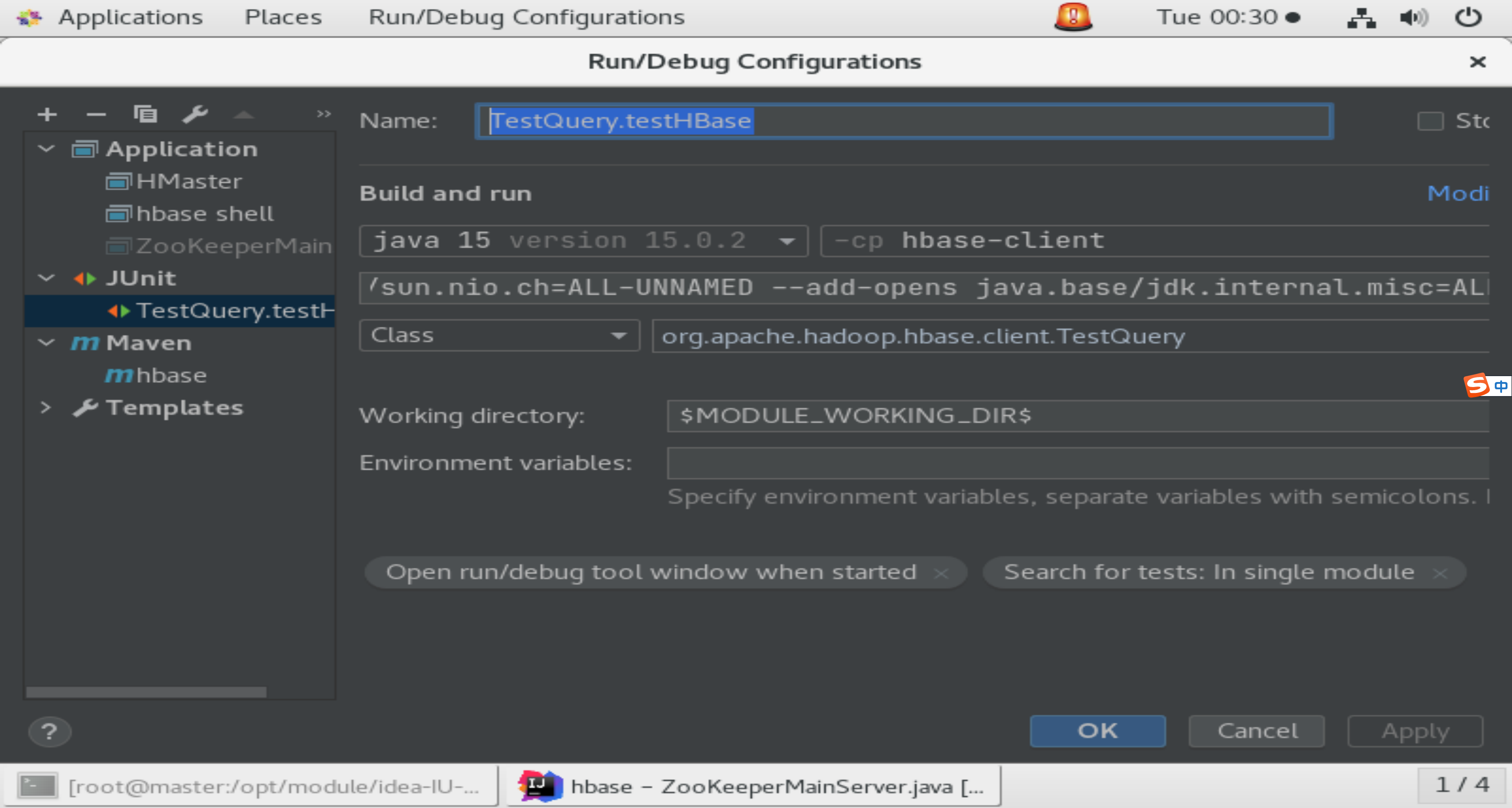

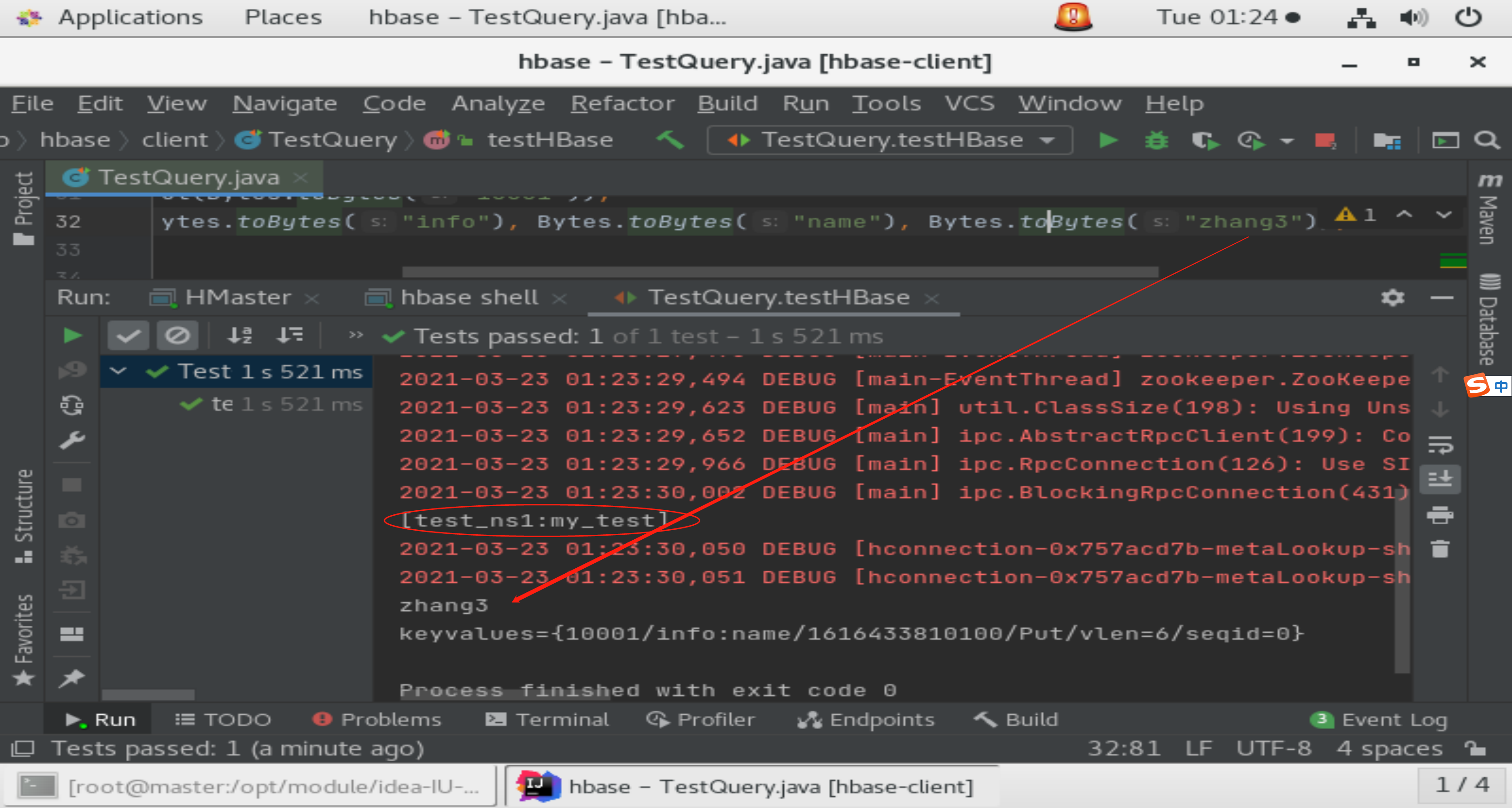

3) Configure the TestQuery.testHBase run configuration

-Dhbase.ruby.sources=/opt/module/hbase-1.4.8/hbase-shell/src/main/ruby --add-exports=java.base/jdk.internal.access=ALL-UNNAMED --add-exports=java.base/jdk.internal=ALL-UNNAMED --add-exports=java.base/jdk.internal.misc=ALL-UNNAMED --add-exports=java.base/sun.security.pkcs=ALL-UNNAMED --add-exports=java.base/sun.nio.ch=ALL-UNNAMED --add-opens java.base/jdk.internal.misc=ALL-UNNAMED

org.apache.hadoop.hbase.client.TestQuery

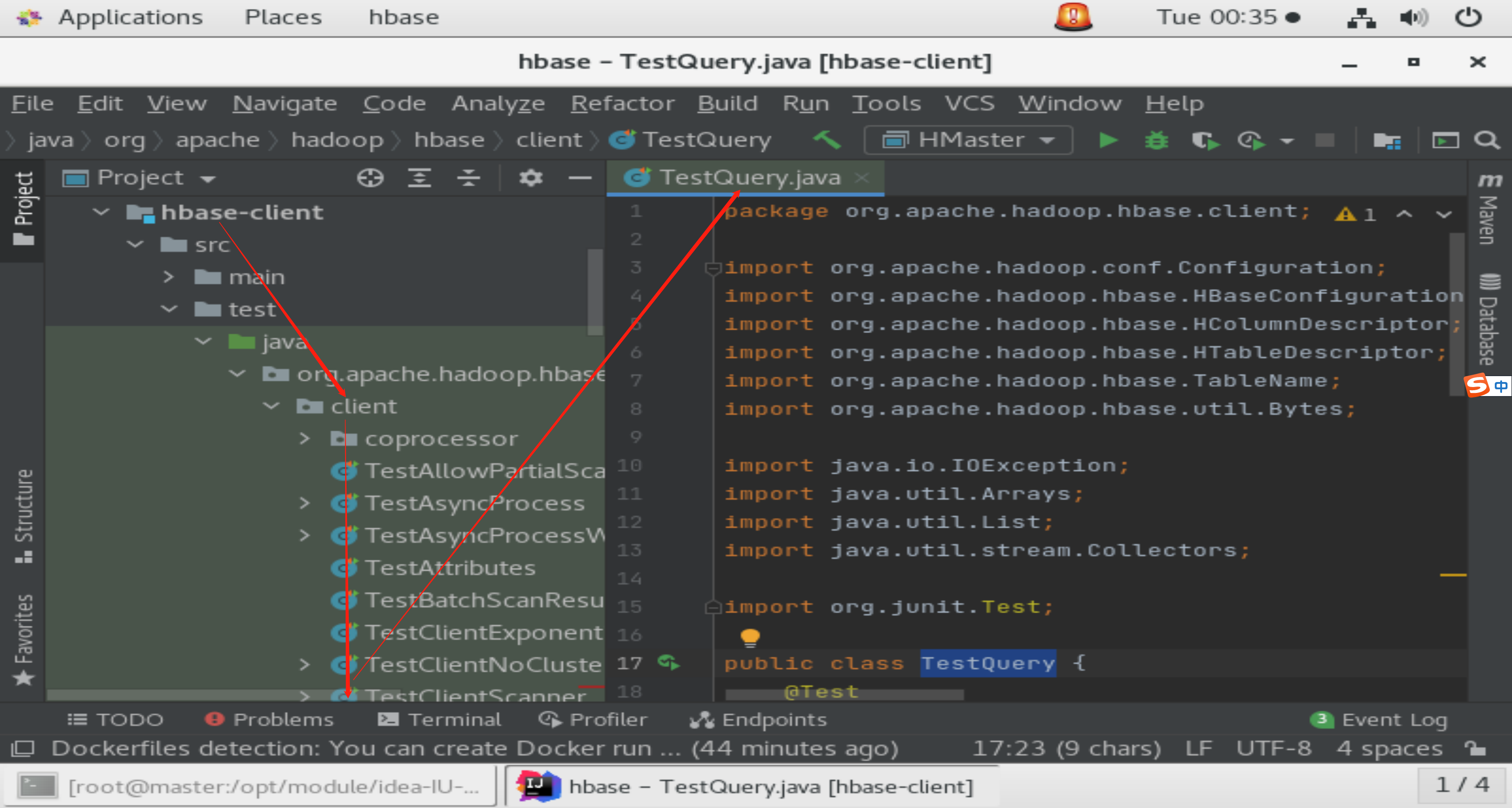

4) Write the TestQuery test class

package org.apache.hadoop.hbase.client;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

import java.util.Arrays;

import java.util.List;

import java.util.stream.Collectors;

import org.junit.Test;

public class TestQuery {

@Test

public void testHBase() {

Configuration conf = HBaseConfiguration.create();

// try-with-resources closes the Admin and the Connection even if an exception is thrown

try (Connection connection = ConnectionFactory.createConnection(conf);

Admin admin = connection.getAdmin()) {

HTableDescriptor tableDescriptor = new HTableDescriptor(TableName.valueOf("test_ns1:my_test"));

HColumnDescriptor columnDescriptor = new HColumnDescriptor(Bytes.toBytes("info"));

tableDescriptor.addFamily(columnDescriptor);

//admin.createTable(tableDescriptor);

final List<String> tables = Arrays.stream(admin.listTableNames()).map(TableName::getNameAsString).collect(Collectors.toList());

System.out.println(tables);

Table table = connection.getTable(TableName.valueOf("test_ns1:my_test"));

Put put = new Put(Bytes.toBytes("10001"));

put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("zhang3"));

table.put(put);

Get get = new Get(Bytes.toBytes("10001"));

Result result = table.get(get);

byte[] value = result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name"));

System.out.println(Bytes.toString(value));

System.out.println(result);

} catch (IOException e) {

e.printStackTrace();

}

}

}

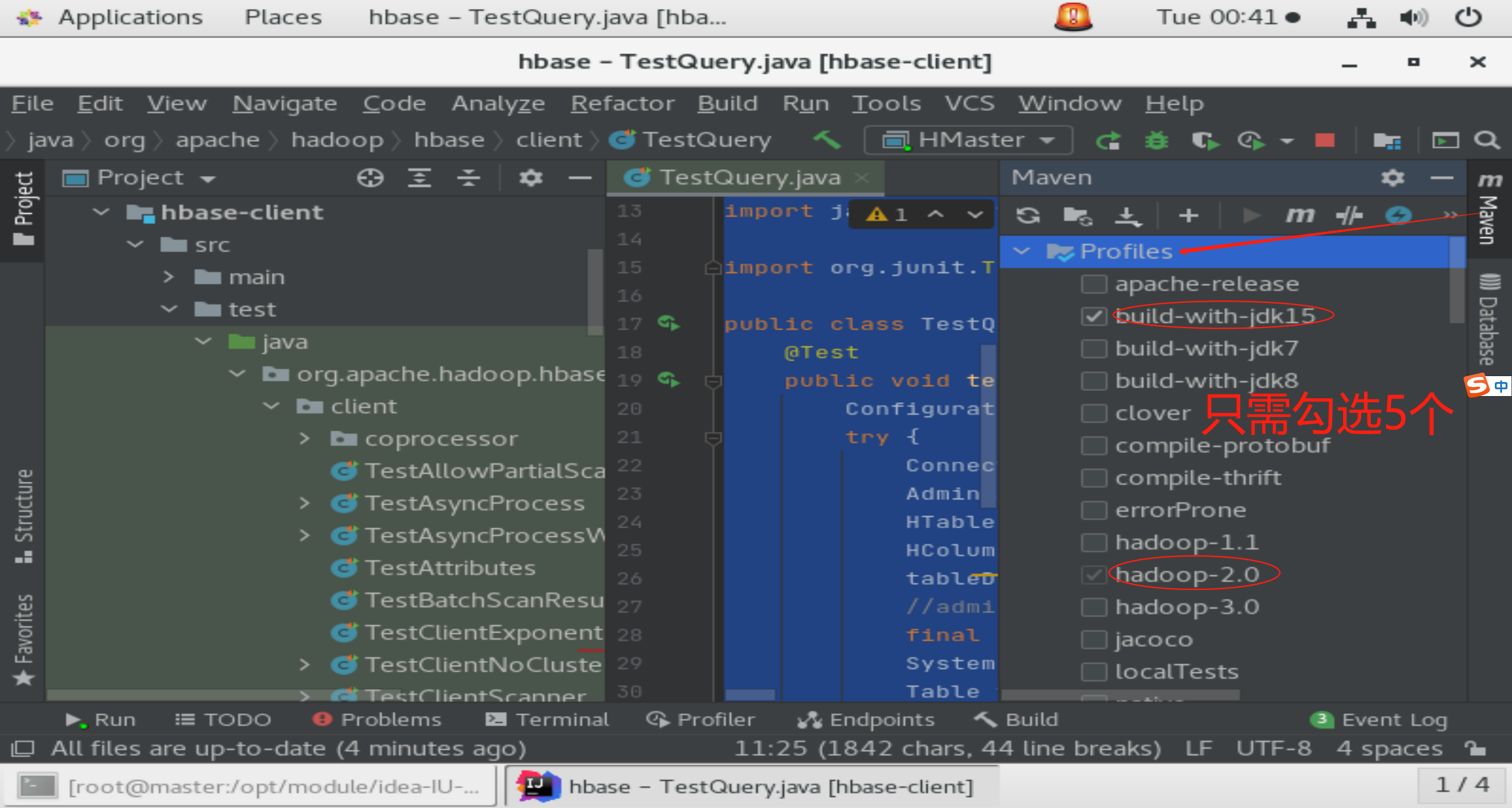

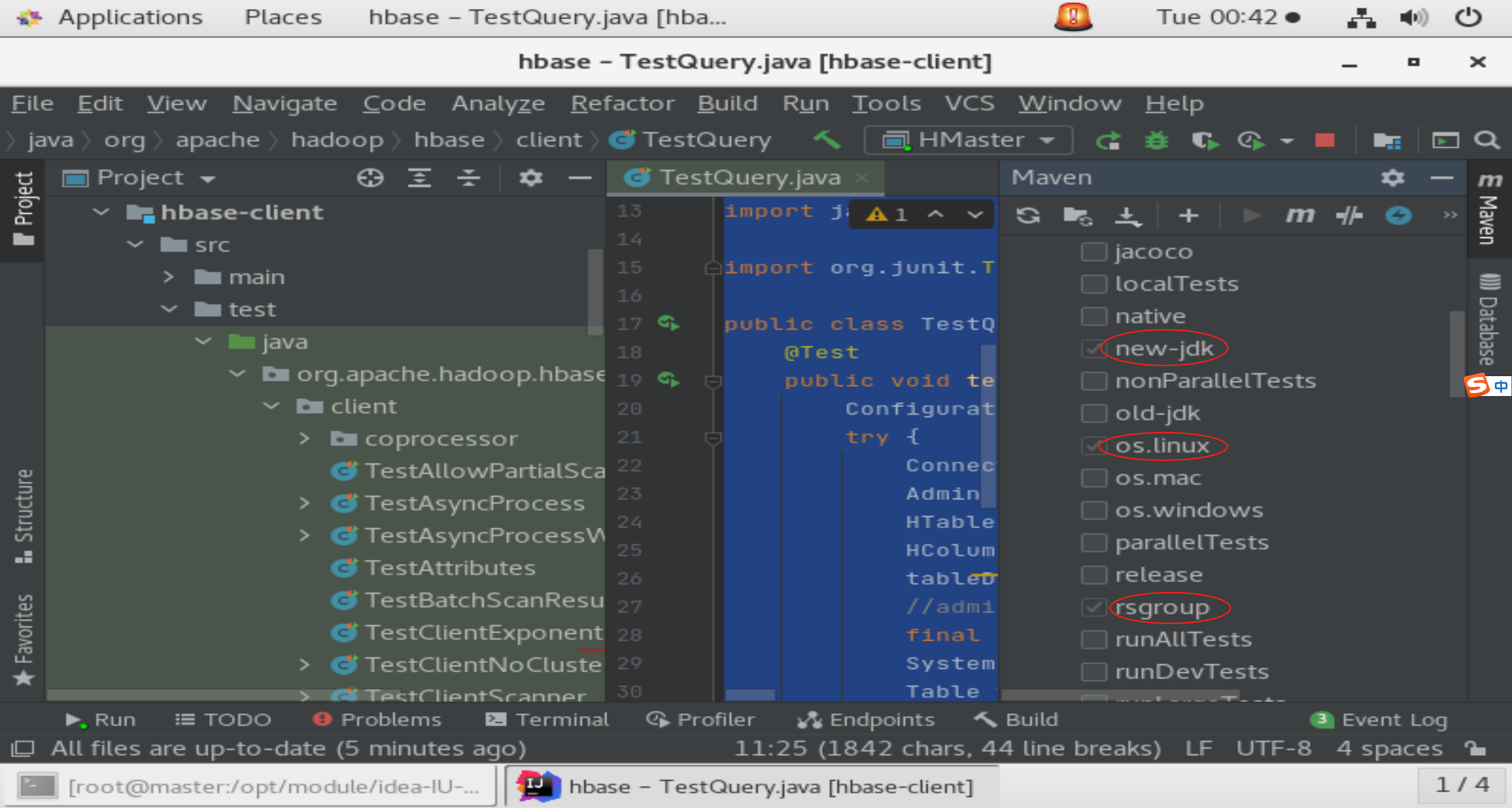

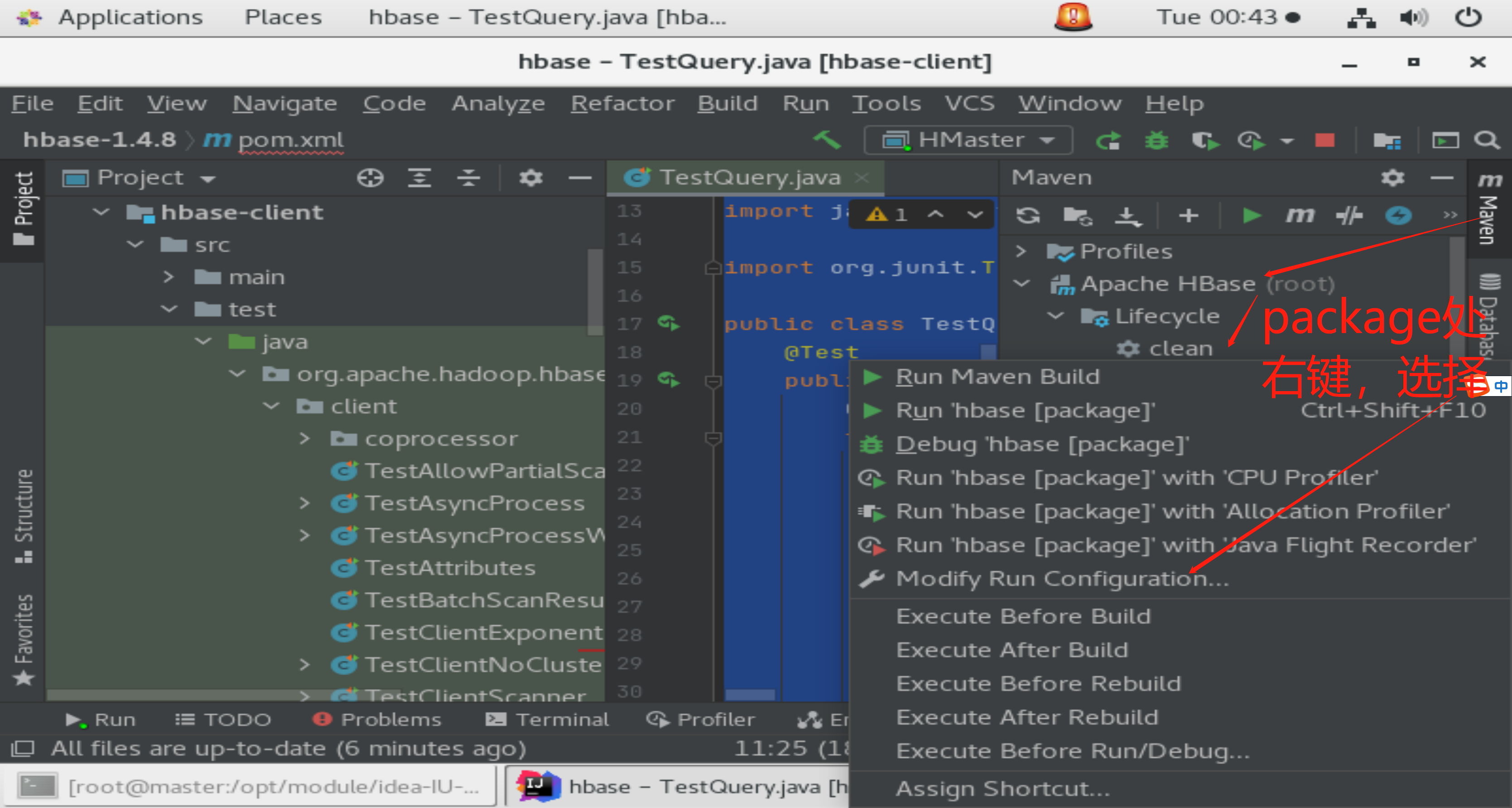

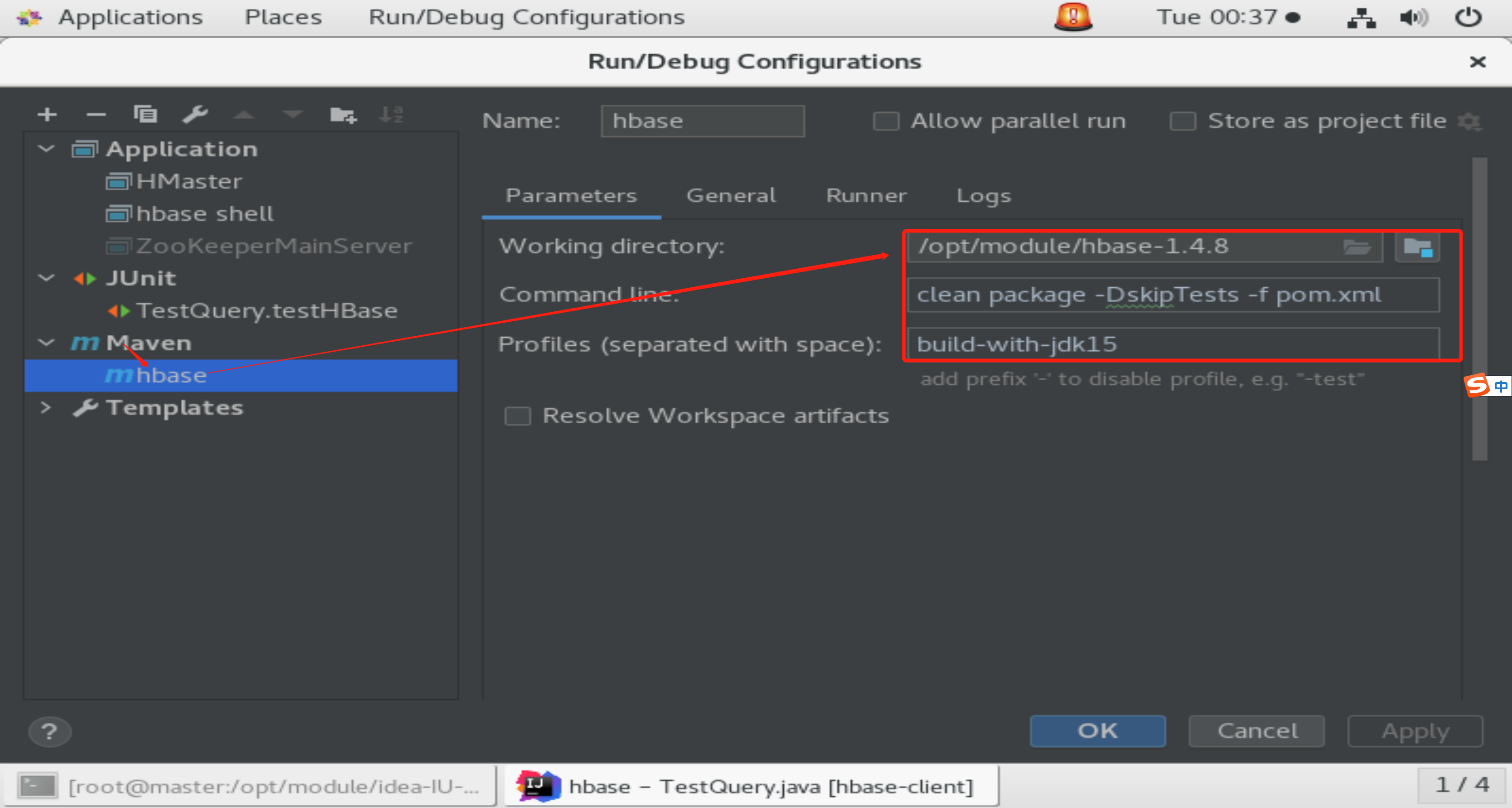

5) After the changes above, configure IDEA as follows so that it can build the project directly:

Then build. The changes turned out not to affect the build process.

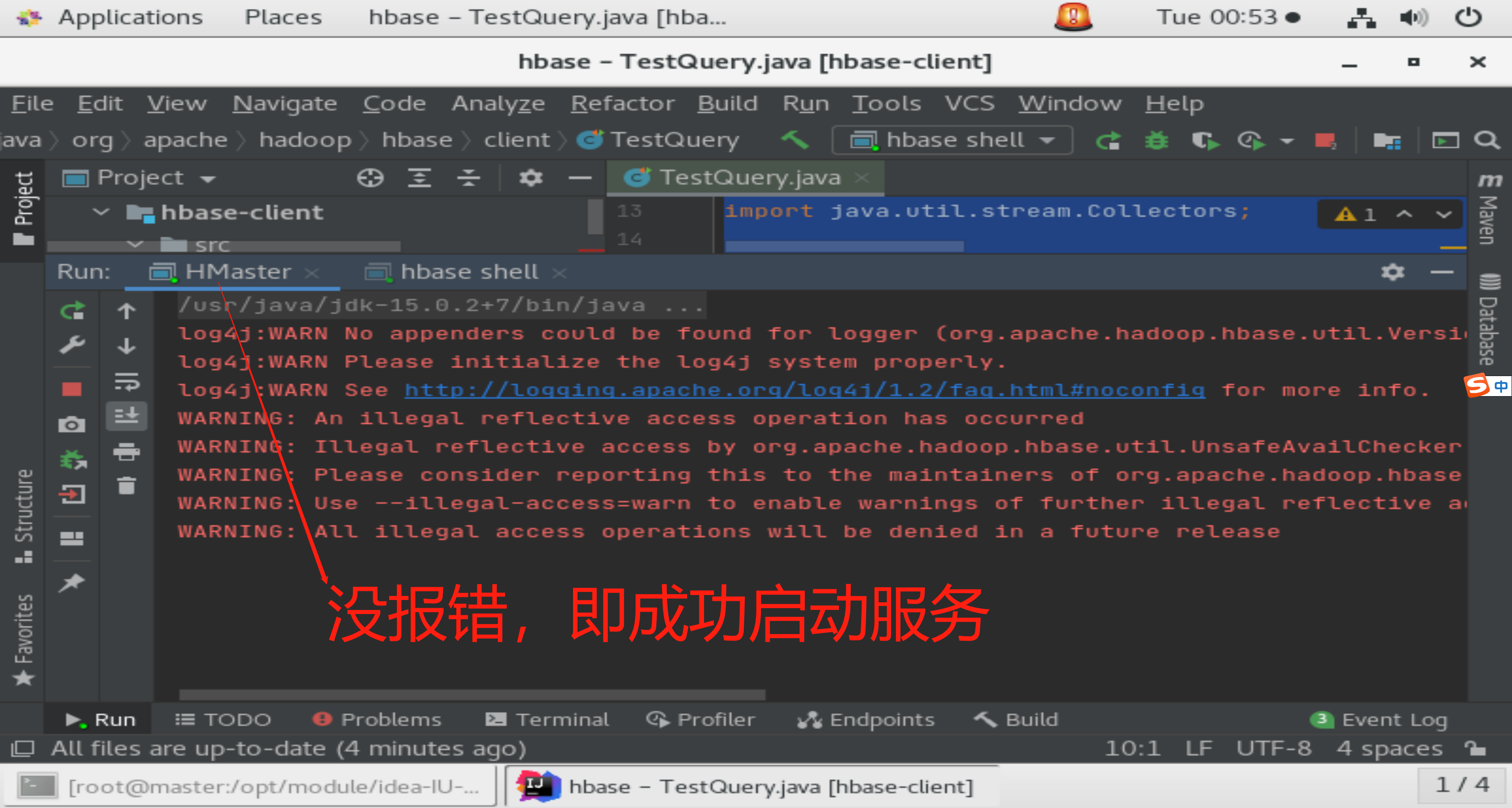

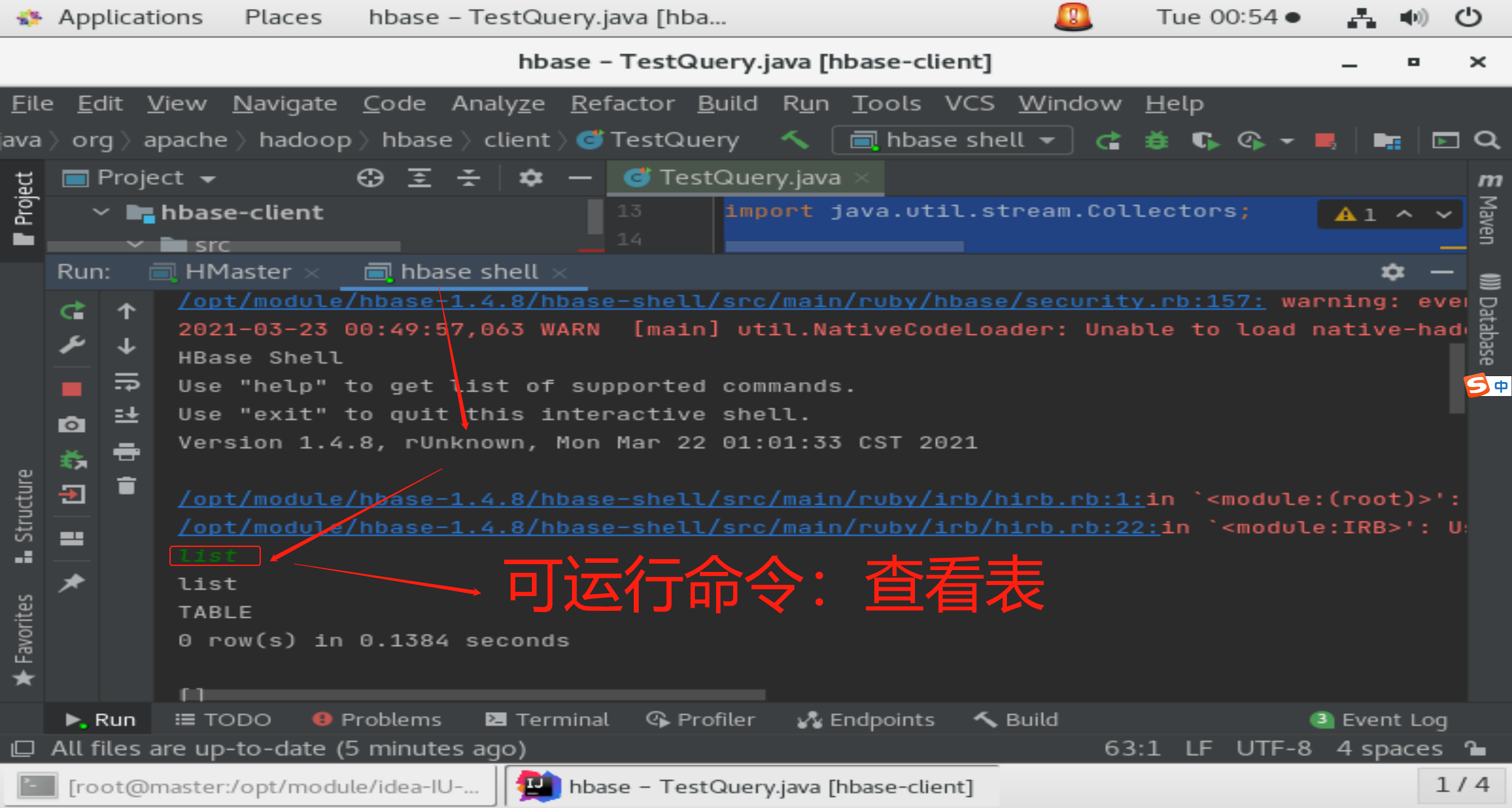

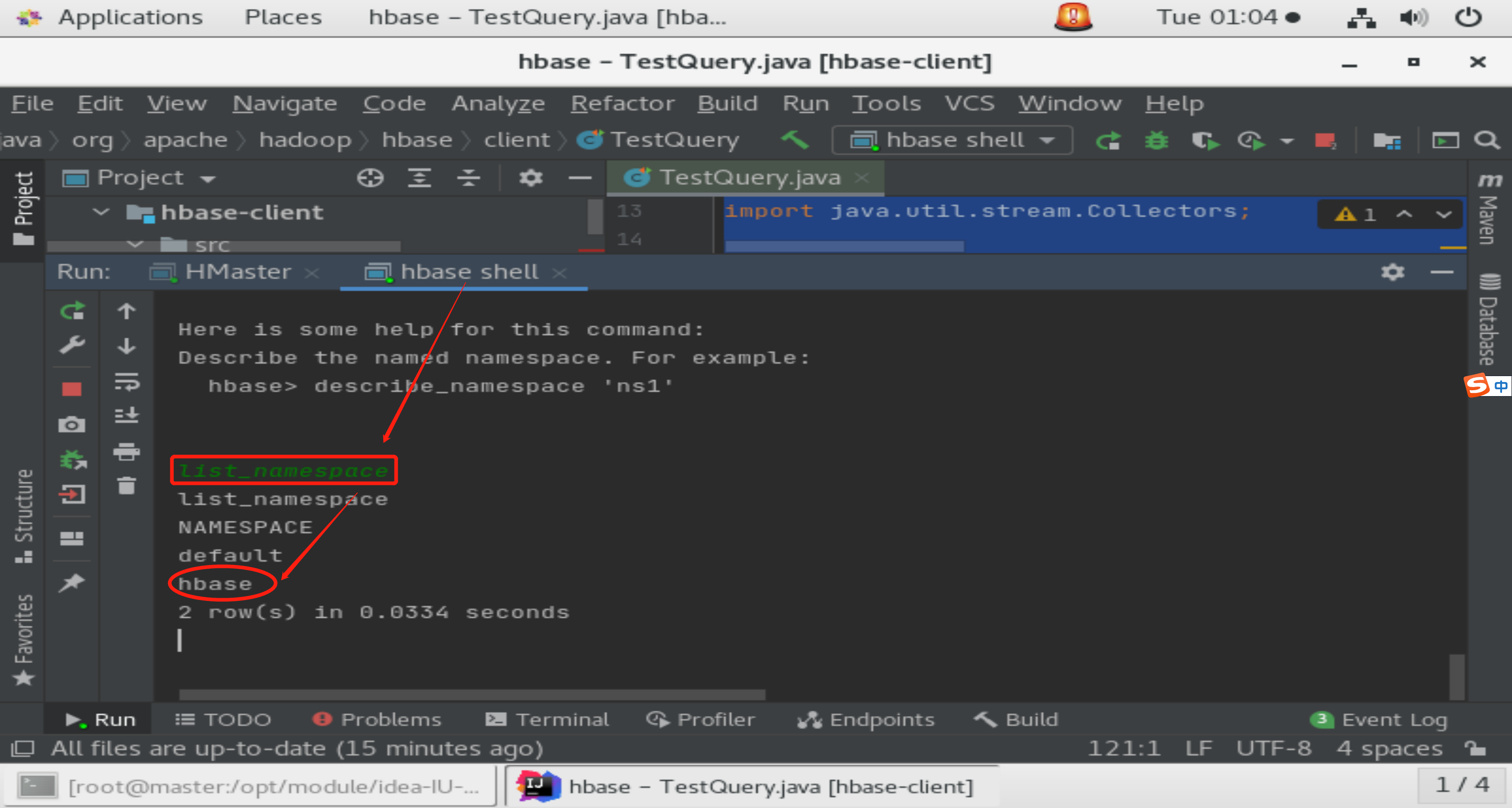

6) Start HMaster first, then start the hbase shell, and run TestQuery.java along the way

I suggest working through a few commands from "hbase shell-namespace (namespace commands)" to confirm that the hbase shell is usable.

- If anything is missing, please point it out and I will add it

Exploring HBase | Compiling and Deploying CDH HBase with OpenJDK 15 (hbase-cdh6.3.2)

Preparation

[root@master ~]# yum install -y protobuf protobuf-devel

[root@master ~]# protoc --version

libprotoc 2.5.0

[root@master ~]# mvn install:install-file -DgroupId=com.google.protobuf -DartifactId=protoc -Dversion=2.5.0 -Dclassifier=linux-aarch_64 -Dpackaging=exe -Dfile=/usr/bin/protoc

Download the source code from Cloudera's GitHub

[root@master soft]# tar -zxf hbase-cdh6.3.2-release.tar.gz -C /opt/module/

[root@master soft]# cd /opt/module/hbase-cdh6.3.2-release/

Since I have been through this once already, the steps below are abbreviated:

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

</repositories>

<!-- Old configuration -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>${maven.compiler.version}</version>

<configuration>

<source>${compileSource}</source>

<target>${compileSource}</target>

<showWarnings>true</showWarnings>

<showDeprecation>false</showDeprecation>

<useIncrementalCompilation>false</useIncrementalCompilation>

<compilerArgument>-Xlint:-options</compilerArgument>

</configuration>

</plugin>

<!-- New configuration -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>${maven.compiler.version}</version>

<configuration>

<source>${compileSource}</source>

<target>${compileSource}</target>

<showWarnings>true</showWarnings>

<showDeprecation>false</showDeprecation>

<useIncrementalCompilation>false</useIncrementalCompilation>

<compilerArgs>

<arg>--add-exports=java.base/jdk.internal.access=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/jdk.internal=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/jdk.internal.misc=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/sun.security.pkcs=ALL-UNNAMED</arg>

<arg>--add-exports=java.base/sun.nio.ch=ALL-UNNAMED</arg>

<!-- The add-opens entries below are optional -->

<arg>--add-opens=java.base/java.nio=ALL-UNNAMED</arg>

<arg>--add-opens=java.base/jdk.internal.misc=ALL-UNNAMED</arg>

</compilerArgs>

</configuration>

</plugin>

1. While compiling the hbase-protocol-shaded module, the build fails with: package javax.annotation does not exist

/opt/module/hbase-cdh6.3.2-release/hbase-protocol-shaded/target/generated-sources/protobuf/java/org/apache/hadoop/hbase/shaded/protobuf/generated/MasterProcedureProtos.java:[6,18] package javax.annotation does not exist

<dependencies>

<dependency>

<groupId>javax.annotation</groupId>

<artifactId>javax.annotation-api</artifactId>

<version>1.3.1</version>

</dependency>

</dependencies>

2. Failed to execute goal org.apache.maven.plugins:maven-shade-plugin:3.0.0:shade (default) on project hbase-protocol-shaded: Error creating shaded jar: null: IllegalArgumentException

Upgrade maven-shade-plugin to version 3.2.4

3. While compiling the hbase-it module, the build fails with: package javax.xml.ws.http does not exist

/opt/module/hbase-cdh6.3.2-release/hbase-it/src/test/java/org/apache/hadoop/hbase/RESTApiClusterManager.java:[39,25] package javax.xml.ws.http does not exist

<dependency>

<groupId>jakarta.xml.ws</groupId>

<artifactId>jakarta.xml.ws-api</artifactId>

<version>2.3.3</version>

</dependency>

4. hbase-shaded:

1) Rule 0: org.apache.maven.plugins.enforcer.EvaluateBeanshell failed with message:

License errors detected, for more detail find ERROR in

/opt/module/hbase-cdh6.3.2-release/hbase-shaded/target/maven-shared-archive-resources/META-INF/LICENSE

2) Failed to execute goal org.apache.maven.plugins:maven-enforcer-plugin:3.0.0-M2:enforce (check-aggregate-license) on project hbase-shaded: Some Enforcer rules have failed. Look above for specific messages explaining why the rule failed.

Fix for 1):

Search the root pom file for check-aggregate-license to find the skip element below:

<!-- <skip>${skip.license.check}</skip> -->

<skip>true</skip>

2) Once the check above is skipped, there is no need at all to upgrade maven-enforcer-plugin to 3.0.0-M3.

5. hbase-spark:

1) error: java.lang.NoClassDefFoundError: javax/tools/ToolProvider

2) Failed to execute goal net.alchim31.maven:scala-maven-plugin:3.3.1:compile (scala-compile-first) on project hbase-spark: wrap: org.apache.commons.exec.ExecuteException: Process exited with an error: 240 (Exit value: 240)

Changing <arg>-feature</arg> to <arg>-nobootcp</arg> is enough. There is no need to bump the scala-maven-plugin version to 4.4.0, let alone to change the default 3.3.1 to 3.4.6.

## Startup

-Dproc_master

-XX:OnOutOfMemoryError="kill -9 %p"

-XX:+UnlockExperimentalVMOptions

-XX:+UseZGC

-Dhbase.log.dir=/root/jcz/logs

-Dhbase.log.file=hbase-root-master.log

-Dhbase.home.dir=/opt/module/hbase-cdh6.3.2-release

-Dhbase.id.str=root

-Dhbase.root.logger=INFO,console,DRFA

--illegal-access=deny

--add-exports=java.base/jdk.internal.access=ALL-UNNAMED

--add-exports=java.base/jdk.internal=ALL-UNNAMED

--add-exports=java.base/jdk.internal.misc=ALL-UNNAMED

--add-exports=java.base/sun.security.pkcs=ALL-UNNAMED

--add-exports=java.base/sun.nio.ch=ALL-UNNAMED

--add-opens=java.base/java.nio=ALL-UNNAMED

--add-opens java.base/jdk.internal.misc=ALL-UNNAMED

-Dorg.apache.hbase.thirdparty.io.netty.tryReflectionSetAccessible=true

org.apache.hadoop.hbase.master.HMaster
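To confirm that the -XX:+UseZGC option above actually took effect, one option is to ask the JVM which collectors it registered. A small sketch using the standard java.lang.management API; the class name GcCheck and the "starts with ZGC" heuristic are my own assumptions, since the exact bean names differ between JDK releases:

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Sketch: report the garbage collectors of the running JVM and guess whether ZGC is active.
final class GcCheck {

    /** Names of the collector beans registered in this JVM. */
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean bean : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(bean.getName());
        }
        return names;
    }

    /** Heuristic (assumption): ZGC beans have names beginning with "ZGC". */
    static boolean looksLikeZgc(List<String> names) {
        for (String name : names) {
            if (name.startsWith("ZGC")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<String> names = collectorNames();
        System.out.println("JDK feature version: " + Runtime.version().feature());
        System.out.println("Collectors: " + names);
        System.out.println("ZGC active (heuristic): " + looksLikeZgc(names));
    }
}
```

Launching it with the same -XX:+UnlockExperimentalVMOptions -XX:+UseZGC flags as HMaster makes for a quick sanity check before starting the real process.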

<property>

<!-- This setting skips the version check -->

<name>hbase.defaults.for.version.skip</name>

<value>true</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>16010</value>

</property>

<property>

<name>hbase.regionserver.info.port</name>

<value>16030</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>file:///Users/mac/other_project/cloudera/jdk15/cloudera-hbase/hbase-data/hbase</value>

<description>Local HBase data directory</description>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/Users/mac/other_project/cloudera/jdk15/cloudera-hbase/hbase-data/zookeeper-data</value>

<description>Data directory of HBase's built-in ZooKeeper</description>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

-Dhbase.ruby.sources=/opt/module/hbase-cdh6.3.2-release/hbase-shell/src/main/ruby --add-exports=java.base/jdk.internal.access=ALL-UNNAMED --add-exports=java.base/jdk.internal=ALL-UNNAMED --add-exports=java.base/jdk.internal.misc=ALL-UNNAMED --add-exports=java.base/sun.security.pkcs=ALL-UNNAMED --add-exports=java.base/sun.nio.ch=ALL-UNNAMED --add-opens java.base/jdk.internal.misc=ALL-UNNAMED

org.jruby.Main

-X+O /opt/module/hbase-cdh6.3.2-release/bin/hirb.rb

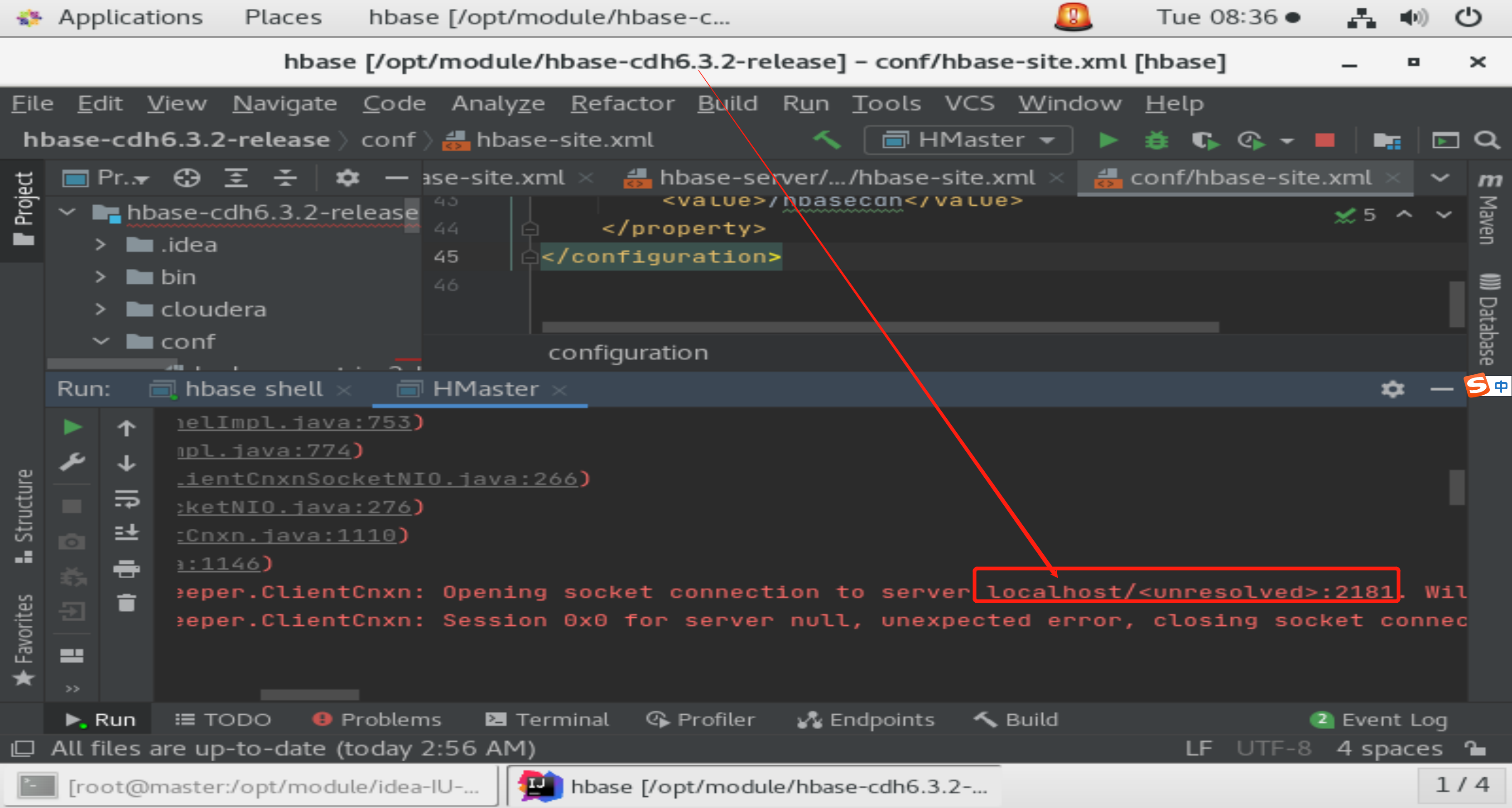

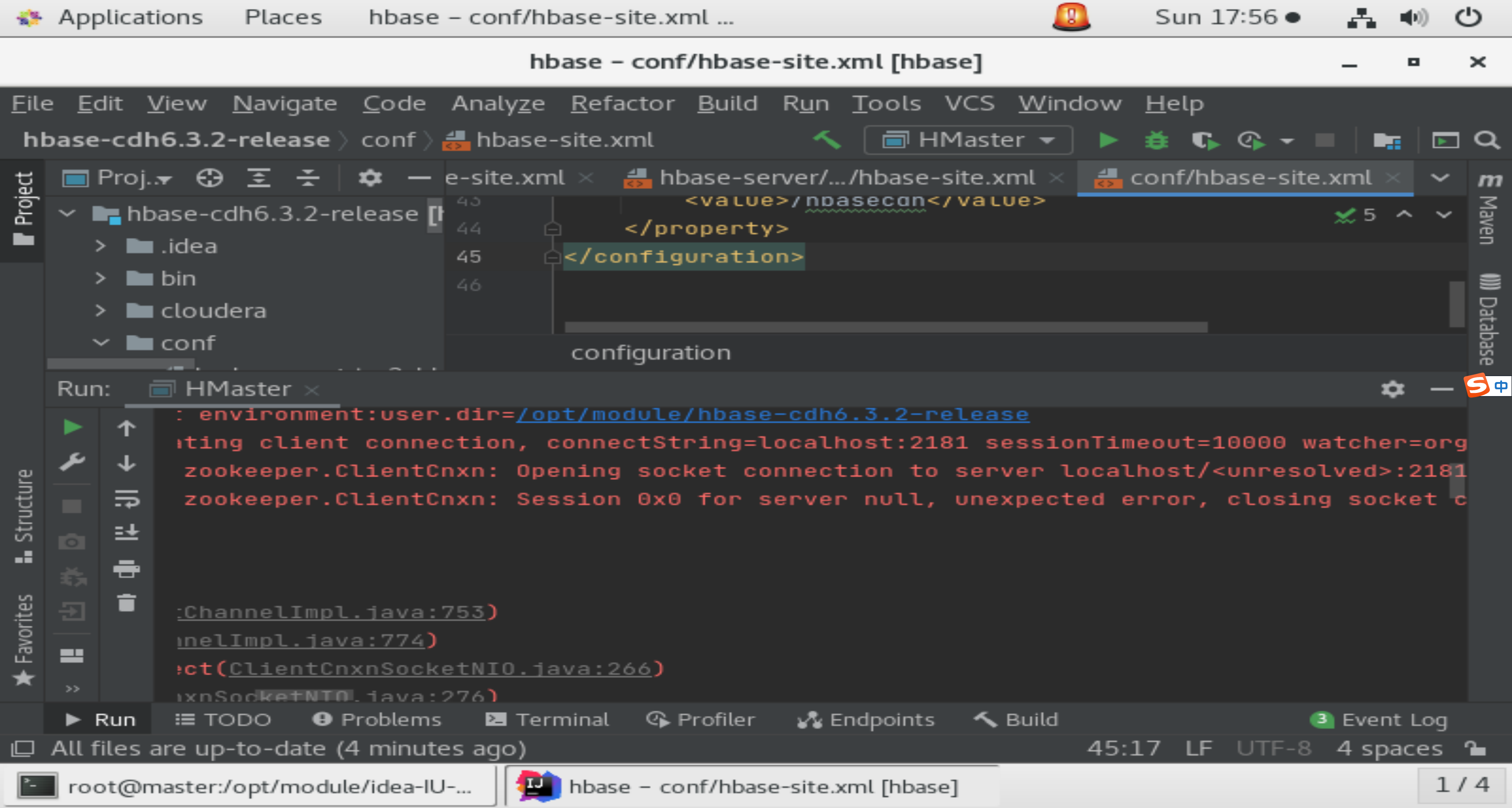

Copy the conf directory from above in the same way, but this time it does not need to be marked as Resources (see the figure below)

Starting HMaster at this point fails with: localhost/:2181

Fixing the errors step by step

1) Download and extract the zookeeper-3.4.5-cdh6.3.2 source, open it in IDEA, configure maven-3.5.4, and then make the following change:

private void init(Collection<InetSocketAddress> serverAddresses) {

try{

for (InetSocketAddress address : serverAddresses) {

InetAddress ia = address.getAddress();

InetAddress resolvedAddresses[] = InetAddress.getAllByName((ia!=null) ? ia.getHostAddress():

address.getHostName());

for (InetAddress resolvedAddress : resolvedAddresses) {

if (resolvedAddress.toString().startsWith("/")

&& resolvedAddress.getAddress() != null) {

this.serverAddresses.add(

new InetSocketAddress(InetAddress.getByAddress(

address.getHostName(),

resolvedAddress.getAddress()),

address.getPort()));

} else {

this.serverAddresses.add(new InetSocketAddress(resolvedAddress.getHostAddress(), address.getPort()));

}

}

}

}catch (UnknownHostException e){

throw new IllegalArgumentException("UnknownHostException was caught!", e);

}

// Added the 21 lines above and commented out the addAll line below

if (serverAddresses.isEmpty()) {

throw new IllegalArgumentException(

"A HostProvider may not be empty!");

}

//this.serverAddresses.addAll(serverAddresses);

Collections.shuffle(this.serverAddresses);

}
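The `toString().startsWith("/")` test in the patched init method relies on how java.net.InetAddress prints itself: the host name, a slash, then the literal address, with the host name left empty when the address object carries none. A small sketch of that behaviour; the class name AddressFormSketch and the host name zk-host.example are made up for illustration:

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Sketch: how InetAddress.toString() distinguishes addresses with and without a host name.
final class AddressFormSketch {

    /** True when the address carries no host name, i.e. its text form starts with "/". */
    static boolean lacksHostName(InetAddress address) {
        return address.toString().startsWith("/");
    }

    /** Re-creates the address with an explicit host name; no name service lookup is performed. */
    static InetAddress withHostName(String host, InetAddress address) throws UnknownHostException {
        return InetAddress.getByAddress(host, address.getAddress());
    }

    public static void main(String[] args) throws UnknownHostException {
        InetAddress bare = InetAddress.getByAddress(new byte[] {127, 0, 0, 1});
        System.out.println(bare + " lacks host name: " + lacksHostName(bare));

        InetAddress named = withHostName("zk-host.example", bare);
        System.out.println(named + " lacks host name: " + lacksHostName(named));
    }
}
```

This is the same getByAddress(host, bytes) call the patch uses to attach the configured host name to each resolved address.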

Extension: building zookeeper-cdh6.3.2-release

[root@master soft]# wget -P ./ https://mirrors.tuna.tsinghua.edu.cn/apache/ant/binaries/apache-ant-1.10.9-bin.tar.gz

[root@master soft]# tar -zxvf apache-ant-1.10.9-bin.tar.gz -C /opt/module/

[root@master soft]# vi /etc/profile

# export JAVA_HOME1=/usr/java/jdk1.8.0_171

# export CLASSPATH=.:${JAVA_HOME1}/jre/lib/rt.jar:${JAVA_HOME1}/lib/dt.jar:${JAVA_HOME1}/lib/tools.jar

export JAVA_HOME=/usr/java/jdk1.8.0_171

export CLASSPATH=.:${JAVA_HOME}/jre/lib/rt.jar:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

# export JAVA_HOME=/usr/java/jdk-15.0.2+7

export PATH=${JAVA_HOME}/bin:${PATH}

MAVEN_HOME=/opt/module/apache-maven-3.5.4

export PATH=${MAVEN_HOME}/bin:${PATH}

export ANT_HOME=/opt/module/apache-ant-1.10.9

export PATH=$PATH:$ANT_HOME/bin

[root@master soft]# source /etc/profile

[root@master soft]# ant -version

Apache Ant(TM) version 1.10.9 compiled on September 27 2020

[root@master soft]# cd /opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/

[root@master zookeeper-cdh6.3.2-release]# ant

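Note: the downloaded Ant release requires JDK 1.8 at minimum, and this build does not accept JDK 15; see error 3) below.

1) If this error appears: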

[ERROR] Malformed POM /opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/cloudera/maven-packaging/pom.xml: Unrecognised tag: 'pluginRepositories' (position: START_TAG seen ...</repository>n <pluginRepositories>... @72:25) @ /opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/cloudera/maven-packaging/pom.xml, line 72, column 25

This is because, in step d of the figure above, pluginRepositories must be placed after </repositories>, not before it.

2) If this error appears:

[ERROR] Some problems were encountered while processing the POMs:

[exec] [FATAL] Non-resolvable parent POM for com.cloudera.cdh:zookeeper-root:3.4.5-cdh6.3.2: Failure to find com.cloudera.cdh:cdh-root:pom:6.3.2 in http://maven.aliyun.com/nexus/content/groups/public was cached in the local repository, resolution will not be reattempted until the update interval of aliyun has elapsed or updates are forced and 'parent.relativePath' points at wrong local POM @ com.cloudera.cdh:zookeeper-root:3.4.5-cdh6.3.2, /opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/cloudera/maven-packaging/pom.xml, line 21, column 11

Solution:

[root@master zookeeper-cdh6.3.2-release]# cp cloudera-pom.xml my-pom.xml

[root@master zookeeper-cdh6.3.2-release]# vi my-pom.xml

Delete the corresponding block and add the following in its place:

<groupId>com.cloudera.cdh</groupId>

<artifactId>cdh-root</artifactId>

<version>6.3.2</version>

<packaging>pom</packaging>

<properties>

<cdh.slf4j.version>1.7.25</cdh.slf4j.version>

<cdh.log4j.version>1.2.17</cdh.log4j.version>

<cdh.jline.version>2.11</cdh.jline.version>

<cdh.io.netty.version>3.10.6.Final</cdh.io.netty.version>

<cdh.junit.version>4.12</cdh.junit.version>

<cdh.mockito.version>1.8.5</cdh.mockito.version>

<cdh.checkstyle.version>5.0</cdh.checkstyle.version>

<cdh.jdiff.version>1.0.9</cdh.jdiff.version>

<cdh.xerces.version>1.4.4</cdh.xerces.version>

<cdh.rat.version>0.6</cdh.rat.version>

<cdh.commons-lang.version>2.4</cdh.commons-lang.version>

<cdh.commons-collections.version>3.2.2</cdh.commons-collections.version>

</properties>

3) If this error appears:

BUILD FAILED

/opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/build.xml:1874: Incorrect JVM, current = 15.0.2, required 1.8.

Solution: switch the JDK from 15 to 1.8.
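As a rough illustration of what such a JVM guard compares, the sketch below reads the standard `java.specification.version` system property. Whether build.xml reads this exact property is an assumption on my part; the class name `JvmVersionCheck` is hypothetical. Java 8 reports `1.8` here, while Java 9 and later report a plain major number such as `15`.

```java
// Hypothetical helper, only meant to show why a "required 1.8" check rejects JDK 15.
public class JvmVersionCheck {

    // Java 8 reports "1.8"; Java 9+ report "9", "10", ..., "15".
    static boolean isJava8(String specVersion) {
        return "1.8".equals(specVersion);
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.specification.version");
        String verdict = isJava8(version) ? "accepted" : "rejected by a 1.8-only check";
        System.out.println("java.specification.version = " + version + " -> " + verdict);
    }
}
```

Running this with each JDK after `source /etc/profile` is a quick way to confirm which JDK the shell actually picks up before starting ant.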

[root@master zookeeper-cdh6.3.2-release]# ant compile-native

The following error remains unresolved for now:

compile-native:

[exec] configure.ac:37: warning: macro 'AM_PATH_CPPUNIT' not found in library

[exec] libtoolize: putting auxiliary files in `.'.

[exec] libtoolize: copying file `./ltmain.sh'

[exec] libtoolize: Consider adding `AC_CONFIG_MACRO_DIR([m4])' to configure.ac and

[exec] libtoolize: rerunning libtoolize, to keep the correct libtool macros in-tree.

[exec] libtoolize: Consider adding `-I m4' to ACLOCAL_AMFLAGS in Makefile.am.

[exec] configure.ac:37: warning: macro 'AM_PATH_CPPUNIT' not found in library

[exec] configure.ac:37: error: possibly undefined macro: AM_PATH_CPPUNIT

[exec] If this token and others are legitimate, please use m4_pattern_allow.

[exec] See the Autoconf documentation.

[exec] autoreconf: /usr/bin/autoconf failed with exit status: 1

BUILD FAILED

/opt/soft/my-zookeeper-cdh6.3.2-release/zookeeper-cdh6.3.2-release/build.xml:470: exec returned: 1

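The ant compile-native failure does not affect the rest of the build. I went through a lot of material without resolving it:

Reference 1

Reference 2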

[root@master zookeeper-cdh6.3.2-release]# ant package tar

Everything above was groundwork. The StaticHostProvider class cannot be compiled directly with javac, because it fails with:

[root@master zookeeper-cdh6.3.2-release]# cd /opt/soft/my_mvn/

[root@master my_mvn]# javac StaticHostProvider.java

StaticHostProvider.java:30: error: package org.apache.yetus.audience does not exist

import org.apache.yetus.audience.InterfaceAudience;

^

StaticHostProvider.java:31: error: package org.slf4j does not exist

import org.slf4j.Logger;

^

StaticHostProvider.java:32: error: package org.slf4j does not exist

import org.slf4j.LoggerFactory;

^

StaticHostProvider.java:42: error: package InterfaceAudience does not exist

@InterfaceAudience.Public

^

StaticHostProvider.java:48: error: cannot find symbol

private static final Logger LOG = LoggerFactory

^

symbol: class Logger

location: class StaticHostProvider

StaticHostProvider.java:48: error: cannot find symbol

private static final Logger LOG = LoggerFactory

^

symbol: variable LoggerFactory

location: class StaticHostProvider

6 errors

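In the end, his approach (the one from the referenced article) is still the simplest, as follows:

1) The pom.xml shown in the figure above contains: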

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<cdh.slf4j.version>1.7.25</cdh.slf4j.version>

<cdh.log4j.version>1.2.17</cdh.log4j.version>

<cdh.jline.version>2.11</cdh.jline.version>

<cdh.io.netty.version>3.10.6.Final</cdh.io.netty.version>

<cdh.junit.version>4.12</cdh.junit.version>

<cdh.mockito.version>1.8.5</cdh.mockito.version>

<cdh.checkstyle.version>5.0</cdh.checkstyle.version>

<cdh.jdiff.version>1.0.9</cdh.jdiff.version>

<cdh.xerces.version>1.4.4</cdh.xerces.version>

<cdh.rat.version>0.6</cdh.rat.version>

<cdh.commons-lang.version>2.4</cdh.commons-lang.version>

<cdh.commons-collections.version>3.2.2</cdh.commons-collections.version>

</properties>

<dependencies>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${cdh.slf4j.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${cdh.slf4j.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${cdh.log4j.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>jline</groupId>

<artifactId>jline</artifactId>

<version>${cdh.jline.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty</artifactId>

<version>${cdh.io.netty.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.apache.yetus</groupId>

<artifactId>audience-annotations</artifactId>

<version>0.5.0</version>

<!--jcz 2021-03-28-->

<scope>compile</scope>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>${cdh.junit.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.mockito</groupId>

<artifactId>mockito-all</artifactId>

<version>${cdh.mockito.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>checkstyle</groupId>

<artifactId>checkstyle</artifactId>

<version>${cdh.checkstyle.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>jdiff</groupId>

<artifactId>jdiff</artifactId>

<version>${cdh.jdiff.version}</version>

<optional>true</optional>

<!--jcz 2021-03-28-->

<scope>test</scope>

</dependency>

<dependency>

<groupId>xerces</groupId>

<artifactId>xerces</artifactId>

<version>${cdh.xerces.version}</version>

<optional>true</optional>

<!--jcz 2021-03-28-->

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.rat</groupId>

<artifactId>apache-rat-tasks</artifactId>

<version>${cdh.rat.version}</version>

<optional>true</optional>

<!--jcz 2021-03-28-->

<scope>test</scope>

</dependency>

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>${cdh.commons-lang.version}</version>

<optional>true</optional>

<!--jcz 2021-03-28-->

<scope>test</scope>

</dependency>

<dependency>

<groupId>commons-collections</groupId>

<artifactId>commons-collections</artifactId>

<version>${cdh.commons-collections.version}</version>

<optional>true</optional>

<!--jcz 2021-03-28-->

<scope>test</scope>

</dependency>

</dependencies>

</project>

2) The complete StaticHostProvider class after the modification is:

/**

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.zookeeper.client;

import java.net.InetAddress;

import java.net.InetSocketAddress;

import java.net.UnknownHostException;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collection;

import java.util.Collections;

import java.util.List;

import org.apache.yetus.audience.InterfaceAudience;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* Most simple HostProvider, resolves on every next() call.

*

* Please be aware that although this class doesn't do any DNS caching, there're multiple levels of caching already

* present across the stack like in JVM, OS level, hardware, etc. The best we could do here is to get the most recent

* address from the underlying system which is considered up-to-date.

*

*/

@InterfaceAudience.Public

public final class StaticHostProvider implements HostProvider {

public interface Resolver {

InetAddress[] getAllByName(String name) throws UnknownHostException;

}

private static final Logger LOG = LoggerFactory

.getLogger(StaticHostProvider.class);

private final List<InetSocketAddress> serverAddresses = new ArrayList<InetSocketAddress>(5);

private int lastIndex = -1;

private int currentIndex = -1;

private Resolver resolver;

/**

* Constructs a SimpleHostSet.

*

* @param serverAddresses

* possibly unresolved ZooKeeper server addresses

* @throws IllegalArgumentException

* if serverAddresses is empty or resolves to an empty list

*/

public StaticHostProvider(Collection<InetSocketAddress> serverAddresses) {

this.resolver = new Resolver() {

@Override

public InetAddress[] getAllByName(String name) throws UnknownHostException {

return InetAddress.getAllByName(name);

}

};

init(serverAddresses);

}

/**

* Introduced for testing purposes. getAllByName() is a static method of InetAddress, therefore cannot be easily mocked.

* By abstraction of Resolver interface we can easily inject a mocked implementation in tests.

*

* @param serverAddresses

* possibly unresolved ZooKeeper server addresses

* @param resolver

* custom resolver implementation

* @throws IllegalArgumentException

* if serverAddresses is empty or resolves to an empty list

*/

public StaticHostProvider(Collection<InetSocketAddress> serverAddresses, Resolver resolver) {

this.resolver = resolver;

init(serverAddresses);

}

/**

* Common init method for all constructors.

* Resolve all unresolved server addresses, put them in a list and shuffle.

*/

private void init(Collection<InetSocketAddress> serverAddresses) {

try{

for (InetSocketAddress address : serverAddresses) {

InetAddress ia = address.getAddress();

InetAddress resolvedAddresses[] = InetAddress.getAllByName((ia!=null) ? ia.getHostAddress():

address.getHostName());

for (InetAddress resolvedAddress : resolvedAddresses) {

if (resolvedAddress.toString().startsWith("/")

&& resolvedAddress.getAddress() != null) {

this.serverAddresses.add(

new InetSocketAddress(InetAddress.getByAddress(

address.getHostName(),

resolvedAddress.getAddress()),

address.getPort()));

} else {

this.serverAddresses.add(new InetSocketAddress(resolvedAddress.getHostAddress(), address.getPort()));

}

}

}

}catch (UnknownHostException e){

throw new IllegalArgumentException("UnknownHostException was caught!", e);

}

// Added the 21 lines above and commented out the addAll(...) line below

if (serverAddresses.isEmpty()) {

throw new IllegalArgumentException(

"A HostProvider may not be empty!");

}

//this.serverAddresses.addAll(serverAddresses);

Collections.shuffle(this.serverAddresses);

}

/**

* Evaluate to a hostname if one is available and otherwise it returns the

* string representation of the IP address.

*

* In Java 7, we have a method getHostString, but earlier versions do not support it.

* This method is to provide a replacement for InetSocketAddress.getHostString().

*

* @param addr

* @return Hostname string of address parameter

*/

private String getHostString(InetSocketAddress addr) {

String hostString = "";

if (addr == null) {

return hostString;

}

if (!addr.isUnresolved()) {

InetAddress ia = addr.getAddress();

// If the string starts with '/', then it has no hostname

// and we want to avoid the reverse lookup, so we return

// the string representation of the address.

if (ia.toString().startsWith("/")) {

hostString = ia.getHostAddress();

} else {

hostString = addr.getHostName();

}

} else {

// According to the Java 6 documentation, if the hostname is

// unresolved, then the string before the colon is the hostname.

String addrString = addr.toString();

hostString = addrString.substring(0, addrString.lastIndexOf(':'));

}

return hostString;

}

public int size() {

return serverAddresses.size();

}

public InetSocketAddress next(long spinDelay) {

currentIndex = ++currentIndex % serverAddresses.size();

if (currentIndex == lastIndex && spinDelay > 0) {

try {

Thread.sleep(spinDelay);

} catch (InterruptedException e) {

LOG.warn("Unexpected exception", e);

}

} else if (lastIndex == -1) {

// We don't want to sleep on the first ever connect attempt.

lastIndex = 0;

}

InetSocketAddress curAddr = serverAddresses.get(currentIndex);

try {

String curHostString = getHostString(curAddr);

List<InetAddress> resolvedAddresses = new ArrayList<InetAddress>(Arrays.asList(this.resolver.getAllByName(curHostString)));

if (resolvedAddresses.isEmpty()) {

return curAddr;

}

Collections.shuffle(resolvedAddresses);

return new InetSocketAddress(resolvedAddresses.get(0), curAddr.getPort());

} catch (UnknownHostException e) {

return curAddr;

}

}

@Override

public void onConnected() {

lastIndex = currentIndex;

}

}
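The getHostString() helper in the class above falls back to parsing `addr.toString()` when an address is unresolved. As far as I know, from JDK 14 onward `toString()` on an unresolved `InetSocketAddress` includes an `<unresolved>` marker after the hostname, so that parsing no longer yields a clean hostname. This is probably what the localhost/:2181 error refers to, and why resolving everything in init() avoids it. The sketch below compares the parsing approach with the standard `InetSocketAddress.getHostString()` call; the class name `UnresolvedHostDemo` is hypothetical.

```java
import java.net.InetSocketAddress;

// Hypothetical demo class, not part of ZooKeeper.
public class UnresolvedHostDemo {

    // Same idea as the else-branch of getHostString() above:
    // take everything before the last ':' of toString().
    static String hostByParsing(InetSocketAddress addr) {
        String text = addr.toString();
        return text.substring(0, text.lastIndexOf(':'));
    }

    // Standard JDK accessor (available since Java 7); does no reverse lookup.
    static String hostByApi(InetSocketAddress addr) {
        return addr.getHostString();
    }

    public static void main(String[] args) {
        InetSocketAddress addr = InetSocketAddress.createUnresolved("localhost", 2181);
        System.out.println("java.version : " + System.getProperty("java.version"));
        System.out.println("toString()   : " + addr);
        System.out.println("by parsing   : " + hostByParsing(addr));
        System.out.println("by API       : " + hostByApi(addr));
    }
}
```

Running it once under JDK 1.8 and once under JDK 15 shows whether the parsed value differs between the two JVMs on a given machine.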

After compiling directly with Maven:

[root@master my_mvn]# ll ./zookeeper/target/

total 12

drwxr-xr-x 3 root root 17 Mar 29 01:28 classes

drwxr-xr-x 3 root root 25 Mar 29 01:28 generated-sources

drwxr-xr-x 2 root root 28 Mar 29 01:28 maven-archiver

drwxr-xr-x 3 root root 35 Mar 29 01:28 maven-status

-rw-r--r-- 1 root root 8467 Mar 29 01:28 zookeeper-1.0-SNAPSHOT.jar

[root@master my_mvn]# cd ./zookeeper/target/

[root@master target]# jar -xvf zookeeper-1.0-SNAPSHOT.jar

created: META-INF/

inflated: META-INF/MANIFEST.MF

created: org/

created: org/apache/

created: org/apache/zookeeper/

created: org/apache/zookeeper/client/

inflated: org/apache/zookeeper/client/FourLetterWordMain.class

inflated: org/apache/zookeeper/client/HostProvider.class

inflated: org/apache/zookeeper/client/StaticHostProvider$Resolver.class

inflated: org/apache/zookeeper/client/StaticHostProvider$1.class

inflated: org/apache/zookeeper/client/StaticHostProvider.class

created: META-INF/maven/

created: META-INF/maven/org.apache.zookeeper/

created: META-INF/maven/org.apache.zookeeper/zookeeper/

inflated: META-INF/maven/org.apache.zookeeper/zookeeper/pom.xml

inflated: META-INF/maven/org.apache.zookeeper/zookeeper/pom.properties

[root@master target]# cd /opt/soft/my_mvn/zookeeper-3.4.5-cdh6.3.2/org/apache/zookeeper/client/

[root@master client]# rm -rf StaticHostProvider*

[root@master client]# ll

total 68

-rw-r--r-- 1 root root 1955 Nov 8 2019 ConnectStringParser.class

-rw-r--r-- 1 root root 2932 Nov 6 2019 ConnectStringParser.java

-rw-r--r-- 1 root root 2839 Nov 8 2019 FourLetterWordMain.class

-rw-r--r-- 1 root root 2793 Nov 6 2019 FourLetterWordMain.java

-rw-r--r-- 1 root root 471 Nov 8 2019 HostProvider.class

-rw-r--r-- 1 root root 2347 Nov 6 2019 HostProvider.java

-rw-r--r-- 1 root root 1648 Nov 8 2019 ZooKeeperSaslClient$1.class

-rw-r--r-- 1 root root 11782 Nov 8 2019 ZooKeeperSaslClient.class

-rw-r--r-- 1 root root 20069 Nov 6 2019 ZooKeeperSaslClient.java

-rw-r--r-- 1 root root 1370 Nov 8 2019 ZooKeeperSaslClient$SaslState.class

-rw-r--r-- 1 root root 1923 Nov 8 2019 ZooKeeperSaslClient$ServerSaslResponseCallback.class

[root@master client]# cp /opt/soft/my_mvn/zookeeper/target/org/apache/zookeeper/client/StaticHostProvider* ./

[root@master client]# cp /opt/soft/my_mvn/zookeeper/src/main/java/org/apache/zookeeper/client/StaticHostProvider* ./

[root@master client]# ll

total 92

-rw-r--r-- 1 root root 1955 Nov 8 2019 ConnectStringParser.class

-rw-r--r-- 1 root root 2932 Nov 6 2019 ConnectStringParser.java

-rw-r--r-- 1 root root 2839 Nov 8 2019 FourLetterWordMain.class

-rw-r--r-- 1 root root 2793 Nov 6 2019 FourLetterWordMain.java

-rw-r--r-- 1 root root 471 Nov 8 2019 HostProvider.class

-rw-r--r-- 1 root root 2347 Nov 6 2019 HostProvider.java

-rw-r--r-- 1 root root 966 Mar 29 01:33 StaticHostProvider$1.class

-rw-r--r-- 1 root root 5391 Mar 29 01:33 StaticHostProvider.class

-rw-r--r-- 1 root root 7726 Mar 29 01:34 StaticHostProvider.java

-rw-r--r-- 1 root root 375 Mar 29 01:33 StaticHostProvider$Resolver.class

-rw-r--r-- 1 root root 1648 Nov 8 2019 ZooKeeperSaslClient$1.class

-rw-r--r-- 1 root root 11782 Nov 8 2019 ZooKeeperSaslClient.class

-rw-r--r-- 1 root root 20069 Nov 6 2019 ZooKeeperSaslClient.java

-rw-r--r-- 1 root root 1370 Nov 8 2019 ZooKeeperSaslClient$SaslState.class

-rw-r--r-- 1 root root 1923 Nov 8 2019 ZooKeeperSaslClient$ServerSaslResponseCallback.class

[root@master client]# cd /opt/soft/my_mvn

[root@master my_mvn]# rm -rf zookeeper-3.4.5-cdh6.3.2.jar

[root@master my_mvn]# jar -cvf zookeeper-3.4.5-cdh6.3.2.jar -C zookeeper-3.4.5-cdh6.3.2/ .

[root@master my_mvn]# find / -name zookeeper-3.4.5-cdh6.3.2.jar

/root/.m2/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/zookeeper-3.4.5-cdh6.3.2.jar

/opt/soft/my_mvn/zookeeper-3.4.5-cdh6.3.2.jar

/opt/module/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/zookeeper-3.4.5-cdh6.3.2.jar

[root@master my_mvn]# rm -rf /root/.m2/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/zookeeper-3.4.5-cdh6.3.2.jar

[root@master my_mvn]# rm -rf /opt/module/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/zookeeper-3.4.5-cdh6.3.2.jar

[root@master my_mvn]# cp /opt/soft/my_mvn/zookeeper-3.4.5-cdh6.3.2.jar /root/.m2/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/

[root@master my_mvn]# cp /opt/soft/my_mvn/zookeeper-3.4.5-cdh6.3.2.jar /opt/module/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/
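Before restarting HMaster, it can be worth confirming that the repackaged jar really contains the recompiled class. The following is a small sketch using the standard `java.util.jar.JarFile` API; the class name `JarEntryCheck` and its methods are hypothetical helpers, and the jar path is passed as an argument rather than assumed.

```java
import java.io.File;
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper for inspecting a repackaged jar.
public class JarEntryCheck {

    // Convert a fully qualified class name into its entry path inside a jar.
    static String entryPath(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Return true if the jar contains the compiled class.
    static boolean containsClass(File jar, String className) throws IOException {
        try (JarFile jarFile = new JarFile(jar)) {
            return jarFile.getEntry(entryPath(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java JarEntryCheck <path-to-jar>");
            return;
        }
        boolean found = containsClass(new File(args[0]),
                "org.apache.zookeeper.client.StaticHostProvider");
        System.out.println("StaticHostProvider present: " + found);
    }
}
```

For example, it could be pointed at the copy under /root/.m2/repository/org/apache/zookeeper/zookeeper/3.4.5-cdh6.3.2/ after the cp commands above.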

After that, HMaster starts successfully and hbase shell can be tested.
