Overview

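First, the camera question. Many beginners, myself included, agonize over which camera to use. I think a CSI camera is enough for video transmission over 4G. A USB camera seems to consume more system resources, and because image quality over a WAN is limited by 4G speed, the picture cannot be very sharp anyway, so a high-resolution camera is of little use. There is a small pitfall here: most cameras list support for formats such as MJPEG, YUY2, and YUV, which at first glance suggests hardware encoding. In practice I could not get MJPEG out of the camera, and even where it is available it is only software-encoded. I suspect the ESP32-CAM, for example, uses software encoding, because hardware encoding should not be that slow.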

MJPEG

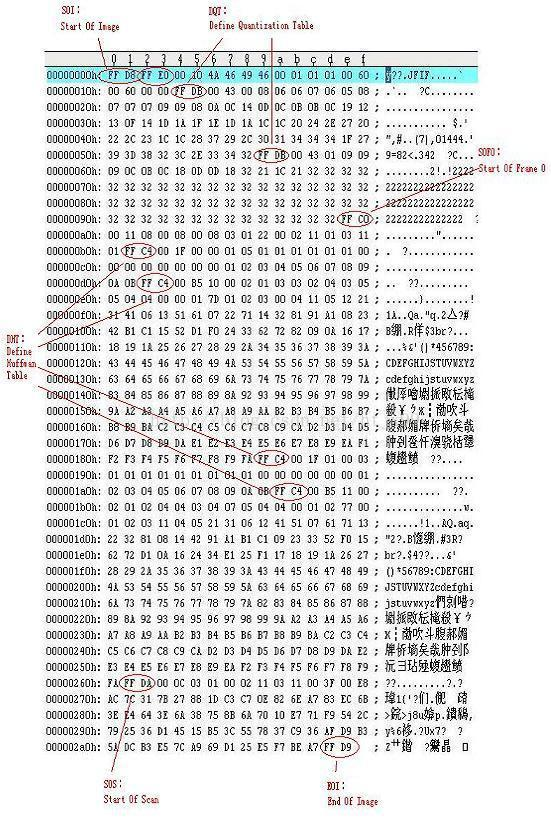

MJPEG is a very convenient choice for video transmission. Looking at its format:

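Every MJPEG frame is a key frame, a standalone JPEG, so the receiver needs almost no processing beyond displaying it. The problem is that above SVGA (800x600) the encoded frames become too large to transmit well over the network. Once each frame exceeds a hundred KB, probably only 5G could keep up. YUY2 and YUV are even less suitable for network transmission, because a single uncompressed frame is already on the order of a megabyte. If you do not need much sharpness, SVGA is enough; it just uses more data and stutters easily.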
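The size argument above can be checked with a little arithmetic. The following is a minimal sketch, not a measurement: the helper names are hypothetical, the 100 KB frame size is taken from the paragraph above, and the 15 fps frame rate is an assumption chosen only for illustration.

```python
def raw_frame_bytes(width: int, height: int, bytes_per_pixel: float = 2.0) -> int:
    """Size of one uncompressed frame. YUY2 packs 2 bytes per pixel."""
    return int(width * height * bytes_per_pixel)


def required_mbps(frame_bytes: int, fps: float) -> float:
    """Sustained bitrate in Mbit/s needed to send frame_bytes at the given frame rate."""
    return frame_bytes * 8 * fps / 1_000_000


if __name__ == "__main__":
    fps = 15  # assumed frame rate, for illustration only
    svga_raw = raw_frame_bytes(800, 600)      # uncompressed YUY2 at SVGA
    hd_raw = raw_frame_bytes(1280, 720)       # uncompressed YUY2 at 720p
    jpeg_frame = 100_000                      # "over a hundred KB" per MJPEG frame
    print("SVGA YUY2 frame:", svga_raw, "bytes")
    print("720p YUY2 frame:", hd_raw, "bytes")
    print("MJPEG at 100 KB/frame:", required_mbps(jpeg_frame, fps), "Mbit/s")
    print("Raw 720p YUY2:", required_mbps(hd_raw, fps), "Mbit/s")
```

Under these assumptions an MJPEG stream of 100 KB frames already needs a sustained uplink in the double-digit Mbit/s range, which is why the resolution has to stay low on a fluctuating 4G link.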

Getting a JPEG in Python is easy, and the JPEG quality is just as easy to control:

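while True:
    # Wait and retry if the camera is not open yet
    if not self.cap.isOpened():
        time.sleep(1)
        continue
    ret, frame = self.cap.read()
    if not ret:
        # No frame was captured this time; try again
        continue
    try:
        # Encode the frame as JPEG; the quality value (0-100) trades size against sharpness
        data = cv2.imencode(".jpg", frame, [int(cv2.IMWRITE_JPEG_QUALITY), 80])[1]
        self.client.sendall(data.tobytes())
    except Exception as e:
        print(e)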

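I tried 480x320 and it was very smooth, and 800x600 also works when network conditions are good. But 4G speed fluctuates too much, so even 800x600 feels like a luxury. The main bottleneck is the encoding speed of cv2.imencode. I also tried other tools (https://blog.csdn.net/weixin_43928944/article/details/111245235) and reached the same conclusion: software encoding at 800x600 is the bottleneck.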

H264

For HD video transmission, H264 is the more convenient choice, since plenty of material is available for it.

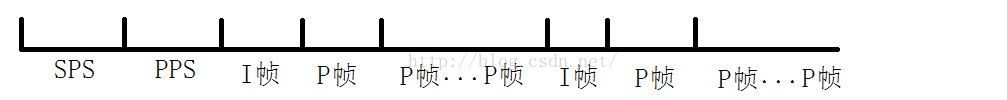

Here, I-frames are key frames, P/B-frames are predicted (motion-compensated) frames, SPS is the Sequence Parameter Set, and PPS is the Picture Parameter Set.

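Each encoded H264 unit begins with the start code 0x00000001 or 0x000001 (written 0001 and 001 here), most often 0001. Because I transmit over TCP, this header makes it easy to identify each encoded unit in the stream.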
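To make the start-code idea concrete, here is a minimal sketch that splits a byte stream on both start-code forms and reads the unit type from the low 5 bits of the first byte after the start code (7 = SPS, 8 = PPS, 5 = I-frame slice, the same values the Java reference code later in this post checks). The function names are hypothetical and the sample bytes are made up for illustration.

```python
import re
from typing import List

# Matches both the 4-byte (00 00 00 01) and the 3-byte (00 00 01) start code.
START_CODE = re.compile(b"\x00\x00\x00\x01|\x00\x00\x01")

NAL_NAMES = {1: "non-IDR slice", 5: "IDR slice (I-frame)", 7: "SPS", 8: "PPS"}


def split_nal_units(stream: bytes) -> List[bytes]:
    """Return the units found in the stream, with their start codes stripped."""
    matches = list(START_CODE.finditer(stream))
    units = []
    for i, m in enumerate(matches):
        # A unit runs from the end of its start code to the next start code (or end of data).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(stream)
        if end > m.end():
            units.append(stream[m.end():end])
    return units


def nal_type(unit: bytes) -> int:
    """The unit type is stored in the low 5 bits of the first byte."""
    return unit[0] & 0x1F


if __name__ == "__main__":
    # Made-up sample: SPS, PPS, then an I-frame slice behind a 3-byte start code.
    sample = (b"\x00\x00\x00\x01\x67\xaa\xbb"
              b"\x00\x00\x00\x01\x68\xcc"
              b"\x00\x00\x01\x65\xdd\xee")
    for unit in split_nal_units(sample):
        t = nal_type(unit)
        print(t, NAL_NAMES.get(t, "other"), len(unit), "bytes")
```

Note that this only works on a complete buffer. On a live TCP stream a unit can be split across reads, so the receiver has to buffer until the next start code (or some other boundary marker) arrives.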

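There are many convenient ways to encode H264 in Python, so I will not cover them here. The server only forwards data, so there is not much to say about it; just make sure the buffers are not so small that data gets lost.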

// Create the ServerBootstrap instance
ServerBootstrap serverBootstrap = new ServerBootstrap();
// Set the boss and worker thread groups
serverBootstrap.group(bossGroup, workerGroup)
        .option(ChannelOption.CONNECT_TIMEOUT_MILLIS, 5000)
        .option(ChannelOption.RCVBUF_ALLOCATOR, new FixedRecvByteBufAllocator(65535));
// Set the ServerChannel class to instantiate
serverBootstrap.channel(NioServerSocketChannel.class);
// ImageChannelInitializer sets up the ChannelPipeline (chain of handlers) for each accepted connection
serverBootstrap.childHandler(new ImageChannelInitializer());
// Maximum length of the queue that temporarily holds connections which have completed
// the three-way handshake while all request-handling threads are busy
serverBootstrap.option(ChannelOption.SO_BACKLOG, 1024);
// Enable TCP keepalive
serverBootstrap.childOption(ChannelOption.SO_KEEPALIVE, true);
// Socket receive and send buffer sizes
serverBootstrap.childOption(ChannelOption.SO_RCVBUF, 400 * 1024);
serverBootstrap.childOption(ChannelOption.SO_SNDBUF, 400 * 1024);

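H264 decoding is the more troublesome part, and there are quite a few parameters to tune while debugging. I followed this post: https://blog.csdn.net/qq_36467463/article/details/77977562

That post in turn is based on this one: http://www.itdadao.com/articles/c15a280703p0.html

On Android, the mainstream view choices are TextureView and SurfaceView. I did not look into the differences in depth; I used SurfaceView.

Here is reference code that uses TextureView: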

package com.marchnetworks.decodeh264;

import android.graphics.SurfaceTexture;
import android.media.MediaCodec;
import android.media.MediaFormat;
import android.os.AsyncTask;
import android.support.v7.app.AppCompatActivity;
import android.os.Bundle;
import android.view.Surface;
import android.view.TextureView;

import java.nio.ByteBuffer;

public class MainActivity extends AppCompatActivity implements TextureView.SurfaceTextureListener {

    private int width = 1920;
    private int height = 1080;

    // View that contains the Surface Texture
    private TextureView m_surface;

    // Object that connects to our server and gets H264 frames
    private H264Provider provider;

    // Media decoder
    private MediaCodec m_codec;

    // Async task that takes H264 frames and uses the decoder to update the Surface Texture
    private DecodeFramesTask m_frameTask;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        // Find our desired SurfaceTexture to display the stream
        m_surface = (TextureView) findViewById(R.id.textureView);

        // Add the SurfaceTextureListener
        m_surface.setSurfaceTextureListener(this);
    }

    @Override
    // Invoked when a TextureView's SurfaceTexture is ready for use
    public void onSurfaceTextureAvailable(SurfaceTexture surfaceTexture, int i, int i1) {
        // When the surface is ready, we make a H264 provider object. When its constructor
        // runs it starts an AsyncTask to log into our server and start getting frames.
        // I have dumbed down this demonstration to access the local h264 video from the raw resources dir
        provider = new H264Provider(getResources().openRawResource(R.raw.video));

        // Create the format settings for the MediaCodec
        MediaFormat format = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, width, height);
        // Set the SPS frame
        format.setByteBuffer("csd-0", ByteBuffer.wrap(provider.getSPS()));
        // Set the PPS frame
        format.setByteBuffer("csd-1", ByteBuffer.wrap(provider.getPPS()));
        // Set the buffer size
        format.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, width * height);

        try {
            // Get an instance of MediaCodec and give it its Mime type
            m_codec = MediaCodec.createDecoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);
            // Configure the codec
            m_codec.configure(format, new Surface(m_surface.getSurfaceTexture()), null, 0);
            // Start the codec
            m_codec.start();
            // Create the AsyncTask to get the frames and decode them using the Codec
            m_frameTask = new DecodeFramesTask();
            m_frameTask.executeOnExecutor(AsyncTask.THREAD_POOL_EXECUTOR);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    @Override
    // Invoked when the SurfaceTexture's buffer size changed
    public void onSurfaceTextureSizeChanged(SurfaceTexture surfaceTexture, int i, int i1) {
    }

    @Override
    // Invoked when the specified SurfaceTexture is about to be destroyed
    public boolean onSurfaceTextureDestroyed(SurfaceTexture surfaceTexture) {
        return false;
    }

    @Override
    // Invoked when the specified SurfaceTexture is updated through updateTexImage()
    public void onSurfaceTextureUpdated(SurfaceTexture surfaceTexture) {
    }

    private class DecodeFramesTask extends AsyncTask<String, String, String> {

        @Override
        protected String doInBackground(String... strings) {
            while (!isCancelled()) {
                // Get the next frame
                byte[] frame = provider.nextFrame();

                // Now we need to give it to the Codec to decode into the surface.
                // Get the input buffer from the decoder.
                // Pass in -1 here as in this example we don't have a playback time reference
                int inputIndex = m_codec.dequeueInputBuffer(-1);

                // If the buffer number is valid use the buffer with that index
                if (inputIndex >= 0) {
                    ByteBuffer buffer = m_codec.getInputBuffer(inputIndex);
                    try {
                        buffer.put(frame);
                    } catch (NullPointerException e) {
                        e.printStackTrace();
                    }
                    // Tell the decoder to process the frame
                    m_codec.queueInputBuffer(inputIndex, 0, frame.length, 0, 0);
                }

                MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
                int outputIndex = m_codec.dequeueOutputBuffer(info, 0);
                if (outputIndex >= 0) {
                    // Output the image to our SurfaceTexture
                    m_codec.releaseOutputBuffer(outputIndex, true);
                }

                // Wait for the next frame to be ready, our server makes a frame every 250ms
                try {
                    Thread.sleep(250);
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
            return "";
        }

        @Override
        protected void onPostExecute(String result) {
            try {
                m_codec.stop();
                m_codec.release();
            } catch (Exception e) {
                e.printStackTrace();
            }
            provider.release();
        }
    }

    @Override
    public void onStop() {
        super.onStop();
        m_frameTask.cancel(true);
        provider.release();
    }
}

package com.marchnetworks.decodeh264;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

public class H264Provider {

    private byte[] sps_params = null;
    private byte[] pps_params = null;
    private byte[] i_frame = null;

    public H264Provider(InputStream inStream) {
        try {
            int firstStartCodeIndex = 6;
            int secondStartCodeIndex = 0;
            int thirdStartCodeIndex = 0;

            //byte[] data = new byte[inStream.available()];
            byte[] data = toByteArray(inStream);

            // Find the start code that precedes the SPS (type 7)
            for (int i = 0; i < 100; i++) {
                if (data[i] == 0x00 && data[i + 1] == 0x00 && data[i + 2] == 0x00 && data[i + 3] == 0x01) {
                    if ((data[i + 4] & 0x1F) == 7) { // SPS
                        firstStartCodeIndex = i;
                        break;
                    }
                }
            }

            // Find the start code that precedes the PPS (type 8)
            int firstNaluSize = 0;
            for (int i = firstStartCodeIndex + 4; i < firstStartCodeIndex + 100; i++) {
                if (data[i] == 0x00 && data[i + 1] == 0x00 && data[i + 2] == 0x00 && data[i + 3] == 0x01) {
                    if (firstNaluSize == 0) {
                        firstNaluSize = i - firstStartCodeIndex;
                    }
                    if ((data[i + 4] & 0x1F) == 8) { // PPS
                        secondStartCodeIndex = i;
                        break;
                    }
                }
            }

            // Find the start code that precedes the I-frame (type 5)
            int secondNaluSize = 0;
            for (int i = secondStartCodeIndex + 4; i < secondStartCodeIndex + 130; i++) {
                if (data[i] == 0x00 && data[i + 1] == 0x00 && data[i + 2] == 0x00 && data[i + 3] == 0x01) {
                    if (secondNaluSize == 0) {
                        secondNaluSize = i - secondStartCodeIndex;
                    }
                    if ((data[i + 4] & 0x1F) == 5) { // IFrame
                        thirdStartCodeIndex = i;
                        break;
                    }
                }
            }

            // Find where the I-frame ends
            int thirdNaluSize = 0;
            int counter = thirdStartCodeIndex + 4;
            // Stop 3 bytes before the end so that data[counter + 3] stays in bounds
            while (++counter < data.length - 3) {
                if (data[counter] == 0x00 && data[counter + 1] == 0x00 && data[counter + 2] == 0x00 && data[counter + 3] == 0x01) {
                    thirdNaluSize = counter - thirdStartCodeIndex;
                    break;
                }
            }
            // No further start code: the I-frame runs to the end of the data
            if (thirdNaluSize == 0) {
                thirdNaluSize = data.length - thirdStartCodeIndex;
            }

            // This is how you would remove the "\x00\x00\x00\x01"
            // byte[] firstNalu = new byte[firstNaluSize - 4];
            // byte[] secondNalu = new byte[secondNaluSize - 4];
            // byte[] thirdNalu = new byte[thirdNaluSize];
            // System.arraycopy(data, thirdStartCodeIndex, thirdNalu, 0, thirdNaluSize);
            // System.arraycopy(data, firstStartCodeIndex+4, firstNalu, 0, firstNaluSize-4);
            // System.arraycopy(data, secondStartCodeIndex+4, secondNalu, 0, secondNaluSize-4);

            byte[] firstNalu = new byte[firstNaluSize];
            byte[] secondNalu = new byte[secondNaluSize];
            byte[] thirdNalu = new byte[thirdNaluSize];

            System.arraycopy(data, thirdStartCodeIndex, thirdNalu, 0, thirdNaluSize);
            System.arraycopy(data, firstStartCodeIndex, firstNalu, 0, firstNaluSize);
            System.arraycopy(data, secondStartCodeIndex, secondNalu, 0, secondNaluSize);

            sps_params = firstNalu;
            pps_params = secondNalu;
            i_frame = thirdNalu;
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public byte[] getSPS() {
        return sps_params;
    }

    public byte[] getPPS() {
        return pps_params;
    }

    public byte[] nextFrame() {
        return i_frame;
    }

    public void release() {
        // Logout of server;
    }

    /*
    ** Simple function to return a byte array from an input stream
    */
    private byte[] toByteArray(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int read = 0;
        byte[] buffer = new byte[1024];
        while (read != -1) {
            read = in.read(buffer);
            if (read != -1)
                out.write(buffer, 0, read);
        }
        out.close();
        return out.toByteArray();
    }
}

The core code:

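private void initDecoder() throws IOException {
    /*
     * The workflow, using encoding as the example: first initialize the hardware encoder and
     * configure the target format, video width and height, bitrate, frame rate, key-frame
     * interval, and so on. This step is called configure. Then start the codec; it is now
     * usable and ready to receive data at any time.
     * Next comes the running phase, during which two buffer queues are maintained:
     * InputBuffer and OutputBuffer. The user repeatedly dequeues an InputBuffer
     * (dequeueInputBuffer), fills it with the image data to process, and queues it again.
     * The hardware codec processes it asynchronously; when it is done, it places the result
     * in an OutputBuffer and signals that output is available. The user then dequeues an
     * OutputBuffer, takes the data, and releases the buffer.
     * The end condition is the end-of-stream flag. When processing is finished, call stop()
     * to stop the codec and then release() to free it completely; that ends the workflow.
     */
    mCodec = MediaCodec.createDecoderByType(MIME_TYPE);
    // MediaFormat describes the media format
    MediaFormat mediaFormat = MediaFormat.createVideoFormat(MIME_TYPE, VIDEO_WIDTH, VIDEO_HEIGHT);
    // BITRATE_MODE_CQ: no bitrate control at all; preserve image quality as far as possible
    // BITRATE_MODE_CBR: the encoder tries to hold the output bitrate at the configured value
    // BITRATE_MODE_VBR: the encoder adjusts the bitrate to the complexity of the image content
    //                   (in practice, the amount of change between frames)
    // Note: the bitrate mode is an encoder setting; it is kept here as in my original code
    // mediaFormat.setInteger(MediaFormat.KEY_BITRATE_MODE, MediaCodecInfo.EncoderCapabilities.BITRATE_MODE_VBR);
    mediaFormat.setInteger(MediaFormat.KEY_BITRATE_MODE, MediaCodecInfo.EncoderCapabilities.BITRATE_MODE_CQ);
    // mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, 9000000);
    mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 16);
    mediaFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, VIDEO_WIDTH * VIDEO_HEIGHT);
    mediaFormat.setInteger(MediaFormat.KEY_MAX_HEIGHT, VIDEO_HEIGHT);
    mediaFormat.setInteger(MediaFormat.KEY_MAX_WIDTH, VIDEO_WIDTH);
    // Setting this to 0 requests that every frame be a key frame
    // mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 0);
    mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);
    mCodec.configure(mediaFormat, mSurfaceView.getHolder().getSurface(), null, 0);
    // A newly created codec is in the Uninitialized state; configure() and start() make it ready to run
    mCodec.start();
}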

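I did not use constant-bitrate mode here. That is, the sending device does not use a fixed bitrate either; it fixes the quality instead. When the picture changes sharply the bitrate rises automatically while the quality stays constant. This was the advice of an expert in a QQ group.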

The decoding part

public boolean onFrame(byte[] buf, int offset, int length) {
    // dequeueInputBuffer: get the index of a free input buffer to fill with data to decode
    int inputBufferIndex = mCodec.dequeueInputBuffer(TIMEOUT_US);
    Log.e("Media", "onFrame index:" + inputBufferIndex);
    if (inputBufferIndex >= 0) {
        // Get the input buffer that will hold the data to decode
        ByteBuffer inputBuffer = mCodec.getInputBuffer(inputBufferIndex);
        // Reset position and limit before writing
        inputBuffer.clear();
        // Copy buf[offset, offset + length) into the buffer with a relative put
        inputBuffer.put(buf, offset, length);
        // The fourth argument is the frame's presentation timestamp, so it has to advance
        // by the interval between this frame and the previous one.
        mCodec.queueInputBuffer(inputBufferIndex, 0, length, mCount * TIME_INTERNAL, 0);
        mCount++;
    } else {
        return false;
    }

    // getInputBuffers: get the input buffers, returned as a ByteBuffer array
    // queueInputBuffer: submit a filled input buffer to the codec
    // dequeueInputBuffer: take a free input buffer from the input queue
    // getOutputBuffers: get the output buffers holding processed data, returned as a ByteBuffer array
    // dequeueOutputBuffer: take processed data from the output queue
    // releaseOutputBuffer: processing is done; return the ByteBuffer to the codec
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
    while (outputBufferIndex >= 0) {
        // Passing true renders the buffer to the surface
        mCodec.releaseOutputBuffer(outputBufferIndex, true);
        outputBufferIndex = mCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
    }
    return true;
}

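All that is needed here is to pass in the buffers encoded on the sending device, each beginning with a 0001 or 001 start code, and the whole decoding flow works. But because I was unfamiliar with the API, I still fell into a trap: the decoded picture was corrupted. Everything looked fine while the scene was static, but as soon as something moved, parts of the picture broke up, sometimes badly. At first I suspected an unstable network or frames lost during TCP transmission, but comparing data lengths showed nothing abnormal. Some people in the chat group said the data might be misaligned, and one even suggested verifying the data with MD5. Debugging this bug was a real headache, until I came across this: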

End-of-stream Handling

When you reach the end of the input data, you must signal it to the codec by specifying the BUFFER_FLAG_END_OF_STREAM flag in the call to queueInputBuffer. You can do this on the last valid input buffer, or by submitting an additional empty input buffer with the end-of-stream flag set. If using an empty buffer, the timestamp will be ignored.

The codec will continue to return output buffers until it eventually signals the end of the output stream by specifying the same end-of-stream flag in the MediaCodec.BufferInfo set in dequeueOutputBuffer or returned via onOutputBufferAvailable. This can be set on the last valid output buffer, or on an empty buffer after the last valid output buffer. The timestamp of such empty buffer should be ignored.

Do not submit additional input buffers after signaling the end of the input stream, unless the codec has been flushed, or stopped and restarted.

So I changed the call to:

mCodec.queueInputBuffer(inputBufferIndex, 0, length, mCount* TIME_INTERNAL, MediaCodec.BUFFER_FLAG_KEY_FRAME);

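I had set a new flag, but the problem remained.

To fix the problem I had to go back to its root. I saved both the stream encoded on the sending device and the stream received on Android and played them directly in a player. That showed the decoder was not at fault; frames were being lost. The error was finally traced to misalignment and frame loss caused by TCP "sticky packets": TCP delivers a byte stream and does not preserve message boundaries, so one read can contain part of a frame or several frames. After adjusting my strategy, the problem was solved.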
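The post does not say which strategy was adopted. One common way to restore message boundaries on a TCP stream is length-prefixed framing, sketched below as an assumption rather than as the fix actually used here. The 4-byte big-endian header and the names `pack_frame` and `FrameReassembler` are hypothetical; the sketch is in Python for consistency with the sender code, and the same buffering logic would apply on the receiving side.

```python
import struct
from typing import List

# Assumed wire format: 4-byte big-endian payload length, then the payload.
HEADER = struct.Struct(">I")


def pack_frame(payload: bytes) -> bytes:
    """Prefix one encoded frame with its length before sending it."""
    return HEADER.pack(len(payload)) + payload


class FrameReassembler:
    """Accumulates arbitrary TCP chunks and yields only complete frames."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> List[bytes]:
        self._buf.extend(chunk)
        frames = []
        while len(self._buf) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buf, 0)
            end = HEADER.size + length
            if len(self._buf) < end:
                break  # the rest of this frame has not arrived yet
            frames.append(bytes(self._buf[HEADER.size:end]))
            del self._buf[:end]  # keep any bytes that belong to the next frame
        return frames


if __name__ == "__main__":
    wire = pack_frame(b"abc") + pack_frame(b"defgh")
    r = FrameReassembler()
    # Feed the stream in awkward pieces, as recv() might deliver it.
    print(r.feed(wire[:5]))   # partial first frame
    print(r.feed(wire[5:9]))  # completes the first, starts the second
    print(r.feed(wire[9:]))   # completes the second
```

With this approach the decoder is only ever handed whole frames, regardless of how the network splits or merges the bytes in transit.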

- Additional notes

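When debugging, pay attention to changes in network speed. When the network is poor, frames will be lost no matter how you tune the parameters. In that case, actively validate the data and drop frames deliberately. You can also lower the encoding quality to avoid congesting the network. If jitter is severe, consider inserting I-frames more frequently on the encoder side.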
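As an illustration of dropping frames deliberately, here is a minimal sketch of a bounded send queue. It rests on two assumptions that are not from the original setup: the sender knows whether each frame is a key frame, and each key frame can be decoded on its own. Because P-frames depend on the frames before them, the sketch discards the whole backlog when the queue fills up and resumes only at the next key frame. The class name `DroppingQueue` and its parameters are hypothetical.

```python
from collections import deque
from typing import Deque, Optional


class DroppingQueue:
    """Bounded send queue that drops frames under backlog and resumes at a key frame."""

    def __init__(self, max_frames: int) -> None:
        self._frames: Deque[bytes] = deque()
        self._max = max_frames
        self._waiting_for_key = False
        self.dropped = 0

    def push(self, frame: bytes, is_key: bool) -> bool:
        """Queue a frame for sending; return False if it was dropped."""
        if self._waiting_for_key:
            if not is_key:
                self.dropped += 1
                return False
            self._waiting_for_key = False
        if len(self._frames) >= self._max:
            # Backlog: the network cannot keep up, so discard everything queued.
            self.dropped += len(self._frames)
            self._frames.clear()
            if not is_key:
                # This frame depends on discarded ones; wait for the next key frame.
                self.dropped += 1
                self._waiting_for_key = True
                return False
        self._frames.append(frame)
        return True

    def pop(self) -> Optional[bytes]:
        """Return the next frame to send, or None if the queue is empty."""
        return self._frames.popleft() if self._frames else None


if __name__ == "__main__":
    q = DroppingQueue(max_frames=2)
    for name, is_key in [(b"I0", True), (b"P1", False), (b"P2", False),
                         (b"P3", False), (b"I4", True)]:
        print(name, "queued" if q.push(name, is_key) else "dropped")
    print("next to send:", q.pop(), "total dropped:", q.dropped)
```

A shorter key-frame interval on the encoder shortens the time this queue spends waiting to resynchronize, at the cost of a higher bitrate.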

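I am very satisfied with the current result. I had actually tried ffmpeg for video transmission earlier, but the results were never quite satisfactory: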

self.command = ['ffmpeg',
                # '-f', 'alsa',
                # '-ar', '11025',
                # '-i', 'hw:0',
                # '-vol', '200',
                # '-y',
                '-analyzeduration', '1000000',
                '-f', 'rawvideo',
                '-fflags', 'nobuffer',
                '-vcodec', 'rawvideo',
                '-pix_fmt', 'bgr24',
                '-s', "{}x{}".format(self.width, self.height),
                # Popen arguments must be strings
                '-r', str(self.fps),
                # Read raw frames from stdin
                '-i', '-',
                '-c:v', 'h264_omx',
                '-pix_fmt', 'yuv420p',
                '-preset', 'ultrafast',
                '-tune:v', 'zerolatency',
                '-f', 'flv',
                self.rtmpUrl]
print(self.command)
self.p = sp.Popen(self.command, stdin=sp.PIPE)

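I do not know whether I was using it the wrong way or simply had not studied it thoroughly enough, but the results were never good. Never mind; I no longer need this approach anyway.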

Finally

That concludes this write-up, compiled by 仁爱棉花糖, on 720p HD video transmission from a Raspberry Pi (network camera) over a 4G network (python3.7 + SpringBoot-JavaNetty + Android-MediaCodec).
