Analysis of Initialization and the read/write Paths

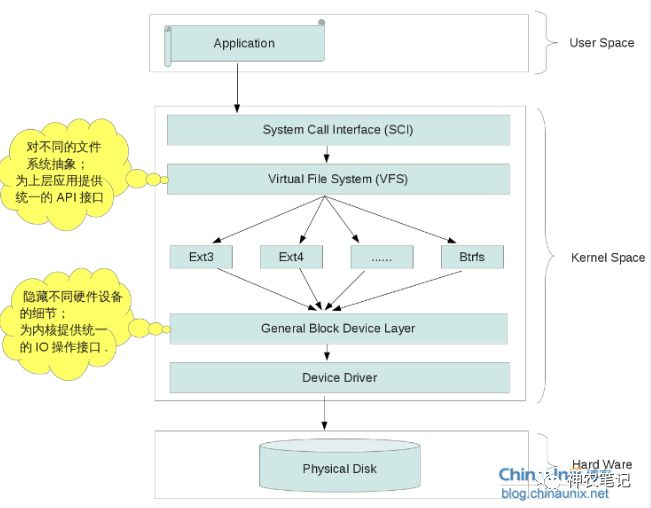

- Overall architecture diagram

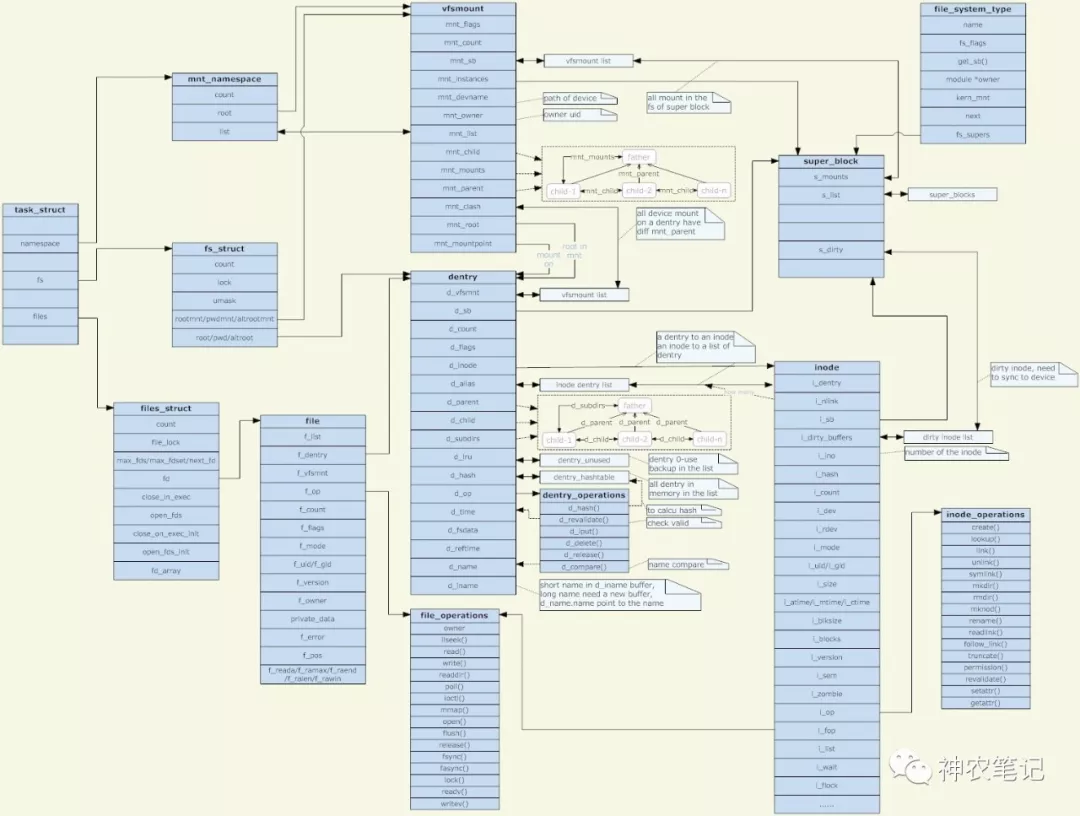

- VFS class relationship diagram

- VFS layer hierarchy diagram

- Initialization

- File read path analysis

- File read and BIO scheduling analysis

- File write path analysis

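Overall architecture diagram

VFS class relationship diagram

VFS layer hierarchy diagram

Initialization

In the kernel's main startup function: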

start_kernel()

{

vfs_caches_init_early();

vfs_caches_init();

rest_init();

}

rest_init()

{

kernel_init(); /* not a direct call: started as a kernel thread via kernel_thread() */

}

kernel_init()

{

kernel_init_freeable();

}

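That is roughly the full set of functions involved during startup.

vfs_caches_init_early performs the early initialization (the dentry and inode hash tables).

vfs_caches_init mainly initializes the inode cache, the dentry cache, and so on. It also sets up the initial in-memory root mount.

The key part, and the hardest to follow, is rest_init.

rest_init starts kernel_init as a kernel thread, which in turn calls kernel_init_freeable. What does this do?

It handles one critical situation: at boot, before anything has been mounted, the kernel has no usable root file system, as if the disk were empty.

The process is as follows:

1. No root file system on disk is available yet. What now?

2. The initramfs is loaded from flash and unpacked into memory, and a temporary "/" root file system is set up there. The operations needed early on, such as setting up sysfs devices, are done in this in-memory fs.

3. In the final stage the kernel goes to execute the first process. If the in-memory fs contains /init, that program runs and is responsible for mounting the real root file system on disk and switching to it. The in-memory fs is not copied to disk; the root simply moves from memory to the file system on disk.

4. If the in-memory fs has no /init, kernel_init_freeable calls prepare_namespace() to mount the root device, and the kernel then looks for an init program there (/sbin/init, /etc/init, /bin/init, then /bin/sh). If one is found, it is started and completes the rest of the boot.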
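The choice of the first process can be modelled in a few lines of user-space C. This is only a sketch under stated assumptions: `choose_init` and the three predicate helpers are hypothetical names, the `init=` and `rdinit=` boot parameters are ignored, and the real kernel mounts the root device and panics on failure, where this model just returns a path or NULL.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Predicate telling whether a path exists in some file system (hypothetical). */
typedef int (*exists_fn)(const char *path);

/*
 * Model of the decision made around kernel_init_freeable()/kernel_init():
 * prefer /init from the in-memory rootfs, otherwise fall back to the
 * candidates the kernel tries on the real root, in this order.
 */
static const char *choose_init(exists_fn in_initramfs, exists_fn on_real_root)
{
    static const char *const fallbacks[] = {
        "/sbin/init", "/etc/init", "/bin/init", "/bin/sh"
    };
    size_t i;

    assert(in_initramfs != NULL && on_real_root != NULL);

    if (in_initramfs("/init"))
        return "/init";

    /* The kernel would call prepare_namespace() here to mount the real root. */
    for (i = 0; i < sizeof(fallbacks) / sizeof(fallbacks[0]); i++)
        if (on_real_root(fallbacks[i]))
            return fallbacks[i];

    return NULL; /* the real kernel panics: no working init found */
}

/* Example predicates used to exercise the model. */
static int has_nothing(const char *path) { (void)path; return 0; }
static int has_slash_init(const char *path) { return strcmp(path, "/init") == 0; }
static int has_sbin_init(const char *path) { return strcmp(path, "/sbin/init") == 0; }
```

Calling `choose_init(has_nothing, has_sbin_init)` models a boot without an initramfs /init, where the init program is taken from the disk.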

File read path analysis

fs/read_write.c

ssize_t vfs_read(struct file *file, char __user *buf, size_t count, loff_t *pos)

{

ssize_t ret;

if (!(file->f_mode & FMODE_READ))

return -EBADF;

if (!file->f_op || (!file->f_op->read && !file->f_op->aio_read))

return -EINVAL;

if (unlikely(!access_ok(VERIFY_WRITE, buf, count)))

return -EFAULT;

ret = rw_verify_area(READ, file, pos, count);

if (ret >= 0) {

count = ret;

if (file->f_op->read)

ret = file->f_op->read(file, buf, count, pos);

else

ret = do_sync_read(file, buf, count, pos);

if (ret > 0) {

fsnotify_access(file);

add_rchar(current, ret);

}

inc_syscr(current);

}

return ret;

}

ssize_t do_sync_read(struct file *filp, char __user *buf, size_t len, loff_t *ppos)

{

struct iovec iov = { .iov_base = buf, .iov_len = len };

struct kiocb kiocb;

ssize_t ret;

init_sync_kiocb(&kiocb, filp);

kiocb.ki_pos = *ppos;

kiocb.ki_left = len;

kiocb.ki_nbytes = len;

ret = filp->f_op->aio_read(&kiocb, &iov, 1, kiocb.ki_pos);

if (-EIOCBQUEUED == ret)

ret = wait_on_sync_kiocb(&kiocb);

*ppos = kiocb.ki_pos;

return ret;

}
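The dispatch in vfs_read can be tried out in user space with a small model. This is a sketch, not kernel code: `mini_file`, `mini_fops`, `mini_vfs_read` and `mem_aio_read` are hypothetical names, and the fallback calls the aio_read method directly where the kernel goes through do_sync_read and a kiocb.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>

struct mini_file;

/* Two optional read methods, as in struct file_operations of that era. */
struct mini_fops {
    long (*read)(struct mini_file *f, char *buf, size_t n, long long *pos);
    long (*aio_read)(struct mini_file *f, char *buf, size_t n, long long *pos);
};

struct mini_file {
    const struct mini_fops *f_op;
    const char *data;   /* file contents held in memory */
    size_t size;
};

/* Same decision as vfs_read: use ->read if present, else the aio path. */
static long mini_vfs_read(struct mini_file *f, char *buf, size_t n, long long *pos)
{
    assert(f != NULL && buf != NULL && pos != NULL);
    if (!f->f_op || (!f->f_op->read && !f->f_op->aio_read))
        return -EINVAL;
    if (f->f_op->read)
        return f->f_op->read(f, buf, n, pos);
    return f->f_op->aio_read(f, buf, n, pos); /* stands in for do_sync_read() */
}

/* Hypothetical backend: copy from the in-memory contents and advance pos. */
static long mem_aio_read(struct mini_file *f, char *buf, size_t n, long long *pos)
{
    size_t left;

    if (*pos < 0 || (size_t)*pos >= f->size)
        return 0; /* at or past end of file */
    left = f->size - (size_t)*pos;
    if (n > left)
        n = left;
    memcpy(buf, f->data + *pos, n);
    *pos += (long long)n;
    return (long)n;
}
```

A file whose operations table sets only `aio_read` is still readable through `mini_vfs_read`, which is the point of the fallback.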

fs/ext4/file.c

const struct file_operations ext4_file_operations = {

.llseek = ext4_llseek,

.read = do_sync_read,

.write = do_sync_write,

.aio_read = generic_file_aio_read,

.aio_write = ext4_file_write,

.unlocked_ioctl = ext4_ioctl,

#ifdef CONFIG_COMPAT

.compat_ioctl = ext4_compat_ioctl,

#endif

.mmap = ext4_file_mmap,

.open = ext4_file_open,

.release = ext4_release_file,

.fsync = ext4_sync_file,

.splice_read = generic_file_splice_read,

.splice_write = generic_file_splice_write,

.fallocate = ext4_fallocate,

};

generic_file_aio_read

./mm/filemap.c

ssize_t

generic_file_aio_read(struct kiocb *iocb, const struct iovec *iov,

unsigned long nr_segs, loff_t pos)

{

struct file *filp = iocb->ki_filp;

ssize_t retval;

unsigned long seg = 0;

size_t count;

loff_t *ppos = &iocb->ki_pos;

count = 0;

retval = generic_segment_checks(iov, &nr_segs, &count, VERIFY_WRITE);

if (retval)

return retval;

/* coalesce the iovecs and go direct-to-BIO for O_DIRECT */

if (filp->f_flags & O_DIRECT) {

loff_t size;

struct address_space *mapping;

struct inode *inode;

mapping = filp->f_mapping;

inode = mapping->host;

if (!count)

goto out; /* skip atime */

size = i_size_read(inode);

if (pos < size) {

retval = filemap_write_and_wait_range(mapping, pos,

pos + iov_length(iov, nr_segs) - 1);

if (!retval) {

retval = mapping->a_ops->direct_IO(READ, iocb,

iov, pos, nr_segs);

}

if (retval > 0) {

*ppos = pos + retval;

count -= retval;

}

...

if (retval < 0 || !count || *ppos >= size) {

file_accessed(filp);

goto out;

}

}

}

count = retval;

for (seg = 0; seg < nr_segs; seg++) {

read_descriptor_t desc;

loff_t offset = 0;

...

desc.written = 0;

desc.arg.buf = iov[seg].iov_base + offset;

desc.count = iov[seg].iov_len - offset;

if (desc.count == 0)

continue;

desc.error = 0;

do_generic_file_read(filp, ppos, &desc, file_read_actor);

retval += desc.written;

if (desc.error) {

retval = retval ?: desc.error;

break;

}

if (desc.count > 0)

break;

}

out:

return retval;

}

do_generic_file_read

./mm/filemap.c

static void do_generic_file_read(struct file *filp, loff_t *ppos,

read_descriptor_t *desc, read_actor_t actor)

{

struct address_space *mapping = filp->f_mapping;

struct inode *inode = mapping->host;

struct file_ra_state *ra = &filp->f_ra;

pgoff_t index;

pgoff_t last_index;

pgoff_t prev_index;

unsigned long offset;

unsigned int prev_offset;

int error;

index = *ppos >> PAGE_CACHE_SHIFT;

prev_index = ra->prev_pos >> PAGE_CACHE_SHIFT;

prev_offset = ra->prev_pos & (PAGE_CACHE_SIZE-1);

last_index = (*ppos + desc->count + PAGE_CACHE_SIZE-1) >> PAGE_CACHE_SHIFT;

offset = *ppos & ~PAGE_CACHE_MASK;

for (;;) {

struct page *page;

pgoff_t end_index;

loff_t isize;

unsigned long nr, ret;

cond_resched();

find_page:

page = find_get_page(mapping, index);

if (!page) {

page_cache_sync_readahead(mapping,

ra, filp,

index, last_index - index);

page = find_get_page(mapping, index);

if (unlikely(page == NULL))

goto no_cached_page;

}

if (PageReadahead(page)) {

page_cache_async_readahead(mapping,

ra, filp, page,

index, last_index - index);

}

if (!PageUptodate(page)) {

if (inode->i_blkbits == PAGE_CACHE_SHIFT ||

!mapping->a_ops->is_partially_uptodate)

goto page_not_up_to_date;

if (!trylock_page(page))

goto page_not_up_to_date;

/* Did it get truncated before we got the lock? */

if (!page->mapping)

goto page_not_up_to_date_locked;

if (!mapping->a_ops->is_partially_uptodate(page,

desc, offset))

goto page_not_up_to_date_locked;

unlock_page(page);

}

page_ok:

...

isize = i_size_read(inode);

end_index = (isize - 1) >> PAGE_CACHE_SHIFT;

if (unlikely(!isize || index > end_index)) {

page_cache_release(page);

goto out;

}

/* nr is the maximum number of bytes to copy from this page */

nr = PAGE_CACHE_SIZE;

if (index == end_index) {

nr = ((isize - 1) & ~PAGE_CACHE_MASK) + 1;

if (nr <= offset) {

page_cache_release(page);

goto out;

}

}

nr = nr - offset;

...

if (mapping_writably_mapped(mapping))

flush_dcache_page(page);

...

if (prev_index != index || offset != prev_offset)

mark_page_accessed(page);

prev_index = index;

....

ret = actor(desc, page, offset, nr);

offset += ret;

index += offset >> PAGE_CACHE_SHIFT;

offset &= ~PAGE_CACHE_MASK;

prev_offset = offset;

page_cache_release(page);

if (ret == nr && desc->count)

continue;

goto out;

page_not_up_to_date:

error = lock_page_killable(page);

if (unlikely(error))

goto readpage_error;

page_not_up_to_date_locked:

/* Did it get truncated before we got the lock? */

if (!page->mapping) {

unlock_page(page);

page_cache_release(page);

continue;

}

/* Did somebody else fill it already? */

if (PageUptodate(page)) {

unlock_page(page);

goto page_ok;

}

readpage:

ClearPageError(page);

error = mapping->a_ops->readpage(filp, page);

if (unlikely(error)) {

if (error == AOP_TRUNCATED_PAGE) {

page_cache_release(page);

goto find_page;

}

goto readpage_error;

}

if (!PageUptodate(page)) {

error = lock_page_killable(page);

if (unlikely(error))

goto readpage_error;

if (!PageUptodate(page)) {

if (page->mapping == NULL) {

/*

* invalidate_mapping_pages got it

*/

unlock_page(page);

page_cache_release(page);

goto find_page;

}

unlock_page(page);

shrink_readahead_size_eio(filp, ra);

error = -EIO;

goto readpage_error;

}

unlock_page(page);

}

goto page_ok;

readpage_error:

/* UHHUH! A synchronous read error occurred. Report it */

desc->error = error;

page_cache_release(page);

goto out;

no_cached_page:

page = page_cache_alloc_cold(mapping);

if (!page) {

desc->error = -ENOMEM;

goto out;

}

error = add_to_page_cache_lru(page, mapping,

index, GFP_KERNEL);

if (error) {

page_cache_release(page);

if (error == -EEXIST)

goto find_page;

desc->error = error;

goto out;

}

goto readpage;

}

out:

ra->prev_pos = prev_index;

ra->prev_pos <<= PAGE_CACHE_SHIFT;

ra->prev_pos |= prev_offset;

*ppos = ((loff_t)index << PAGE_CACHE_SHIFT) + offset;

file_accessed(filp);

}
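The index and offset arithmetic at the top of do_generic_file_read, and the computation of nr for the last page, are easier to check with concrete numbers. The following is a user-space sketch that assumes 4 KiB pages; `split_read`, `bytes_valid_in_page` and the `CP_` macros are hypothetical names introduced only for this illustration.

```c
#include <assert.h>
#include <stdint.h>

#define CP_PAGE_SHIFT 12                      /* assumption: 4 KiB pages */
#define CP_PAGE_SIZE  (1UL << CP_PAGE_SHIFT)

struct page_span {
    uint64_t index;       /* first page touched by the read */
    uint64_t last_index;  /* one past the last page touched */
    unsigned long offset; /* byte offset inside the first page */
};

/* Mirrors the index/last_index/offset setup in do_generic_file_read. */
static struct page_span split_read(uint64_t pos, uint64_t count)
{
    struct page_span s;

    assert(count <= UINT64_MAX - pos - (CP_PAGE_SIZE - 1)); /* no overflow */
    s.index = pos >> CP_PAGE_SHIFT;
    s.last_index = (pos + count + CP_PAGE_SIZE - 1) >> CP_PAGE_SHIFT;
    s.offset = (unsigned long)(pos & (CP_PAGE_SIZE - 1));
    return s;
}

/* Mirrors the nr computation: how many bytes of page `index` lie inside the file. */
static unsigned long bytes_valid_in_page(uint64_t isize, uint64_t index)
{
    uint64_t end_index;

    if (isize == 0)
        return 0;
    end_index = (isize - 1) >> CP_PAGE_SHIFT;
    if (index > end_index)
        return 0;
    if (index < end_index)
        return CP_PAGE_SIZE;
    return (unsigned long)((isize - 1) & (CP_PAGE_SIZE - 1)) + 1;
}
```

For a 10000-byte file, pages 0 and 1 are full and page 2 holds the remaining 1808 bytes, which is exactly the value the loop uses to stop copying at end of file.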

File read and BIO scheduling analysis

int ext4_mpage_readpages(struct address_space *mapping,

struct list_head *pages, struct page *page,

unsigned nr_pages)

{

struct bio *bio = NULL;

unsigned page_idx;

sector_t last_block_in_bio = 0;

struct inode *inode = mapping->host;

const unsigned blkbits = inode->i_blkbits;

const unsigned blocks_per_page = PAGE_CACHE_SIZE >> blkbits;

const unsigned blocksize = 1 << blkbits;

sector_t block_in_file;

sector_t last_block;

sector_t last_block_in_file;

sector_t blocks[MAX_BUF_PER_PAGE];

unsigned page_block;

struct block_device *bdev = inode->i_sb->s_bdev;

int length;

unsigned relative_block = 0;

struct ext4_map_blocks map;

map.m_pblk = 0;

map.m_lblk = 0;

map.m_len = 0;

map.m_flags = 0;

for (page_idx = 0; nr_pages; page_idx++, nr_pages--) {

int fully_mapped = 1;

unsigned first_hole = blocks_per_page;

prefetchw(&page->flags);

if (pages) {

page = list_entry(pages->prev, struct page, lru);

list_del(&page->lru);

if (add_to_page_cache_lru(page, mapping, page->index,

mapping_gfp_constraint(mapping, GFP_KERNEL)))

goto next_page;

}

if (page_has_buffers(page))

goto confused;

block_in_file = (sector_t)page->index << (PAGE_CACHE_SHIFT - blkbits);

last_block = block_in_file + nr_pages * blocks_per_page;

last_block_in_file = (i_size_read(inode) + blocksize - 1) >> blkbits;

if (last_block > last_block_in_file)

last_block = last_block_in_file;

page_block = 0;

/*

* Map blocks using the previous result first.

*/

if ((map.m_flags & EXT4_MAP_MAPPED) &&

block_in_file > map.m_lblk &&

block_in_file < (map.m_lblk + map.m_len)) {

unsigned map_offset = block_in_file - map.m_lblk;

unsigned last = map.m_len - map_offset;

for (relative_block = 0; ; relative_block++) {

if (relative_block == last) {

/* needed? */

map.m_flags &= ~EXT4_MAP_MAPPED;

break;

}

if (page_block == blocks_per_page)

break;

blocks[page_block] = map.m_pblk + map_offset +

relative_block;

page_block++;

block_in_file++;

}

}

/*

* Then do more ext4_map_blocks() calls until we are

* done with this page.

*/

while (page_block < blocks_per_page) {

if (block_in_file < last_block) {

map.m_lblk = block_in_file;

map.m_len = last_block - block_in_file;

if (ext4_map_blocks(NULL, inode, &map, 0) < 0) {

set_error_page:

SetPageError(page);

zero_user_segment(page, 0,

PAGE_CACHE_SIZE);

unlock_page(page);

goto next_page;

}

}

if ((map.m_flags & EXT4_MAP_MAPPED) == 0) {

fully_mapped = 0;

if (first_hole == blocks_per_page)

first_hole = page_block;

page_block++;

block_in_file++;

continue;

}

if (first_hole != blocks_per_page)

goto confused; /* hole -> non-hole */

/* Contiguous blocks? */

if (page_block && blocks[page_block-1] != map.m_pblk-1)

goto confused;

for (relative_block = 0; ; relative_block++) {

if (relative_block == map.m_len) {

/* needed? */

map.m_flags &= ~EXT4_MAP_MAPPED;

break;

} else if (page_block == blocks_per_page)

break;

blocks[page_block] = map.m_pblk+relative_block;

page_block++;

block_in_file++;

}

}

if (first_hole != blocks_per_page) {

zero_user_segment(page, first_hole << blkbits,

PAGE_CACHE_SIZE);

if (first_hole == 0) {

SetPageUptodate(page);

unlock_page(page);

goto next_page;

}

} else if (fully_mapped) {

SetPageMappedToDisk(page);

}

if (fully_mapped && blocks_per_page == 1 &&

!PageUptodate(page) && cleancache_get_page(page) == 0) {

SetPageUptodate(page);

goto confused;

}

/*

* This page will go to BIO. Do we need to send this

* BIO off first?

*/

if (bio && (last_block_in_bio != blocks[0] - 1)) {

submit_and_realloc:

ext4_submit_bio_read(bio);

bio = NULL;

}

if (bio == NULL) {

struct ext4_crypto_ctx *ctx = NULL;

if (ext4_encrypted_inode(inode) &&

S_ISREG(inode->i_mode)) {

ctx = ext4_get_crypto_ctx(inode);

if (IS_ERR(ctx))

goto set_error_page;

}

bio = bio_alloc(GFP_KERNEL,

min_t(int, nr_pages, BIO_MAX_PAGES));

if (!bio) {

if (ctx)

ext4_release_crypto_ctx(ctx);

goto set_error_page;

}

bio->bi_bdev = bdev;

bio->bi_iter.bi_sector = blocks[0] << (blkbits - 9);

bio->bi_end_io = mpage_end_io;

bio->bi_private = ctx;

}

length = first_hole << blkbits;

if (bio_add_page(bio, page, length, 0) < length)

goto submit_and_realloc;

if (((map.m_flags & EXT4_MAP_BOUNDARY) &&

(relative_block == map.m_len)) ||

(first_hole != blocks_per_page)) {

ext4_submit_bio_read(bio);

bio = NULL;

} else

last_block_in_bio = blocks[blocks_per_page - 1];

goto next_page;

confused:

if (bio) {

ext4_submit_bio_read(bio);

bio = NULL;

}

if (!PageUptodate(page))

block_read_full_page(page, ext4_get_block);

else

unlock_page(page);

next_page:

if (pages)

page_cache_release(page);

}

BUG_ON(pages && !list_empty(pages));

if (bio)

ext4_submit_bio_read(bio);

return 0;

}

int bio_add_page(struct bio *bio, struct page *page,

unsigned int len, unsigned int offset)

{

struct bio_vec *bv;

/*

* cloned bio must not modify vec list

*/

if (WARN_ON_ONCE(bio_flagged(bio, BIO_CLONED)))

return 0;

/*

* For filesystems with a blocksize smaller than the pagesize

* we will often be called with the same page as last time and

* a consecutive offset. Optimize this special case.

*/

if (bio->bi_vcnt > 0) {

bv = &bio->bi_io_vec[bio->bi_vcnt - 1];

if (page == bv->bv_page &&

offset == bv->bv_offset + bv->bv_len) {

bv->bv_len += len;

goto done;

}

}

if (bio->bi_vcnt >= bio->bi_max_vecs)

return 0;

bv = &bio->bi_io_vec[bio->bi_vcnt];

bv->bv_page = page;

bv->bv_len = len;

bv->bv_offset = offset;

bio->bi_vcnt++;

done:

bio->bi_iter.bi_size += len;

return len;

}

// submit_bio(bio)

/**************************************** Driver code ****************************************/

getbio(); /* pseudocode: the driver receives the bio */

struct bio_vec* bvec;

pRHdata = pdev->data + (bio->bi_sector * RAMHD_SECTOR_SIZE);

bio_for_each_segment(bvec, bio, i){

pBuffer = kmap(bvec->bv_page) + bvec->bv_offset;

switch(bio_data_dir(bio)){

case READ:

memcpy(pBuffer, pRHdata, bvec->bv_len);

flush_dcache_page(bvec->bv_page);

break;

case WRITE:

flush_dcache_page(bvec->bv_page);

memcpy(pRHdata, pBuffer, bvec->bv_len);

break;

default:

kunmap(bvec->bv_page);

goto out;

}

kunmap(bvec->bv_page);

pRHdata += bvec->bv_len;

}
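The driver loop above can be imitated in user space to see how the starting sector and the segment lengths drive the copies. This is a sketch under assumptions: `rd_segment` stands in for a bio_vec, `rd_transfer` and `RD_SECTOR_SIZE` are hypothetical names, and plain buffers replace kmap'ed pages.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define RD_SECTOR_SIZE 512UL /* assumed sector size of the model disk */

/* One data segment, standing in for a bio_vec (page + offset + length). */
struct rd_segment {
    unsigned char *buf;
    size_t len;
};

/*
 * Copy between the segments and an in-memory disk, starting at `sector`.
 * Returns the number of bytes moved, or -1 as soon as a segment would run
 * past the end of the disk (earlier segments have already been copied).
 */
static long rd_transfer(unsigned char *disk, size_t disk_size,
                        unsigned long sector,
                        const struct rd_segment *segs, size_t nsegs,
                        int is_write)
{
    size_t pos = sector * RD_SECTOR_SIZE;
    long done = 0;
    size_t i;

    assert(disk != NULL && segs != NULL);
    for (i = 0; i < nsegs; i++) {
        if (pos > disk_size || segs[i].len > disk_size - pos)
            return -1;
        if (is_write)
            memcpy(disk + pos, segs[i].buf, segs[i].len);
        else
            memcpy(segs[i].buf, disk + pos, segs[i].len);
        pos += segs[i].len;          /* same role as pRHdata += bv_len */
        done += (long)segs[i].len;
    }
    return done;
}
```

Writing two 3-byte segments at sector 2 places six contiguous bytes at byte offset 1024 of the disk, just as consecutive bio_vec entries land back to back.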

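- First question: while preparing to explore CFQ and its red-black tree, I learned that the tree should be organized by the disk's bi_sector. So where does bi_sector come from? That led me back to the readpage path.

- Second question: I had long wondered about the bio itself. It should be made up of several pages, but I had not seen where that happens, so I analyzed the code with a single-page bio, which does not affect the analysis. Today I look at that as well.

Answer to the first question:

The code above shows where bi_sector comes from. The two relevant lines are: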

const unsigned blkbits = inode->i_blkbits;

bio->bi_iter.bi_sector = blocks[0] << (blkbits - 9);

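Some of this was hazy to me at first. blkbits is not an inode number or a disk location: inode->i_blkbits is the base-2 logarithm of the file system block size (12 for 4 KiB blocks). blocks[0] is the physical block number of the first block of the page, filled in from map.m_pblk after ext4_map_blocks. How that mapping is ultimately derived still needs exploring.

As for the subtraction of 9 and the left shift of blocks: the block layer counts in 512-byte sectors, and 512 = 2^9, so shifting a block number left by (blkbits - 9) converts it from file system blocks to sectors.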
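The shift in `blocks[0] << (blkbits - 9)` can be checked with a tiny stand-alone function. This is only an illustration: `fs_block_to_sector` and `SK_SECTOR_SHIFT` are names made up here, and the sketch assumes the block size is a power of two no smaller than 512 bytes.

```c
#include <assert.h>
#include <stdint.h>

#define SK_SECTOR_SHIFT 9 /* block layer sectors are 512 = 1 << 9 bytes */

/* Convert a file system block number into a 512-byte sector number. */
static uint64_t fs_block_to_sector(uint64_t block, unsigned blkbits)
{
    assert(blkbits >= SK_SECTOR_SHIFT); /* a block holds at least one sector */
    return block << (blkbits - SK_SECTOR_SHIFT);
}
```

With 4 KiB blocks (blkbits = 12) each block spans 8 sectors, so block 100 starts at sector 800.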

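Answer to the second question:

bio_alloc

bio_add_page

If bio is still NULL, bio_alloc allocates one; subsequent pages are then added with bio_add_page.

Inside bio_add_page, following how the bio_vec entries are filled shows clearly that each page pointer is stored in bi_io_vec, which is an array and not a linked list. When a new chunk continues the same page at the next offset, it is merged into the last entry.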
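The array-plus-merge behaviour of bio_add_page can be reproduced with a reduced model. This is a sketch, not the kernel structure: `bv_model`, `bio_model`, `bio_model_add_page` and `BM_MAX_VECS` are hypothetical names, and the cloned-bio check is left out.

```c
#include <assert.h>
#include <stddef.h>

#define BM_MAX_VECS 4 /* assumed capacity, plays the role of bi_max_vecs */

/* Reduced bio_vec: which page, how many bytes, starting where. */
struct bv_model {
    const void *page;
    unsigned len;
    unsigned offset;
};

/* Reduced bio: a fixed array of segments plus counters. */
struct bio_model {
    struct bv_model vec[BM_MAX_VECS];
    unsigned vcnt; /* entries in use */
    unsigned size; /* total bytes queued */
};

/* Returns len on success, 0 if the array is full (as bio_add_page does). */
static unsigned bio_model_add_page(struct bio_model *bio, const void *page,
                                   unsigned len, unsigned offset)
{
    struct bv_model *bv;

    assert(bio != NULL);
    if (bio->vcnt > 0) {
        bv = &bio->vec[bio->vcnt - 1];
        /* Same page and contiguous offset: extend the last entry. */
        if (page == bv->page && offset == bv->offset + bv->len) {
            bv->len += len;
            bio->size += len;
            return len;
        }
    }
    if (bio->vcnt >= BM_MAX_VECS)
        return 0;
    bv = &bio->vec[bio->vcnt];
    bv->page = page;
    bv->len = len;
    bv->offset = offset;
    bio->vcnt++;
    bio->size += len;
    return len;
}
```

Adding two consecutive 1 KiB chunks of the same page leaves a single 2 KiB entry, while a chunk from a different page takes the next array slot.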

File write path analysis

We start the analysis from vfs_write.

ssize_t vfs_write(struct file *file, const char __user *buf, size_t count, loff_t *pos)

{

ssize_t ret;

if (!(file->f_mode & FMODE_WRITE))

return -EBADF;

if (!(file->f_mode & FMODE_CAN_WRITE))

return -EINVAL;

if (unlikely(!access_ok(VERIFY_READ, buf, count)))

return -EFAULT;

ret = rw_verify_area(WRITE, file, pos, count);

if (ret >= 0) {

count = ret;

file_start_write(file);

ret = __vfs_write(file, buf, count, pos);

if (ret > 0) {

fsnotify_modify(file);

add_wchar(current, ret);

}

inc_syscw(current);

file_end_write(file);

}

return ret;

}

- access_ok

- __vfs_write

- fsnotify_modify

ssize_t __vfs_write(struct file *file, const char __user *p, size_t count,

loff_t *pos)

{

if (file->f_op->write)

return file->f_op->write(file, p, count, pos);

else if (file->f_op->write_iter)

return new_sync_write(file, p, count, pos);

else

return -EINVAL;

}

Here the write method of f_op is checked. Take ext4 as an example:

const struct file_operations ext4_file_operations = {

.llseek = ext4_llseek,

.read_iter = generic_file_read_iter,

.write_iter = ext4_file_write_iter,

.unlocked_ioctl = ext4_ioctl,

#ifdef CONFIG_COMPAT

.compat_ioctl = ext4_compat_ioctl,

#endif

.mmap = ext4_file_mmap,

.open = ext4_file_open,

.release = ext4_release_file,

.fsync = ext4_sync_file,

.splice_read = generic_file_splice_read,

.splice_write = iter_file_splice_write,

.fallocate = ext4_fallocate,

};

ext4 sets no .write method, so the call goes through the write_iter interface:

static ssize_t new_sync_write(struct file *filp, const char __user *buf, size_t len, loff_t *ppos)

{

struct iovec iov = { .iov_base = (void __user *)buf, .iov_len = len };

struct kiocb kiocb;

struct iov_iter iter;

ssize_t ret;

init_sync_kiocb(&kiocb, filp);

kiocb.ki_pos = *ppos;

iov_iter_init(&iter, WRITE, &iov, 1, len);

ret = filp->f_op->write_iter(&kiocb, &iter);

BUG_ON(ret == -EIOCBQUEUED);

if (ret > 0)

*ppos = kiocb.ki_pos;

return ret;

}

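This only converts the data structures: the user buffer is wrapped in an iovec and an iov_iter, and the file position is carried in a kiocb.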

static ssize_t

ext4_file_write_iter(struct kiocb *iocb, struct iov_iter *from)

{

struct file *file = iocb->ki_filp;

struct inode *inode = file_inode(iocb->ki_filp);

struct mutex *aio_mutex = NULL;

struct blk_plug plug;

int o_direct = iocb->ki_flags & IOCB_DIRECT;

int overwrite = 0;

ssize_t ret;

if (o_direct) {

size_t length = iov_iter_count(from);

loff_t pos = iocb->ki_pos;

blk_start_plug(&plug);

if (ext4_should_dioread_nolock(inode) && !aio_mutex &&

!file->f_mapping->nrpages && pos + length <= i_size_read(inode)) {

struct ext4_map_blocks map;

unsigned int blkbits = inode->i_blkbits;

int err, len;

map.m_lblk = pos >> blkbits;

map.m_len = (EXT4_BLOCK_ALIGN(pos + length, blkbits) >> blkbits)

- map.m_lblk;

len = map.m_len;

err = ext4_map_blocks(NULL, inode, &map, 0);

if (err == len && (map.m_flags & EXT4_MAP_MAPPED))

overwrite = 1;

}

}

ret = __generic_file_write_iter(iocb, from);

mutex_unlock(&inode->i_mutex);

if (ret > 0) {

ssize_t err;

err = generic_write_sync(file, iocb->ki_pos - ret, ret);

if (err < 0)

ret = err;

}

if (o_direct)

blk_finish_plug(&plug);

if (aio_mutex)

mutex_unlock(aio_mutex);

return ret;

out:

mutex_unlock(&inode->i_mutex);

if (aio_mutex)

mutex_unlock(aio_mutex);

return ret;

}

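Here o_direct is checked. It is the direct I/O flag (IOCB_DIRECT), under which data bypasses the page cache. Some preparatory work is done for it first; for now I assume the flag is not set.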

ssize_t __generic_file_write_iter(struct kiocb *iocb, struct iov_iter *from)

{

struct file *file = iocb->ki_filp;

struct address_space * mapping = file->f_mapping;

struct inode *inode = mapping->host;

ssize_t written = 0;

ssize_t err;

ssize_t status;

current->backing_dev_info = inode_to_bdi(inode);

err = file_remove_privs(file);

if (err)

goto out;

err = file_update_time(file);

if (err)

goto out;

if (iocb->ki_flags & IOCB_DIRECT) {

loff_t pos, endbyte;

written = generic_file_direct_write(iocb, from, iocb->ki_pos);

if (written < 0 || !iov_iter_count(from) || IS_DAX(inode))

goto out;

status = generic_perform_write(file, from, pos = iocb->ki_pos);

if (unlikely(status < 0)) {

err = status;

goto out;

}

endbyte = pos + status - 1;

err = filemap_write_and_wait_range(mapping, pos, endbyte);

if (err == 0) {

iocb->ki_pos = endbyte + 1;

written += status;

invalidate_mapping_pages(mapping,

pos >> PAGE_CACHE_SHIFT,

endbyte >> PAGE_CACHE_SHIFT);

} else {

}

} else {

written = generic_perform_write(file, from, iocb->ki_pos);

if (likely(written > 0))

iocb->ki_pos += written;

}

out:

current->backing_dev_info = NULL;

return written ? written : err;

}

IOCB_DIRECT is checked again here. That is the direct-write path, which we skip for now.

ssize_t generic_perform_write(struct file *file,

struct iov_iter *i, loff_t pos)

{

struct address_space *mapping = file->f_mapping;

const struct address_space_operations *a_ops = mapping->a_ops;

long status = 0;

ssize_t written = 0;

unsigned int flags = 0;

if (!iter_is_iovec(i))

flags |= AOP_FLAG_UNINTERRUPTIBLE;

do {

struct page *page;

unsigned long offset; /* Offset into pagecache page */

unsigned long bytes; /* Bytes to write to page */

size_t copied; /* Bytes copied from user */

void *fsdata;

offset = (pos & (PAGE_CACHE_SIZE - 1));

bytes = min_t(unsigned long, PAGE_CACHE_SIZE - offset,

iov_iter_count(i));

again:

if (unlikely(iov_iter_fault_in_readable(i, bytes))) {

status = -EFAULT;

break;

}

if (fatal_signal_pending(current)) {

status = -EINTR;

break;

}

status = a_ops->write_begin(file, mapping, pos, bytes, flags,

&page, &fsdata);

if (unlikely(status < 0))

break;

if (mapping_writably_mapped(mapping))

flush_dcache_page(page);

copied = iov_iter_copy_from_user_atomic(page, i, offset, bytes);

flush_dcache_page(page);

status = a_ops->write_end(file, mapping, pos, bytes, copied,

page, fsdata);

if (unlikely(status < 0))

break;

copied = status;

cond_resched();

iov_iter_advance(i, copied);

if (unlikely(copied == 0)) {

bytes = min_t(unsigned long, PAGE_CACHE_SIZE - offset,

iov_iter_single_seg_count(i));

goto again;

}

pos += copied;

written += copied;

balance_dirty_pages_ratelimited(mapping);

} while (iov_iter_count(i));

return written ? written : status;

}

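This loop writes one page at a time, and of course the data goes into the page cache, not to disk.

1. a_ops->write_begin asks the address_space operations to prepare the page for writing.

2. iov_iter_copy_from_user_atomic copies the user data into the page.

3. a_ops->write_end completes the write to the page; the data now sits dirty in the cache.

4. balance_dirty_pages_ratelimited checks the dirty-page limits and throttles the writer if needed.

So the cache page is prepared in write_begin. The throttling is left for later. These two are our targets.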
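The per-page chunking in generic_perform_write can be sketched in user space as follows. The assumptions are loud ones: the "page cache" is a fixed array of 4 KiB buffers, marking a page dirty stands in for write_end, and `mini_cache`, `mini_perform_write` and the `PW_` macros are hypothetical names. Fault handling, signals and throttling are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define PW_PAGE_SIZE 4096UL /* assumption: 4 KiB pages */
#define PW_NPAGES 8         /* size of the model cache */

struct mini_cache {
    unsigned char pages[PW_NPAGES][PW_PAGE_SIZE];
    unsigned dirty[PW_NPAGES];
};

/* Copy `count` bytes to position `pos`, one page-sized chunk at a time. */
static long mini_perform_write(struct mini_cache *c, const void *buf,
                               size_t count, unsigned long pos)
{
    const unsigned char *src = buf;
    long written = 0;

    assert(c != NULL && buf != NULL);
    while (count > 0) {
        unsigned long index = pos / PW_PAGE_SIZE;         /* which page */
        unsigned long offset = pos & (PW_PAGE_SIZE - 1);  /* where in it */
        size_t bytes = PW_PAGE_SIZE - offset;             /* room left in page */

        if (bytes > count)
            bytes = count;
        if (index >= PW_NPAGES)
            break; /* the model cache is full; report a short write */

        memcpy(&c->pages[index][offset], src, bytes); /* the copy step */
        c->dirty[index] = 1;                          /* stands in for write_end */

        src += bytes;
        pos += bytes;
        count -= bytes;
        written += (long)bytes;
    }
    return written;
}
```

A 6000-byte write at position 4000 touches three pages: 96 bytes at the end of page 0, all of page 1, and 1808 bytes at the start of page 2.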

static const struct address_space_operations ext4_aops = {

.readpage = ext4_readpage,

.readpages = ext4_readpages,

.writepage = ext4_writepage,

.writepages = ext4_writepages,

.write_begin = ext4_write_begin,

.write_end = ext4_write_end,

.bmap = ext4_bmap,

.invalidatepage = ext4_invalidatepage,

.releasepage = ext4_releasepage,

.direct_IO = ext4_direct_IO,

.migratepage = buffer_migrate_page,

.is_partially_uptodate = block_is_partially_uptodate,

.error_remove_page = generic_error_remove_page,

};

static int ext4_write_begin(struct file *file, struct address_space *mapping,

loff_t pos, unsigned len, unsigned flags,

struct page **pagep, void **fsdata)

{

struct inode *inode = mapping->host;

int ret, needed_blocks;

handle_t *handle;

int retries = 0;

struct page *page;

pgoff_t index;

unsigned from, to;

trace_android_fs_datawrite_start(inode, pos, len,

current->pid, current->comm);

trace_ext4_write_begin(inode, pos, len, flags);

needed_blocks = ext4_writepage_trans_blocks(inode) + 1;

index = pos >> PAGE_CACHE_SHIFT;

from = pos & (PAGE_CACHE_SIZE - 1);

to = from + len;

if (ext4_test_inode_state(inode, EXT4_STATE_MAY_INLINE_DATA)) {

ret = ext4_try_to_write_inline_data(mapping, inode, pos, len,

flags, pagep);

if (ret < 0)

return ret;

if (ret == 1)

return 0;

}

retry_grab:

page = grab_cache_page_write_begin(mapping, index, flags);

if (!page)

return -ENOMEM;

unlock_page(page);

retry_journal:

handle = ext4_journal_start(inode, EXT4_HT_WRITE_PAGE, needed_blocks);

if (IS_ERR(handle)) {

page_cache_release(page);

return PTR_ERR(handle);

}

lock_page(page);

if (page->mapping != mapping) {

/* The page got truncated from under us */

unlock_page(page);

page_cache_release(page);

ext4_journal_stop(handle);

goto retry_grab;

}

wait_for_stable_page(page);

#ifdef CONFIG_EXT4_FS_ENCRYPTION

if (ext4_should_dioread_nolock(inode))

ret = ext4_block_write_begin(page, pos, len,

ext4_get_block_write);

else

ret = ext4_block_write_begin(page, pos, len,

ext4_get_block);

#else

if (ext4_should_dioread_nolock(inode))

ret = __block_write_begin(page, pos, len, ext4_get_block_write);

else

ret = __block_write_begin(page, pos, len, ext4_get_block);

#endif

if (!ret && ext4_should_journal_data(inode)) {

ret = ext4_walk_page_buffers(handle, page_buffers(page),

from, to, NULL,

do_journal_get_write_access);

}

if (ret) {

unlock_page(page);

if (pos + len > inode->i_size && ext4_can_truncate(inode))

ext4_orphan_add(handle, inode);

ext4_journal_stop(handle);

if (pos + len > inode->i_size) {

ext4_truncate_failed_write(inode);

if (inode->i_nlink)

ext4_orphan_del(NULL, inode);

}

if (ret == -ENOSPC &&

ext4_should_retry_alloc(inode->i_sb, &retries))

goto retry_journal;

page_cache_release(page);

return ret;

}

*pagep = page;

mtk_btag_pidlog_write_begin(*pagep);

return ret;

}

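- The first part performs some checks.

- The handle that follows is the journal transaction handle returned by ext4_journal_start; it is in use while the page's block buffers are prepared.

- The main step is grab_cache_page_write_begin in the middle.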

struct page *grab_cache_page_write_begin(struct address_space *mapping,

pgoff_t index, unsigned flags)

{

struct page *page;

int fgp_flags = FGP_LOCK|FGP_ACCESSED|FGP_WRITE|FGP_CREAT;

if (flags & AOP_FLAG_NOFS)

fgp_flags |= FGP_NOFS;

page = pagecache_get_page(mapping, index, fgp_flags,

mapping_gfp_mask(mapping));

if (page)

wait_for_stable_page(page);

return page;

}

pagecache_get_page fetches a page from the page cache radix tree.

struct page *pagecache_get_page(struct address_space *mapping, pgoff_t offset,

int fgp_flags, gfp_t gfp_mask)

{

struct page *page;

repeat:

page = find_get_entry(mapping, offset);

if (radix_tree_exceptional_entry(page))

page = NULL;

if (!page)

goto no_page;

if (fgp_flags & FGP_LOCK) {

if (fgp_flags & FGP_NOWAIT) {

if (!trylock_page(page)) {

page_cache_release(page);

return NULL;

}

} else {

lock_page(page);

}

/* Has the page been truncated? */

if (unlikely(page->mapping != mapping)) {

unlock_page(page);

page_cache_release(page);

goto repeat;

}

VM_BUG_ON_PAGE(page->index != offset, page);

}

if (page && (fgp_flags & FGP_ACCESSED))

mark_page_accessed(page);

no_page:

if (!page && (fgp_flags & FGP_CREAT)) {

int err;

if ((fgp_flags & FGP_WRITE) && mapping_cap_account_dirty(mapping))

gfp_mask |= __GFP_WRITE;

if (fgp_flags & FGP_NOFS)

gfp_mask &= ~__GFP_FS;

page = __page_cache_alloc(gfp_mask);

if (!page)

return NULL;

if (WARN_ON_ONCE(!(fgp_flags & FGP_LOCK)))

fgp_flags |= FGP_LOCK;

/* Init accessed so avoid atomic mark_page_accessed later */

if (fgp_flags & FGP_ACCESSED)

__SetPageReferenced(page);

err = add_to_page_cache_lru(page, mapping, offset,

gfp_mask & GFP_RECLAIM_MASK);

if (unlikely(err)) {

page_cache_release(page);

page = NULL;

if (err == -EEXIST)

goto repeat;

}

}

return page;

}

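- find_get_entry looks up the page.

- mark_page_accessed marks the page as recently used; page reclaim takes this into account when choosing what to evict.

- The no_page path: if no page was found, allocate one with __page_cache_alloc and then add it with add_to_page_cache_lru.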
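The find-or-create logic of pagecache_get_page can be reduced to a small user-space model. This is a sketch under assumptions: the cache is a 16-slot array searched linearly, locking and reference counting are omitted, and `fg_page`, `fg_mapping`, `fg_get_page`, `FG_CREAT` and `FG_ACCESSED` are hypothetical names that only echo the FGP_ flags.

```c
#include <assert.h>
#include <stddef.h>

#define FG_CREAT    1 /* create the page if it is missing */
#define FG_ACCESSED 2 /* mark the page as referenced */
#define FG_SLOTS    16

struct fg_page {
    unsigned long index;
    int referenced;
    int in_use;
};

struct fg_mapping {
    struct fg_page slots[FG_SLOTS];
};

/* Look the page up; on a miss, create it only if FG_CREAT is set. */
static struct fg_page *fg_get_page(struct fg_mapping *m, unsigned long index,
                                   int flags)
{
    size_t i;

    assert(m != NULL);
    for (i = 0; i < FG_SLOTS; i++) {
        struct fg_page *p = &m->slots[i];
        if (p->in_use && p->index == index) {
            if (flags & FG_ACCESSED)
                p->referenced = 1; /* like mark_page_accessed() */
            return p;
        }
    }
    if (!(flags & FG_CREAT))
        return NULL;

    /* The no_page path: take a free slot and insert the new page. */
    for (i = 0; i < FG_SLOTS; i++) {
        struct fg_page *p = &m->slots[i];
        if (!p->in_use) {
            p->in_use = 1;
            p->index = index;
            p->referenced = (flags & FG_ACCESSED) ? 1 : 0;
            return p;
        }
    }
    return NULL; /* allocation failure in the model */
}
```

A lookup without `FG_CREAT` on an empty mapping returns NULL, while the write path, which always passes the create flag, gets a fresh page back.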

struct page *find_get_entry(struct address_space *mapping, pgoff_t offset)

{

void **pagep;

struct page *page;

rcu_read_lock();

repeat:

page = NULL;

pagep = radix_tree_lookup_slot(&mapping->page_tree, offset);

if (pagep) {

page = radix_tree_deref_slot(pagep);

if (unlikely(!page))

goto out;

if (radix_tree_exception(page)) {

if (radix_tree_deref_retry(page))

goto repeat;

goto out;

}

if (!page_cache_get_speculative(page))

goto repeat;

if (unlikely(page != *pagep)) {

page_cache_release(page);

goto repeat;

}

}

out:

rcu_read_unlock();

return page;

}

find_get_entry is also simple: under rcu_read_lock it looks up the slot with radix_tree_lookup_slot and takes a reference on the page it finds.
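To get a feel for what a radix tree lookup by page index involves, here is a fixed-height user-space model. It is a sketch, not the kernel implementation: the height is fixed at 2, there is no RCU or tagging, and `rx_node`, `rx_lookup` and `rx_insert` are hypothetical names. The 6 bits per level match the kernel's usual RADIX_TREE_MAP_SHIFT.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define RX_SHIFT 6                 /* bits of the index consumed per level */
#define RX_SIZE  (1UL << RX_SHIFT) /* 64 slots per node */
#define RX_MASK  (RX_SIZE - 1)
#define RX_HEIGHT 2                /* model covers indices 0..4095 */

struct rx_node {
    void *slots[RX_SIZE]; /* child nodes, or items at the lowest level */
};

/* Walk down one level per 6-bit chunk of the index. */
static void *rx_lookup(const struct rx_node *root, unsigned long index)
{
    const struct rx_node *node = root;
    int level;

    assert(root != NULL);
    if (index >> (RX_SHIFT * RX_HEIGHT))
        return NULL; /* index beyond what this tree can hold */
    for (level = RX_HEIGHT - 1; level > 0; level--) {
        node = node->slots[(index >> (level * RX_SHIFT)) & RX_MASK];
        if (node == NULL)
            return NULL;
    }
    return node->slots[index & RX_MASK];
}

/* Insert an item, allocating interior nodes on demand. Returns 0 or -1. */
static int rx_insert(struct rx_node *root, unsigned long index, void *item)
{
    struct rx_node *node = root;
    int level;

    assert(root != NULL);
    if (index >> (RX_SHIFT * RX_HEIGHT))
        return -1;
    for (level = RX_HEIGHT - 1; level > 0; level--) {
        unsigned long i = (index >> (level * RX_SHIFT)) & RX_MASK;
        if (node->slots[i] == NULL) {
            node->slots[i] = calloc(1, sizeof(struct rx_node));
            if (node->slots[i] == NULL)
                return -1;
        }
        node = node->slots[i];
    }
    node->slots[index & RX_MASK] = item;
    return 0;
}
```

Index 130 is found by following slot 2 at the top level (130 >> 6) and slot 2 in the leaf (130 & 63), so a lookup costs one pointer hop per level regardless of how many pages are cached.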
