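Model Development with TensorRT

- Model development with TensorRT:

- Perception architecture for industrial applications such as object recognition and autonomous driving:

Model development with TensorRT: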

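The development life cycle of a deep learning model has five steps: defining the goal, modeling the task and building the pipeline, collecting and labeling data, training the model, and deploying the model. Deployment is a critical step, the final push before a model reaches production; by the author's estimate, 95% of companies manage to train a model but stall at the deployment stage.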

The deployment stage places five requirements on model inference:

Throughput

The volume of output within a given period. Often measured in inferences/second or samples/second, per-server throughput is critical to cost-effective scaling in data centers.

Efficiency

Amount of throughput delivered per unit-power, often expressed as performance/watt. Efficiency is another key factor to cost-effective data center scaling, since servers, server racks, and entire data centers must operate within fixed power budgets.

Latency

Time to execute an inference, usually measured in milliseconds. Low latency is critical to delivering rapidly growing, real-time inference-based services.

Accuracy

A trained neural network’s ability to deliver the correct answer. For image classification based usages, the critical metric is expressed as a top-5 or top-1 percentage.

Memory Usage

The host and device memory that need to be reserved to do inference on a network depend on the algorithms used. This constrains what networks and what combinations of networks can run on a given inference platform. This is particularly important for systems where multiple networks are needed and memory resources are limited - such as cascading multi-class detection networks used in intelligent video analytics and multi-camera, multi-network autonomous driving systems.
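As a rough illustration of how three of these metrics are typically measured, the sketch below times a stand-in inference function to obtain latency and throughput, and computes top-k accuracy from class scores. The names `measure`, `top_k_accuracy`, and `fake_infer` are hypothetical helpers introduced for this example, and `fake_infer` is not a real model.

```python
import statistics
import time

def measure(infer, batches, batch_size):
    """Time `infer` on each batch; return (median latency in ms, samples/second)."""
    latencies = []
    start = time.perf_counter()
    for batch in batches:
        t0 = time.perf_counter()
        infer(batch)
        latencies.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start
    throughput = len(batches) * batch_size / elapsed
    return statistics.median(latencies), throughput

def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)

def fake_infer(batch):
    # Hypothetical stand-in for a real model call.
    return [sum(sample) for sample in batch]

batches = [[[0.1, 0.2, 0.3]] * 8 for _ in range(50)]
latency_ms, samples_per_s = measure(fake_infer, batches, batch_size=8)

scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 2]
top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

Efficiency would follow by dividing the measured throughput by the average power draw over the same interval, which requires a platform-specific power reading and is left out here.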

If the deployment hardware is an NVIDIA product, all five of these points can be optimized with TensorRT, which offers the following five features:

Quantization

Most deep learning frameworks train neural networks in full 32-bit precision (FP32). Once the model is fully trained, inference computations can use half precision FP16 or even INT8 tensor operations, since gradient backpropagation is not required for inference. Using lower precision results in smaller model size, lower memory utilization and latency, and higher throughput.
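To make the INT8 idea concrete, here is a minimal sketch of symmetric per-tensor quantization. It assumes the simplest possible range choice (the maximum absolute value); TensorRT selects ranges through calibration, so this is an illustration of the arithmetic rather than of TensorRT's procedure. The function names are hypothetical.

```python
def quantize_int8(values):
    """Map the range [-max|x|, +max|x|] onto the integers [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate FP32 values from INT8 codes."""
    return [q * scale for q in quantized]

weights = [0.02, -1.27, 0.5, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each restored value lies within half a quantization step of the original.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a bounded rounding error per value.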

Kernel Auto Tuning

During the optimization phase TensorRT also chooses from hundreds of specialized kernels, many of them hand-tuned and optimized for a range of parameters and target platforms. As an example, there are several different algorithms to do convolutions. TensorRT will pick the implementation from a library of kernels that delivers the best performance for the target GPU, input data size, filter size, tensor layout, batch size and other parameters. This ensures that the deployed model is performance tuned for the specific deployment platform as well as for the specific neural network being deployed.
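The selection idea can be sketched on the CPU: time several functionally equivalent implementations on a representative input and keep the fastest. The two convolution variants and the `autotune` helper below are hypothetical stand-ins for a kernel library, not TensorRT code.

```python
import time

def conv1d_direct(signal, kernel):
    """Compute each output element in full before moving to the next."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def conv1d_accumulate(signal, kernel):
    """Same result with a different loop order: one kernel tap at a time."""
    k = len(kernel)
    out = [0.0] * (len(signal) - k + 1)
    for j, w in enumerate(kernel):
        for i in range(len(out)):
            out[i] += signal[i + j] * w
    return out

def autotune(candidates, args, repeats=5):
    """Return (name of fastest candidate, best-of-N timing per candidate)."""
    timings = {}
    for name, fn in candidates.items():
        best = float("inf")
        for _ in range(repeats):
            t0 = time.perf_counter()
            fn(*args)
            best = min(best, time.perf_counter() - t0)
        timings[name] = best
    return min(timings, key=timings.get), timings

signal = [float(i % 7) for i in range(2000)]
kernel = [0.25, 0.5, 0.25]
candidates = {"direct": conv1d_direct, "accumulate": conv1d_accumulate}
winner, timings = autotune(candidates, (signal, kernel))
```

Which variant wins depends on the machine and the input shape, which is exactly why the choice is made by measurement on the target rather than fixed in advance.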

Elimination of Redundant Layers and Operations

TensorRT eliminates layers whose outputs are not used and operations that are equivalent to a no-op.

Figure 1. TensorRT’s vertical and horizontal layer fusion and layer elimination optimizations simplify the GoogLeNet Inception module graph, reducing computation and memory overhead.
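A minimal sketch of this kind of graph pruning, assuming a toy graph representation (a dict mapping each layer name to its operation and input names) that is invented for this example: no-op layers are bypassed, then anything not reachable backwards from the requested outputs is dropped.

```python
def eliminate(graph, outputs):
    """graph maps layer name -> (op, [input names]). Returns a pruned copy."""

    def resolve(name):
        # Follow chains of no-op layers back to the tensor they pass through.
        while name in graph and graph[name][0] == "identity":
            name = graph[name][1][0]
        return name

    # Step 1: drop no-op layers and rewire their consumers.
    rewired = {
        name: (op, [resolve(i) for i in inputs])
        for name, (op, inputs) in graph.items()
        if op != "identity"
    }

    # Step 2: keep only layers reachable backwards from the outputs.
    live = set()
    stack = [resolve(o) for o in outputs]
    while stack:
        name = stack.pop()
        if name in live or name not in rewired:
            continue  # already visited, or an external graph input
        live.add(name)
        stack.extend(rewired[name][1])
    return {name: node for name, node in rewired.items() if name in live}

graph = {
    "conv1": ("conv", ["input"]),
    "drop": ("identity", ["conv1"]),   # e.g. dropout, a no-op at inference
    "relu1": ("relu", ["drop"]),
    "aux": ("conv", ["conv1"]),        # output is never consumed
    "fc": ("dense", ["relu1"]),
}
pruned = eliminate(graph, ["fc"])
```

In this example the `drop` and `aux` layers disappear and `relu1` reads directly from `conv1`.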

Layer & Tensor Fusion

TensorRT parses the network computational graph and looks for opportunities to perform graph optimizations. These graph optimizations do not change the underlying computation in the graph: instead, they look to restructure the graph to perform the operations much faster and more efficiently.

When a deep learning framework executes this graph during inference, it makes multiple function calls for each layer. Since each operation is performed on the GPU, this translates to multiple CUDA kernel launches. The kernel computation is often very fast relative to the kernel launch overhead and the cost of reading and writing the tensor data for each layer. This results in a memory bandwidth bottleneck and underutilization of available GPU resources.

TensorRT addresses this by vertically fusing kernels to perform the sequential operations together. This layer fusion reduces kernel launches and avoids writing into and reading from memory between layers. In the network on the left of Figure 1, the convolution, bias and ReLU layers of various sizes can be combined into a single kernel called CBR, as the right side of Figure 1 shows. A simple analogy is making three separate trips to the supermarket to buy three items versus buying all three in a single trip.
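The effect of vertical fusion can be sketched in plain Python with a 1-D convolution. The unfused version makes three passes and materializes two intermediate tensors; the fused version computes, biases, and clamps each output element in a single pass. This is a CPU illustration of the idea with hypothetical function names, not how TensorRT implements its CBR kernel.

```python
def conv(x, w):
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) for i in range(len(x) - k + 1)]

def add_bias(x, b):
    return [v + b for v in x]

def relu(x):
    return [max(0.0, v) for v in x]

def unfused(x, w, b):
    # Three separate "kernels"; two intermediate tensors are written and re-read.
    return relu(add_bias(conv(x, w), b))

def fused_cbr(x, w, b):
    # One "kernel": each output element is written exactly once.
    k = len(w)
    out = []
    for i in range(len(x) - k + 1):
        acc = sum(x[i + j] * w[j] for j in range(k)) + b
        out.append(max(0.0, acc))
    return out

x = [1.0, -2.0, 3.0, -4.0, 5.0]
w = [0.5, -1.0]
b = 0.25
result_unfused = unfused(x, w, b)
result_fused = fused_cbr(x, w, b)
```

Both versions perform the same arithmetic, so the results match; only the number of passes over memory differs.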

TensorRT also recognizes layers that share the same input data and filter size, but have different weights. Instead of three separate kernels, TensorRT fuses them horizontally into a single wider kernel as shown for the 1×1 CBR layer in the right side of Figure 1.

TensorRT can also eliminate the concatenation layers in Figure 1 (“concat”) by preallocating output buffers and writing into them in a strided fashion.
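Horizontal fusion and concat elimination can be illustrated together. For a single pixel, a 1×1 convolution is a matrix-vector product over channels, so branches that share an input can be merged by stacking their filters, and the merged kernel can write straight into a preallocated output instead of feeding a separate concat step. The sketch below assumes this simplified single-pixel view; the function names are hypothetical.

```python
def matvec(weights, x):
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def separate_then_concat(branches, x):
    outputs = [matvec(w, x) for w in branches]  # one kernel per branch
    concat = []
    for out in outputs:                         # explicit concat: an extra copy
        concat.extend(out)
    return concat

def fused_into_buffer(branches, x):
    wide = [row for w in branches for row in w]  # stack filters into one wide layer
    out = [0.0] * len(wide)                      # preallocated concat output
    for i, row in enumerate(wide):               # single kernel writes in place
        out[i] = sum(w * v for w, v in zip(row, x))
    return out

x = [1.0, 2.0]
branches = [
    [[1.0, 0.0], [0.0, 1.0]],  # branch A: 2 output channels
    [[0.5, 0.5]],              # branch B: 1 output channel
    [[2.0, -1.0]],             # branch C: 1 output channel
]
reference = separate_then_concat(branches, x)
fused = fused_into_buffer(branches, x)
```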

Overall the result is a smaller, faster and more efficient graph with fewer layers and kernel launches, therefore reducing inference latency.

Dynamic Tensor Memory

TensorRT also reduces memory footprint and improves memory reuse by designating memory for each tensor only for the duration of its usage, avoiding memory allocation overhead for fast and efficient execution.
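One way to picture this is a liveness-based buffer plan: each tensor needs memory only between the step that produces it and the last step that reads it, so tensors with non-overlapping lifetimes can share a buffer. The greedy first-fit planner below is a simplified sketch under that assumption and is not TensorRT's actual allocator.

```python
def plan_memory(tensors):
    """tensors: list of (name, first_step, last_step, size_bytes).

    Returns (assignment of tensor name -> buffer index, list of buffer sizes).
    """
    buffers = []     # each entry: {"size": bytes, "free_at": last step in use}
    assignment = {}
    for name, first, last, size in sorted(tensors, key=lambda t: t[1]):
        for idx, buf in enumerate(buffers):
            # Reuse a buffer whose previous tenant is dead and which is big enough.
            if buf["free_at"] < first and buf["size"] >= size:
                buf["free_at"] = last
                assignment[name] = idx
                break
        else:
            buffers.append({"size": size, "free_at": last})
            assignment[name] = len(buffers) - 1
    return assignment, [b["size"] for b in buffers]

# A four-layer chain: each tensor is produced at one step and read at the next.
tensors = [
    ("a", 0, 1, 400),
    ("b", 1, 2, 400),
    ("c", 2, 3, 200),
    ("d", 3, 4, 400),
]
assignment, buffer_sizes = plan_memory(tensors)
naive_bytes = sum(size for _, _, _, size in tensors)
planned_bytes = sum(buffer_sizes)
```

For this chain two buffers are enough, so the planned footprint is 800 bytes instead of the 1400 bytes needed when every tensor gets its own allocation.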

Using TensorRT involves two steps:

Build

The build phase needs to be run on the target deployment GPU platform. For example, if your application is going to run on a Jetson TX2, the build needs to be performed on a Jetson TX2, and likewise if your inference services will run in the cloud on AWS P3 instances with Tesla V100 GPUs, then the build phase needs to run on a system with a Tesla V100. This step is only performed once, so typical applications build one or many engines once, and then serialize them for later use.

We use TensorRT to parse a trained model and perform optimizations for specified parameters such as batch size, precision, and workspace memory for the target deployment GPU. The output of this step is an optimized inference execution engine, which we serialize to a file on disk called a plan file.

A plan file includes serialized data that the runtime engine uses to execute the network. It’s called a plan file because it includes not only the weights, but also the schedule for the kernels to execute the network. It also includes information about the network that the application can query in order to determine how to bind input and output buffers.

Deploy

This is the deployment step. We load and deserialize a saved plan file to create a TensorRT engine object, and use it to run inference on new data on the target deployment platform.
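The two phases might look roughly like the following with the TensorRT Python API. This is a sketch under several assumptions that are not in the text above: the trained model has been exported to ONNX, the installed TensorRT is an 8.x release recent enough to provide `set_memory_pool_limit`, and the function names `build_plan` and `load_engine` are hypothetical. The `tensorrt` import is placed inside the functions so the module can be loaded on a machine without a GPU.

```python
def build_plan(onnx_path, plan_path, use_fp16=True, workspace_bytes=1 << 30):
    """Build phase: parse a model, optimize it, and write a plan file."""
    import tensorrt as trt  # requires TensorRT on the target GPU platform

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    # Explicit-batch network definition, as used with the ONNX parser on 8.x.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)
    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parse failed: " + "; ".join(errors))

    config = builder.create_builder_config()
    config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, workspace_bytes)
    if use_fp16:
        config.set_flag(trt.BuilderFlag.FP16)  # allow reduced-precision kernels

    plan = builder.build_serialized_network(network, config)
    if plan is None:
        raise RuntimeError("engine build failed")
    with open(plan_path, "wb") as f:
        f.write(plan)
    return plan_path

def load_engine(plan_path):
    """Deploy phase: deserialize a plan file into an engine and a context."""
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    runtime = trt.Runtime(logger)
    with open(plan_path, "rb") as f:
        engine = runtime.deserialize_cuda_engine(f.read())
    if engine is None:
        raise RuntimeError("could not deserialize plan file")
    return engine, engine.create_execution_context()
```

Running inference additionally requires allocating device buffers and copying data to and from the GPU (for example with a CUDA binding for Python), which is omitted here. Because the plan is tuned for a specific GPU, `build_plan` should be run on the same platform that will later call `load_engine`.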

Perception architecture for industrial applications such as object recognition and autonomous driving:

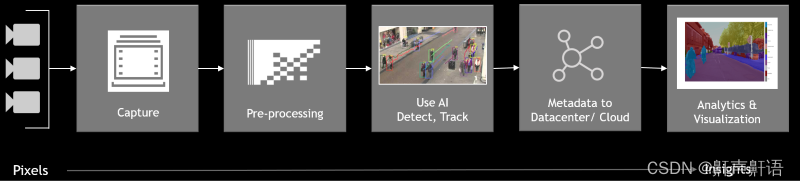

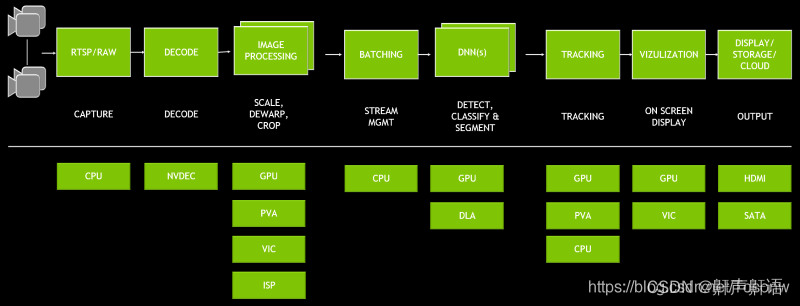

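By bringing deep neural networks and other complex processing tasks into a stream-processing pipeline, video and other sensor data can be analyzed in near real time. Extracting meaningful insights from these sensors creates opportunities to improve operational efficiency and safety. Cameras, for example, are currently the most widely used sensor for object recognition. They are everywhere: in our homes, on streets, in parking lots, large shopping malls, warehouses, and factories. The potential uses of video analytics are vast: autonomous driving, access control, loss prevention, automated checkout, surveillance, security, automated inspection (QA), parcel sorting (smart logistics), traffic control/engineering, industrial automation, and more.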

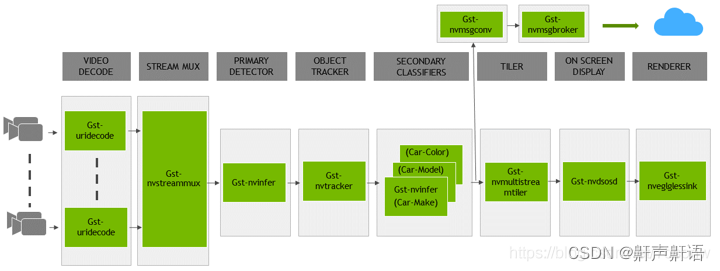

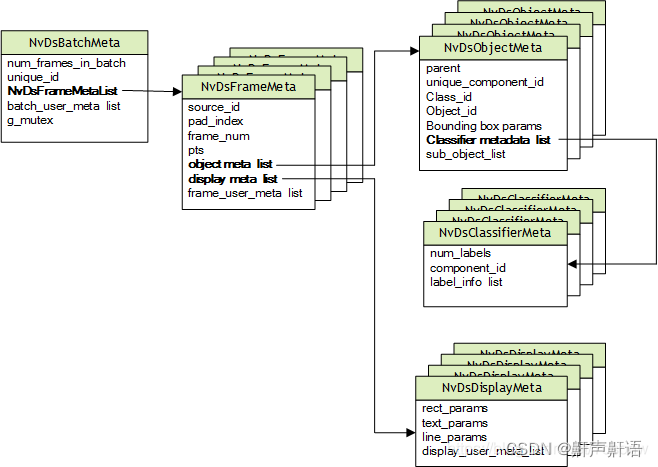

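The general streaming analytics architecture defines a scalable video processing pipeline that can perform inference, object tracking, and reporting, and can even store results in the cloud. As the application analyzes each video frame, plugins extract information and store it as part of a cascaded metadata record, keeping the record associated with its source frame. The complete metadata collection at the end of the pipeline represents the full set of information that the deep learning models and other analytics plugins extracted from the frame. The application can use this information for display, or transmit it externally as part of a message for further analysis or long-term archival.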
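The plugin chain described above can be sketched as a list of functions that each add to a per-frame metadata record. The `detect` and `track` plugins below are hypothetical placeholders that return fixed values; a real pipeline would run a detector network and a tracker at those points.

```python
def detect(frame, meta):
    # Hypothetical detector: pretends every frame contains one car.
    meta["objects"] = [{"class": "car", "bbox": (10, 20, 64, 48)}]

def track(frame, meta):
    # Hypothetical tracker: assigns an ID to every detected object.
    for index, obj in enumerate(meta["objects"]):
        obj["track_id"] = index

def run_pipeline(frames, plugins):
    """Pass each frame through every plugin, accumulating metadata."""
    records = []
    for frame_num, frame in enumerate(frames):
        meta = {"frame_num": frame_num}  # record stays tied to its source frame
        for plugin in plugins:
            plugin(frame, meta)
        records.append(meta)
    return records

frames = ["frame-0-pixels", "frame-1-pixels"]  # placeholders for image data
records = run_pipeline(frames, [detect, track])
```

At the end of the pipeline, `records` holds everything the plugins extracted, ready to be displayed or serialized into a message.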

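Metadata follows an extensible standard structure. The basic metadata structure, NvDsBatchMeta, starts with batch-level metadata created inside the required Gst-nvstreammux plugin. Subsidiary metadata structures hold frame, object, classifier, and label data.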
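The nesting of batch, frame, object, classifier, and label data can be pictured with plain dataclasses. These classes are simplified, hypothetical mirrors written for illustration; they are not the NVIDIA DeepStream bindings, and the real structures carry many more fields.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class LabelInfo:
    label: str
    probability: float

@dataclass
class ClassifierMeta:
    labels: List[LabelInfo] = field(default_factory=list)

@dataclass
class ObjectMeta:
    class_name: str
    bbox: Tuple[int, int, int, int]  # (left, top, width, height) in pixels
    classifiers: List[ClassifierMeta] = field(default_factory=list)

@dataclass
class FrameMeta:
    source_id: int
    frame_num: int
    objects: List[ObjectMeta] = field(default_factory=list)

@dataclass
class BatchMeta:
    frames: List[FrameMeta] = field(default_factory=list)

def summarize(batch):
    """Walk the hierarchy and return one text line per detected object."""
    lines = []
    for frame in batch.frames:
        for obj in frame.objects:
            tags = [info.label for c in obj.classifiers for info in c.labels]
            lines.append(
                f"source {frame.source_id} frame {frame.frame_num}: "
                f"{obj.class_name} {tags}"
            )
    return lines

color = ClassifierMeta(labels=[LabelInfo("red", 0.91)])
car = ObjectMeta("car", (10, 20, 64, 48), classifiers=[color])
batch = BatchMeta(frames=[FrameMeta(source_id=0, frame_num=7, objects=[car])])
summary = summarize(batch)
```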
