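Contents

- 0 Preface

- 1 Background

- 2 Results

- 3 Convolutional neural networks

- 3.1 Convolutional layer

- 3.2 Pooling layer

- 3.3 Activation functions

- 3.4 Fully connected layer

- 3.5 Implementing a CNN with TensorFlow's Keras module

- 4 inception_v3 network

- 5 Final notes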

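0 Preface

Over the past two years, the requirements and difficulty of graduation projects and thesis defenses have kept rising. Traditional project topics lack novelty and highlights and often fall short of what the defense requires, and many juniors have told me that the systems they built did not satisfy their advisors.

To help everyone get through the graduation project smoothly and with as little effort as possible, I share high-quality graduation projects. Today's project is:

**Deep-learning-based animal recognition algorithm**

My overall rating of this topic (each item out of 5):

- Difficulty: 3

- Workload: 3

- Novelty: 3

Topic guidance and project sharing: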

https://gitee.com/dancheng-senior/project-sharing-1/blob/master/%E6%AF%95%E8%AE%BE%E6%8C%87%E5%AF%BC/README.md

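1 Background

Using deep learning to automatically identify and classify wild animals can greatly improve the efficiency of wildlife monitoring and provide reliable data for designing wildlife-conservation strategies. However, automatic wildlife recognition still suffers from low accuracy because monitoring images often have complex backgrounds and poor quality, which has held back the practical adoption of deep learning in wildlife conservation. To achieve high-accuracy automatic wildlife recognition, this project recognizes animals in images with a convolutional neural network.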

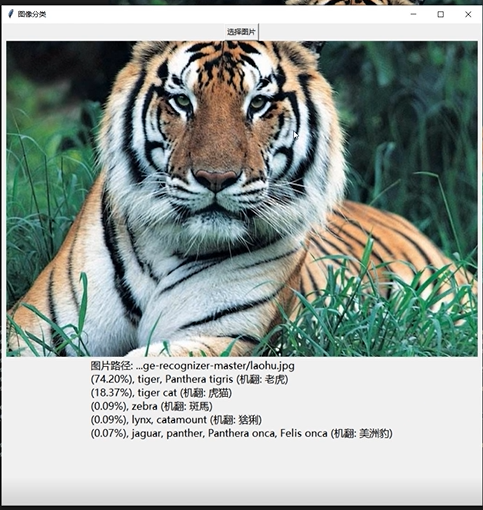

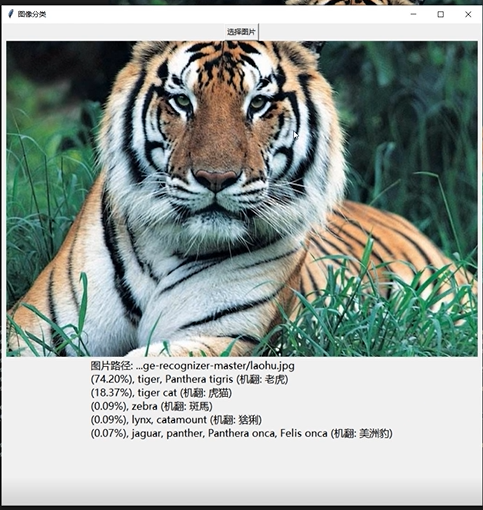

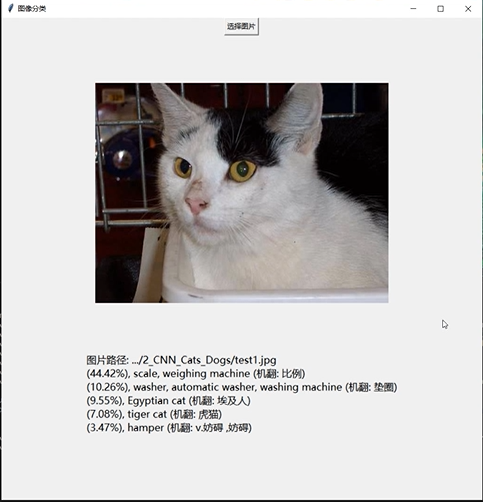

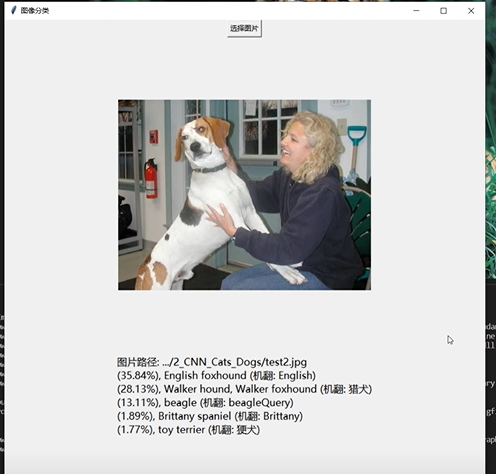

2 Results

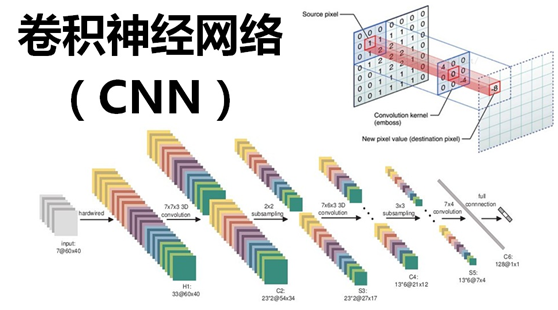

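3 Convolutional neural networks

Inspired by the way neurons in the human brain are interconnected through synapses, neural networks have become an important part of artificial intelligence. They process information in a distributed manner and can solve complex nonlinear problems. Structurally, a network consists of three parts: an input layer, one or more hidden layers, and an output layer. Each node is called a neuron and has its own weight parameters, and some neurons also have a bias. When input data x passes through a neuron, the neuron computes a linear function of the form y = w*x + b, where w is the neuron's weight and b is its bias. The result of each layer is passed through an activation function and fed into the next layer, and the last layer produces the final output.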

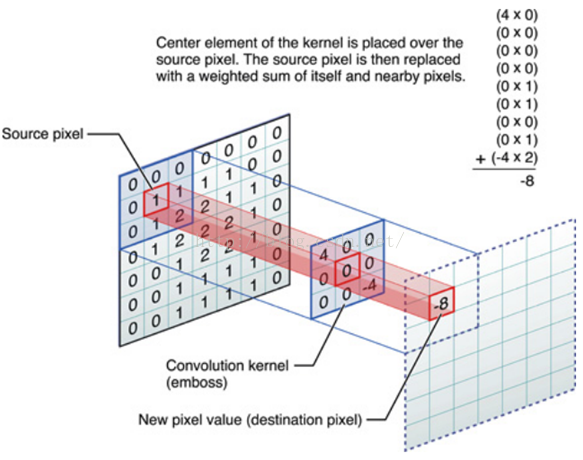
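To make the layer-by-layer computation concrete, here is a minimal NumPy sketch of a forward pass through a toy network. The layer sizes (3 inputs, 4 hidden ReLU neurons, 2 outputs), the random weights, and the helper names `dense` and `relu` are illustrative assumptions, not part of this project.

```python
import numpy as np

def relu(z):
    # Element-wise max(z, 0)
    return np.maximum(z, 0.0)

def dense(x, W, b, activation=None):
    """One fully connected layer: y = W @ x + b, optionally followed by an activation."""
    y = W @ x + b
    return activation(y) if activation is not None else y

rng = np.random.default_rng(0)
x = rng.normal(size=3)                          # input layer: 3 features
W1, b1 = rng.normal(size=(4, 3)), np.zeros(4)   # hidden layer: 4 neurons
W2, b2 = rng.normal(size=(2, 4)), np.zeros(2)   # output layer: 2 neurons

h = dense(x, W1, b1, activation=relu)           # linear step, then nonlinearity
output = dense(h, W2, b2)                       # final layer produces the output
print(h.shape, output.shape)                    # (4,) (2,)
```

Without the activation between layers, the two linear maps would collapse into a single linear map, which is why each hidden layer applies a nonlinearity.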

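3.1 Convolutional layer

A convolution kernel acts like a sliding window. In the figure, a 3x3 kernel slides over successive regions of a 6x6 input; at each position the kernel is multiplied element-wise with the region it covers and the products are summed. Writing each sum into the corresponding position yields the 4x4 feature map on the right (with stride 1 and no padding, the output side length is 6 − 3 + 1 = 4). For example, when the kernel reaches the 3x3 region circled in the top-right corner, the computation is 0×0 + 1×1 + 2×1 + 1×1 + 0×0 + 1×1 + 1×0 + 2×0 + 1×1 = 6, and this value is written into the top-right cell of the feature map.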

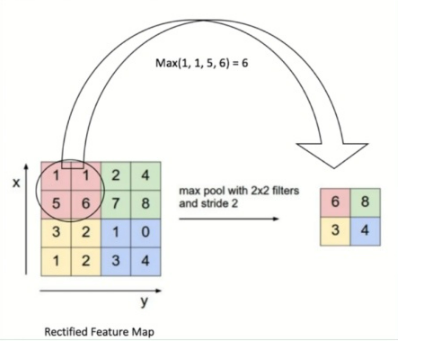
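The sliding-window computation can be sketched in NumPy as follows. This is a minimal loop-based illustration (no padding, kernel not flipped, as in most deep-learning libraries), not the implementation TensorFlow uses internally. The 3x3 `window` and `kernel` values are read off the worked sum above, assuming the first factor of each product is the input value; because the figure's full 6x6 input is not reproduced here, a hypothetical 6x6 array is used only to check the output size.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Slide `kernel` over `image` without padding and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            region = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(region * kernel)
    return out

# 3x3 region and kernel reconstructed from the worked example (assumed pairing).
window = np.array([[0, 1, 2], [1, 0, 1], [1, 2, 1]])
kernel = np.array([[0, 1, 1], [1, 0, 1], [0, 0, 1]])
print(conv2d_valid(window, kernel))          # a single value: 6

# Hypothetical 6x6 input: only the output shape matters here.
image = np.arange(36).reshape(6, 6)
print(conv2d_valid(image, kernel).shape)     # (4, 4)
```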

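3.2 Pooling layer

Pooling, also called downsampling, keeps the most salient features while shrinking the feature maps. This gives the network a degree of spatial invariance and reduces the computation and the number of parameters in the layers that follow, which simplifies the network and helps prevent overfitting. In practice, max pooling and average pooling are the two most common variants, as shown in the figure below. Although pooling effectively reduces the number of parameters, excessive pooling discards image detail, so pooling should be tuned to the task at hand when designing a network.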
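A minimal NumPy sketch of 2x2 max pooling and average pooling with stride 2 on a single-channel feature map. The 4x4 input values and the helper name `pool2d` are made up for illustration. Each output value summarizes one non-overlapping 2x2 window, so the map shrinks from 4x4 to 2x2.

```python
import numpy as np

def pool2d(x, size=2, stride=2, mode="max"):
    """Max or average pooling over size x size windows (no padding)."""
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    reduce = np.max if mode == "max" else np.mean
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = reduce(x[i * stride:i * stride + size, j * stride:j * stride + size])
    return out

x = np.array([[1, 3, 2, 4],
              [5, 6, 1, 2],
              [7, 2, 9, 0],
              [1, 8, 3, 4]], dtype=float)
print(pool2d(x, mode="max"))   # expected: [[6, 4], [8, 9]]
print(pool2d(x, mode="avg"))   # expected: [[3.75, 2.25], [4.5, 4.0]]
```

Pooling has no trainable weights; its parameter savings come from the smaller maps that later layers receive.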

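3.3 Activation functions

Activation functions fall roughly into two categories. Early convolutional neural networks used traditional saturating activation functions, mainly sigmoid and tanh. As neural networks developed, researchers identified the weakness of saturating functions (their gradients approach zero for large-magnitude inputs, which leads to vanishing gradients) and developed non-saturating activation functions to address it, chiefly ReLU and its variants.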
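The saturation problem can be seen by evaluating the gradients numerically. In this NumPy sketch (function names are illustrative), the sigmoid and tanh gradients shrink toward zero as |x| grows, while the ReLU gradient stays at 1 for every positive input. Leaky ReLU is included as one example of a ReLU variant; the slope `alpha=0.01` is a common choice, assumed here.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)           # peaks at 0.25 when x = 0

def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2   # peaks at 1.0 when x = 0

def relu(x):
    return np.maximum(x, 0.0)

def relu_grad(x):
    return (x > 0).astype(float)   # 1 for positive inputs, 0 otherwise

def leaky_relu(x, alpha=0.01):
    # Keeps a small slope for negative inputs instead of a flat zero.
    return np.where(x > 0, x, alpha * x)

xs = np.array([-10.0, 0.0, 10.0])
for name, grad in [("sigmoid", sigmoid_grad), ("tanh", tanh_grad), ("relu", relu_grad)]:
    print(name, grad(xs))
print("leaky_relu", leaky_relu(xs))
```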

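3.4 Fully connected layer

The fully connected layer acts as the "classifier" of the whole network. After the convolutional, pooling, and activation layers, the network has extracted features from the raw input image and mapped them into a hidden feature space. The fully connected layers then map the learned features from this hidden feature space to the sample label space, typically producing the probability of each class and, in some tasks, positional information about the features in the image. Turning the hidden feature representation into concrete predictions is an important step in image processing.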
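A minimal NumPy sketch of what the final fully connected layer does in a classifier: flatten the feature maps, apply a linear map, and turn the scores into class probabilities with softmax. The 7x7x64 feature shape and 10 classes are borrowed from the CNN in section 3.5; the random features and weights are placeholders.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                      # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

rng = np.random.default_rng(0)
features = rng.normal(size=(7, 7, 64))   # placeholder feature maps from the last pooling layer
flat = features.reshape(-1)              # flatten to a 3136-dimensional vector
W = rng.normal(scale=0.01, size=(10, flat.size))
b = np.zeros(10)
probs = softmax(W @ flat + b)            # one probability per class, summing to 1
print(flat.shape, probs.shape, probs.argmax())
```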

3.5 Implementing a CNN with TensorFlow's Keras module

```python
import tensorflow as tf


class CNN(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.conv1 = tf.keras.layers.Conv2D(
            filters=32,             # number of convolution kernels (filters)
            kernel_size=[5, 5],     # receptive field size
            padding='same',         # padding strategy ('valid' or 'same')
            activation=tf.nn.relu   # activation function
        )
        self.pool1 = tf.keras.layers.MaxPool2D(pool_size=[2, 2], strides=2)
        self.conv2 = tf.keras.layers.Conv2D(
            filters=64,
            kernel_size=[5, 5],
            padding='same',
            activation=tf.nn.relu
        )
        self.pool2 = tf.keras.layers.MaxPool2D(pool_size=[2, 2], strides=2)
        self.flatten = tf.keras.layers.Reshape(target_shape=(7 * 7 * 64,))
        self.dense1 = tf.keras.layers.Dense(units=1024, activation=tf.nn.relu)
        self.dense2 = tf.keras.layers.Dense(units=10)

    def call(self, inputs):
        # The shape comments assume 28x28 input images.
        x = self.conv1(inputs)      # [batch_size, 28, 28, 32]
        x = self.pool1(x)           # [batch_size, 14, 14, 32]
        x = self.conv2(x)           # [batch_size, 14, 14, 64]
        x = self.pool2(x)           # [batch_size, 7, 7, 64]
        x = self.flatten(x)         # [batch_size, 7 * 7 * 64]
        x = self.dense1(x)          # [batch_size, 1024]
        x = self.dense2(x)          # [batch_size, 10]
        output = tf.nn.softmax(x)   # class probabilities
        return output
```

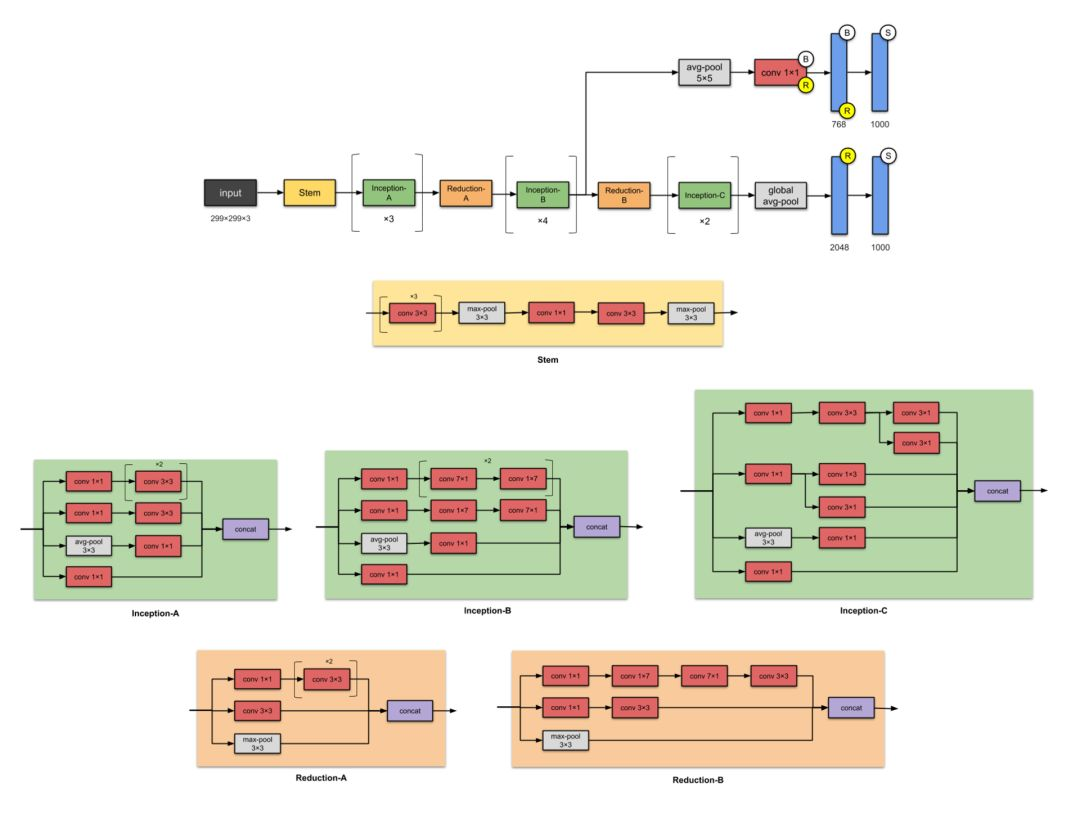

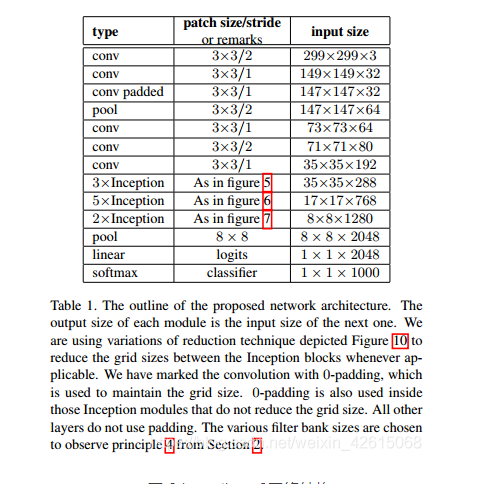
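As a quick sanity check on the Keras model above, the parameters can be counted by hand: a k×k convolution has k·k·c_in·c_out weights plus one bias per filter, and a dense layer has n_in·n_out weights plus n_out biases. This sketch assumes single-channel 28×28 input (e.g. grayscale MNIST), which the shape comments in `call` suggest but the code does not state; pooling and reshape layers have no parameters. The helper names are hypothetical.

```python
def conv_params(k, c_in, c_out):
    return k * k * c_in * c_out + c_out    # weights + one bias per filter

def dense_params(n_in, n_out):
    return n_in * n_out + n_out            # weights + biases

param_counts = {
    "conv1": conv_params(5, 1, 32),        # assumes 1 input channel
    "conv2": conv_params(5, 32, 64),
    "dense1": dense_params(7 * 7 * 64, 1024),
    "dense2": dense_params(1024, 10),
}
total = sum(param_counts.values())
for name, n in param_counts.items():
    print(f"{name:>6}: {n:>9,} ({n / total:.1%})")
print(f" total: {total:,}")

# The same dense1 layer if the two pooling layers were removed (28x28 maps flattened).
no_pool = dense_params(28 * 28 * 64, 1024)
print(f"dense1 without pooling: {no_pool:,}")
```

Under that assumption `dense1` holds about 98% of the roughly 3.27 million parameters, and without the two pooling layers it alone would need over 51 million, which illustrates the parameter savings described in section 3.2.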

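4 inception_v3 network

Overview

If ResNet is about going deeper, the Inception family is about going wider. The Inception authors were particularly interested in the computational efficiency of training larger networks. In other words: how can a neural network be scaled up without increasing its computational cost?

Network architecture diagram

Main changes

- The 7×7 convolution is factorized into three 3×3 convolutions.

- The 35×35 Inception modules use the structure shown in Figure 1, followed by a structure similar to Figure 5 for downsampling.

- The 17×17 Inception modules use the structure shown in Figure 2, also followed by a structure similar to Figure 5 for downsampling.

- The 8×8 Inception modules use the structure shown in Figure 3, which substantially expands the feature dimensionality.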
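The motivation for these factorizations is cost. The following pure-Python sketch compares the number of weights of a large kernel against its factorized replacement; with stride 1 and 'same' padding the multiply-adds scale the same way. For simplicity it assumes every convolution keeps the same channel count C; C = 192 is an arbitrary choice that cancels out in the ratios, and `conv_weights` is a hypothetical helper.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Weights in one kh x kw convolution layer (biases ignored)."""
    return kh * kw * c_in * c_out

C = 192  # hypothetical channel count; it cancels out in the ratios below

comparisons = {
    "7x7 -> three 3x3": (conv_weights(7, 7, C, C), 3 * conv_weights(3, 3, C, C)),
    "5x5 -> two 3x3": (conv_weights(5, 5, C, C), 2 * conv_weights(3, 3, C, C)),
    "7x7 -> 1x7 + 7x1": (conv_weights(7, 7, C, C),
                         conv_weights(1, 7, C, C) + conv_weights(7, 1, C, C)),
}
for name, (original, factorized) in comparisons.items():
    print(f"{name}: {factorized / original:.0%} of the original weights")
```

The ratios are 27/49 (about 55%), 18/25 (72%) and 14/49 (about 29%), while each stack still covers the same receptive field as the kernel it replaces and adds extra nonlinearities between its layers.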

TensorFlow implementation

```python
import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.models import Model

# Let TensorFlow allocate GPU memory on demand. A tf.compat.v1.ConfigProto only
# takes effect when passed to a tf.compat.v1.Session, so it is not used here.
for gpu in tf.config.list_physical_devices('GPU'):
    tf.config.experimental.set_memory_growth(gpu, True)

# 35x35 modules: [1x1 branch, 1x1 before the 5x5, 5x5,
#                 1x1 before the double 3x3 (replacing a 5x5), each 3x3,
#                 1x1 after average pooling]
inceptionV3_One = {'1a': [64, 48, 64, 96, 96, 32],
                   '2a': [64, 48, 64, 96, 96, 64],
                   '3a': [64, 48, 64, 96, 96, 64]}

# 17x17 modules: [1x1 branch, 1x1 before 1x7/7x1, 1x7, 7x1,
#                 1x1 before the stacked 1x7/7x1 pairs, 1x7, 7x1, 1x7, 7x1,
#                 1x1 after average pooling]
inceptionV3_Two = {'1b': [192, 128, 128, 192, 128, 128, 128, 128, 192, 192],
                   '2b': [192, 160, 160, 192, 160, 160, 160, 160, 192, 192],
                   '3b': [192, 160, 160, 192, 160, 160, 160, 160, 192, 192],
                   '4b': [192, 192, 192, 192, 192, 192, 192, 192, 192, 192]}

# 8x8 modules: [1x1 branch, 1x1 before the parallel 1x3/3x1, 1x3, 3x1,
#               1x1 before the 3x3, 3x3, 1x3, 3x1, 1x1 after average pooling]
inceptionV3_Three = {'1c': [320, 384, 384, 384, 448, 384, 384, 384, 192],
                     '2c': [320, 384, 384, 384, 448, 384, 384, 384, 192]}


def conv_bn(x, filters, kernel_size, strides=1, padding='same'):
    """Conv2D -> BatchNormalization -> ReLU, the unit repeated throughout the network."""
    x = layers.Conv2D(filters, kernel_size, strides=strides, padding=padding)(x)
    x = layers.BatchNormalization()(x)
    return layers.Activation('relu')(x)


def InceptionV3(inceptionV3_One, inceptionV3_Two, inceptionV3_Three):
    inputs = layers.Input(shape=(299, 299, 3))

    # Stem: 299x299x3 -> 35x35x192
    x = conv_bn(inputs, 32, (3, 3), strides=2, padding='valid')
    x = conv_bn(x, 32, (3, 3), padding='valid')
    x = conv_bn(x, 64, (3, 3))
    x = layers.MaxPool2D(pool_size=(3, 3), strides=2)(x)
    x = conv_bn(x, 80, (3, 3), padding='valid')
    x = conv_bn(x, 192, (3, 3), strides=2, padding='valid')

    # 35x35 Inception modules
    for f in inceptionV3_One.values():
        b1 = conv_bn(x, f[0], (1, 1))
        b5 = conv_bn(conv_bn(x, f[1], (1, 1)), f[2], (5, 5))
        b3 = conv_bn(x, f[3], (1, 1))
        b3 = conv_bn(conv_bn(b3, f[4], (3, 3)), f[4], (3, 3))
        bp = layers.AveragePooling2D(pool_size=(3, 3), strides=1, padding='same')(x)
        bp = conv_bn(bp, f[5], (1, 1))
        x = layers.Concatenate(axis=3)([b1, b5, b3, bp])

    # Grid reduction: 35x35 -> 17x17
    r1 = conv_bn(x, 384, (3, 3), strides=2, padding='valid')
    r2 = conv_bn(conv_bn(x, 64, (1, 1)), 96, (3, 3))
    r2 = conv_bn(r2, 96, (3, 3), strides=2, padding='valid')
    rp = layers.MaxPool2D(pool_size=(3, 3), strides=2)(x)
    x = layers.Concatenate(axis=3)([r1, r2, rp])

    # 17x17 Inception modules (7x7 factorized into 1x7 and 7x1)
    for f in inceptionV3_Two.values():
        b1 = conv_bn(x, f[0], (1, 1))
        b7 = conv_bn(x, f[1], (1, 1))
        b7 = conv_bn(conv_bn(b7, f[2], (1, 7)), f[3], (7, 1))
        b77 = conv_bn(x, f[4], (1, 1))
        b77 = conv_bn(conv_bn(b77, f[5], (1, 7)), f[6], (7, 1))
        b77 = conv_bn(conv_bn(b77, f[7], (1, 7)), f[8], (7, 1))
        bp = layers.AveragePooling2D(pool_size=(3, 3), strides=1, padding='same')(x)
        bp = conv_bn(bp, f[9], (1, 1))
        x = layers.Concatenate(axis=3)([b1, b7, b77, bp])

    # Grid reduction: 17x17 -> 8x8
    r1 = conv_bn(conv_bn(x, 192, (1, 1)), 320, (3, 3), strides=2, padding='valid')
    r2 = conv_bn(conv_bn(x, 192, (1, 1)), 192, (1, 7))
    r2 = conv_bn(r2, 192, (7, 1))
    r2 = conv_bn(r2, 192, (3, 3), strides=2, padding='valid')
    rp = layers.MaxPool2D(pool_size=(3, 3), strides=2)(x)
    x = layers.Concatenate(axis=3)([r1, r2, rp])

    # 8x8 Inception modules: 1x3 and 3x1 convs run in parallel on the same input
    for f in inceptionV3_Three.values():
        b1 = conv_bn(x, f[0], (1, 1))
        b3 = conv_bn(x, f[1], (1, 1))
        b3 = layers.Concatenate(axis=3)([conv_bn(b3, f[2], (1, 3)),
                                         conv_bn(b3, f[3], (3, 1))])
        bd = conv_bn(conv_bn(x, f[4], (1, 1)), f[5], (3, 3))
        bd = layers.Concatenate(axis=3)([conv_bn(bd, f[6], (1, 3)),
                                         conv_bn(bd, f[7], (3, 1))])
        bp = layers.AveragePooling2D(pool_size=(3, 3), strides=1, padding='same')(x)
        bp = conv_bn(bp, f[8], (1, 1))
        x = layers.Concatenate(axis=3)([b1, b3, bd, bp])

    # Classifier head
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(1000, activation='softmax')(x)
    return Model(inputs=inputs, outputs=outputs, name='InceptionV3')


model_inceptionV3 = InceptionV3(inceptionV3_One, inceptionV3_Two, inceptionV3_Three)
model_inceptionV3.summary()
```
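The grid sizes named in this section (35×35, 17×17, 8×8) follow from the output-size rules Keras applies: `'valid'` gives floor((n − k) / s) + 1 and `'same'` gives ceil(n / s). This pure-Python sketch traces a 299×299 input through the stem and the two grid-reduction blocks of the code above; the Inception modules themselves use stride 1 with `'same'` padding, so they leave the size unchanged. The helper name `out_size` is hypothetical.

```python
import math

def out_size(n, k, s, padding):
    """Output side length of a conv/pool layer with kernel k and stride s."""
    if padding == "valid":
        return (n - k) // s + 1
    return math.ceil(n / s)  # "same"

# (description, kernel, stride, padding) for every size-changing layer, in order.
steps = [
    ("stem conv 3x3/2", 3, 2, "valid"),
    ("stem conv 3x3/1", 3, 1, "valid"),
    ("stem conv 3x3/1", 3, 1, "same"),
    ("stem max pool 3x3/2", 3, 2, "valid"),
    ("stem conv 3x3/1 (80)", 3, 1, "valid"),
    ("stem conv 3x3/2 (192)", 3, 2, "valid"),   # input to the 35x35 modules
    ("reduction 3x3/2", 3, 2, "valid"),         # input to the 17x17 modules
    ("reduction 3x3/2", 3, 2, "valid"),         # input to the 8x8 modules
]

n = 299
sizes = [n]
for name, k, s, padding in steps:
    n = out_size(n, k, s, padding)
    sizes.append(n)
    print(f"{name:<24} -> {n}x{n}")
```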

5 Final notes


The above is the complete article "[Graduation Project] Animal Recognition with Deep Learning + Python + OpenCV - Image Recognition", collected and organized by 开朗板凳.
