A course collection on deploying models across different systems:

https://aistudio.baidu.com/aistudio/education/group/info/19084

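Building the service

Paddle Serving provides two frameworks: a high-performance C++ framework and an easy-to-use Python framework. The C++ framework is built on the high-performance bRPC network framework and targets high-throughput, low-latency inference services, reportedly outperforming competing products. The Python framework is built on the gRPC/gRPC-Gateway network framework and the Python language, and targets ease of use together with high throughput.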

Protocols: HTTP, RPC

gRPC: Python framework

bRPC: C++ framework

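Service requests are compatible with multiple protocols

C++ Serving is built on bRPC and accepts bRPC, gRPC, and RESTful requests. Request data is in protobuf format; see core/general-server/proto/general_model_service.proto for details. This article describes how to build requests and parse results.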
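As a rough illustration of building a RESTful request, the sketch below assembles a JSON body that mirrors the protobuf request and posts it over HTTP. The field names (`tensor`, `float_data`, `elem_type`, `shape`, `name`, `alias_name`, `fetch_var_names`, `log_id`), the meaning of `elem_type` 1, and the `/GeneralModelService/inference` path are assumptions recalled from the Paddle Serving documentation and should be checked against general_model_service.proto for your version. The host, port, feed name `x`, and fetch name `price` are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed endpoint; host and port are placeholders for your own server.
DEFAULT_URL = "http://127.0.0.1:9393/GeneralModelService/inference"


def build_request(name, values, shape, fetch_names, log_id=0):
    """Build a JSON body shaped like the (assumed) Request/Tensor messages."""
    expected = 1
    for dim in shape:
        expected *= dim
    if expected != len(values):
        raise ValueError("shape %r does not match %d values" % (shape, len(values)))
    tensor = {
        "name": name,
        "alias_name": name,
        "elem_type": 1,  # assumed to denote float32
        "shape": list(shape),
        "float_data": [float(v) for v in values],
    }
    return {
        "tensor": [tensor],
        "fetch_var_names": list(fetch_names),
        "log_id": log_id,
    }


def send(body, url=DEFAULT_URL, timeout=10):
    """POST the body as JSON and return the decoded JSON response."""
    data = json.dumps(body).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Hypothetical model with one 1x13 float input "x" and one output "price".
example_body = build_request("x", [0.1] * 13, [1, 13], ["price"])
```

With a server running, `send(example_body)` would return the parsed response. The shape check is there because a mismatch between `shape` and the number of values is an easy mistake to make when flattening input data.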

Docker deployment

Installing Paddle Serving with Docker

https://gitee.com/AI-Mart/Serving/blob/v0.7.0/doc/Install_CN.md

https://github.com/PaddlePaddle/Serving/blob/v0.7.0/doc/Install_CN.md

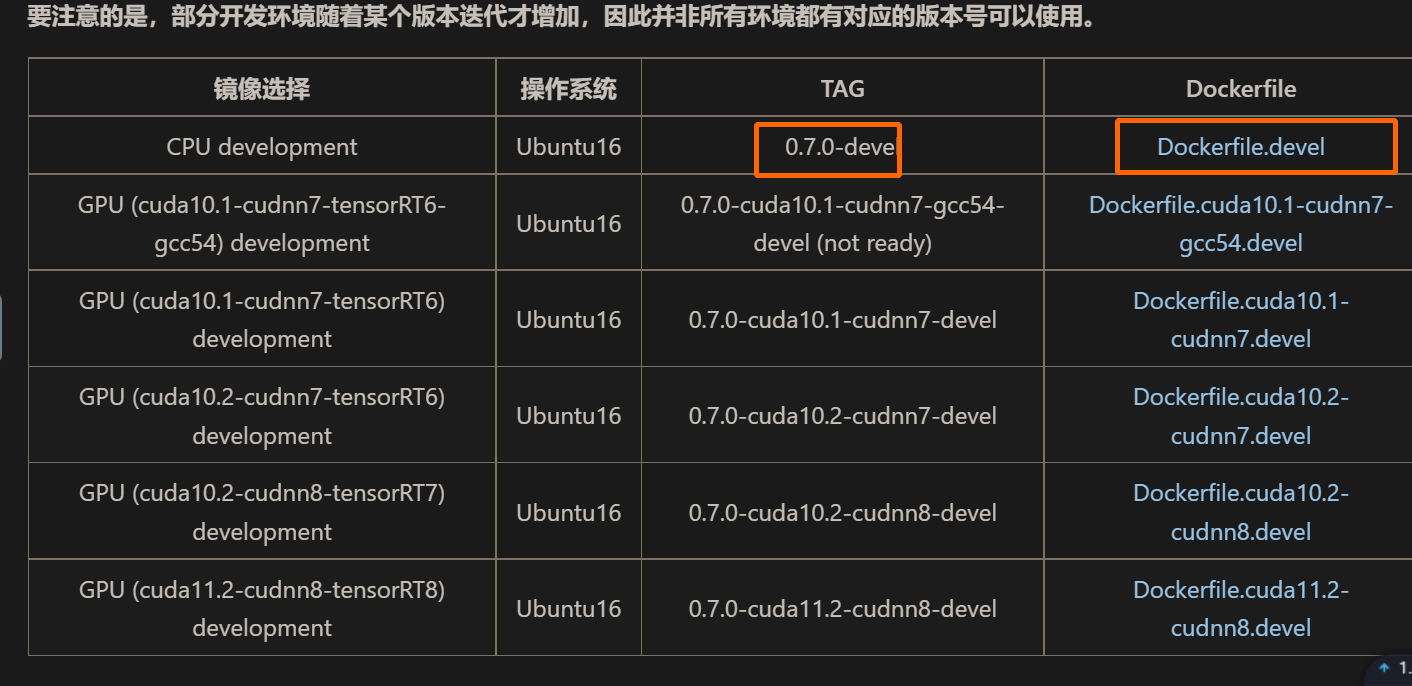

Step 1: Choose a suitable base image

Docker image list

https://gitee.com/AI-Mart/Serving/blob/v0.7.0/doc/Docker_Images_CN.md

https://github.com/PaddlePaddle/Serving/blob/v0.7.0/doc/Docker_Images_CN.md

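The right-hand side of the image list gives guidance on writing a Dockerfile, which you can adapt for your own Dockerfile.

Downloading the installation packages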

wget https://www.sqlite.org/2018/sqlite-autoconf-3250300.tar.gz

wget https://www.python.org/ftp/python/3.7.4/Python-3.7.4.tgz

wget https://paddle-ci.gz.bcebos.com/TRT/TensorRT6-cuda10.1-cudnn7.tar.gz

wget https://paddle-inference-lib.bj.bcebos.com/2.2.2/python/Linux/GPU/x86-64_gcc8.2_avx_mkl_cuda10.1_cudnn7.6.5_trt6.0.1.5/paddlepaddle_gpu-2.2.2.post101-cp37-cp37m-linux_x86_64.whl
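If you prefer to script the downloads, here is a minimal sketch that fetches only the packages not already present in the build directory. The URLs are the four listed above; the helper names (`local_name`, `missing_packages`, `download_missing`) are made up for illustration.

```python
import os
import urllib.request
from urllib.parse import urlparse

# The four package URLs from the wget commands above.
PACKAGE_URLS = [
    "https://www.sqlite.org/2018/sqlite-autoconf-3250300.tar.gz",
    "https://www.python.org/ftp/python/3.7.4/Python-3.7.4.tgz",
    "https://paddle-ci.gz.bcebos.com/TRT/TensorRT6-cuda10.1-cudnn7.tar.gz",
    "https://paddle-inference-lib.bj.bcebos.com/2.2.2/python/Linux/GPU/"
    "x86-64_gcc8.2_avx_mkl_cuda10.1_cudnn7.6.5_trt6.0.1.5/"
    "paddlepaddle_gpu-2.2.2.post101-cp37-cp37m-linux_x86_64.whl",
]


def local_name(url):
    """Return the file name wget would use for this URL."""
    return os.path.basename(urlparse(url).path)


def missing_packages(directory="."):
    """List the URLs whose files are not yet in the directory."""
    return [
        url
        for url in PACKAGE_URLS
        if not os.path.isfile(os.path.join(directory, local_name(url)))
    ]


def download_missing(directory="."):
    """Download every package that is not already present."""
    for url in missing_packages(directory):
        target = os.path.join(directory, local_name(url))
        print("downloading", url)
        urllib.request.urlretrieve(url, target)
```

Calling `download_missing()` from the directory that holds the Dockerfile puts the archives where `COPY . /deploy` will pick them up.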

Dockerfile

FROM registry.baidubce.com/paddlepaddle/serving:0.7.0-cuda10.1-cudnn7-devel

COPY . /deploy
WORKDIR /deploy

# Install SQLite 3.25.3 (built before Python)
RUN mkdir -p /root/python_build/ && \
    tar -zxf sqlite-autoconf-3250300.tar.gz && cd sqlite-autoconf-3250300 && \
    ./configure --prefix=/usr/local && make -j8 && make install && cd ../ && rm sqlite-autoconf-3250300.tar.gz

# Install Python 3.7
RUN tar -xzf Python-3.7.4.tgz && cd Python-3.7.4 && \
    CFLAGS="-Wformat" ./configure --prefix=/usr/local/ --enable-shared > /dev/null && \
    make -j8 > /dev/null && make altinstall > /dev/null && ldconfig && cd .. && rm -rf Python-3.7.4*

ENV LD_LIBRARY_PATH=/usr/local/lib:${LD_LIBRARY_PATH}

# Point python3 and pip3 at the new Python 3.7 build
RUN rm -rf /usr/local/bin/python3 && rm -rf /usr/bin/python3
RUN ln -sf /usr/local/bin/python3.7 /usr/local/bin/python3 && ln -sf /usr/local/bin/python3.7 /usr/bin/python3 && ln -sf /usr/local/bin/pip3.7 /usr/local/bin/pip3 && ln -sf /usr/local/bin/pip3.7 /usr/bin/pip3
RUN rm -r /root/python_build

# Install TensorRT 6
RUN tar -zxf TensorRT6-cuda10.1-cudnn7.tar.gz -C /usr/local \
    && cp -rf /usr/local/TensorRT6-cuda10.1-cudnn7/include/* /usr/include/ && cp -rf /usr/local/TensorRT6-cuda10.1-cudnn7/lib/* /usr/lib/ \
    && echo "cuda10.1 trt install ==============>>>>>>>>>>>>" \
    && pip3 install /usr/local/TensorRT6-cuda10.1-cudnn7/python/tensorrt-6.0.1.5-cp37-none-linux_x86_64.whl \
    && pip3 install /usr/local/TensorRT6-cuda10.1-cudnn7/graphsurgeon/graphsurgeon-0.4.1-py2.py3-none-any.whl \
    && rm TensorRT6-cuda10.1-cudnn7.tar.gz

# Install requirements
RUN pip config set global.index-url https://mirror.baidu.com/pypi/simple \
    && python3.7 -m pip install --upgrade setuptools \
    && python3.7 -m pip install --upgrade pip \
    && pip3 install -r requirements.txt \
    && pip3 install paddlepaddle_gpu-2.2.2.post101-cp37-cp37m-linux_x86_64.whl \
    && rm paddlepaddle_gpu-2.2.2.post101-cp37-cp37m-linux_x86_64.whl \
    && python3 paddle_model.py

ENTRYPOINT python3 web_service.py
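Because the Dockerfile copies the whole build context into /deploy and then refers to several files by name, a build fails partway through if any of them is missing. The sketch below is one way to check the context before running `docker build`. The file list is read off the Dockerfile above; the function names are hypothetical.

```python
import os

# Files the Dockerfile above expects to find in the build context.
REQUIRED_FILES = [
    "sqlite-autoconf-3250300.tar.gz",
    "Python-3.7.4.tgz",
    "TensorRT6-cuda10.1-cudnn7.tar.gz",
    "paddlepaddle_gpu-2.2.2.post101-cp37-cp37m-linux_x86_64.whl",
    "requirements.txt",
    "paddle_model.py",
    "web_service.py",
]


def missing_from_context(context_dir="."):
    """Return the required files that are absent from the build context."""
    return [
        name
        for name in REQUIRED_FILES
        if not os.path.isfile(os.path.join(context_dir, name))
    ]


def check_context(context_dir="."):
    """Print each missing file and report whether the context is complete."""
    missing = missing_from_context(context_dir)
    for name in missing:
        print("missing from build context:", name)
    return not missing
```

Running `check_context()` in the directory that contains the Dockerfile returns `True` only when all seven files are in place.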
