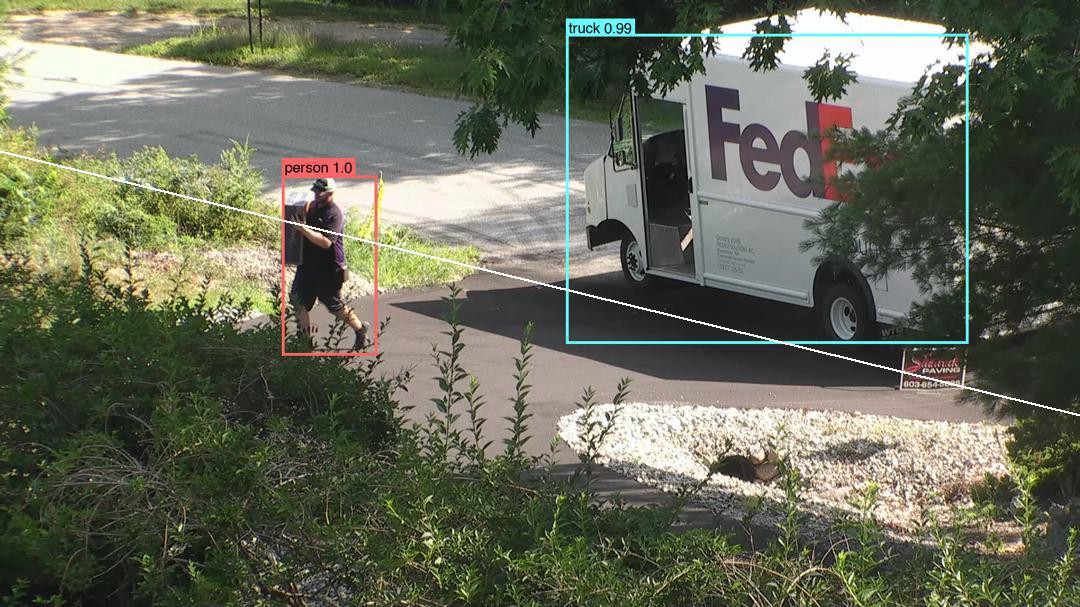

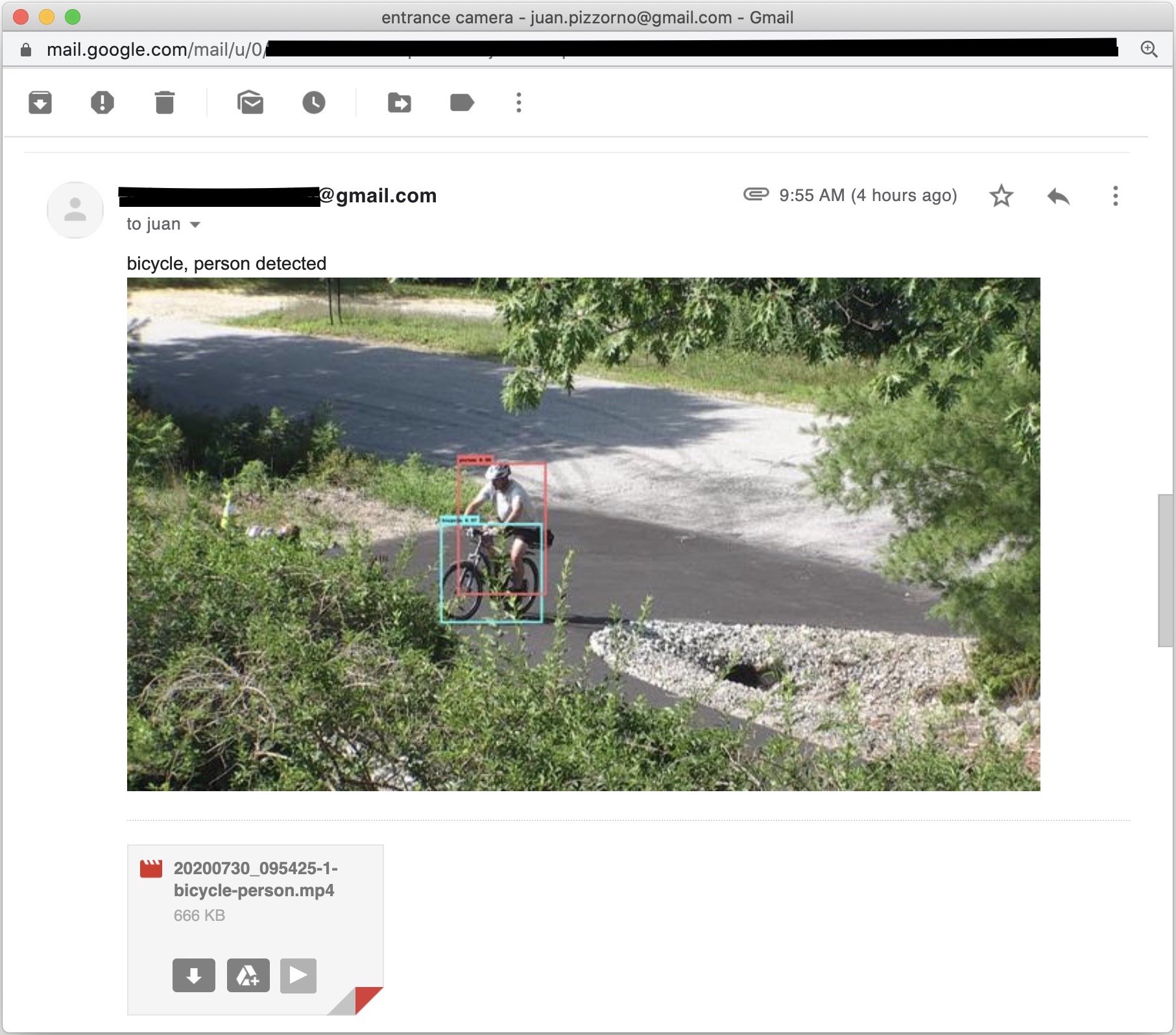

Living in the boonies, I’m fortunate that for many food items I can just walk to the local farm. For most other things, though, my family shops online. This year our fairly long driveway really needed to be re-paved, which meant that for two months, no delivery or other heavy trucks could drive on it. We put a box for deliveries at the end of the driveway, but we can’t see it from our house, so both for convenience and safety we really wanted a camera on it.

Setup

I figured a good place to mount a camera was an existing utility pole by the driveway (inside the property), which is a bit less than 100m (328 ft) from the house and some 50m (164 ft) from the box; the question then was how best to bring power and connectivity to it. There are lots of ways one could do this, from batteries, solar cells and cell networks to radio links and cables. Wanting to keep costs down, I thought of the 10-or-so-year-old “airport class” camera I had unused in my basement.

The pole was within PoE range, so to run it I just needed some direct burial cable. I could solve the delivery box notification problem with minimal cost, put the camera to use AND use some deep learning to get a modern detector… that was too good a project to pass up!

After most of a sweaty day climbing the pole, burying, routing, securing and crimping cables, I had the camera up and running.

Recording and Processing

Following a path of least resistance, but also to see what I would learn, I decided to have the camera continuously record over the network onto a Linux system and to process the recordings there using a YOLO v3/v4 object detector I wrote some time ago. I would have loved to run the inference right on the camera, but its CPU and storage are tiny and there’s no USB port for an accelerator.

While quite feature-rich, the camera is old enough to make some things hard to use. It has a motion detection feature, for example, but configuring it easily requires Java applet support in the browser… yeah, right. And it can record to network attached storage, but only using the SMBv1 protocol. Installing samba on my system was a matter of `sudo apt install samba`, but it took some more serious research to find the “incantation” needed in `/etc/samba/smb.conf` so it’d speak SMBv1:

    min protocol = NT1
    ntlm auth = ntlmv1-permitted

By recording to the Linux system, I got easy access to the data (no need to research the camera’s API for processing on the camera itself), the ability to re-process data at will as I tweaked my code and neural net, and longer term archival. I used OpenCV to read the files and to create small clips that I include in the notifications.

Running the detection off the files did introduce a bit of a delay, though: the recording is done in one-minute segments, and a segment isn’t processed until it is finished; add to that a few seconds for the script to notice that there is a new file, a few more for running inference on it, and a few more to send out any notifications. All in all, it could take about a minute and a half between the detected event and its notification. I thought that was good enough, but another option would have been to process the video using an RTSP stream, which both the camera and OpenCV support.

Irrelevant Detections

The camera’s view of the driveway entrance also includes some of the road, and you wouldn’t believe just how much goes by on a quiet country road… until you get notified about each one. Not just cars and trucks, but also neighbors walking their dog at 5 am, a turkey family or a (non-skid-steer) bobcat going by.

In my case, the border between “in” and “out” is a straight line, which makes the road simple to ignore.

I also filter out all but a few detection classes: I used the original YOLOv3 weights trained using the COCO dataset, which includes classes such as “toothbrush”, “zebra” and “fire hydrant”. Realistically any such detections would be false positives.

Since the camera is PTZ, at the start I ambitiously thought I’d train a neural network to recognize where it was pointed and adjust the relevant detection area. I might still try that, but for now it was simpler to just not change the orientation.

Notifications

For the notifications, given my previous work, it seemed easiest to email myself, with the credentials coming from a JSON file so that they’re not in the code.

For each detection sequence, I include a small MP4 clip and a corresponding “representative” image in higher resolution. Usually the image is all I need, but the clip, which also plays on my phone, can be instrumental in understanding what is going on, such as when someone decides to turn around at the entrance. I use the frame with the largest area in bounding boxes for the “representative” image.

Performance

The camera is set to record 1080p video at 15 FPS. On my Linux system, using a `tf.data` pipeline and only using the CPU, I can process that at about 4 FPS, so I needed it to be faster. Significantly faster, in fact, so that it could catch up if interrupted. Some options were:

- use a smaller/faster network. There are so many options here… I tried `yolov3-tiny` but didn’t love the loss in accuracy. I tried OpenCV’s `readNetFromDarknet`, but it was actually a bit slower than my implementation. I have yet to try MobileNet SSD or something else from the TensorFlow object detection API;
- push the data into the cloud and process it there, but I was trying to keep costs down, and didn’t like the loss in bandwidth. No, this was an edge ML project.

All in all, on my 4.5 GHz Intel i9-10900X, 32GB RAM Linux system I can process a one-minute segment in just below 10s, or less if I let it use the GPU.

I didn’t really evaluate this accuracy-wise, but did run into some false positives… If I decide to run this system longer term, I will likely fine-tune it to avoid these.

Future Work

The driveway is drivable again and the delivery box is gone… having said that, most of those things I mentioned not having done or completed could become projects. I might try running something on the camera itself or on a Raspberry Pi or Arduino. It would also be cool to use the camera’s PTZ to track detections.

The Code

You can check it out on GitHub. Use at your own risk! I normally develop code test-driven, but I was experimenting a lot here and didn’t treat this project to TDD.

Enjoy!

Translated from: https://towardsdatascience.com/teaching-new-tricks-to-an-old-camera-5ab37f4a4406
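To make the filtering from the Irrelevant Detections section concrete, here is a minimal sketch of the two checks it mentions: keeping only a few COCO classes and ignoring anything on the road side of a straight border. This is not the author’s code. The `Detection` type, the class list in `KEEP_CLASSES`, the border endpoints `LINE_A` and `LINE_B`, and the choice of the box’s bottom-centre as the test point are all assumptions made for illustration.

```python
from typing import NamedTuple, Tuple

Point = Tuple[float, float]


class Detection(NamedTuple):
    """One detected object: class label plus box corners in pixels."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float


# Hypothetical values for illustration; they are not taken from the article.
KEEP_CLASSES = frozenset({"person", "bicycle", "car", "bus", "truck"})
LINE_A: Point = (0.0, 400.0)     # left end of the border, image coordinates
LINE_B: Point = (1920.0, 700.0)  # right end of the border


def side_of_line(p: Point, a: Point, b: Point) -> float:
    """2D cross product of (b - a) and (p - a).

    With image coordinates (y grows downward) and a left-to-right line,
    the result is positive when p lies below the line in the image.
    """
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def is_relevant(det: Detection, a: Point = LINE_A, b: Point = LINE_B) -> bool:
    """Keep a detection only if its class is wanted and it is on the 'in' side."""
    if det.label not in KEEP_CLASSES:
        return False
    # Bottom-centre of the box: roughly where the object meets the ground.
    foot = ((det.x1 + det.x2) / 2.0, det.y2)
    return side_of_line(foot, a, b) > 0
```

A single cross product is enough here because the border is one straight line; a curved or multi-segment border would need a polygon test instead.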

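The “representative” image rule from the Notifications section (the frame with the largest area in bounding boxes) can be sketched as below. This is one reading of that sentence, not the author’s implementation: it sums the box areas per frame, so overlapping boxes count twice, and the `(x1, y1, x2, y2)` box layout and function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_area(box: Box) -> float:
    """Area of one box; degenerate or inverted boxes count as zero."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def representative_frame(frames: Sequence[List[Box]]) -> int:
    """Return the index of the frame whose boxes cover the most total area.

    `frames` holds one list of boxes per video frame in the sequence.
    Ties go to the earliest frame, because max() keeps the first maximum.
    """
    if not frames:
        raise ValueError("need at least one frame")
    return max(range(len(frames)),
               key=lambda i: sum(box_area(b) for b in frames[i]))
```

For example, `representative_frame([[(0, 0, 10, 10)], [(0, 0, 20, 20), (5, 5, 10, 10)], []])` picks the second frame, index 1.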
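As an illustration of the notification approach (emailing yourself, with credentials read from a JSON file so they stay out of the code), here is a minimal sketch using only the Python standard library. The JSON keys (`host`, `port`, `user`, `password`, `to`), the function names and the use of SMTP over SSL are assumptions for the example; the article does not say how the author’s mail is actually sent.

```python
import json
import smtplib
from email.message import EmailMessage
from pathlib import Path
from typing import Optional


def load_credentials(path: str) -> dict:
    """Read mail settings from a JSON file kept outside the source tree."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def build_message(creds: dict, subject: str, body: str,
                  image_path: Optional[str] = None,
                  clip_path: Optional[str] = None) -> EmailMessage:
    """Build the notification, attaching the image and clip when given."""
    msg = EmailMessage()
    msg["From"] = creds["user"]
    msg["To"] = creds["to"]
    msg["Subject"] = subject
    msg.set_content(body)
    attachments = ((image_path, "image", "jpeg"), (clip_path, "video", "mp4"))
    for path, maintype, subtype in attachments:
        if path is not None:
            p = Path(path)
            msg.add_attachment(p.read_bytes(), maintype=maintype,
                               subtype=subtype, filename=p.name)
    return msg


def send(creds: dict, msg: EmailMessage) -> None:
    """Send over SMTP with SSL; host and port are assumed settings."""
    with smtplib.SMTP_SSL(creds["host"], creds.get("port", 465)) as smtp:
        smtp.login(creds["user"], creds["password"])
        smtp.send_message(msg)
```

Keeping `build_message` separate from `send` means the message can be inspected or tested without touching a mail server.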