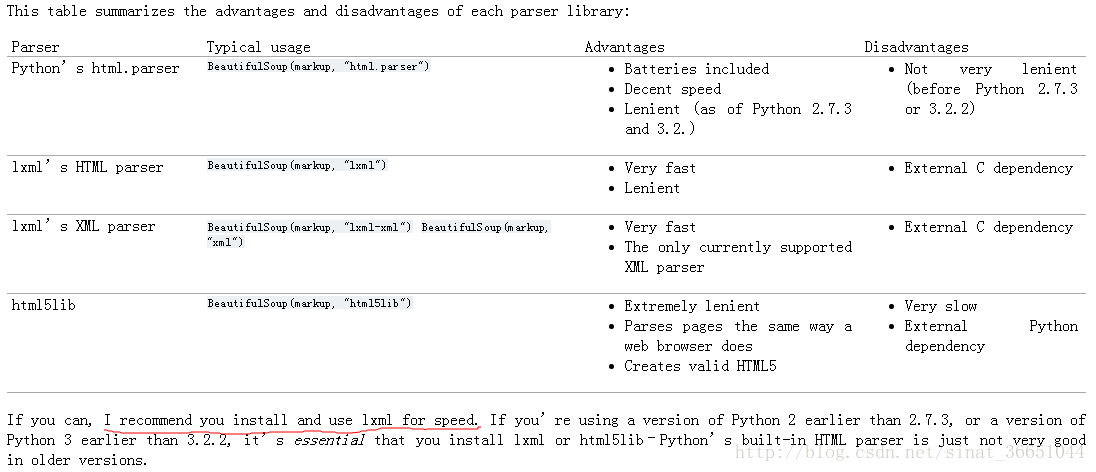

1 Parsers

Right at the start of the bs4 documentation you run into code like this:

>>> from bs4 import BeautifulSoup

>>> soup = BeautifulSoup(html_doc,"html.parser")

>>> soup.a

<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

Here html.parser is the HTML parser from Python's standard library. BeautifulSoup also supports third-party parsers such as lxml and html5lib.

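In general, lxml is the fastest of these parsers, and it is the one the Beautiful Soup documentation recommends when speed matters.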
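To see how the choice of parser changes the result, a small sketch like the following can help. The helper name `parse_with_each` and the sample markup `<a></p>` are illustrative choices, not part of the original notes. lxml and html5lib are optional third-party packages, so the sketch skips any parser that is not installed.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def parse_with_each(markup, parsers=("html.parser", "lxml", "html5lib")):
    """Return {parser name: serialized document}, or None if a parser is missing."""
    results = {}
    for name in parsers:
        try:
            results[name] = str(BeautifulSoup(markup, name))
        except FeatureNotFound:
            # lxml and html5lib are third-party; skip them if not installed.
            results[name] = None
    return results


# Deliberately invalid markup, so that the parsers have to repair it.
results = parse_with_each("<a></p>")
for name, output in results.items():
    print(name, "->", output if output is not None else "(not installed)")
```

Each parser repairs the broken markup in its own way, which is why it is worth naming the parser explicitly instead of relying on whichever one happens to be installed.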

Screenshot from https://www.crummy.com/software/BeautifulSoup/bs4/doc/#installing-a-parser

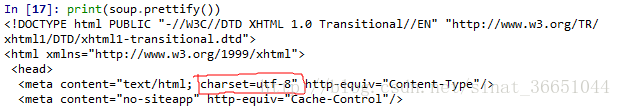

2 Encodings

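Every web page uses a particular character encoding; the two most common on Chinese sites are utf-8 and gb2312.

Once a document has been parsed by BeautifulSoup, it is converted to Unicode.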
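A minimal sketch of that conversion, assuming a short sample string of my own: the same text is encoded as utf-8 and as gb2312, and both byte strings come back as the same Python str. The `from_encoding` argument is used here only to make the example deterministic; it tells Beautiful Soup which codec to use instead of guessing.

```python
from bs4 import BeautifulSoup

text = "<p>编码测试</p>"  # hypothetical sample markup

decoded = {}
for encoding in ("utf-8", "gb2312"):
    raw = text.encode(encoding)  # bytes, as they would arrive from the network
    # from_encoding names the codec explicitly instead of auto-detecting it.
    soup = BeautifulSoup(raw, "html.parser", from_encoding=encoding)
    decoded[encoding] = str(soup.p.string)

# Both inputs end up as the same Unicode string.
print(decoded["utf-8"] == decoded["gb2312"])
```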

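2.1 The first few lines of a page's source usually declare its encoding:

<meta charset="utf-8">

<meta http-equiv="Content-Type" content="text/html; charset=gb2312" />

2.2 The original_encoding attribute records the encoding that was auto-detected

In [7]: soup.original_encoding

Out[7]: 'gb2312'

2.3 When a document is written out through Beautiful Soup, the output is UTF-8 by default, no matter what encoding the input used.

If you do not want UTF-8 output, you can pass the encoding you want to prettify() or encode().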
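The output behaviour can be sketched as follows. The page content is a made-up example, not taken from the original notes; it declares gb2312 in a `<meta>` tag so that Beautiful Soup can pick the encoding up from the document itself.

```python
from bs4 import BeautifulSoup

# Hypothetical GB2312 page; the <meta> tag declares the encoding.
page = '<html><head><meta charset="gb2312"></head><body><p>你好</p></body></html>'
raw = page.encode("gb2312")

soup = BeautifulSoup(raw, "html.parser")
print(soup.original_encoding)

utf8_bytes = soup.encode()           # encode() defaults to UTF-8
gb_bytes = soup.encode("gb2312")     # ask for GB2312 output instead
pretty_gb = soup.prettify("gb2312")  # prettify() also accepts an encoding

print("你好".encode("utf-8") in utf8_bytes)
print("你好".encode("gb2312") in gb_bytes)
```

Calling `prettify()` without an argument returns a str; passing an encoding returns bytes in that encoding.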

2.4 UnicodeDammit

A class for detecting the encoding of a *ML document and converting it to a Unicode string. If the source encoding is windows-1252, can replace MS smart quotes with their HTML or XML equivalents.

>>> from bs4 import UnicodeDammit

>>> dammit = UnicodeDammit(b"Sacr\xc3\xa9 bleu!")

>>> print(dammit.unicode_markup)

Sacré bleu!

>>> dammit.original_encoding

'utf-8'

>>> snowmen = (u"\N{SNOWMAN}" * 3)

>>> quote = (u"\N{LEFT DOUBLE QUOTATION MARK}I like snowmen!\N{RIGHT DOUBLE QUOTATION MARK}")

>>> doc = snowmen.encode("utf8") + quote.encode("windows_1252")

>>> print(doc)

b'\xe2\x98\x83\xe2\x98\x83\xe2\x98\x83\x93I like snowmen!\x94'

>>> print(doc.decode("windows_1252"))

â˜ƒâ˜ƒâ˜ƒ“I like snowmen!”

>>> new_doc = UnicodeDammit.detwingle(doc)

>>> print(new_doc.decode('utf-8'))

☃☃☃“I like snowmen!”

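UnicodeDammit.detwingle() can convert Windows-1252 content embedded in a UTF-8 document, which covers the most common case of mixed encodings.

If a document may mix the two encodings, call UnicodeDammit.detwingle() on it before creating a BeautifulSoup or UnicodeDammit object, so that the document has a single, consistent encoding. If you try to parse a UTF-8 document that contains Windows-1252 bytes as is, you are likely to get mojibake such as: â˜ƒâ˜ƒâ˜ƒ“I like snowmen!”.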
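One way to follow this advice is to wrap the two steps in a small helper. The function name `soup_from_mixed_bytes` is hypothetical, and the sketch assumes the document is mostly UTF-8 with stray Windows-1252 bytes, which is the only case detwingle() supports.

```python
from bs4 import BeautifulSoup, UnicodeDammit


def soup_from_mixed_bytes(raw, parser="html.parser"):
    """Parse bytes that are mostly UTF-8 but may contain Windows-1252 bytes."""
    # detwingle() needs bytes; it rewrites the Windows-1252 bytes as UTF-8.
    cleaned = UnicodeDammit.detwingle(raw)
    # After detwingling the whole document is UTF-8, so say so explicitly.
    return BeautifulSoup(cleaned, parser, from_encoding="utf-8")


snowmen = "\N{SNOWMAN}" * 3
quote = "\N{LEFT DOUBLE QUOTATION MARK}I like snowmen!\N{RIGHT DOUBLE QUOTATION MARK}"
raw = b"<p>" + snowmen.encode("utf8") + quote.encode("windows_1252") + b"</p>"

soup = soup_from_mixed_bytes(raw)
print(soup.p.string)
```

The helper must receive bytes: once the document has been decoded to str, the information detwingle() relies on is already lost.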

>>> help(UnicodeDammit)

Help on class UnicodeDammit in module bs4.dammit:

class UnicodeDammit(builtins.object)

| A class for detecting the encoding of a *ML document and

| converting it to a Unicode string. If the source encoding is

| windows-1252, can replace MS smart quotes with their HTML or XML

| equivalents.

|

| Methods defined here:

|

| __init__(self, markup, override_encodings=[], smart_quotes_to=None, is_html=False, exclude_encodings=[])

| Initialize self. See help(type(self)) for accurate signature.

|

| find_codec(self, charset)

|

| ----------------------------------------------------------------------

| Class methods defined here:

|

| detwingle(in_bytes, main_encoding='utf8', embedded_encoding='windows-1252') from builtins.type

| Fix characters from one encoding embedded in some other encoding.

|

| Currently the only situation supported is Windows-1252 (or its

| subset ISO-8859-1), embedded in UTF-8.

|

| The input must be a bytestring. If you've already converted

| the document to Unicode, you're too late.

|

| The output is a bytestring in which `embedded_encoding`

| characters have been converted to their `main_encoding`

| equivalents.

......

Finally, if chardet or cchardet is installed in your Python environment, encoding detection becomes much more accurate.
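If you already have a good idea of what the encoding might be, the Beautiful Soup documentation describes a complementary option: pass a list of candidate encodings as the second argument to UnicodeDammit. The sketch below uses a Latin-1 sample in the style of that documentation, not data from these notes.

```python
from bs4 import UnicodeDammit

raw = b"Sacr\xe9 bleu!"  # "Sacré bleu!" encoded as Latin-1

# The second argument lists encodings to try first, in order.
dammit = UnicodeDammit(raw, ["latin-1", "iso-8859-1"])
print(dammit.unicode_markup)
print(dammit.original_encoding)
```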

3 diagnose()

When you call diagnose(), BeautifulSoup prints a report showing how each available parser handles the document, and indicates which parser the current setup would use:

In [19]: test_doc = "\xc3\xc3\xd7\xd3\xcd\xbc - \xc7\xe5\xb4\xbf\xc3\xc0\xc5\xae,\xbf\xc9\xb0\xae\xc3\xc0\xc5\xae,\xc3\xc0\xc5\xae\xcd\xbc\xc6\xac"

In [20]: from bs4.diagnose import diagnose

In [21]: diagnose(test_doc)

Diagnostic running on Beautiful Soup 4.6.0

Python version 3.6.1 |Anaconda 4.4.0 (32-bit)| (default, May 11 2017, 14:16:49) [MSC v.1900 32 bit (Intel)]

Found lxml version 3.7.3.0

Found html5lib version 0.999

Trying to parse your markup with html.parser

Here's what html.parser did with the markup:

ÃÃ×Óͼ - Çå´¿ÃÀÅ®,¿É°®ÃÀÅ®,ÃÀŮͼƬ

--------------------------------------------------------------------------------

Trying to parse your markup with html5lib

Here's what html5lib did with the markup:

<html>

<head>

</head>

<body>

ÃÃ×Óͼ - Çå´¿ÃÀÅ®,¿É°®ÃÀÅ®,ÃÀŮͼƬ

</body>

</html>

--------------------------------------------------------------------------------

Trying to parse your markup with lxml

Here's what lxml did with the markup:

<html>

<body>

<p>

ÃÃ×Óͼ - Çå´¿ÃÀÅ®,¿É°®ÃÀÅ®,ÃÀŮͼƬ

</p>

</body>

</html>

--------------------------------------------------------------------------------

Trying to parse your markup with ['lxml', 'xml']

Here's what ['lxml', 'xml'] did with the markup:

<?xml version="1.0" encoding="utf-8"?>

--------------------------------------------------------------------------------

C:\ProgramData\Anaconda3\lib\site-packages\bs4\__init__.py:181: UserWarning: No parser was explicitly specified, so I'm using the best available XML parser for this system ("lxml-xml"). This usually isn't a problem, but if you run this code on another system, or in a different virtual environment, it may use a different parser and behave differently.

The code that caused this warning is on line 231 of the file C:\ProgramData\Anaconda3\lib\site-packages\spyder\utils\ipython\start_kernel.py. To get rid of this warning, change code that looks like this:

BeautifulSoup(YOUR_MARKUP})

to this:

BeautifulSoup(YOUR_MARKUP, "lxml-xml")

markup_type=markup_type))
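Every parser in the report returns the same unreadable text. A likely reason, and this is my reading and not something the report states, is that test_doc holds GB2312 byte values written as a str literal, so each byte is treated as a separate Latin-1 character. The sketch below passes the same values as bytes and names the encoding explicitly; the choice of gb2312 is an assumption based on the original_encoding output shown earlier.

```python
from bs4 import BeautifulSoup

# The same byte values as test_doc, written as bytes instead of str.
raw = (b"\xc3\xc3\xd7\xd3\xcd\xbc - \xc7\xe5\xb4\xbf\xc3\xc0\xc5\xae,"
       b"\xbf\xc9\xb0\xae\xc3\xc0\xc5\xae,\xc3\xc0\xc5\xae\xcd\xbc\xc6\xac")

# Assumption: the page was GB2312, as in the earlier original_encoding output.
soup = BeautifulSoup(raw, "html.parser", from_encoding="gb2312")
title = str(soup)
print(title)

# Reading the same bytes as Latin-1 gives the mojibake seen in the report.
mojibake = raw.decode("latin-1")
print(mojibake)
```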

