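I have gone through a lot of videos and tutorials, but very few of them target Windows and TensorFlow 2.0, so I decided to start over from the TensorFlow Chinese community tutorials. Those still raise plenty of errors on a current install, so the small snippets below are the versions I got working after fixing them.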

Variables:

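# -*- coding: utf-8 -*-
"""
Created on Sat Nov 2 10:59:18 2019

@author: lsf81
"""
import tensorflow as tf

# Only needed on TF 2.x, where eager execution is on by default and
# Session-style code needs graph mode; graph mode is already the default on 1.x.
tf.compat.v1.disable_eager_execution()

# A counter variable, initialised to 0.
state=tf.Variable(0,name="counter")
one=tf.constant(1)
new_value=tf.add(state,one)
# Op that writes state + 1 back into state.
update=tf.compat.v1.assign(state,new_value)

init_op=tf.compat.v1.global_variables_initializer()

with tf.compat.v1.Session() as sess:
    sess.run(init_op)
    print(sess.run(state))
    for _ in range(3):
        sess.run(update)
        print(sess.run(state))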

Result: the counter prints 0, 1, 2 and 3, one value per line.

Note: the original tutorial's update = tf.assign(state, new_value) has to be changed to update=tf.compat.v1.assign(state,new_value).

Fetch:

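import tensorflow as tf

# Only needed on TF 2.x, where eager execution is on by default and
# Session-style code needs graph mode; graph mode is already the default on 1.x.
tf.compat.v1.disable_eager_execution()

input1 = tf.constant(3.0)
input2 = tf.constant(2.0)
input3 = tf.constant(5.0)
intermed = tf.compat.v1.add(input2, input3)
mul = tf.multiply(input1, intermed)

with tf.compat.v1.Session() as sess:
    # Fetch several tensors in a single run call.
    result = sess.run([mul, intermed])
    print(result)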

Note that in current versions mul has been renamed to multiply, and sub has been renamed to subtract.
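The renames noted so far are mechanical enough to script. Below is a minimal sketch, not an official migration tool, that rewrites old names inside a source string. `RENAMES` and `migrate_names` are hypothetical names chosen for this example, and the table only holds renames mentioned in this post or in the deprecation warnings quoted further down. For whole files, TensorFlow also ships a `tf_upgrade_v2` script, which is likely the better route.

```python
import re

# Old name -> new name, taken from the notes and warnings in this post.
RENAMES = {
    "tf.assign": "tf.compat.v1.assign",
    "tf.mul": "tf.multiply",
    "tf.sub": "tf.subtract",
    "tf.placeholder": "tf.compat.v1.placeholder",
    "tf.truncated_normal": "tf.random.truncated_normal",
    "tf.log": "tf.math.log",
}


def migrate_names(source):
    """Replace each old TensorFlow name in `source` with its new name."""
    for old, new in RENAMES.items():
        # The lookbehind stops matches inside longer dotted names, and \b
        # stops "tf.mul" from matching the start of "tf.multiply".
        pattern = r"(?<![\w.])" + re.escape(old) + r"\b"
        source = re.sub(pattern, new, source)
    return source


print(migrate_names("update = tf.assign(state, new_value)"))
print(migrate_names("y = tf.sub(tf.mul(a, b), c)"))
```

Already-migrated names such as tf.multiply or tf.compat.v1.assign are left alone, so running the helper twice gives the same text as running it once.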

Feed:

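```python
import tensorflow as tf

# Only needed on TF 2.x, where eager execution is on by default;
# placeholders require graph mode, which is already the default on 1.x.
tf.compat.v1.disable_eager_execution()

input1 = tf.compat.v1.placeholder(tf.float32)
input2 = tf.compat.v1.placeholder(tf.float32)
output = tf.multiply(input1, input2)

with tf.compat.v1.Session() as sess:
    # feed_dict supplies the placeholder values for this run only.
    print(sess.run([output],feed_dict={input1:[7.],input2:[2.]}))
```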

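Note: the official site writes input1 = tf.placeholder(tf.types.float32); the types part is redundant, and tf.placeholder itself now lives at tf.compat.v1.placeholder.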

Detailed MNIST tutorial:

https://blog.csdn.net/cqrtxwd/article/details/79028264

Download link for input_data.py:

https://blog.csdn.net/weixin_43159628/article/details/83241345

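After a whole afternoon of fiddling, here is the latest update.

The message "Please use alternatives such as official/mnist/dataset.py from tensorflow/models." appears. The reason is that newer versions no longer support the tensorflow.examples.tutorials library; loading MNIST has moved into keras. The code is as follows: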

import tensorflow as tf

#from tensorflow.examples.tutorials.mnist import input_data
#mnist=input_data.read_data_sets('./MNIST_data/',one_hot=True)
#sess=tf.InteractiveSession()
#print('Training data size: ', mnist.train.num_examples)

mnist=tf.keras.datasets.mnist
(X_train, y_train), (X_test, y_test) = mnist.load_data()

print(X_train.shape) # out: (60000, 28, 28)
print(y_train.shape) # out: (60000,)
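Unlike the old input_data object, `tf.keras.datasets.mnist.load_data()` returns plain NumPy arrays, so there is no `mnist.train.next_batch(...)` helper to call. One way to get shuffled mini-batches is to generate batches of indices and slice the arrays with them. This is only a minimal sketch: `batch_indices` is a hypothetical helper, not part of TensorFlow or Keras, and the batch size of 50 is simply the value used by the CNN script later in this post.

```python
import random


def batch_indices(num_examples, batch_size, seed=None):
    """Yield lists of shuffled indices that cover every example exactly once."""
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)
    for start in range(0, num_examples, batch_size):
        # The last batch may be shorter when batch_size does not divide evenly.
        yield indices[start:start + batch_size]


batches = list(batch_indices(60000, 50, seed=0))
print(len(batches))     # 1200 batches in one pass over the training set
print(len(batches[0]))  # 50
```

With the arrays from load_data(), something like X_train[idx] and y_train[idx] would then pick out one batch, since NumPy arrays accept a list of indices.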

Reference post: https://blog.csdn.net/u011106767/article/details/93879120

After that I ran into the question of how to do MNIST classification in keras. See the following post:

https://blog.csdn.net/Yumi_huang/article/details/82351173

Following the post above:

# -*- coding: utf-8 -*-
"""
Created on Sat Nov 2 18:56:47 2019

@author: lsf81
"""
import numpy as np
import matplotlib.pyplot as plt

# Local copy of the MNIST archive; adjust the path for your own machine.
path = r"F:\python\Anaconda\Lib\site-packages\keras_applications\examples\mnist.npz"
f = np.load(path)
x_train, y_train = f['x_train'], f['y_train']
x_test, y_test = f['x_test'], f['y_test']
f.close()

print(x_train.shape)
print(x_test.shape)

# Show the first nine training digits in a 3x3 grid.
for i in range(9):
    plt.subplot(3,3,i+1)
    plt.imshow(x_train[i], cmap='gray', interpolation='none')
    plt.title("Class {}".format(y_train[i]))
plt.show()

from keras.datasets import mnist
from keras.models import Sequential
from keras.layers.core import Dense, Activation, Dropout
from keras.utils import np_utils
import numpy as np
import matplotlib.pyplot as plt
from keras.optimizers import RMSprop

# Local copy of the MNIST archive; adjust the path for your own machine.
path = r"F:\python\Anaconda\Lib\site-packages\keras_applications\examples\mnist.npz"
f = np.load(path)
x_train, y_train = f['x_train'], f['y_train']
x_test, y_test = f['x_test'], f['y_test']
f.close()

# print(x_train.shape)
# print(x_test.shape)
# for i in range(9):
#     plt.subplot(3,3,i+1)
#     plt.imshow(x_train[i], cmap='gray', interpolation='none')
#     plt.title("Class {}".format(y_train[i]))
# plt.show()

# Flatten each 28x28 image into a 784-element vector
X_train = x_train.reshape(len(x_train), -1)
X_test = x_test.reshape(len(x_test), -1)
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')

# Normalization: center and scale the 0..255 pixel values to roughly [-1, 1].
X_train = (X_train - 127) / 127
X_test = (X_test - 127) / 127

# One-hot encoding
y_train = np_utils.to_categorical(y_train, num_classes=10)
y_test = np_utils.to_categorical(y_test, num_classes=10)

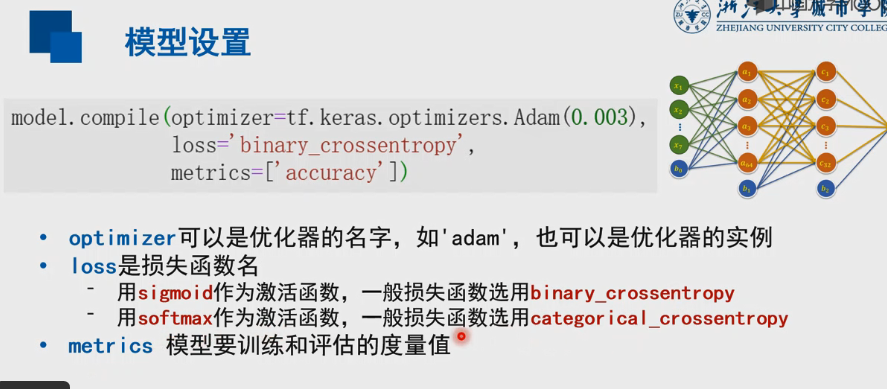

model = Sequential([
    Dense(512, input_dim=784),
    Activation('relu'),
    Dropout(0.2),
    # Dense(512),
    # Activation('relu'),
    # Dropout(0.2),
    Dense(10),
    Activation('softmax'),
])

rmsprop = RMSprop(lr=0.001, rho=0.9, epsilon=1e-08, decay=0.0)

# Add metrics so that accuracy is reported alongside the loss
model.compile(optimizer=rmsprop,
              loss='categorical_crossentropy',
              metrics=['accuracy'])

print('Training ------------')
# Another way to train the model
model.fit(X_train, y_train, epochs=20, batch_size=100)

print('\nTesting ------------')
# Evaluate the model with the metrics we defined earlier
loss, accuracy = model.evaluate(X_test, y_test)

print('test loss: ', loss)
print('test accuracy: ', accuracy)
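The preprocessing in the script above does two things to the raw data: it shifts and scales each pixel with (x - 127) / 127, and it turns each integer label into a one-hot vector. To make the arithmetic concrete, here is a small pure-Python sketch of both steps on single values. `scale_pixel` and `one_hot` are hypothetical helper names for illustration; the script itself relies on NumPy broadcasting and np_utils.to_categorical.

```python
def scale_pixel(value):
    """Map a raw pixel in 0..255 the way the script does: (x - 127) / 127."""
    return (value - 127) / 127


def one_hot(label, num_classes=10):
    """Return a list with 1.0 at position `label` and 0.0 everywhere else."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# Black maps to -1.0, mid-grey to 0.0, and white lands slightly above 1.
print(scale_pixel(0), scale_pixel(127), scale_pixel(255))
print(one_hot(3))
```

So the inputs end up centered on zero in roughly [-1, 1], and each label becomes a 10-element vector that can be compared directly against the softmax output.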


The final output is:

runfile('E:/Users/lsf81/Desktop/cnnmnist2.py', wdir='E:/Users/lsf81/Desktop')

(60000, 28, 28)

(10000, 28, 28)

Using TensorFlow backend.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:74: The name tf.get_default_graph is deprecated. Please use tf.compat.v1.get_default_graph instead.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:517: The name tf.placeholder is deprecated. Please use tf.compat.v1.placeholder instead.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:4138: The name tf.random_uniform is deprecated. Please use tf.random.uniform instead.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:133: The name tf.placeholder_with_default is deprecated. Please use tf.compat.v1.placeholder_with_default instead.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:3445: calling dropout (from tensorflow.python.ops.nn_ops) with keep_prob is deprecated and will be removed in a future version.

Instructions for updating:

Please use `rate` instead of `keep_prob`. Rate should be set to `rate = 1 - keep_prob`.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\optimizers.py:790: The name tf.train.Optimizer is deprecated. Please use tf.compat.v1.train.Optimizer instead.

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\keras\backend\tensorflow_backend.py:3295: The name tf.log is deprecated. Please use tf.math.log instead.

Training ------------

WARNING:tensorflow:From F:\python\Anaconda\envs\tensorflow\lib\site-packages\tensorflow\python\ops\math_grad.py:1250: add_dispatch_support.<locals>.wrapper (from tensorflow.python.ops.array_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.where in 2.0, which has the same broadcast rule as np.where

Epoch 1/20

60000/60000 [==============================] - 5s 79us/step - loss: 0.4220 - acc: 0.8750

Epoch 2/20

60000/60000 [==============================] - 3s 58us/step - loss: 0.1797 - acc: 0.9443

Epoch 3/20

60000/60000 [==============================] - 5s 80us/step - loss: 0.1379 - acc: 0.9586

Epoch 4/20

60000/60000 [==============================] - 9s 146us/step - loss: 0.1224 - acc: 0.9636

Epoch 5/20

60000/60000 [==============================] - 9s 155us/step - loss: 0.1066 - acc: 0.9683

Epoch 6/20

60000/60000 [==============================] - 9s 148us/step - loss: 0.1005 - acc: 0.9701

Epoch 7/20

60000/60000 [==============================] - 9s 154us/step - loss: 0.0929 - acc: 0.9728

Epoch 8/20

60000/60000 [==============================] - 9s 155us/step - loss: 0.0866 - acc: 0.9754

Epoch 9/20

60000/60000 [==============================] - 9s 145us/step - loss: 0.0829 - acc: 0.9761

Epoch 10/20

60000/60000 [==============================] - 9s 154us/step - loss: 0.0766 - acc: 0.9773

Epoch 11/20

60000/60000 [==============================] - 9s 147us/step - loss: 0.0740 - acc: 0.9789

Epoch 12/20

60000/60000 [==============================] - 9s 153us/step - loss: 0.0683 - acc: 0.9814

Epoch 13/20

60000/60000 [==============================] - 7s 118us/step - loss: 0.0698 - acc: 0.9808

Epoch 14/20

60000/60000 [==============================] - 10s 158us/step - loss: 0.0652 - acc: 0.9823

Epoch 15/20

60000/60000 [==============================] - 9s 153us/step - loss: 0.0637 - acc: 0.9830

Epoch 16/20

60000/60000 [==============================] - 9s 144us/step - loss: 0.0634 - acc: 0.9831

Epoch 17/20

60000/60000 [==============================] - 9s 153us/step - loss: 0.0605 - acc: 0.9836

Epoch 18/20

60000/60000 [==============================] - 8s 140us/step - loss: 0.0559 - acc: 0.9845

Epoch 19/20

60000/60000 [==============================] - 9s 149us/step - loss: 0.0569 - acc: 0.9848

Epoch 20/20

60000/60000 [==============================] - 7s 118us/step - loss: 0.0552 - acc: 0.9859

Testing ------------

10000/10000 [==============================] - 1s 63us/step

test loss: 0.11286064563859545

test accuracy: 0.9791

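I just tried to get rid of those warnings, but after upgrading the program would no longer run, so I rolled the version back and am leaving it as it is for now. This is my first program.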
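One of the warnings above is worth understanding rather than just silencing: "Please use `rate` instead of `keep_prob`". The two parameters describe the same operation from opposite sides, with rate = 1 - keep_prob. Keras Dropout(0.2) already takes a drop rate, while older TensorFlow code passes a keep probability. The following is a rough pure-Python sketch of the usual "inverted dropout" idea, under the assumption that kept values are scaled by 1 / (1 - rate); the `dropout` function here is a hypothetical stand-in for illustration, not the library implementation.

```python
import random


def dropout(values, rate, rng):
    """Zero each value with probability `rate`; scale survivors by 1 / (1 - rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep_prob = 1.0 - rate
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)
# With rate=0.5 every entry is either dropped to 0.0 or doubled.
print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=rng))
# With rate=0.0 nothing is dropped and the values pass through unchanged.
print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.0, rng=rng))
```

This is why feeding keep_prob = 1 at evaluation time (rate = 0) turns dropout off, while keep_prob = 0.5 during training drops about half of the activations.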

The program from the MNIST blog post above has now finished running. The code is as follows:

import input_data
import tensorflow as tf

# Only needed on TF 2.x, where eager execution is on by default; placeholders
# and sessions need graph mode, which is already the default on 1.x.
tf.compat.v1.disable_eager_execution()

# Load the data
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
sess=tf.compat.v1.InteractiveSession()

# Build the CNN

# Convolution helper (saves typing later on)
def conv2d(x,w):
    return tf.nn.conv2d(x,w,strides=[1,1,1,1],padding='SAME')

# Pooling helper
def max_pool_2x2(x):
    return tf.nn.max_pool2d(x,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

# Placeholders sized for the sample inputs and outputs
x=tf.compat.v1.placeholder(tf.float32,[None,784])
y_=tf.compat.v1.placeholder(tf.float32,[None,10])
x_img=tf.reshape(x,[-1,28,28,1])

# First convolution layer and pooling layer
w_conv1=tf.Variable(tf.random.truncated_normal([3,3,1,32],stddev=0.1))
b_conv1=tf.Variable(tf.constant(0.1,shape=[32]))
h_conv1=tf.nn.relu(conv2d(x_img,w_conv1)+b_conv1)
h_pool1=max_pool_2x2(h_conv1)

# Second convolution layer and pooling layer
w_conv2=tf.Variable(tf.random.truncated_normal([3,3,32,50],stddev=0.1))
b_conv2=tf.Variable(tf.constant(0.1,shape=[50]))
h_conv2=tf.nn.relu(conv2d(h_pool1,w_conv2)+b_conv2)
h_pool2=max_pool_2x2(h_conv2)

# First fully connected layer
w_fc1=tf.Variable(tf.random.truncated_normal([7*7*50,1024],stddev=0.1))
b_fc1=tf.Variable(tf.constant(0.1,shape=[1024]))
h_pool2_flat=tf.reshape(h_pool2,[-1,7*7*50])
h_fc1=tf.nn.relu(tf.matmul(h_pool2_flat,w_fc1)+b_fc1)

# Dropout (random deactivation); passing rate explicitly follows the
# deprecation warning: rate = 1 - keep_prob
keep_prob=tf.compat.v1.placeholder(tf.float32)
h_fc1_drop=tf.nn.dropout(h_fc1,rate=1-keep_prob)

# Second fully connected layer
w_fc2=tf.Variable(tf.random.truncated_normal([1024,10],stddev=0.1))
b_fc2=tf.Variable(tf.constant(0.1,shape=[10]))
y_out=tf.nn.softmax(tf.matmul(h_fc1_drop,w_fc2)+b_fc2)

# Loss function: cross-entropy
loss=tf.reduce_mean(-tf.reduce_sum(y_*tf.math.log(y_out),axis=1))

# Adam optimizer with a learning rate of 1e-4
train_step=tf.compat.v1.train.AdamOptimizer(1e-4).minimize(loss)

# Accuracy expression
correct_prediction=tf.equal(tf.argmax(y_out,1),tf.argmax(y_,1))
accuracy=tf.reduce_mean(tf.cast(correct_prediction,tf.float32))

# Start feeding data and train
tf.compat.v1.global_variables_initializer().run()

for i in range(20000):
    batch=mnist.train.next_batch(50)
    if i%100==0:
        # Report accuracy on the current batch with dropout switched off
        train_accuracy=accuracy.eval(feed_dict={x:batch[0],y_:batch[1],keep_prob:1})
        print("step %d,train_accuracy= %g"%(i,train_accuracy))
    train_step.run(feed_dict={x:batch[0],y_:batch[1],keep_prob:0.5})

# After training, evaluate on the test set and print the final result
print("test_accuracy= %g"%accuracy.eval(feed_dict={x:mnist.test.images,y_:mnist.test.labels,keep_prob:1}))
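The loss and accuracy expressions in the script are compact, so it may help to see the same arithmetic on plain Python lists. The sketch below mirrors the three steps in the code above: softmax over the final layer, mean of -sum(y * log(p)) over the batch, and the fraction of rows where the predicted argmax matches the label argmax. All function names are hypothetical, and skipping the zero entries of the one-hot label is a simplification of this sketch (it avoids evaluating log on entries that do not contribute), not something the TensorFlow code does.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot_label, probs):
    """-sum(y * log(p)) for one example; zero entries of y contribute nothing."""
    return -sum(y * math.log(p) for y, p in zip(one_hot_label, probs) if y > 0)


def mean_loss_and_accuracy(labels, logits_batch):
    """Average cross-entropy and argmax accuracy over a batch."""
    losses, correct = [], 0
    for label, logits in zip(labels, logits_batch):
        probs = softmax(logits)
        losses.append(cross_entropy(label, probs))
        correct += int(probs.index(max(probs)) == label.index(max(label)))
    return sum(losses) / len(losses), correct / len(labels)


labels = [[1, 0, 0], [0, 1, 0]]
logits = [[2.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
loss, acc = mean_loss_and_accuracy(labels, logits)
print(loss, acc)  # the second example is misclassified, so accuracy is 0.5
```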

The output is:

F:\python\pycharm\venv\Scripts\python.exe F:/python/code/cnntest1.py

Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.

Extracting MNIST_data\train-images-idx3-ubyte.gz

Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.

Extracting MNIST_data\train-labels-idx1-ubyte.gz

Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.

Extracting MNIST_data\t10k-images-idx3-ubyte.gz

Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.

Extracting MNIST_data\t10k-labels-idx1-ubyte.gz

WARNING:tensorflow:From F:/python/code/cnntest1.py:5: The name tf.InteractiveSession is deprecated. Please use tf.compat.v1.InteractiveSession instead.

2019-11-05 09:27:27.623410: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

WARNING:tensorflow:From F:/python/code/cnntest1.py:14: The name tf.placeholder is deprecated. Please use tf.compat.v1.placeholder instead.

WARNING:tensorflow:From F:/python/code/cnntest1.py:19: The name tf.truncated_normal is deprecated. Please use tf.random.truncated_normal instead.

WARNING:tensorflow:From F:/python/code/cnntest1.py:12: The name tf.nn.max_pool is deprecated. Please use tf.nn.max_pool2d instead.

WARNING:tensorflow:From F:/python/code/cnntest1.py:38: calling dropout (from tensorflow.python.ops.nn_ops) with keep_prob is deprecated and will be removed in a future version.

Instructions for updating:

Please use `rate` instead of `keep_prob`. Rate should be set to `rate = 1 - keep_prob`.

WARNING:tensorflow:From F:/python/code/cnntest1.py:46: The name tf.log is deprecated. Please use tf.math.log instead.

WARNING:tensorflow:From F:/python/code/cnntest1.py:48: The name tf.train.AdamOptimizer is deprecated. Please use tf.compat.v1.train.AdamOptimizer instead.

WARNING:tensorflow:From F:/python/code/cnntest1.py:55: The name tf.global_variables_initializer is deprecated. Please use tf.compat.v1.global_variables_initializer instead.

step 0,train_accuracy= 0.08

step 100,train_accuracy= 0.84

step 200,train_accuracy= 0.92

step 300,train_accuracy= 0.84

step 400,train_accuracy= 0.94

step 500,train_accuracy= 0.84

step 600,train_accuracy= 1

step 700,train_accuracy= 0.94

step 800,train_accuracy= 0.9

step 900,train_accuracy= 1

step 1000,train_accuracy= 0.96

step 1100,train_accuracy= 0.96

step 1200,train_accuracy= 0.96

step 1300,train_accuracy= 0.94

step 1400,train_accuracy= 0.94

step 1500,train_accuracy= 0.96

step 1600,train_accuracy= 0.96

step 1700,train_accuracy= 0.96

step 1800,train_accuracy= 0.98

step 1900,train_accuracy= 0.96

step 2000,train_accuracy= 0.98

step 2100,train_accuracy= 1

step 2200,train_accuracy= 0.96

step 2300,train_accuracy= 1

step 2400,train_accuracy= 1

step 2500,train_accuracy= 0.96

step 2600,train_accuracy= 0.98

step 2700,train_accuracy= 0.98

step 2800,train_accuracy= 0.94

step 2900,train_accuracy= 0.96

step 3000,train_accuracy= 0.98

step 3100,train_accuracy= 0.98

step 3200,train_accuracy= 0.94

step 3300,train_accuracy= 1

step 3400,train_accuracy= 1

step 3500,train_accuracy= 1

step 3600,train_accuracy= 0.96

step 3700,train_accuracy= 1

step 3800,train_accuracy= 0.98

step 3900,train_accuracy= 0.96

step 4000,train_accuracy= 0.98

step 4100,train_accuracy= 0.96

step 4200,train_accuracy= 0.96

step 4300,train_accuracy= 0.98

step 4400,train_accuracy= 1

step 4500,train_accuracy= 0.98

step 4600,train_accuracy= 0.96

step 4700,train_accuracy= 0.96

step 4800,train_accuracy= 0.92

step 4900,train_accuracy= 0.96

step 5000,train_accuracy= 1

step 5100,train_accuracy= 1

step 5200,train_accuracy= 1

step 5300,train_accuracy= 1

step 5400,train_accuracy= 1

step 5500,train_accuracy= 0.98

step 5600,train_accuracy= 1

step 5700,train_accuracy= 0.98

step 5800,train_accuracy= 0.98

step 5900,train_accuracy= 1

step 6000,train_accuracy= 1

step 6100,train_accuracy= 1

step 6200,train_accuracy= 1

step 6300,train_accuracy= 1

step 6400,train_accuracy= 0.96

step 6500,train_accuracy= 0.98

step 6600,train_accuracy= 0.94

step 6700,train_accuracy= 1

step 6800,train_accuracy= 1

step 6900,train_accuracy= 1

step 7000,train_accuracy= 1

step 7100,train_accuracy= 0.98

step 7200,train_accuracy= 1

step 7300,train_accuracy= 1

step 7400,train_accuracy= 1

step 7500,train_accuracy= 1

step 7600,train_accuracy= 1

step 7700,train_accuracy= 1

step 7800,train_accuracy= 1

step 7900,train_accuracy= 1

step 8000,train_accuracy= 0.98

step 8100,train_accuracy= 1

step 8200,train_accuracy= 0.96

step 8300,train_accuracy= 0.98

step 8400,train_accuracy= 1

step 8500,train_accuracy= 1

step 8600,train_accuracy= 0.98

step 8700,train_accuracy= 1

step 8800,train_accuracy= 1

step 8900,train_accuracy= 1

step 9000,train_accuracy= 1

step 9100,train_accuracy= 1

step 9200,train_accuracy= 1

step 9300,train_accuracy= 1

step 9400,train_accuracy= 1

step 9500,train_accuracy= 1

step 9600,train_accuracy= 1

step 9700,train_accuracy= 0.98

step 9800,train_accuracy= 1

step 9900,train_accuracy= 0.98

step 10000,train_accuracy= 0.98

step 10100,train_accuracy= 1

step 10200,train_accuracy= 0.96

step 10300,train_accuracy= 1

step 10400,train_accuracy= 1

step 10500,train_accuracy= 1

step 10600,train_accuracy= 0.98

step 10700,train_accuracy= 1

step 10800,train_accuracy= 1

step 10900,train_accuracy= 0.98

step 11000,train_accuracy= 0.98

step 11100,train_accuracy= 1

step 11200,train_accuracy= 1

step 11300,train_accuracy= 0.98

step 11400,train_accuracy= 1

step 11500,train_accuracy= 0.98

step 11600,train_accuracy= 1

step 11700,train_accuracy= 0.98

step 11800,train_accuracy= 1

step 11900,train_accuracy= 0.98

step 12000,train_accuracy= 1

step 12100,train_accuracy= 1

step 12200,train_accuracy= 0.96

step 12300,train_accuracy= 0.98

step 12400,train_accuracy= 1

step 12500,train_accuracy= 1

step 12600,train_accuracy= 1

step 12700,train_accuracy= 1

step 12800,train_accuracy= 1

step 12900,train_accuracy= 1

step 13000,train_accuracy= 1

step 13100,train_accuracy= 1

step 13200,train_accuracy= 1

step 13300,train_accuracy= 1

step 13400,train_accuracy= 1

step 13500,train_accuracy= 1

step 13600,train_accuracy= 1

step 13700,train_accuracy= 1

step 13800,train_accuracy= 1

step 13900,train_accuracy= 1

step 14000,train_accuracy= 0.98

step 14100,train_accuracy= 1

step 14200,train_accuracy= 1

step 14300,train_accuracy= 0.98

step 14400,train_accuracy= 1

step 14500,train_accuracy= 1

step 14600,train_accuracy= 1

step 14700,train_accuracy= 1

step 14800,train_accuracy= 0.98

step 14900,train_accuracy= 1

step 15000,train_accuracy= 1

step 15100,train_accuracy= 1

step 15200,train_accuracy= 1

step 15300,train_accuracy= 1

step 15400,train_accuracy= 1

step 15500,train_accuracy= 1

step 15600,train_accuracy= 1

step 15700,train_accuracy= 1

step 15800,train_accuracy= 1

step 15900,train_accuracy= 1

step 16000,train_accuracy= 1

step 16100,train_accuracy= 1

step 16200,train_accuracy= 1

step 16300,train_accuracy= 1

step 16400,train_accuracy= 1

step 16500,train_accuracy= 1

step 16600,train_accuracy= 1

step 16700,train_accuracy= 1

step 16800,train_accuracy= 1

step 16900,train_accuracy= 1

step 17000,train_accuracy= 1

step 17100,train_accuracy= 1

step 17200,train_accuracy= 1

step 17300,train_accuracy= 1

step 17400,train_accuracy= 1

step 17500,train_accuracy= 1

step 17600,train_accuracy= 1

step 17700,train_accuracy= 1

step 17800,train_accuracy= 0.98

step 17900,train_accuracy= 1

step 18000,train_accuracy= 1

step 18100,train_accuracy= 1

step 18200,train_accuracy= 1

step 18300,train_accuracy= 1

step 18400,train_accuracy= 1

step 18500,train_accuracy= 1

step 18600,train_accuracy= 1

step 18700,train_accuracy= 1

step 18800,train_accuracy= 1

step 18900,train_accuracy= 1

step 19000,train_accuracy= 1

step 19100,train_accuracy= 1

step 19200,train_accuracy= 1

step 19300,train_accuracy= 1

step 19400,train_accuracy= 1

step 19500,train_accuracy= 1

step 19600,train_accuracy= 1

step 19700,train_accuracy= 1

step 19800,train_accuracy= 1

step 19900,train_accuracy= 1

2019-11-05 09:41:01.897069: W tensorflow/core/framework/allocator.cc:107] Allocation of 1003520000 exceeds 10% of system memory.

2019-11-05 09:41:02.535947: W tensorflow/core/framework/allocator.cc:107] Allocation of 250880000 exceeds 10% of system memory.

2019-11-05 09:41:02.763879: W tensorflow/core/framework/allocator.cc:107] Allocation of 392000000 exceeds 10% of system memory.

test_accuracy= 0.9908

Process finished with exit code 0
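The three "Allocation of ... exceeds 10% of system memory" warnings at the end appear to come from evaluating all 10000 test images in a single call. This is an inference from the layer shapes rather than something the log states, but the numbers line up exactly with float32 activations of the first convolution, first pooling and second convolution layers. A quick arithmetic check, using hypothetical helper names and assuming 4 bytes per float32 value:

```python
def same_output_size(size, stride):
    """Output length of a SAME-padded op with the given stride: ceil(size / stride)."""
    return -(-size // stride)


def float32_bytes(batch, height, width, channels):
    """Memory for one float32 activation tensor of the given shape."""
    return batch * height * width * channels * 4


test_images = 10000
side = 28
conv1 = float32_bytes(test_images, side, side, 32)  # conv1 keeps 28x28 (stride 1, SAME)
side = same_output_size(side, 2)                    # 2x2 pooling: 28 -> 14
pool1 = float32_bytes(test_images, side, side, 32)
conv2 = float32_bytes(test_images, side, side, 50)  # conv2 keeps 14x14
side = same_output_size(side, 2)                    # 2x2 pooling: 14 -> 7
flat = side * side * 50                             # matches 7*7*50 in the script

print(conv1, pool1, conv2, flat)  # 1003520000 250880000 392000000 2450
```

If that reading is right, evaluating the test set in smaller chunks and averaging the per-chunk accuracies would keep each allocation well below these sizes.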
