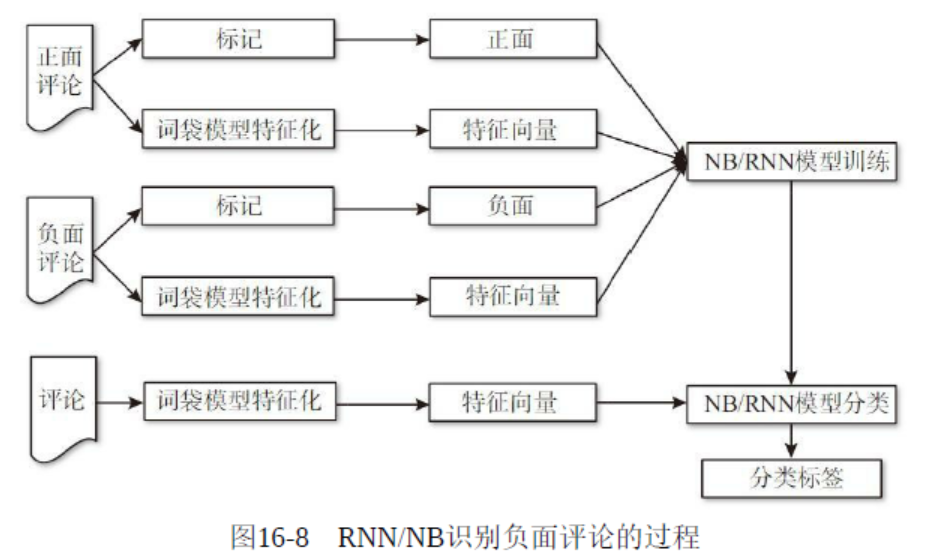

This section uses two methods, Naive Bayes (NB) and a recurrent neural network (RNN), to identify malicious reviews.

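1. Obtaining the dataset

(1) Positive reviews

Dataset location: ../data/movie-review-data/review_polarity/txt_sentoken/pos/

```python
# Positive reviews are labeled 0.
x1, y1 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/pos/", 0)
```

(2) Negative reviews

Dataset location: ../data/movie-review-data/review_polarity/txt_sentoken/neg/

```python
# Negative reviews are labeled 1.
x2, y2 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/neg/", 1)
```

(3) Loading the data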

```python
def load_one_file(filename):
    # Read one review file and return its full text.
    with open(filename) as f:
        return f.read()


def load_files(rootdir, label):
    # Load every regular file under rootdir; each one gets the same label.
    x = []
    y = []
    for name in os.listdir(rootdir):
        path = os.path.join(rootdir, name)
        if os.path.isfile(path):
            print("Load file %s" % path)
            y.append(label)
            x.append(load_one_file(path))
    return x, y


def load_data():
    # Positive reviews are labeled 0, negative reviews 1.
    x1, y1 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/pos/", 0)
    x2, y2 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/neg/", 1)
    return x1 + x2, y1 + y2


def main(unused_argv):
    global n_words
    x, y = load_data()
    # Hold out 40% of the reviews for testing.
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
    # Map each word to an integer id; every document becomes a vector of
    # MAX_DOCUMENT_LENGTH ids.
    vp = learn.preprocessing.VocabularyProcessor(max_document_length=MAX_DOCUMENT_LENGTH, min_frequency=1)
    vp.fit(x)
    x_train = np.array(list(vp.transform(x_train)))
    x_test = np.array(list(vp.transform(x_test)))
    n_words = len(vp.vocabulary_)
    print('Total words: %d' % n_words)
```

Output:

```
Total words: 28334
```
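To make the preprocessing step concrete, here is a minimal pure-Python sketch of the idea behind `VocabularyProcessor`: give each word an integer id and turn every document into a fixed-length vector of ids. It is an illustration only, not the library's implementation. The helper names `build_vocab` and `to_ids`, the whitespace tokenization, and the `min_count` cutoff rule are all assumptions made for this example.

```python
from collections import Counter


def build_vocab(docs, min_count=1):
    """Map each word seen at least min_count times to an id starting at 1.

    Id 0 is reserved for padding and unknown words (an assumption of this sketch).
    """
    counts = Counter(word for doc in docs for word in doc.split())
    vocab = {}
    for word, count in counts.items():
        if count >= min_count:
            vocab[word] = len(vocab) + 1
    return vocab


def to_ids(doc, vocab, max_len):
    """Convert a document to exactly max_len ids: truncate long ones, pad short ones with 0."""
    ids = [vocab.get(word, 0) for word in doc.split()[:max_len]]
    return ids + [0] * (max_len - len(ids))


docs = ["good movie", "bad movie"]
vocab = build_vocab(docs)
print(vocab)
# "film" is not in the vocabulary, so it maps to 0 like the padding positions.
print(to_ids("good bad film", vocab, 5))
```

In this sketch, any text beyond the first `max_len` tokens is simply dropped, which is worth keeping in mind when choosing a value such as `MAX_DOCUMENT_LENGTH = 200` for full-length reviews.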

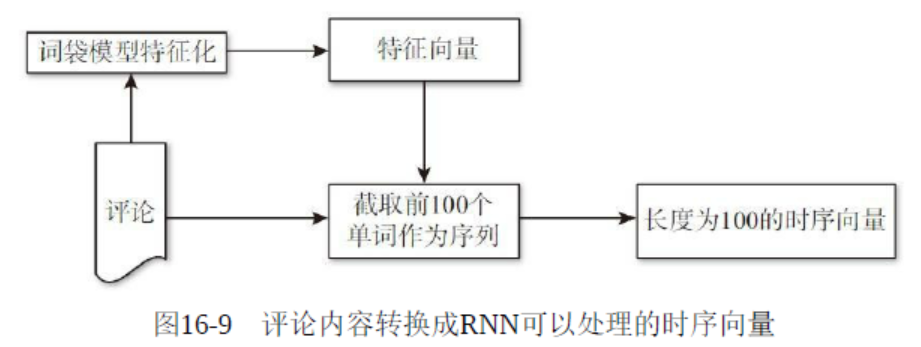

2. RNN model
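The `do_rnn` function in this section converts the integer labels into two-element vectors with `to_categorical` before training. As a rough illustration of what that encoding looks like, the NumPy sketch below builds the same kind of one-hot matrix. `one_hot` is a hypothetical helper written for this example, not TFLearn's code.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) matrix with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


# 0 = positive review, 1 = negative review, as in load_data()
encoded = one_hot([0, 1, 1], num_classes=2)
print(encoded)
```

Each row then lines up with the two softmax outputs of the final layer, which is the label shape that a `categorical_crossentropy` loss works with.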

```python
def do_rnn(trainX, testX, trainY, testY):
    global n_words
    # Data preprocessing
    # Sequence padding
    print("GET n_words embedding %d" % n_words)
    trainX = pad_sequences(trainX, maxlen=MAX_DOCUMENT_LENGTH, value=0.)
    testX = pad_sequences(testX, maxlen=MAX_DOCUMENT_LENGTH, value=0.)
    # Converting labels to binary vectors
    trainY = to_categorical(trainY, nb_classes=2)
    testY = to_categorical(testY, nb_classes=2)

    # Network building
    net = tflearn.input_data([None, MAX_DOCUMENT_LENGTH])
    net = tflearn.embedding(net, input_dim=n_words, output_dim=128)
    net = tflearn.lstm(net, 128, dropout=0.8)
    net = tflearn.fully_connected(net, 2, activation='softmax')
    net = tflearn.regression(net, optimizer='adam', learning_rate=0.001,
                             loss='categorical_crossentropy')

    # Training
    model = tflearn.DNN(net, tensorboard_verbose=3)
    model.fit(trainX, trainY, validation_set=(testX, testY), show_metric=True,
              batch_size=32, run_id="maidou")
```

3. NB model

```python
def do_NB(x_train, x_test, y_train, y_test):
    # Fit Gaussian Naive Bayes on the word-id vectors and report test accuracy.
    gnb = GaussianNB()
    y_predict = gnb.fit(x_train, y_train).predict(x_test)
    score = metrics.accuracy_score(y_test, y_predict)
    print('NB Accuracy: {0:f}'.format(score))
```

4. Full code

```python
# Note: tensorflow.contrib and tf.app are TensorFlow 1.x APIs;
# tensorflow.contrib was removed in TensorFlow 2.x.
import os

import numpy as np
import tensorflow as tf
import tflearn
from sklearn import metrics
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from tensorflow.contrib.learn.python import learn
from tflearn.data_utils import to_categorical, pad_sequences

MAX_DOCUMENT_LENGTH = 200
EMBEDDING_SIZE = 50  # defined in the original listing but not used below
n_words = 0


def load_one_file(filename):
    # Read one review file and return its full text.
    with open(filename) as f:
        return f.read()


def load_files(rootdir, label):
    # Load every regular file under rootdir; each one gets the same label.
    x = []
    y = []
    for name in os.listdir(rootdir):
        path = os.path.join(rootdir, name)
        if os.path.isfile(path):
            print("Load file %s" % path)
            y.append(label)
            x.append(load_one_file(path))
    return x, y


def load_data():
    # Positive reviews are labeled 0, negative reviews 1.
    x1, y1 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/pos/", 0)
    x2, y2 = load_files("../data/movie-review-data/review_polarity/txt_sentoken/neg/", 1)
    return x1 + x2, y1 + y2


def do_rnn(trainX, testX, trainY, testY):
    global n_words
    # Data preprocessing
    # Sequence padding
    print("GET n_words embedding %d" % n_words)
    trainX = pad_sequences(trainX, maxlen=MAX_DOCUMENT_LENGTH, value=0.)
    testX = pad_sequences(testX, maxlen=MAX_DOCUMENT_LENGTH, value=0.)
    # Converting labels to binary vectors
    trainY = to_categorical(trainY, nb_classes=2)
    testY = to_categorical(testY, nb_classes=2)

    # Network building
    net = tflearn.input_data([None, MAX_DOCUMENT_LENGTH])
    net = tflearn.embedding(net, input_dim=n_words, output_dim=128)
    net = tflearn.lstm(net, 128, dropout=0.8)
    net = tflearn.fully_connected(net, 2, activation='softmax')
    net = tflearn.regression(net, optimizer='adam', learning_rate=0.001,
                             loss='categorical_crossentropy')

    # Training
    model = tflearn.DNN(net, tensorboard_verbose=3)
    model.fit(trainX, trainY, validation_set=(testX, testY), show_metric=True,
              batch_size=32, run_id="maidou")


def do_NB(x_train, x_test, y_train, y_test):
    # Fit Gaussian Naive Bayes on the word-id vectors and report test accuracy.
    gnb = GaussianNB()
    y_predict = gnb.fit(x_train, y_train).predict(x_test)
    score = metrics.accuracy_score(y_test, y_predict)
    print('NB Accuracy: {0:f}'.format(score))


def main(unused_argv):
    global n_words
    x, y = load_data()
    # Hold out 40% of the reviews for testing.
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
    # Map each word to an integer id; every document becomes a vector of
    # MAX_DOCUMENT_LENGTH ids.
    vp = learn.preprocessing.VocabularyProcessor(max_document_length=MAX_DOCUMENT_LENGTH, min_frequency=1)
    vp.fit(x)
    x_train = np.array(list(vp.transform(x_train)))
    x_test = np.array(list(vp.transform(x_test)))
    n_words = len(vp.vocabulary_)
    print('Total words: %d' % n_words)
    do_NB(x_train, x_test, y_train, y_test)
    do_rnn(x_train, x_test, y_train, y_test)


if __name__ == '__main__':
    tf.app.run()
```

5. Results

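RNN results

```
......
Training Step: 378 | total loss: 0.08034 | time: 15.892s
| Adam | epoch: 010 | loss: 0.08034 - acc: 0.9874 -- iter: 1152/1200
Training Step: 379 | total loss: 0.07429 | time: 16.334s
| Adam | epoch: 010 | loss: 0.07429 - acc: 0.9887 -- iter: 1184/1200
Training Step: 380 | total loss: 0.07552 | time: 18.796s
| Adam | epoch: 010 | loss: 0.07552 - acc: 0.9867
| val_loss: 1.07911 - val_acc: 0.6188 -- iter: 1200/1200
--
```

NB results

```
NB Accuracy: 0.493750
```

As shown above, the NB model reaches an accuracy of only about 49.4%, and the RNN only about 61.88% on the validation set. The RNN's training accuracy exceeds 98% (training loss about 0.08) while its validation accuracy stays this low (validation loss about 1.08), so the model is overfitting the training set and generalizes poorly. There is still room for tuning.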
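One possible direction for the tuning mentioned above: `GaussianNB` is being fed sequences of word ids, which are arbitrary integers rather than meaningful numeric features. A common alternative for text is to count word occurrences and fit a multinomial model. The sketch below shows that pipeline with scikit-learn's `CountVectorizer` and `MultinomialNB` on a tiny made-up corpus. The toy sentences and the `train_bow_nb` helper are assumptions for illustration, and no accuracy on the movie review dataset is claimed.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB


def train_bow_nb(texts, labels):
    """Fit a bag-of-words vectorizer and a multinomial Naive Bayes classifier."""
    vectorizer = CountVectorizer()
    features = vectorizer.fit_transform(texts)  # sparse word-count matrix
    classifier = MultinomialNB()
    classifier.fit(features, labels)
    return vectorizer, classifier


# Toy corpus, invented for this example: 0 = positive, 1 = negative.
train_texts = [
    "great wonderful film",
    "wonderful acting great plot",
    "terrible boring film",
    "boring plot terrible acting",
]
train_labels = [0, 0, 1, 1]

vectorizer, classifier = train_bow_nb(train_texts, train_labels)
predictions = classifier.predict(
    vectorizer.transform(["great wonderful", "terrible boring"])
)
print(predictions)
```

To try this on the real data, the raw strings returned by `load_data()` would be passed in place of the toy lists, before any `VocabularyProcessor` transformation.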
