Operations Guide: Building a GPU Server Cluster with the Slurm Framework

1. Environment basics

2. Environment configuration

2.1 Configure hostnames

2.2 Disable SELinux (master, slave)

2.3 Disable the firewall (master, slave)

2.4 Map IP addresses to hostnames (master, slave1)

3. Create the munge and slurm users (master, slave)

4. Install munge

4.1 Download munge and its dependencies (master, slave)

4.2 Generate munge.key and send it to each compute node (master)

4.3 Fix munge.key permissions and start munge (slave)

5. Install slurm

5.1 Install slurm dependencies (master, slave)

5.2 Download and build the slurm source package (master, slave)

5.3 Install the generated rpm files (master, slave)

5.4 Edit the configuration files (master)

5.5 Set slurm file permissions and start slurm

a. Run on master

b. Run on slave

c. Run on master

d. Run on slave

e. Start munge

6. Run tests

1. Environment basics

Operating system: CentOS 7

Management node: 1 (192.168.76.133), hostname: master

Compute node: 1 (192.168.76.132), hostname: slave1

2. Environment configuration

2.1 Configure hostnames

Check the current hostname:

hostnamectl status

Set the hostname on the master machine:

hostnamectl set-hostname master

Set the hostname on the slave1 machine:

hostnamectl set-hostname slave1

2.2 Disable SELinux (master, slave)

vi /etc/sysconfig/selinux

# Set SELINUX=disabled

reboot

# After the reboot, getenforce should print Disabled

getenforce
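The same change can be scripted instead of made by hand in vi, which helps when several nodes need it. The sketch below is one possible approach and not part of the original procedure: `disable_selinux_in` is a hypothetical helper name, and the demonstration runs against a scratch file so nothing on the system changes. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, so GNU sed's --follow-symlinks option is used to keep that link intact.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file,
# leaving every other line (including SELINUXTYPE=...) untouched.
disable_selinux_in() {
    conf=$1
    # --follow-symlinks (GNU sed) edits the link target instead of
    # replacing the symlink with a regular file.
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Demonstration on a scratch file rather than the real configuration.
demo_selinux=$(mktemp)
printf '%s\n' '# sample config' 'SELINUX=enforcing' 'SELINUXTYPE=targeted' > "$demo_selinux"
disable_selinux_in "$demo_selinux"
cat "$demo_selinux"
```

To apply it for real, run `disable_selinux_in /etc/sysconfig/selinux` as root on each node and then reboot as described above.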

2.3 Disable the firewall (master, slave)

systemctl stop firewalld.service

systemctl disable firewalld.service

2.4 Map IP addresses to hostnames (master, slave1)

vi /etc/hosts

Add the following mappings:

192.168.76.133 master

192.168.76.132 slave1
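If the mappings are added by script rather than in vi, it is worth making the step safe to repeat, so that re-running it does not duplicate entries. The following is a minimal sketch under that assumption; `add_host_entry` is a hypothetical helper, and the demonstration writes to a scratch file instead of /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that
# exact line is not already present.
add_host_entry() {
    hosts_file=$1
    entry="$2 $3"
    grep -qxF "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
}

# Demonstration on a scratch file; running the loop twice adds nothing new.
demo_hosts=$(mktemp)
for round in 1 2; do
    add_host_entry "$demo_hosts" 192.168.76.133 master
    add_host_entry "$demo_hosts" 192.168.76.132 slave1
done
cat "$demo_hosts"
```

Pointing the first argument at /etc/hosts (as root) applies the same entries on a real node.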

3. Create the munge and slurm users (master, slave)

export MUNGEUSER=991 && groupadd -g $MUNGEUSER munge

useradd -m -c "MUNGE Uid 'N' Gid Emporium" -d /var/lib/munge -u $MUNGEUSER -g munge -s /sbin/nologin munge

export SLURMUSER=992 && groupadd -g $SLURMUSER slurm

useradd -m -c "SLURM workload manager" -d /var/lib/slurm -u $SLURMUSER -g slurm -s /bin/bash slurm

Note: the UIDs and GIDs can be chosen to suit your environment, but they must be identical on every node in the cluster.
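Because mismatched IDs tend to surface only later as authentication or permission errors, a quick check on each node can be useful. This is a sketch, not part of the original steps: `check_ids` is a hypothetical helper, and the expected values 991 and 992 are simply the ones used in the commands above.

```shell
# Hypothetical helper: compare a user's UID and primary GID with the
# values expected cluster-wide. Returns non-zero on any mismatch.
check_ids() {
    user=$1
    want_uid=$2
    want_gid=$3
    have_uid=$(id -u "$user" 2>/dev/null) || { echo "$user: no such user"; return 1; }
    have_gid=$(id -g "$user")
    if [ "$have_uid" = "$want_uid" ] && [ "$have_gid" = "$want_gid" ]; then
        echo "$user: ok ($have_uid:$have_gid)"
    else
        echo "$user: expected $want_uid:$want_gid, found $have_uid:$have_gid"
        return 1
    fi
}

# Run on every node; status stays 0 only if both accounts match.
status=0
check_ids munge 991 991 || status=1
check_ids slurm 992 992 || status=1
echo "id check status: $status"
```

Running the same script on master and slave1 and comparing the output is enough to confirm that the accounts line up.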

4. Install munge

4.1 Download munge and its dependencies (master, slave)

yum install epel-release openssh-clients -y

yum install munge munge-libs munge-devel -y

yum install rng-tools -y

rngd -r /dev/urandom

4.2 Generate munge.key and send it to each compute node (master)

/usr/sbin/create-munge-key -r

dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key

chown munge: /etc/munge/munge.key && chmod 400 /etc/munge/munge.key

scp /etc/munge/munge.key root@192.168.76.132:/etc/munge/
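The key-generation step can be wrapped so that the file is never readable by other users, even briefly, and so that its size can be checked before it is copied out. The sketch below assumes the same 1024-byte key as the dd command above; `make_munge_key` and `key_size` are hypothetical helper names, and the demonstration writes to a scratch directory rather than /etc/munge.

```shell
# Hypothetical helper: write a 1024-byte random key with owner-only
# permissions from the moment the file is created.
make_munge_key() {
    key_path=$1
    ( umask 077 && dd if=/dev/urandom of="$key_path" bs=1 count=1024 2>/dev/null ) || return 1
    chmod 400 "$key_path"
}

# Hypothetical helper: print the size of a file in bytes.
key_size() {
    wc -c < "$1" | tr -d ' '
}

# Demonstration in a scratch directory.
demo_keydir=$(mktemp -d)
make_munge_key "$demo_keydir/munge.key"
echo "size: $(key_size "$demo_keydir/munge.key") bytes"
ls -l "$demo_keydir/munge.key" | cut -c1-10
```

After the scp step, comparing `sha256sum /etc/munge/munge.key` on master and slave1 is a simple way to confirm both nodes hold the same key.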

4.3 Fix munge.key permissions and start munge (slave)

chown -R munge: /etc/munge/ /var/log/munge/ && chmod 0700 /etc/munge/ /var/log/munge/

systemctl enable munge

systemctl start munge

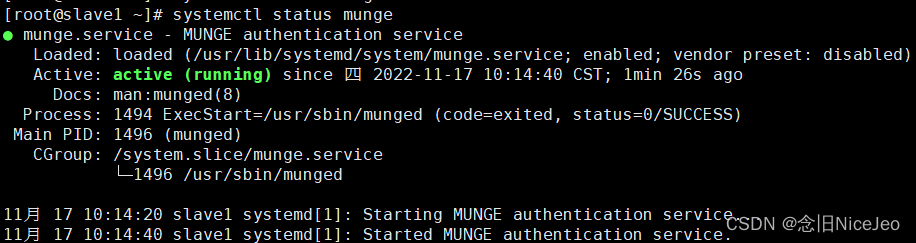

systemctl status munge

5. Install slurm

5.1 Install slurm dependencies (master, slave)

yum install openssl openssl-devel pam-devel numactl numactl-devel hwloc hwloc-devel lua lua-devel readline-devel rrdtool-devel ncurses-devel man2html libibmad libibumad -y

yum install python3-pip perl-ExtUtils-MakeMaker gcc rpm-build mysql-devel json-c json-c-devel http-parser http-parser-devel -y

5.2 Download and build the slurm source package (master, slave)

# Install the download tool

yum install wget -y

cd /usr/local/

wget https://download.schedmd.com/slurm/slurm-20.11.9.tar.bz2

rpmbuild -ta --with mysql slurm-20.11.9.tar.bz2

5.3 Install the generated rpm files (master, slave)

cd /root/rpmbuild/RPMS/x86_64

yum localinstall slurm-*.rpm -y

5.4 Edit the configuration files (master)

cp /etc/slurm/slurm.conf.example /etc/slurm/slurm.conf

cp /etc/slurm/slurmdbd.conf.example /etc/slurm/slurmdbd.conf

cp /etc/slurm/cgroup.conf.example /etc/slurm/cgroup.conf

Edit /etc/slurm/slurm.conf:

vi /etc/slurm/slurm.conf

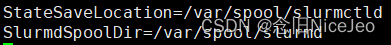

# Note: two paths in particular must be changed

...
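The text here does not say which two paths are meant. Assuming they are StateSaveLocation and SlurmdSpoolDir (an assumption based only on the /var/spool/slurmctld and /var/spool/slurmd directories created in step 5.5), the sketch below shows one way to read those values back out of a slurm.conf-style file and confirm they are what you expect. `conf_value` is a hypothetical helper, the sample file is invented for illustration, and keys are matched with exact case for simplicity.

```shell
# Hypothetical helper: print the value of KEY from a KEY=VALUE file,
# ignoring trailing '#' comments. The last occurrence wins.
conf_value() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*\([^#[:space:]]*\).*/\1/p" "$1" | tail -n 1
}

# Invented sample excerpt; the real file is /etc/slurm/slurm.conf.
sample_conf=$(mktemp)
cat > "$sample_conf" <<'EOF'
# hypothetical excerpt for illustration only
SlurmctldHost=master
StateSaveLocation=/var/spool/slurmctld
SlurmdSpoolDir=/var/spool/slurmd   # per-node spool directory
EOF

for key in StateSaveLocation SlurmdSpoolDir; do
    printf '%s -> %s\n' "$key" "$(conf_value "$sample_conf" "$key")"
done
```

Whatever paths the configuration ends up using, they need to exist and be writable by the daemons before the services are started.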

Edit /etc/slurm/slurmdbd.conf:

vi /etc/slurm/slurmdbd.conf

...

Send the files to each compute node with scp:

scp -p /etc/slurm/slurm.conf root@192.168.76.132:/etc/slurm/

scp -p /etc/slurm/slurmdbd.conf root@192.168.76.132:/etc/slurm/

scp -p /etc/slurm/cgroup.conf root@192.168.76.132:/etc/slurm/
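With more than one compute node, the three scp commands are easier to manage as a loop. The sketch below is one way to do that and is not from the original text: `NODES`, `DRY_RUN`, `run`, and `push_configs` are all hypothetical names, and the script defaults to a dry run that only prints the commands it would execute.

```shell
# Space-separated list of compute nodes (assumption: only slave1 for now).
NODES=${NODES:-"192.168.76.132"}
# DRY_RUN=1 prints commands; set DRY_RUN=0 to actually run them.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Copy the three configuration files to every node, stopping on failure.
push_configs() {
    for node in $NODES; do
        for conf_file in slurm.conf slurmdbd.conf cgroup.conf; do
            run scp -p "/etc/slurm/$conf_file" "root@$node:/etc/slurm/" || return 1
        done
    done
}

push_configs
```

Setting `NODES` to several addresses and `DRY_RUN=0` performs the real copy; the same files must be identical on every node.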

5.5 Set slurm file permissions and start slurm

a. Run on master

mkdir /var/spool/slurmctld && chown slurm: /var/spool/slurmctld && chmod 755 /var/spool/slurmctld

mkdir /var/log/slurm && touch /var/log/slurm/slurmctld.log && chown slurm: /var/log/slurm/slurmctld.log

touch /var/log/slurm/slurm_jobacct.log /var/log/slurm/slurm_jobcomp.log && chown slurm: /var/log/slurm/slurm_jobacct.log /var/log/slurm/slurm_jobcomp.log

chown slurm: /etc/slurm/slurmdbd.conf

chmod 600 /etc/slurm/slurmdbd.conf

touch /var/log/slurm/slurmdbd.log

chown slurm: /var/log/slurm/slurmdbd.log

b. Run on slave

mkdir /var/spool/slurmd && chown slurm: /var/spool/slurmd && chmod 755 /var/spool/slurmd

mkdir /var/log/slurm && touch /var/log/slurm/slurmd.log && chown slurm: /var/log/slurm/slurmd.log

c. Run on master

# Start slurmdbd

systemctl enable slurmdbd.service

systemctl start slurmdbd.service

systemctl status slurmdbd.service

# Start slurmctld

systemctl enable slurmctld.service

systemctl start slurmctld.service

systemctl status slurmctld.service

d. Run on slave

systemctl enable slurmd.service

systemctl start slurmd.service

systemctl status slurmd.service

e. Start munge

systemctl start munge

systemctl status munge

systemctl enable munge

6. Run tests
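A basic smoke test, run on master once all services are up, might look like the sketch below. The three Slurm commands are standard client tools (sinfo lists partitions and node states, scontrol show node prints one node's details, and srun launches a job), but the choice of checks and the `DRY_RUN`, `run`, and `smoke_test` names are assumptions for illustration. The script defaults to a dry run that only prints the commands.

```shell
# DRY_RUN=1 prints commands; set DRY_RUN=0 on a live cluster.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Each check must succeed before the next one runs.
smoke_test() {
    run sinfo &&
    run scontrol show node slave1 &&
    run srun -N1 hostname
}

smoke_test
```

With a single compute node named slave1, a working cluster should list the node in the sinfo output and run the srun job on that node.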


That completes the setup.
