1. Introduction

Beautiful Soup is a Python library for extracting data from HTML and XML files.

Installation:

pip install beautifulsoup4

HTML parsers

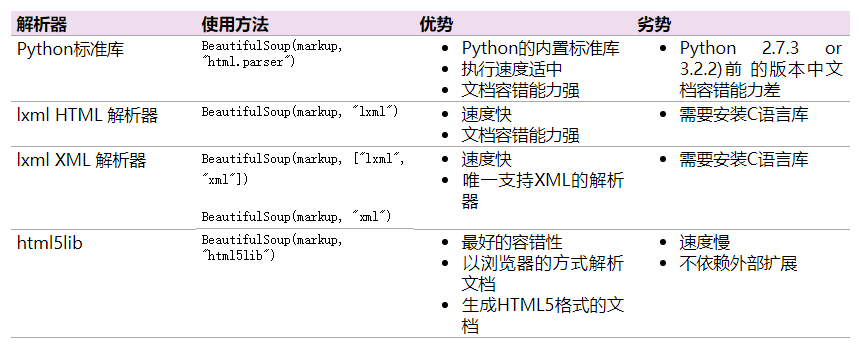

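Beautiful Soup does not parse HTML itself; it relies on a parser to build the document tree before any data can be extracted.

Beautiful Soup supports the HTML parser in Python's standard library as well as several third-party parsers. One of them is lxml. Depending on your setup, it can be installed with: $ pip install lxml

Another option is html5lib, a pure-Python parser that parses pages the same way a web browser does. It can be installed with: $ pip install html5lib

Comparison of the parsers

lxml is the recommended parser because it parses faster than the other two.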
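As a minimal sketch of how the parser choice shows up in code: the parser name is passed as the second argument to `BeautifulSoup`. The helper `pick_parser` below is a hypothetical name introduced for illustration; it assumes you want lxml when it is installed and the built-in `html.parser` otherwise.

```python
from bs4 import BeautifulSoup


def pick_parser():
    """Return "lxml" when it is importable, otherwise the built-in "html.parser".

    Hypothetical helper for this sketch, not part of Beautiful Soup.
    """
    try:
        import lxml  # noqa: F401  (only checking that it is installed)
        return "lxml"
    except ImportError:
        return "html.parser"


parser_name = pick_parser()
# The second argument selects the parser that builds the tree.
soup = BeautifulSoup("<p>Hello, <b>parser</b>!</p>", parser_name)
print(parser_name, soup.b.string)
```

Different parsers can build slightly different trees from malformed markup, so it is worth naming the parser explicitly rather than relying on whatever happens to be installed.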

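2. Usage

Parsing an HTML document (held here in the variable html_doc) with BeautifulSoup yields a BeautifulSoup object, which can print the document as a consistently indented structure: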

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_doc, 'html.parser')  # html_doc is the HTML source as a string; naming the parser avoids a warning

print(soup.prettify())

Output:

# <html>

# <head>

# <title>

# The Dormouse's story

# </title>

# </head>

# <body>

# <p class="title">

# <b>

# The Dormouse's story

# </b>

# </p>

# <p class="story">

# Once upon a time there were three little sisters; and their names were

# <a class="sister" href="http://example.com/elsie" id="link1">

# Elsie

# </a>

# ,

# <a class="sister" href="http://example.com/lacie" id="link2">

# Lacie

# </a>

# and

# <a class="sister" href="http://example.com/tillie" id="link3">

# Tillie

# </a>

# ; and they lived at the bottom of a well.

# </p>

# <p class="story">

# ...

# </p>

# </body>

# </html>
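The snippet above uses `html_doc` without defining it. The following self-contained sketch assumes markup that matches the output shown (the "three sisters" sample), so treat the exact string as an assumption rather than the author's original input.

```python
from bs4 import BeautifulSoup

# Assumed sample markup, chosen to match the prettified output above.
html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>

<p class="story">...</p>
</body></html>
"""

# html.parser ships with Python, so no extra install is needed for this sketch.
soup = BeautifulSoup(html_doc, "html.parser")

# prettify() returns the tree as a string with one tag per line.
pretty = soup.prettify()
print(pretty)
```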

A few simple ways to navigate the parsed structure:

soup.title

# <title>The Dormouse's story</title>

soup.title.name

# 'title'

soup.title.string

# "The Dormouse's story"

soup.title.parent.name

# 'head'

soup.p

# <p class="title"><b>The Dormouse's story</b></p>

soup.p['class']

# ['title']

soup.a

# <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>

soup.find_all('a')

# [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>,

# <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>,

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]

soup.find(id="link3")

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
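Two details of the navigation calls above are easy to miss, so here is a small sketch of them on made-up markup (the HTML string and variable names are illustrative only): `class` is a multi-valued attribute and comes back as a list, and lookups that match nothing return `None` instead of raising.

```python
from bs4 import BeautifulSoup

# Illustrative markup, not taken from the article.
html = '<p class="title main" id="intro"><b>Hi</b></p><a href="/x" id="link1">X</a>'
soup = BeautifulSoup(html, "html.parser")

p = soup.p
classes = p["class"]               # multi-valued attribute: a list of class names
pid = p["id"]                      # single-valued attribute: a plain string
title_attr = p.get("title")        # .get() returns None for a missing attribute
missing = soup.find(id="nope")     # find() returns None when nothing matches
parent_name = soup.b.parent.name   # walk up from <b> to its enclosing tag

print(classes, pid, title_attr, missing, parent_name)
```

Checking for `None` before chaining further calls avoids the `AttributeError` that otherwise appears when a tag is absent from a page.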

Extract the URLs of all <a> tags in the document:

for link in soup.find_all('a'):

    print(link.get('href'))

# http://example.com/elsie

# http://example.com/lacie

# http://example.com/tillie
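On real pages some `<a>` tags have no `href`, and many links are relative. A hedged sketch of one way to handle both, assuming a hypothetical page address `base_url` and made-up markup: filter with `href=True` and resolve each link with `urllib.parse.urljoin`.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "http://example.com/stories/"  # hypothetical URL of the page being parsed

# Illustrative markup mixing absolute, relative, and missing hrefs.
html = """
<a href="http://example.com/elsie">Elsie</a>
<a href="lacie">Lacie</a>
<a name="anchor-only">no href here</a>
<a href="/tillie">Tillie</a>
"""
soup = BeautifulSoup(html, "html.parser")

# href=True keeps only <a> tags that actually carry an href attribute;
# urljoin turns relative links into absolute ones against base_url.
links = [urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)]
print(links)
```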

Extract all the text from the document:

print(soup.get_text())

# The Dormouse's story

#

# The Dormouse's story

#

# Once upon a time there were three little sisters; and their names were

# Elsie,

# Lacie and

# Tillie;

# and they lived at the bottom of a well.

#

# ...
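As the output above shows, plain `get_text()` keeps the whitespace and line breaks of the source. A short sketch of two ways to get tidier text, using made-up markup: the `separator` and `strip` arguments of `get_text()`, and the `stripped_strings` generator.

```python
from bs4 import BeautifulSoup

# Illustrative markup with stray whitespace around the text.
html = "<div><h1> Title </h1><p>First <b>bold</b> line.</p><p>  Second line.  </p></div>"
soup = BeautifulSoup(html, "html.parser")

# strip=True trims each text fragment and drops empty ones;
# separator is placed between the remaining fragments.
joined = soup.get_text(separator=" ", strip=True)

# stripped_strings yields the same trimmed fragments one at a time.
pieces = list(soup.stripped_strings)

print(joined)
print(pieces)
```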

3. A simple application

Target site: howbuy.com. The goal is to scrape each fund's code, name, and related details.

from selenium import webdriver

from bs4 import BeautifulSoup

import time

driver = webdriver.Chrome(executable_path=r"E:\Google Chrome\chromedriver_win32\chromedriver.exe")  # launch Chrome (Selenium 3 style; Selenium 4 passes the driver path through a Service object)

url = "https://www.howbuy.com/fund/fundranking/"

driver.get(url)

time.sleep(2)  # pause briefly so the page can finish loading

cookie=driver.get_cookies()

time.sleep(3)

# page source

html = driver.page_source

soup1 = BeautifulSoup(html,'lxml')

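for info in soup1.find_all('tr'):

    # find_all('td') returns a list, so look up the fund-name cell (class "tdl") directly

    td = info.find('td', class_='tdl')

    if td is None or td.find('a') is None:

        continue  # skip header rows and rows without a fund link

    link = td.find('a').get('href')

    name = td.find('a').contents[0]

    print("基金名称:{},基金的详细链接:{}".format(name, link))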

The corresponding row in the page's HTML looks like this:

<tr><td class="ck" width="4%"><input onclick="move(this);" type="checkbox" value="007350"/></td><td width="4%">1</td><td width="6%"><a href="https://www.howbuy.com/fund/007350" target="_blank">007350</a></td><td class="tdl" width="13%"><a href="https://www.howbuy.com/fund/007350" target="_blank">华夏科技创新混合C</a></td><td width="5%">01-26</td><td class="tdr" width="6%">2.5793</td><td class="tdr" width="7%"><span class="cRed">3.02%</span></td><td class="tdr" width="7%"><span class="cRed">13.21%</span></td><td class="tdr" width="7%"><span class="cRed">157.93%</span></td><td class="tdr" width="7%"><span class="cRed">157.93%</span></td><td class="tdr" width="7%"><span class="cRed">157.93%</span></td><td class="tdr" width="8%"><span class="cRed">7.49%</span></td><td class="tdr" width="10%"><span class="cRed">13.21%</span></td><td class="handle" width="9%"><a href="https://trade.ehowbuy.com/newpc/pcfund/module/pcfund/view/buyFund.html?fundCode=007350" target="_blank">购买</a><a class="add_select addzx_007350" href="javascript:void(0)" jjdm-data="007350" onclick='MoveBox(this,"007350")' target="_self">自选</a><a class="c666 delzx_007350" href="javascript:void(0)" onclick='delFund("007350")' style="display: none; color: rgb(102, 102, 102);" target="_self">已自选</a></td></tr>

Output:

基金名称:浦银安盛环保新能源混合A,基金的详细链接:https://www.howbuy.com/fund/007163
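The row-parsing step can be tried without a browser. The sketch below runs Beautiful Soup on a shortened copy of the row shown above, wrapped in a `<table>` with an added header row. The function name `parse_funds` and the idea of reading the fund code from the last path segment of the link are assumptions made for illustration, based only on that one sample row.

```python
from bs4 import BeautifulSoup

# Shortened copy of the sample row, plus a header row to show the skip logic.
row_html = """
<table>
<tr><th>code</th><th>name</th></tr>
<tr><td class="ck" width="4%"><input type="checkbox" value="007350"/></td>
<td width="4%">1</td>
<td width="6%"><a href="https://www.howbuy.com/fund/007350" target="_blank">007350</a></td>
<td class="tdl" width="13%"><a href="https://www.howbuy.com/fund/007350" target="_blank">华夏科技创新混合C</a></td>
<td width="5%">01-26</td></tr>
</table>
"""


def parse_funds(markup):
    """Return one dict per table row that has a fund-name cell (class "tdl")."""
    soup = BeautifulSoup(markup, "html.parser")
    funds = []
    for row in soup.find_all("tr"):
        name_cell = row.find("td", class_="tdl")
        anchor = name_cell.find("a") if name_cell else None
        if anchor is None:
            continue  # header rows and malformed rows have no name link
        link = anchor.get("href")
        funds.append({
            # Assumption: the fund code is the last path segment of the link.
            "code": link.rstrip("/").rsplit("/", 1)[-1],
            "name": anchor.get_text(strip=True),
            "link": link,
        })
    return funds


funds = parse_funds(row_html)
print(funds)
```

Keeping the parsing in a function that takes a string makes it easy to check against saved HTML before pointing it at `driver.page_source`.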

Reference:

- Beautiful Soup 4.2.0 documentation
