1 The body of code

#include"mpi.h"

#include"stdio.h"

#include<stdlib.h>

const int rows = 3; //the rows of matrix

const int cols = 2; //the cols of matrix

int main(int argc, char **argv)

{

int rank,size,anstag;

int A[rows][cols],B[cols],C[rows];

int masterpro,buf[cols],ans,cnt;

double starttime,endtime;

double tmp,totaltime;

MPI_Status status;

MPI_Init(&argc,&argv);

MPI_Comm_rank(MPI_COMM_WORLD,&rank);

MPI_Comm_size(MPI_COMM_WORLD,&size);

if(size<2){

printf("Error: Too few processors!\n");

MPI_Abort(MPI_COMM_WORLD,99);

}

if (0==rank){

for(int i=0;i<cols;i++)

{

B[i]=i;

for(int j=0;j<rows;j++){

A[j][i]=i+j;

}

}

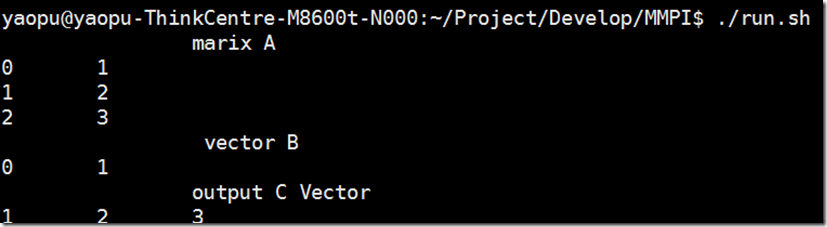

//output matrix A and vector B

printf("\t\tmatrix A\n");

for(int i=0;i<rows;i++){

for(int j=0;j<cols;j++){

printf("%d\t",A[i][j]);

}

printf("\n");

}

printf("\t\tvector B\n");

for(int i=0;i<cols;i++)

printf("%d\t",B[i]);

printf("\n");

//bcast the B vector to all slave processors

MPI_Bcast(B,cols,MPI_INT,0,MPI_COMM_WORLD);

//partition the A matrix to all slave processors

for(int i=1;i<size;i++)

{

for(int k=i-1;k<rows;k+=size-1)

{

for(int j=0;j<cols;j++)

{

buf[j]=A[k][j];

}

MPI_Send(buf,cols,MPI_INT,i,k,MPI_COMM_WORLD);

}

}

}

else{

MPI_Bcast(B,cols,MPI_INT,0,MPI_COMM_WORLD);

//each slave process receives its rows of the A matrix and multiplies each row by the B vector

for(int k= rank-1;k<rows;k+=size-1){

MPI_Recv(buf,cols,MPI_INT,0,k,MPI_COMM_WORLD,&status);

ans=0;

for(int j=0;j<cols;j++)

{

ans+=buf[j]*B[j]; //accumulate the dot product of the row with B

}

MPI_Send(&ans,1,MPI_INT,0,k,MPI_COMM_WORLD);

}

}

if(0==rank){

//receive the results from all slave processes

printf("\t\toutput C Vector\n");

for(int i=0;i<rows;i++)

{

MPI_Recv(&ans,1,MPI_INT,MPI_ANY_SOURCE,MPI_ANY_TAG,MPI_COMM_WORLD,&status);

anstag = status.MPI_TAG;

C[anstag] =ans;

printf("%d\t",C[i]);

}

printf("\n");

}

MPI_Finalize();

return 0;

}
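To check the output of the parallel program, it can help to compute the same product serially. The following is a minimal sketch, not part of the original program: it fills A and B the same way rank 0 does above and computes C = A*B in a single process. The names `ROWS`, `COLS`, `fill_inputs` and `matvec_serial` are introduced here for illustration only.

```c
#include <stdio.h>

enum { ROWS = 3, COLS = 2 };   /* same sizes as rows and cols in the listing */

/* Fill A and B the way rank 0 does in the listing: A[j][i] = i + j, B[i] = i. */
void fill_inputs(int A[ROWS][COLS], int B[COLS])
{
    for (int i = 0; i < COLS; i++) {
        B[i] = i;
        for (int j = 0; j < ROWS; j++)
            A[j][i] = i + j;
    }
}

/* Serial reference: C[r] is the dot product of row r of A with B. */
void matvec_serial(int A[ROWS][COLS], const int B[COLS], int C[ROWS])
{
    for (int r = 0; r < ROWS; r++) {
        C[r] = 0;
        for (int j = 0; j < COLS; j++)
            C[r] += A[r][j] * B[j];   /* accumulate with +=, not = */
    }
}

/* Print the reference result in the same tab-separated layout. */
void print_vector(const int C[ROWS])
{
    for (int r = 0; r < ROWS; r++)
        printf("%d\t", C[r]);
    printf("\n");
}
```

With these inputs, A is {{0,1},{1,2},{2,3}} and B is {0,1}, so the reference result is C = {1,2,3}. Because B[0] is 0 here, a loop that overwrites `ans` instead of accumulating it would give the same numbers for this particular data, which is why a different B is a better test of the dot product.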

2 Question Part

1) How should this part of the code be understood? It partitions the A matrix among the slave processes:

for(int i=1;i<size;i++)

{

for(int k=i-1;k<rows;k+=size-1)

{

for(int j=0;j<cols;j++)

{

buf[j]=A[k][j];

}

MPI_Send(buf,cols,MPI_INT,i,k,MPI_COMM_WORLD);

}

}

**************************************************************

A[rows][cols] is divided row by row.

buf[cols] holds one row of A at a time.

MPI_Send(buf,cols,MPI_INT,i,k,MPI_COMM_WORLD);

i ----- the destination of this message

k ----- the tag of this message, which is also the index of the row being sent

The same process (the same i) receives messages with different tags k, and consecutive values of k differ by a fixed stride of size-1.

So each slave process receives the rows i-1, i-1+(size-1), i-1+2(size-1), ..., i.e. an evenly spaced set of rows (not columns) of the A matrix.
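The row assignment described above can be sketched without MPI. This is only an illustration of the loop `for(int k=i-1;k<rows;k+=size-1)`; the helper names `owner_of_row`, `rows_for_rank` and `print_assignment` are made up for this example and do not appear in the original program.

```c
#include <stdio.h>

/* Rank that receives row k when there are `size` processes in total
   (rank 0 is the master, ranks 1..size-1 are the slave processes).
   This inverts the loop "for (k = i-1; k < rows; k += size-1)". */
int owner_of_row(int k, int size)
{
    return k % (size - 1) + 1;
}

/* Number of rows that the process with the given rank receives. */
int rows_for_rank(int rank, int rows, int size)
{
    int count = 0;
    for (int k = rank - 1; k < rows; k += size - 1)
        count++;
    return count;
}

/* Print which rows (and therefore which message tags) go to each rank. */
void print_assignment(int rows, int size)
{
    for (int i = 1; i < size; i++) {
        printf("rank %d gets rows:", i);
        for (int k = i - 1; k < rows; k += size - 1)
            printf(" %d", k);
        printf("\n");
    }
}
```

For example, with rows = 3 and size = 3 (one master and two slave processes), rank 1 gets rows 0 and 2 and rank 2 gets row 1. With size = 2, the single slave process gets every row.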

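2) After MPI_Finalize(), has the whole parallel environment stopped?

MPI_Finalize() means that all the resources set up by MPI_Init() are released. But it does not mean that only one process keeps working after MPI_Finalize(): the MPI standard only guarantees that rank 0 returns from the call, and in common implementations every process carries on with the code that follows it.

For details on this question, see the following thread: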

http://bbs.csdn.net/topics/391038020

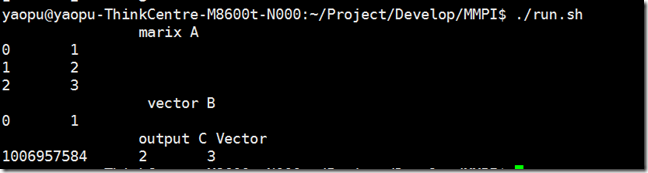

3 More information

Sometimes it works out like this. So what is wrong with the code?
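One possible explanation, offered as an assumption rather than a confirmed diagnosis: the master stores each result at C[anstag] but prints C[i] inside the same loop. Because the receive uses MPI_ANY_SOURCE and MPI_ANY_TAG, results can arrive in any order, so at iteration i the slot C[i] may not have been written yet. The sketch below simulates this without MPI. The arrays `tags` and `values` stand in for the order in which messages happen to arrive, and `UNSET` stands in for whatever an uninitialised C[i] contains; all names are hypothetical.

```c
#include <stdio.h>

enum { ROWS = 3 };
#define UNSET (-1)   /* placeholder for the garbage in an uninitialised C[i] */

/* Mimics the master's loop: store the result at C[tag], then read C[i]
   in the same iteration. Returns how many reads hit a slot not yet filled. */
int print_while_receiving(const int tags[ROWS], const int values[ROWS],
                          int printed[ROWS])
{
    int C[ROWS] = { UNSET, UNSET, UNSET };
    int premature = 0;
    for (int i = 0; i < ROWS; i++) {
        C[tags[i]] = values[i];   /* C[anstag] = ans */
        printed[i] = C[i];        /* what printf("%d\t", C[i]) would show */
        if (C[i] == UNSET)
            premature++;
    }
    return premature;
}

/* Alternative: store every result first, read C only afterwards. */
void print_after_receiving(const int tags[ROWS], const int values[ROWS],
                           int printed[ROWS])
{
    int C[ROWS] = { UNSET, UNSET, UNSET };
    for (int i = 0; i < ROWS; i++)
        C[tags[i]] = values[i];
    for (int i = 0; i < ROWS; i++)
        printed[i] = C[i];
}
```

If the results happen to arrive in the order of their tags (0, 1, 2), both versions give the same output, which would explain why the program only misbehaves sometimes. In the MPI program, the equivalent change would be to move the printf of C[i] into a separate loop that runs after all `rows` results have been received.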
