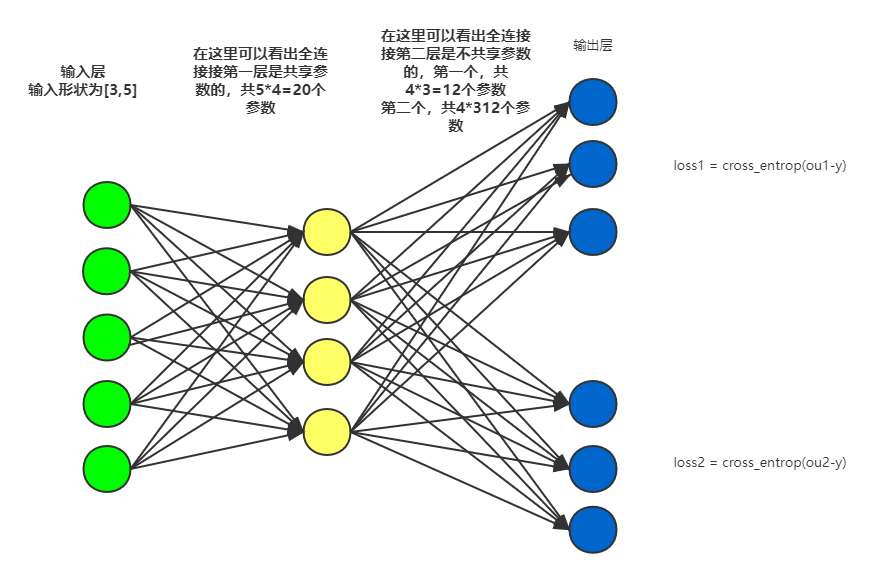

1. A fully connected network with one input and two outputs

import torch
import torch.nn as nn
import torch.nn.functional as F


class LinearNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(5, 4)  # shared layer feeding both branches
        self.fc2 = nn.Linear(4, 3)  # branch producing out1
        self.fc3 = nn.Linear(4, 3)  # branch producing out2

    def forward(self, x):
        mid = self.fc1(x)
        out1 = self.fc2(mid)
        out2 = self.fc3(mid)
        return out1, out2


x = torch.randn((3, 5))
y = torch.randint(3, (3,), dtype=torch.int64)  # class labels in {0, 1, 2}

model = LinearNet()
model.train()
optim = torch.optim.RMSprop(model.parameters(), lr=0.001)

print(model.fc2.weight)
print(model.fc3.weight)

for i in range(5):
    out1, out2 = model(x)
    loss1 = F.cross_entropy(out1, y)
    loss2 = F.cross_entropy(out2, y)
    loss = 0 * loss1 + 1 * loss2  # a weight of 0 switches off loss1
    optim.zero_grad()
    # Alternative: back-propagate the two losses separately.
    # loss1.backward(retain_graph=True)
    # loss2.backward()
    # print(loss)
    loss.backward()
    optim.step()

print("-------------after-----------")
print(model.fc2.weight)
print(model.fc3.weight)

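The code above is a simple fully connected network with two output branches. The weights on loss1 and loss2 control how much each output contributes to the network's gradients. If the weight of loss1 or loss2 is set to 0, the parameters that only that loss reaches (fc2 for loss1, fc3 for loss2) receive zero gradient and stop updating, while the shared layer fc1 is still trained through the other loss.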
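The effect of a zero weight can be checked directly on the gradients rather than by comparing printed weights. The sketch below is a minimal illustration and not the original author's code: `shared`, `head1`, and `head2` are hypothetical stand-ins for `fc1`, `fc2`, and `fc3`, and `w1`, `w2` are illustrative loss weights.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Hypothetical stand-ins for the shared layer and the two branches.
shared = nn.Linear(5, 4)
head1 = nn.Linear(4, 3)
head2 = nn.Linear(4, 3)

x = torch.randn(3, 5)
y = torch.randint(3, (3,))  # class labels in {0, 1, 2}

mid = shared(x)
loss1 = F.cross_entropy(head1(mid), y)
loss2 = F.cross_entropy(head2(mid), y)

# Illustrative weights: loss1 is switched off, loss2 is fully on.
w1, w2 = 0.0, 1.0
loss = w1 * loss1 + w2 * loss2
loss.backward()

# head1 is reached only through loss1, so its gradient is all zeros.
head1_grad_is_zero = bool(torch.all(head1.weight.grad == 0))
# head2 and the shared layer still receive gradient through loss2.
head2_grad_is_nonzero = bool(head2.weight.grad.abs().sum() > 0)
shared_grad_is_nonzero = bool(shared.weight.grad.abs().sum() > 0)

print(head1_grad_is_zero, head2_grad_is_nonzero, shared_grad_is_nonzero)
```

Note that the switched-off branch gets a gradient tensor of zeros, not `None`, because `w1 * loss1` is still part of the computation graph.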

2. A convolutional network with one input and two outputs

import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        # Call the base-class constructor, i.e. nn.Module.__init__(self)
        super().__init__()
        # 1 input image channel, 6 output channels, 5x5 square convolution kernel
        self.conv1 = nn.Conv2d(1, 6, 5)
        # 6 input channels, 16 output channels, 5x5 square convolution kernel
        self.conv2 = nn.Conv2d(6, 16, 5)  # branch producing x1
        self.conv3 = nn.Conv2d(6, 16, 5)  # branch producing x2
        # an affine operation: y = Wx + b
        # self.fc1 = nn.Linear(16 * 5 * 5, 120)
        # self.fc2 = nn.Linear(120, 84)
        # self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # x is the network input; it is propagated forward to produce the outputs
        # Shared stem: convolution, ReLU, then 2x2 max pooling
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # If the pooling window is square, a single number is enough
        x1 = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x2 = F.max_pool2d(F.relu(self.conv3(x)), 2)
        # x = x.view(-1, self.num_flat_features(x))
        # x = F.relu(self.fc1(x))
        # x = F.relu(self.fc2(x))
        # x = self.fc3(x)
        return x1, x2

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features


if __name__ == '__main__':
    net = Net()
    print(net)
    net.train()
    optim = torch.optim.RMSprop(net.parameters(), lr=0.001)

    # Plain tensors are enough; the deprecated Variable wrapper is not needed
    x = torch.randn(1, 1, 64, 64)
    # y1, y2 = net(x)
    y = torch.randn(1, 16, 13, 13)  # target with the same shape as each branch output
    # print(y)
    # print(y1.shape, y2.shape)

    print(net.conv2.weight)
    print(net.conv3.weight)

    mseloss = torch.nn.MSELoss()
    for i in range(4):
        y1, y2 = net(x)
        print(y1[0].shape, y.shape)
        loss1 = mseloss(y1, y)
        loss2 = mseloss(y2, y)
        loss = 0 * loss1 + 1 * loss2  # a weight of 0 switches off loss1
        optim.zero_grad()
        # Alternative: back-propagate the two losses separately.
        # loss1.backward(retain_graph=True)
        # loss2.backward()
        # print(loss)
        loss.backward()
        optim.step()

    print("-------------after-----------")
    print(net.conv2.weight)
    print(net.conv3.weight)

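The code above is a simple convolutional network with two output branches. As with the fully connected network, the weights on loss1 and loss2 control how much each output contributes to the network's gradients. In addition, back-propagating once through the weighted sum of the losses yields the same gradients as back-propagating loss1 and loss2 separately, provided each separate loss is scaled by the same weight: autograd accumulates gradients in `.grad` across backward calls, and the gradient of a sum is the sum of the gradients.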
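The equivalence between one combined backward pass and two separate ones can be verified numerically. The following is a minimal sketch under assumptions: the tiny network, the targets `t1` and `t2`, and the weights `w1` and `w2` are all hypothetical and chosen only to keep the example short. Two identical copies of the network are used so that the two strategies start from the same parameters.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Hypothetical tiny network; net_b is an exact copy of net_a.
net_a = nn.Sequential(nn.Conv2d(1, 2, 3), nn.ReLU())
net_b = copy.deepcopy(net_a)

x = torch.randn(1, 1, 8, 8)    # 8x8 input -> 6x6 output after a 3x3 convolution
t1 = torch.randn(1, 2, 6, 6)   # illustrative target for the first loss
t2 = torch.randn(1, 2, 6, 6)   # illustrative target for the second loss
w1, w2 = 0.3, 0.7              # illustrative loss weights
mse = nn.MSELoss()

# Strategy A: a single backward pass through the weighted sum.
out_a = net_a(x)
(w1 * mse(out_a, t1) + w2 * mse(out_a, t2)).backward()

# Strategy B: two backward passes; gradients accumulate in .grad.
# retain_graph=True keeps the shared graph alive for the second call.
out_b = net_b(x)
(w1 * mse(out_b, t1)).backward(retain_graph=True)
(w2 * mse(out_b, t2)).backward()

# Compare the gradients of corresponding parameters.
same = all(
    torch.allclose(pa.grad, pb.grad, atol=1e-6)
    for pa, pb in zip(net_a.parameters(), net_b.parameters())
)
print(same)
```

The single combined pass is usually the more convenient choice, since it walks the shared part of the graph once and does not need `retain_graph=True`.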

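Reference: this post is based on another blogger's write-up on the multi-loss backpropagation mechanism (多损失回传机制). Thanks to that author.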

