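Contents

How Flume Works

Installing Flume

Download and Extract

Configure Environment Variables

Configure flume-env.sh

Verify the Version

Flume Deployment Examples

Avro

Spool

Exec

Running Flume in the Background

Writing to MongoDB

Configuring Flume on Windows

Configuring Flume Environment Variables

Chaining Two Flume Agents

Monitoring a File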

Flume

CentOS: https://blog.csdn.net/qq_39160721/article/details/80255194

Linux: https://blog.csdn.net/u011254180/article/details/80000763

Official site: http://flume.apache.org/

Documentation: http://flume.apache.org/FlumeUserGuide.html

Honeypot system: Linux

The project uses Flume 1.8.

Download details: https://blog.csdn.net/qq_41910230/article/details/80920873

https://yq.aliyun.com/ask/236859

Channel configuration in detail: http://www.cnblogs.com/gongxijun/p/5661037.html

Reference for downloading directly on Linux (Linux公社): https://www.linuxidc.com/Linux/2016-12/138722.htm

Installation package and JAR files used in the current setup: https://download.csdn.net/download/lagoon_lala/10949262

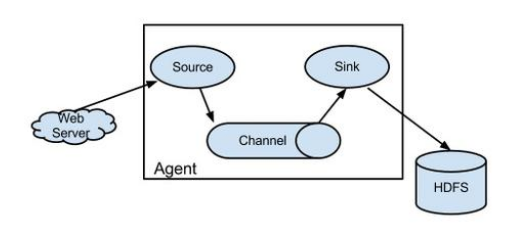

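How Flume Works

A Flume data flow is built around events. An event is Flume's basic unit of data: it carries the log payload as a byte array together with optional headers. Events are produced by a data generator outside the Agent (for example a web server). When a Source receives an event it applies source-specific formatting and then pushes the event into one or more Channels. A Channel can be thought of as a buffer that holds the event until a Sink has processed it. The Sink either persists the data or forwards the event to the Source of another Agent. The core concepts are:

(1) Event: a unit of data with optional headers; it can be a log record, an Avro object, and so on.

(2) Agent: an independent Flume process running in a JVM; it contains the Source, Channel and Sink components.

(3) Client: runs in its own thread, produces data and sends it to the Agent.

(4) Source: consumes the events delivered to it, that is, it collects data from the Client and hands it to the Channel.

(5) Channel: temporary storage that relays events. It holds the events passed in by the Source and connects the Source to the Sink, much like a message queue.

(6) Sink: takes events from the Channel; it runs in its own thread.

The Agent is the smallest independently running unit in Flume, and one Agent is one JVM. A single Agent consists of the three components Source, Sink and Channel, as shown in the figure.

It is worth noting that Flume ships with a large number of built-in Source, Channel and Sink types, and they can be combined freely. The combination is defined in a user-supplied configuration file, which makes it very flexible. For example, a Channel can keep events in memory or persist them to local disk, and a Sink can write logs to HDFS, HBase, Elasticsearch, or even to the Source of another Agent. Flume also supports multi-hop flows, in which several Agents work together.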
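As a hedged illustration of mixing components, the sketch below writes a hypothetical agent definition that pairs a netcat source and a logger sink with a file channel instead of a memory channel. The agent name a1, the output file name and the checkpoint and data directories are assumptions chosen for the example, not values from this setup.

```shell
#!/usr/bin/env bash
# Sketch: generate an agent config that uses a file channel instead of a memory channel.
# The directories and the output path are placeholders, not values from this post.
conf_file="${TMPDIR:-/tmp}/file-channel-example.conf"

cat > "$conf_file" <<'EOF'
a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

a1.sinks.k1.type = logger

# File channel: events are persisted on local disk instead of held in memory
a1.channels.c1.type = file
a1.channels.c1.checkpointDir = /tmp/flume/checkpoint
a1.channels.c1.dataDirs = /tmp/flume/data

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

echo "wrote $conf_file"
```

Only the three a1.channels.c1.* lines differ from a memory-channel setup; the source and sink definitions do not need to know which channel type sits between them.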

Installing Flume

Download and Extract

Download tool: wget

Only the binary (bin) package of Flume is needed.

Flume download (mirror of the official release): http://mirrors.hust.edu.cn/apache/flume/1.8.0/apache-flume-1.8.0-bin.tar.gz

The reference tutorial uses the CDH build:

$ wget http://archive.cloudera.com/cdh5/cdh/5/flume-ng-1.6.0-cdh5.7.1.tar.gz

| What I ran and its output: $ wget http://mirrors.hust.edu.cn/apache/flume/1.8.0/apache-flume-1.8.0-bin.tar.gz --2018-12-24 15:36:52-- apache-flume-1.8.0-bi 100%[=======================>] 55.97M 5.21MB/s in 12s 2018-12-24 15:37:04 (4.83 MB/s) - ‘apache-flume-1.8.0-bin.tar.gz’ saved [58688757/58688757] $ ls now lists apache-flume-1.8.0-bin.tar.gz |

$ tar -xvf flume-ng-1.6.0-cdh5.7.1.tar.gz

| What I ran and its output: $ tar -xvf apache-flume-1.8.0-bin.tar.gz $ ls now lists apache-flume-1.8.0-bin apache-flume-1.8.0-bin.tar.gz |

$ rm flume-ng-1.6.0-cdh5.7.1.tar.gz

$ mv apache-flume-1.6.0-cdh5.7.1-bin flume-1.6.0-cdh5.7.1

(I skipped the delete and rename steps.)
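The download and extract steps can be wrapped in a small helper. This is only a sketch: the function names are made up for the example, and the Apache archive host is an assumption that stands in for whichever mirror is reachable.

```shell
#!/usr/bin/env bash
# Sketch: build the download URL for a given Flume release and fetch it only if missing.
# archive.apache.org keeps old releases; treat the exact host as an assumption.
flume_url() {
  local version="$1"
  echo "https://archive.apache.org/dist/flume/${version}/apache-flume-${version}-bin.tar.gz"
}

fetch_flume() {
  local version="$1"
  local tarball="apache-flume-${version}-bin.tar.gz"
  [ -f "$tarball" ] || wget "$(flume_url "$version")"
  tar -xzf "$tarball"          # creates apache-flume-<version>-bin/
}

# Print the URL for the version used in this project; call fetch_flume 1.8.0 to download.
flume_url 1.8.0
```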

Configure Environment Variables

$ cd /home/hadoop

$ vim .bash_profile (I could not find this file; the system probably uses .profile, but .bash_profile works as well)

export FLUME_HOME=/home/hadoop/app/cdh/flume-1.6.0-cdh5.7.1

export PATH=$PATH:$FLUME_HOME/bin

| What I ran: $ cd ~ $ vim .bash_profile export FLUME_HOME=~/software/apache-flume-1.8.0-bin export PATH=$PATH:$FLUME_HOME/bin |

$ source .bash_profile

| Output: -bash: export: `/home/user/software/apache-flume-1.8.0-bin': not a valid identifier The error went away after I deleted the space after the equals sign in the FLUME_HOME line. |
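The "not a valid identifier" error comes from the shell treating the text after the space as a second variable name. A minimal sketch of the working form, assuming the install directory used above:

```shell
#!/usr/bin/env bash
# Sketch: shell assignments must not have spaces around '='.
# Wrong:  export FLUME_HOME= ~/software/apache-flume-1.8.0-bin   (-> "not a valid identifier")
# Right:
export FLUME_HOME="$HOME/software/apache-flume-1.8.0-bin"
export PATH="$PATH:$FLUME_HOME/bin"

echo "FLUME_HOME=$FLUME_HOME"
```

Using $HOME inside double quotes instead of ~ keeps the value an absolute path no matter how the line is quoted.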


Configure flume-env.sh

Edit flume-env.sh under conf and set JAVA_HOME in it.

* echo $JAVA_HOME printed nothing, so a JDK had to be installed first:

Linux JDK download: https://blog.csdn.net/mlym521/article/details/82622625

Installation: https://blog.csdn.net/weixin_37352094/article/details/80372821

A plain wget does not work because Oracle requires the license to be accepted:

| wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.tar.gz |

| tar -zxvf jdk-8u131-linux-x64.tar.gz |

Commands from the reference tutorial:

$ cd app/cdh/flume-1.6.0-cdh5.7.1/conf/

$ cp flume-env.sh.template flume-env.sh

$ vim flume-env.sh

export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

export HADOOP_HOME=/home/hadoop/app/cdh/hadoop-2.6.0-cdh5.7.1

| What I ran (JDK location: /home/user/jdk1.8.0_171, Hadoop location: /home/user/hadoop): ~/software/apache-flume-1.8.0-bin/conf$ cp flume-env.sh.template flume-env.sh $ vim flume-env.sh export JAVA_HOME=/home/user/jdk1.8.0_171 export HADOOP_HOME=/home/user/hadoop |

| Original text of the file: # If this file is placed at FLUME_CONF_DIR/flume-env.sh, it will be sourced during Flume startup. # Enviroment variables can be set here. # export JAVA_HOME=/usr/lib/jvm/java-8-oracle

# Give Flume more memory and pre-allocate, enable remote monitoring via JMX # export JAVA_OPTS="-Xms100m -Xmx2000m -Dcom.sun.management.jmxremote"

# Let Flume write raw event data and configuration information to its log files for debugging purposes. Enabling these flags is not recommended in production, # as it may result in logging sensitive user information or encryption secrets. # export JAVA_OPTS="$JAVA_OPTS -Dorg.apache.flume.log.rawdata=true -Dorg.apache.flume.log.printconfig=true "

# Note that the Flume conf directory is always included in the classpath. #FLUME_CLASSPATH="" |

| Notes from the second setup (JDK location: ~/java/jdk1.8.0_131): ~/flume/flume-ng-1.6.0-cdh5.7.1/conf]$ vim flume-env.sh |

| Error: [tsec@obedientcorrespondent:~]$ flume-ng version Error: Unable to find java executable. Is it in your PATH? The Java environment variables need to be set: [root@localhost bin]# vi /etc/profile Append at the end of the file: JAVA_HOME=~/java/jdk1.8.0_131 PATH=$PATH:$JAVA_HOME/bin CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME export PATH export CLASSPATH [root@localhost bin]# source /etc/profile (applies the change immediately) [root@localhost bin]# java -version java version "1.8.0_191" Java(TM) SE Runtime Environment (build 1.8.0_191-b12) Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode) (confirms that the change took effect) |

| Error: the file is not writable. I ran: sudo chmod 777 /etc/profile (editing the file with sudo instead would avoid making it world-writable) |

| Configuration succeeded |
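Before writing a JDK path into flume-env.sh or /etc/profile, it can help to confirm that the path really contains a java executable. The helper below is a sketch; check_java_home is a hypothetical name, and the example path is the JDK location mentioned in these notes.

```shell
#!/usr/bin/env bash
# Sketch: check that a candidate JAVA_HOME really contains an executable bin/java
# before writing it into flume-env.sh or /etc/profile.
check_java_home() {
  local candidate="$1"
  if [ -x "$candidate/bin/java" ]; then
    echo "ok: $candidate/bin/java"
  else
    echo "missing: $candidate/bin/java" >&2
    return 1
  fi
}

# Example call with the JDK path from this post; prints a hint on other machines.
check_java_home "$HOME/java/jdk1.8.0_131" || echo "adjust JAVA_HOME for this machine"
```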

Verify the Version

$ flume-ng version

| Output: -bash: flume: command not found |

The version check failed, so I copied .bash_profile to the home directory:

| What I ran: ~/hadoop$ cp .bash_profile ~/ ~$ source .bash_profile |

The version check now succeeds:

| Output: Flume 1.8.0 Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git Revision: 99f591994468633fc6f8701c5fc53e0214b6da4f Compiled by denes on Fri Sep 15 14:58:00 CEST 2017 From source with checksum fbb44c8c8fb63a49be0a59e27316833d |

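Flume Deployment Examples

Avro

Flume can listen on a port and capture the data sent to it. Note that the configuration in this first example uses a netcat source; Avro sources and sinks appear in the later examples. The steps are:

// Create a Flume configuration file

$ cd app/cdh/flume-1.6.0-cdh5.7.1

$ mkdir example

$ cp conf/flume-conf.properties.template example/netcat.conf

| What I ran: $ cd ~/software/apache-flume-1.8.0-bin $ mkdir example $ cp conf/flume-conf.properties.template example/netcat.conf |

| Check: ~/software/apache-flume-1.8.0-bin/example$ ls netcat.conf |

// Configure netcat.conf to receive, in real time, the data typed in another terminal

$ vim example/netcat.conf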

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel that buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

| What I ran: $ vim netcat.conf The template originally contained: # The configuration file needs to define the sources, # the channels and the sinks. # Sources, channels and sinks are defined per agent, # in this case called 'agent' agent.sources = seqGenSrc agent.channels = memoryChannel agent.sinks = loggerSink # For each one of the sources, the type is defined agent.sources.seqGenSrc.type = seq # The channel can be defined as follows. agent.sources.seqGenSrc.channels = memoryChannel # Each sink's type must be defined agent.sinks.loggerSink.type = logger #Specify the channel the sink should use agent.sinks.loggerSink.channel = memoryChannel # Each channel's type is defined. agent.channels.memoryChannel.type = memory # Other config values specific to each type of channel(sink or source) can be defined as well # In this case, it specifies the capacity of the memory channel agent.channels.memoryChannel.capacity = 100 |

| I replaced the contents with the configuration shown above |

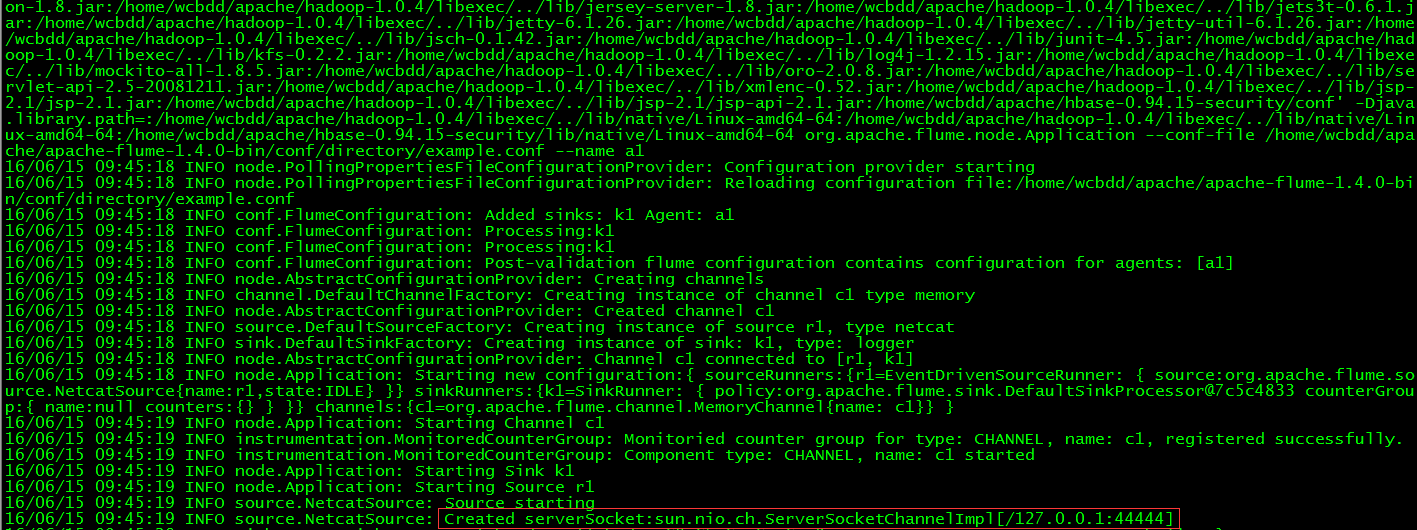

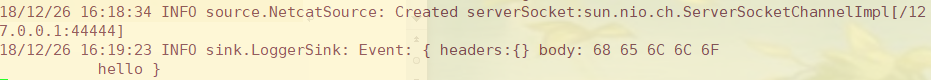

// 运行FlumeAgent,监听本机的44444端口

$ flume-ng agent -c conf -f example/netcat.conf -n a1 -Dflume.root.logger=INFO,console

| 操作(绝对路径,根据实际替换): $flume-ng agent -c conf -f ~/software/apache-flume-1.8.0-bin/example/netcat.conf -n a1 -Dflume.root.logger=INFO,console |

![]()

// 打开另一终端,通过telnet登录localhost的44444,输入测试数据

$ telnet localhost 44444

|

|

安装telnet后还是这样,尝试用Windows往虚拟机端口写

|

|

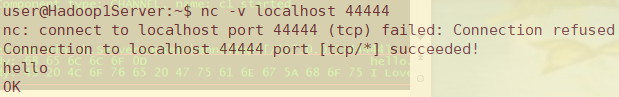

| 改用nc登录localhost的44444,输入测试数据: nc -v localhost 44444 |

// 查看flume收集数据情况
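When neither telnet nor nc is available, bash can open the TCP connection itself. The sketch below assumes the agent from this example is listening on localhost:44444; send_line is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: send one line to the netcat source using bash's built-in /dev/tcp,
# which avoids depending on telnet or nc being installed.
send_line() {
  local host="$1" port="$2" text="$3"
  # The subshell keeps file descriptor 3 from leaking into the calling shell.
  ( exec 3<>"/dev/tcp/$host/$port" && printf '%s\n' "$text" >&3 ) 2>/dev/null
}

if send_line localhost 44444 "hello flume"; then
  echo "sent; check the agent console for the logged event"
else
  echo "could not connect to localhost:44444 (is the agent running?)"
fi
```

The function returns a non-zero status when the connection is refused, so it can also serve as a quick check of whether the source is listening.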

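Spool

The spooling directory source watches a configured directory for new files and reads the data out of them. Two points need attention: a file must not be opened and edited again after it has been copied into the spool directory, and the spool directory should not contain subdirectories. The steps are:

// Create two Flume configuration files

$ cd app/cdh/flume-1.6.0-cdh5.7.1

$ cp conf/flume-conf.properties.template example/spool1.conf

$ cp conf/flume-conf.properties.template example/spool2.conf

| What I ran: $ cd ~/software/apache-flume-1.8.0-bin $ cp conf/flume-conf.properties.template example/spool1.conf $ cp conf/flume-conf.properties.template example/spool2.conf |

// Configure spool1.conf to watch the files in the avro_data directory and send their contents to local port 60000.

// The watched directory must be changed to match your machine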

| What I ran: $ vim example/spool1.conf

# Name the components
local1.sources = r1
local1.sinks = k1
local1.channels = c1
# Source (absolute path; Flume does not expand ~ in a properties file)
local1.sources.r1.type = spooldir
local1.sources.r1.spoolDir = /home/user/avro_data
# Sink
local1.sinks.k1.type = avro
local1.sinks.k1.hostname = localhost
local1.sinks.k1.port = 60000
# Channel
local1.channels.c1.type = memory
# Bind the source and sink to the channel
local1.sources.r1.channels = c1
local1.sinks.k1.channel = c1 |

// Configure spool2.conf to read from local port 60000 and write to HDFS

Create an HDFS test directory

| $ vim example/spool2.conf

# Name the components
a1.sources = r1
a1.sinks = k1
a1.channels = c1
# Source
a1.sources.r1.type = avro
a1.sources.r1.bind = localhost
a1.sources.r1.port = 60000
# Sink
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://localhost:9000/user/wcbdd/flumeData
a1.sinks.k1.hdfs.rollInterval = 0
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.fileType = DataStream
# Channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 10000
# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1 |

// Open two terminals and start the two Flume agents with the commands below

$ flume-ng agent -c conf -f example/spool2.conf -n a1

$ flume-ng agent -c conf -f example/spool1.conf -n local1

| What I ran: cd ~/software/apache-flume-1.8.0-bin $ flume-ng agent -c conf -f example/spool2.conf -n a1 $ flume-ng agent -c conf -f example/spool1.conf -n local1 |

// Look at the avro_data directory to be watched on the local file system (it did not exist, so I created the directory both locally and on HDFS)

$ cd avro_data

$ cat avro_data.txt

| Output: -bash: cd: avro_data/: No such file or directory cat: avro_data.txt: No such file or directory |

| What I ran: ~$ mkdir avro_data ~/avro_data$ touch avro_data.txt |

Create the HDFS directory

Original path: hdfs://localhost:9000/user/wcbdd/flumeData

Change the watched directory and the write path in the spool configuration files to match.

Look up the HDFS command for creating a directory.

Sink configuration in detail:

https://blog.csdn.net/xiaolong_4_2/article/details/81945204
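The directory preparation can be sketched as follows. It assumes the spool directory lives in the current user's home and reuses the HDFS path quoted above; the location of the generated file is a placeholder. Flume reads the properties file literally, so the spool directory is written as an absolute path rather than with ~.

```shell
#!/usr/bin/env bash
# Sketch: create the local spool directory and write spool1.conf with an absolute path.
# Flume reads the properties file literally, so "~" would not be expanded there.
spool_dir="$HOME/avro_data"
conf_file="${TMPDIR:-/tmp}/spool1.conf"     # placeholder location for the example

mkdir -p "$spool_dir"

cat > "$conf_file" <<EOF
local1.sources = r1
local1.sinks = k1
local1.channels = c1

local1.sources.r1.type = spooldir
local1.sources.r1.spoolDir = $spool_dir

local1.sinks.k1.type = avro
local1.sinks.k1.hostname = localhost
local1.sinks.k1.port = 60000

local1.channels.c1.type = memory

local1.sources.r1.channels = c1
local1.sinks.k1.channel = c1
EOF

# Create the HDFS target directory only when the hdfs client is available.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -mkdir -p /user/wcbdd/flumeData
fi
```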

Exec

Original path: hdfs://localhost:9000/user/wcbdd/flumeData

Target directory: hdfs://master:9000/flume/suricata/%y-%m-%d


avro-hdfs.conf

| agent1.sources=source1
agent1.channels=channel1
agent1.sinks=sink1

# Collect the logs sent over from the honeypot
agent1.sources.source1.type = avro
agent1.sources.source1.channels = channel1
agent1.sources.source1.bind = 10.2.68.104
agent1.sources.source1.port = 5000
agent1.sources.source1.fileHeader = false
agent1.sources.source1.interceptors = i1
agent1.sources.source1.interceptors.i1.type = timestamp

# Memory channel; this can be changed to a file channel
agent1.channels.channel1.type = memory
agent1.channels.channel1.capacity = 10000
agent1.channels.channel1.transactionCapacity = 1000

agent1.sinks.sink1.type = hdfs
agent1.sinks.sink1.channel = channel1
agent1.sinks.sink1.hdfs.path = hdfs://master:9000/flume/suricata/%y-%m-%d
agent1.sinks.sink1.hdfs.filePrefix = %Y-%m-%d

# Switch to a new directory every 60 minutes
# agent1.sinks.sink1.hdfs.round = true
# agent1.sinks.sink1.hdfs.roundValue = 60
# agent1.sinks.sink1.hdfs.roundUnit = minute

# Change the file type (fixes garbled output)
agent1.sinks.sink1.hdfs.fileType=DataStream
# Seconds to wait before rolling the file
agent1.sinks.sink1.hdfs.rollInterval = 120

# Size limit for rolling, in bytes; 0 disables size-based rolling
agent1.sinks.sink1.hdfs.rollSize = 0
# Disable rolling by event count
agent1.sinks.sink1.hdfs.rollCount = 0
# Set the minimum number of HDFS block replicas to 1 so that files are not rolled while replicas are being created
agent1.sinks.sink1.hdfs.minBlockReplicas = 1 |

启动:

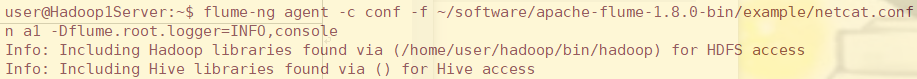

| $flume-ng agent -c conf -f ~/software/apache-flume-1.8.0-bin/conf/avro-hdfs.conf -n agent1 -Dflume.root.logger=INFO,console |

| 报错: 19/03/06 22:16:18 INFO source.AvroSource: Starting Avro source source1: { bindAddress: 10.2.68.104, port: 5000 }... 19/03/06 22:16:19 ERROR source.AvroSource: Avro source source1 startup failed. Cannot initialize Netty server org.jboss.netty.channel.ChannelException: Failed to bind to: /10.2.68.104:5000 |

| 成功启动: 19/03/06 19:00:16 INFO node.Application: Starting Sink sink1 19/03/06 19:00:16 INFO node.Application: Starting Source source1 19/03/06 19:00:16 INFO source.AvroSource: Starting Avro source source1: { bindAddress: 10.2.68.104, port: 5000 }... 19/03/06 19:00:16 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: sink1: Successfully registered new MBean. 19/03/06 19:00:16 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: sink1 started 19/03/06 19:00:16 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: source1: Successfully registered new MBean. 19/03/06 19:00:16 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: source1 started 19/03/06 19:00:16 INFO source.AvroSource: Avro source source1 started. |

tail-avro.conf

On the honeypot, configure tail-avro.conf (change the destination IP):

| agent1.sources=source1
agent1.channels=channel1
agent1.sinks=sink1

# Describe/configure source1
# type exec runs a Linux command such as 'tail -F' to collect log data
agent1.sources.source1.type=exec
agent1.sources.source1.command=tail -F /data/suricata/log/eve.json
agent1.sources.source1.channels=channel1

# Use a channel that buffers events in memory
# type memory or file: temporary buffer for the data the sink reads
agent1.channels.channel1.type=memory
agent1.channels.channel1.capacity=10000
agent1.channels.channel1.transactionCapacity=1000

agent1.sinks.sink1.type=avro
agent1.sinks.sink1.channel=channel1
agent1.sinks.sink1.hostname=10.2.68.104
agent1.sinks.sink1.port=5000
agent1.sinks.sink1.batch-size=5 |

Start:

| $ flume-ng agent -c conf -f ~/flume/flume-ng-1.6.0-cdh5.7.1/conf/tail-avro.conf -n agent1 -Dflume.root.logger=INFO,console |

| Output: log4j:WARN No appenders could be found for logger (org.apache.flume.lifecycle.LifecycleSupervisor). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. Cause: the path given to -c was not found. |

| $ flume-ng agent -c ~/flume/flume-ng-1.6.0-cdh5.7.1/conf -f ~/flume/flume-ng-1.6.0-cdh5.7.1/conf/tail-avro.conf -n agent1 -Dflume.root.logger=INFO,console |

| Output on successful start: 2019-03-06 12:48:48,271 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.sink.AbstractRpcSink.createConnection(AbstractRpcSink.java:206)] Rpc sink sink1: Building RpcClient with hostname: 10.2.68.104, port: 5000 2019-03-06 12:48:48,271 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.sink.AvroSink.initializeRpcClient(AvroSink.java:126)] Attempting to create Avro Rpc client. 2019-03-06 12:48:48,278 (lifecycleSupervisor-1-3) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:120)] Monitored counter group for type: SOURCE, name: source1: Successfully registered new MBean. 2019-03-06 12:48:48,278 (lifecycleSupervisor-1-3) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:96)] Component type: SOURCE, name: source1 started 2019-03-06 12:48:48,318 (lifecycleSupervisor-1-1) [WARN - org.apache.flume.api.NettyAvroRpcClient.configure(NettyAvroRpcClient.java:634)] Using default maxIOWorkers 2019-03-06 12:48:49,221 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.sink.AbstractRpcSink.start(AbstractRpcSink.java:303)] Rpc sink sink1 started. |

Both sides connected successfully:

| 19/03/06 20:46:54 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 => /10.2.68.104:5000] OPEN 19/03/06 20:46:54 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 => /10.2.68.104:5000] BOUND: /10.2.68.104:5000 19/03/06 20:46:54 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 => /10.2.68.104:5000] CONNECTED: /10.2.192.196:47608 19/03/06 20:48:38 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 :> /10.2.68.104:5000] DISCONNECTED 19/03/06 20:48:38 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 :> /10.2.68.104:5000] UNBOUND 19/03/06 20:48:38 INFO ipc.NettyServer: [id: 0xb3031653, /10.2.192.196:47608 :> /10.2.68.104:5000] CLOSED 19/03/06 20:48:38 INFO ipc.NettyServer: Connection to /10.2.192.196:47608 disconnected. 19/03/06 20:48:48 INFO ipc.NettyServer: [id: 0x6fbc9596, /10.2.192.196:47878 => /10.2.68.104:5000] OPEN 19/03/06 20:48:48 INFO ipc.NettyServer: [id: 0x6fbc9596, /10.2.192.196:47878 => /10.2.68.104:5000] BOUND: /10.2.68.104:5000 19/03/06 20:48:48 INFO ipc.NettyServer: [id: 0x6fbc9596, /10.2.192.196:47878 => /10.2.68.104:5000] CONNECTED: /10.2.192.196:47878 |

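No files appeared in the HDFS directory, so I tried changing the HDFS upload path:

https://www.cnblogs.com/growth-hong/p/6396332.html

| 2. HDFS presents clients with a single abstract directory tree; clients access files by path, for example hdfs://namenode:port/dir-a/dir-b/dir-c/file.data

3. The directory structure and the block information of files (the metadata) are managed by the namenode. The namenode is the master node of an HDFS cluster; it maintains the directory tree of the whole file system and, for every path (file), the corresponding block information (the block id and the datanodes that hold it).

4. Storage of the individual blocks is handled by the datanodes. Datanodes are the worker nodes of an HDFS cluster; each block can be stored as several replicas on several datanodes (the replica count can be set with the dfs.replication parameter).

5. HDFS is designed for write-once, read-many workloads and does not support modifying files in place. |

Check the hostname:

Replaced the Hadoop address in the path with the hostname Hadoop1Server.

There is a problem with source.bind in the HDFS-side agent; rechecking: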

| flume-ng agent -c conf -f ~/software/apache-flume-1.8.0-bin/conf/avro-hdfs.conf -n agent1 -Dflume.root.logger=INFO,console |

| 19/03/07 15:49:43 INFO source.AvroSource: Starting Avro source source1: { bindAddress: 10.2.68.104, port: 5000 }... 19/03/07 15:49:43 ERROR source.AvroSource: Avro source source1 startup failed. Cannot initialize Netty server org.jboss.netty.channel.ChannelException: Failed to bind to: /10.2.68.104:5000 |

怀疑端口被占用

| user@Hadoop1Server:~/software/apache-flume-1.8.0-bin/conf$ ps aux | fgrep avro-hdfs.conf user 17760 0.0 1.4 2474000 115940 ? Sl Mar06 0:32 /home/user/jdk1.8.0_171/bin/java -Xmx20m -Dflume.root.logger=INFO,console -cp conf:/home/user/software/apache-flume-1.8.0-bin/lib/*:/home/user/hadoop/etc/hadoop:/home/user/hadoop/share/hadoop/common/lib/*:/home/user/hadoop/share/hadoop/common/*:/home/user/hadoop/share/hadoop/hdfs:/home/user/hadoop/share/hadoop/hdfs/lib/*:/home/user/hadoop/share/hadoop/hdfs/*:/home/user/hadoop/share/hadoop/yarn/lib/*:/home/user/hadoop/share/hadoop/yarn/*:/home/user/hadoop/share/hadoop/mapreduce/lib/*:/home/user/hadoop/share/hadoop/mapreduce/*:/home/user/hadoop/contrib/capacity-scheduler/*.jar:/lib/* -Djava.library.path=:/home/user/hadoop/lib/native org.apache.flume.node.Application -f /home/user/software/apache-flume-1.8.0-bin/conf/avro-hdfs.conf -n agent1 user 24869 0.0 0.0 14228 928 pts/0 S+ 16:33 0:00 grep -F avro-hdfs.conf |

| kill 17760 |
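The lookup for a leftover agent can be wrapped in a helper. This is a sketch: find_agent_pids is a hypothetical name, and it relies on the Flume command line containing the main class followed by the config file name, as the ps output above shows.

```shell
#!/usr/bin/env bash
# Sketch: list the PIDs of Flume agents that were started with a given config file,
# so a leftover process holding the port can be stopped before restarting.
find_agent_pids() {
  local conf_name="$1"
  # -f matches against the full command line, which contains "-f <conf file>"
  pgrep -f "org.apache.flume.node.Application.*${conf_name}"
}

pids="$(find_agent_pids avro-hdfs.conf)"
if [ -n "$pids" ]; then
  echo "agent(s) still running: $pids"   # stop them with: kill $pids
else
  echo "no running agent for avro-hdfs.conf"
fi
```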

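No new file was produced.

First rule out stale processes:

| ps aux | fgrep tail-avro.conf shows no stale process |

Configure agent1.sinks.sink1.hdfs.path = hdfs://master:9000/flume/suricata/%y-%m-%d

Use your own Hadoop address here.

master is a name defined in the hosts file.

Replace master:9000 with the ip:port of your HDFS.

Is the port really 9000? Check the Hadoop configuration to see which port is in use.

Also, has the firewall on the Hadoop machine been turned off? (To disable it: sudo ufw disable)

Many configurations found online omit the port, so I changed the path to:

| agent1.sinks.sink1.hdfs.path = hdfs://Hadoop1Server/flume/suricata/%y-%m-%d |

| or /flume/suricata/%y-%m-%d |

Check the configuration file:

| <name>fs.defaultFS</name> <value>hdfs://Hadoop1Server:9000</value> |

Since fs.defaultFS points at port 9000, a full hdfs:// URI needs to include :9000; the path-only form picks the address up from fs.defaultFS.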

Turn off the firewall:

| user@Hadoop1Server:~/hadoop/etc/hadoop$ service iptables status ● iptables.service Loaded: not-found (Reason: No such file or directory) Active: inactive (dead) user@Hadoop1Server:~/hadoop/etc/hadoop$ service iptables stop ==== AUTHENTICATING FOR org.freedesktop.systemd1.manage-units === Authentication is required to stop 'iptables.service'. Multiple identities can be used for authentication:

Failed to stop iptables.service: Access denied See system logs and 'systemctl status iptables.service' for details. |

Firewall commands: https://blog.csdn.net/qq_27229113/article/details/81249743

| systemctl stop firewalld |

Using ufw:

| Error: ufw: command not found |

Install it:

| sudo apt-get install ufw |

sudo ufw disable

| Output: Firewall stopped and disabled on system startup |

sudo tail -F /data/suricata/log/eve.json shows the log, but without sudo access is denied; perhaps the problem is that I did not start Flume as root.

| sudo nohup flume-ng agent -c ~/flume/flume-ng-1.6.0-cdh5.7.1/conf -f ~/flume/flume-ng-1.6.0-cdh5.7.1/conf/tail-avro.conf -n agent1 -Dflume.root.logger=INFO,console |

Starting as root or with sudo fails:

| failed to run command 'flume-ng': No such file or directory |

Check where the environment variables are set:

| ./etc/skel/.profile ./home/tsec/.profile ./root/.profile (the root user needs its own settings) |

| Open it with vim and add: export FLUME_HOME=/home/tsec/flume/flume-ng-1.6.0-cdh5.7.1/ export PATH=$PATH:$FLUME_HOME/bin |

| Apply: source ./root/.profile |

Set the Java environment variable for Flume (the user's profile file has no explicit Java setting):

| export JAVA_HOME=/home/tsec/java/jdk1.8.0_131 |

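Running Flume in the Background

nohup and & explained: https://blog.csdn.net/hl449006540/article/details/80216061

Use nohup + command + &.

nohup makes the command ignore the hangup (HUP) signal, so it keeps running after the session closes.

& runs the command in the background, so it does not hold the terminal (for example in Xshell).

| nohup flume-ng agent -c conf -f ~/software/apache-flume-1.8.0-bin/conf/avro-hdfs.conf -n agent1 -Dflume.root.logger=INFO,console & |

| Output: nohup: ignoring input and appending output to 'nohup.out' |

A job started with & is not stopped by Ctrl+C.

nohup makes the process immune to the SIGHUP sent when the session is closed.

Check nohup.out to confirm that the agent started normally.

Start tail-avro.conf from the root directory:

| nohup flume-ng agent -c ~/flume/flume-ng-1.6.0-cdh5.7.1/conf -f ~/flume/flume-ng-1.6.0-cdh5.7.1/conf/tail-avro.conf -n agent1 -Dflume.root.logger=INFO,console & |

After switching to root, ~ no longer resolves to the same home directory, so the -c path is not found and this warning appears:

| log4j:WARN No appenders could be found for logger |

Change the start parameters to absolute paths:

| nohup flume-ng agent -c /home/tsec/flume/flume-ng-1.6.0-cdh5.7.1/conf -f /home/tsec/flume/flume-ng-1.6.0-cdh5.7.1/conf/tail-avro.conf -n agent1 -Dflume.root.logger=INFO,console & |

| Error: /home/tsec/flume/flume-ng-1.6.0-cdh5.7.1//bin/flume-ng: line 250: /root/java/jdk1.8.0_131/bin/java: No such file or directory The Java environment variable probably needs to be set again: the path shows JAVA_HOME resolving under /root, so it should be given as the absolute path /home/tsec/java/jdk1.8.0_131. |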
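A hedged sketch of a start helper that avoids both problems above by using absolute paths throughout. The function name start_agent_bg and the log file location are assumptions; FLUME_DIR is the install path that appears in these notes.

```shell
#!/usr/bin/env bash
# Sketch: start an agent in the background with absolute paths so the command
# behaves the same for a normal user, for root, and under sudo.
FLUME_DIR="/home/tsec/flume/flume-ng-1.6.0-cdh5.7.1"   # install path used in this post

start_agent_bg() {
  local conf_file="$1" agent_name="$2" log_file="$3"
  # nohup ignores SIGHUP when the session closes; '&' returns the prompt immediately.
  nohup "$FLUME_DIR/bin/flume-ng" agent \
    -c "$FLUME_DIR/conf" -f "$conf_file" -n "$agent_name" \
    -Dflume.root.logger=INFO,console > "$log_file" 2>&1 &
  echo $!      # PID of the background job, useful for a later kill
}

if [ -x "$FLUME_DIR/bin/flume-ng" ]; then
  start_agent_bg "$FLUME_DIR/conf/tail-avro.conf" agent1 "$HOME/flume-agent1.log"
else
  echo "flume-ng not found under $FLUME_DIR; adjust FLUME_DIR first"
fi
```

Redirecting the output to a named log file replaces the default nohup.out, and the printed PID makes it easy to stop the agent later.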

Writing to MongoDB

http://www.cnblogs.com/cswuyg/p/4498804.html

https://java-my-life.iteye.com/blog/2238085

https://blog.csdn.net/tinico/article/details/41079825?utm_source=blogkpcl14

Streaming log collection with Flume + MongoDB:

https://wenku.baidu.com/view/66f1e436ba68a98271fe910ef12d2af90242a81b.html

Download the source of the MongoDB plugin, mongosink (to be packaged as a JAR), and the MongoDB Java driver.

mongosink download: https://github.com/leonlee/flume-ng-mongodb-sink

| Clone the repository Install latest Maven and build source by 'mvn package' Generate classpath by 'mvn dependency:build-classpath' Append classpath in $FLUME_HOME/conf/flume-env.sh Add the sink definition according to Configuration |

In short: build with mvn package, generate the dependency classpath, append it in flume-env.sh, and add the sink definition following the configuration notes on the sink's project page.

Building the mongosink JAR

The project reports: The method configure(Context) of type MongoSink must override a superclass method

https://blog.csdn.net/kwuwei/article/details/38365839

On inspection the compiler level was already 1.8.

The cause was that the Build Path had not been updated.

Packaging method:

https://blog.csdn.net/ssbb1995/article/details/78983915

cd to the directory that contains pom.xml, then enter the command mvn clean package

Error (the ? characters around the phase names suggest that the pasted command contained non-ASCII whitespace):

| [ERROR] Unknown lifecycle phase "?clean?package". You must specify a valid lifecycle phase or a goal in the format <plugin-prefix>:<goal> or <plugin-group-id>:<plugin-artifact-id>[:<plugin-version>]:<goal>. Available lifecycle phases are: validate, initialize, generate-sources, process-sources, generate-resources, process-resources, compile, process-classes, generate-test-sources, process-test-sources, generate-test-resources, process-test-resources, test-compile, process-test-classes, test, prepare-package, package, pre-integration-test, integration-test, post-integration-test, verify, install, deploy, pre-site, site, post-site, site-deploy, pre-clean, clean, post-clean. -> [Help 1] [ERROR] [ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch. [ERROR] Re-run Maven using the -X switch to enable full debug logging. [ERROR] [ERROR] For more information about the errors and possible solutions, please read the following articles: [ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/LifecyclePhaseNotFoundException |

First I built one with the mvn install that ships with Eclipse.

Then I tried Maven build: https://blog.csdn.net/qq_28553681/article/details/80988190

Entering clean package in the Goals field builds the package successfully and prints:

| [INFO] Building jar: E:studyMaterialworkeclipseflume-ng-mongodb-sinktargetflume-ng-mongodb-sink-1.0.0.jar |

Flume configuration reference: https://www.cnblogs.com/ywjy/p/5255161.html (which cites https://blog.csdn.net/tinico/article/details/41079825)

Start MongoDB

| D:Program FilesMongoDBServer3.4bin>mongod.exe --port 65521 --dbpath "D:MongoDBDBData" or mongod --dbpath="D:MongoDBDBData" |

Start Flume

Error:

| 2019-02-03 19:47:47,926 (New I/O worker #1) [WARN - org.apache.avro.ipc.NettyServer$NettyServerAvroHandler.exceptionCaught(NettyServer.java:201)] Unexpected exception from downstream. org.apache.avro.AvroRuntimeException: Excessively large list allocation request detected: 1863125608 items! Connection closed. at org.apache.avro.ipc.NettyTransportCodec$NettyFrameDecoder.decodePackHeader(NettyTransportCodec.java:167) at org.apache.avro.ipc.NettyTransportCodec$NettyFrameDecoder.decode(NettyTransportCodec.java:139) at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:425) at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:310) at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70) at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564) at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559) at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268) at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255) at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88) at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108) at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:318) at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89) at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178) at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108) at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:748) |

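Changing the MongoDB port in the Flume configuration did not help.

See https://blog.csdn.net/ma0903/article/details/48209681?utm_source=blogxgwz1

The protocol that the Flume side expects may not match the protocol the client uses to send data.

For example: Flume expects Avro while the client sends plain TCP.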
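One way to rule out a protocol mismatch is to feed the Avro source with Flume's own avro-client mode instead of telnet or nc. The sketch below is an illustration only: the helper name, the sample file, and the host and port (localhost:41414) are assumptions and need to match the Avro source actually configured.

```shell
#!/usr/bin/env bash
# Sketch: send a file to an Avro source with Flume's bundled avro-client,
# instead of writing raw text to the port with telnet or nc.
send_file_to_avro_source() {
  local host="$1" port="$2" file="$3"
  flume-ng avro-client -H "$host" -p "$port" -F "$file"
}

sample="${TMPDIR:-/tmp}/avro-client-sample.txt"
printf 'first test event\nsecond test event\n' > "$sample"

if command -v flume-ng >/dev/null 2>&1; then
  # Host and port are placeholders; use the bind address and port of your Avro source.
  send_file_to_avro_source localhost 41414 "$sample"
else
  echo "flume-ng is not on PATH; sample written to $sample"
fi
```

Each line of the file is sent as one event, so the two sample lines should arrive as two events if the source is reachable.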


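Error at startup:

| [ERROR - org.apache.flume.node.AbstractConfigurationProvider.loadSinks(AbstractConfigurationProvider.java:426)] Sink s1 has been removed due to an error during configuration java.lang.IllegalArgumentException: No enum constant org.riderzen.flume.sink.MongoSink.CollectionModel.single |

The exception means that the configured model value single does not match any constant of the CollectionModel enum. Enum names are case-sensitive, so the value may need to be written exactly as the enum defines it.

Tried changing the port MongoDB listens on at startup:

D:Program FilesMongoDBServer3.4bin>mongod.exe --port 27017 --dbpath "D:MongoDBDBData"

Access was denied.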


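Configuring Flume on Windows

1. Download apache-flume-1.8.0-bin.tar.gz from the Apache Flume site (http://flume.apache.org/download.html)

(http://www.apache.org/dist/flume/1.8.0/)

Installation package: link: https://pan.baidu.com/s/1w4FEmq9FkR6EMjqz0_YTWg extraction code: ptt5

After extracting, open index.html in the docs folder to read the documentation locally.

2. Extract to a directory, for example D:\software\apache-flume-1.8.0-bin

3. Create a FLUME_HOME variable set to the Flume install directory D:\software\apache-flume-1.8.0-bin

4. Edit the system variable Path and append %FLUME_HOME%\conf and %FLUME_HOME%\bin

5. Copy the three template files in the flume\conf directory and rename the copies without the .template suffix

Press Win+R, enter cmd to open a command window, and run

flume-ng version; if it prints the version normally, the environment is OK.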

| Using 1.9 directly without setting environment variables, I added an example.conf file and got an error: flume-ng agent --conf ../conf --conf-file ../conf/example.conf --name a1 -property flume.root.logger=INFO,console

D:Program Files (x86)flumewin-apache-flume-1.9.0-binapache-flume-1.9.0-binbin>powershell.exe -NoProfile -InputFormat none -ExecutionPolicy unrestricted -File D:Program Files (x86)flumewin-apache-flume-1.9.0-binapache-flume-1.9.0-binbinflume-ng.ps1 agent --conf ../conf --conf-file ../conf/example.conf --name a1 -property flume.root.logger=INFO,console Processing -File "D:Program" failed because the file does not have a '.ps1' extension. Specify a valid Windows PowerShell script file name, and then try again. Windows PowerShell Cause: the launcher calls a .bat file, and the .bat file cannot handle spaces in the path, so I changed the install path (https://blog.csdn.net/yanhuatangtang/article/details/80404097). After extracting again under a new path, flume-ng version runs from the bin directory but not from the default directory (even though the environment variables had already been set). |

| Checking the version from the bin directory succeeds but prints the following (note: come back and fix this if problems appear later): WARN: Config directory not set. Defaulting to D:Programsflumeapache-flume-1.8.0-binconf Sourcing environment configuration script D:Programsflumeapache-flume-1.8.0-binconfflume-env.ps1 WARN: Did not find D:Programsflumeapache-flume-1.8.0-binconfflume-env.ps1 WARN: HADOOP_PREFIX or HADOOP_HOME not found WARN: HADOOP_PREFIX not set. Unable to include Hadoop's classpath & java.library.path WARN: HBASE_HOME not found WARN: HIVE_HOME not found |

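Tested with the following material: https://blog.csdn.net/ycf921244819/article/details/80341502

| The earlier steps all worked, but telnet from a second window printed: Connecting to localhost... Could not open connection to the host, on port 50000: Connect failed |

CentOS needs the network to be connected manually from the top-right corner after every boot.

192.168.43.156

Test:

Open a cmd window in the apache-flume-1.7.0-bin\bin directory and enter:

| flume-ng agent --conf ../conf --conf-file ../conf/test.conf --name a1 -property flume.root.logger=INFO,console |

Check whether the MongoDB on the school's virtual machine is reachable; using the school's instance avoids network transfer problems.

Trying avro:

| flume-ng agent -c ../conf -f ../conf/netcat.conf -n a1 -property flume.root.logger=INFO,console |

This one works, so here is a backup:

Configuration file: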

| # Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1
# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444
# Describe the sink
a1.sinks.k1.type = logger
# Use a channel that buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1 |

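Start command:

| flume-ng agent -c ../conf -f ../conf/netcat.conf -n a1 -property flume.root.logger=INFO,console |

From the Linux virtual machine, connect to port 44444 with nc and send data:

| nc -v 192.168.56.1 44444 |

The connection fails with connection refused.

This is probably because the source in the configuration is bound to localhost, so I changed the configuration file.

Source types explained: https://blog.csdn.net/zhongguozhichuang/article/details/72866461

After binding source.bind to the virtual machine's IP, this error appeared:

| org.apache.flume.source.NetcatSource.start(NetcatSource.java:169)] Unable to bind to socket. Exception follows. java.net.BindException: Cannot assign requested address: bind |

A source can only bind to an address that belongs to the machine the agent runs on, which is why the virtual machine's IP is rejected. I changed it back to localhost.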

Configuring Flume Environment Variables

Change JAVA_HOME in flume-env.sh under flume/conf.

Use java -verbose to find the JDK location

(locally it is C:\Program Files\Java\jdk1.8.0_131)

Chaining Two Flume Agents

Example: https://www.cnblogs.com/LHWorldBlog/p/8305177.html

(The sink of the first agent feeds the source of the second agent.)

In the example the receiving node uses a1.sources.r1.type = avro, so I changed this setting accordingly.

The connection is still refused (which IP am I actually on?).

| On the node01 server: configuration file |

| On the node02 server: install Flume (steps omitted) |

Start Flume on node02 first

flume-ng agent -n a1 -c conf -f avro.conf -Dflume.root.logger=INFO,console

| What I ran: flume-ng agent -n a1 -c ../conf -f ../conf/avro.conf -property flume.root.logger=INFO,console |

Then start Flume on node01

flume-ng agent -n a1 -c conf -f simple.conf2 -Dflume.root.logger=INFO,console

Open telnet to test, and watch the output on the node02 console

Checking whether a port is listening: link: https://pan.baidu.com/s/1y6lC2fuvLqpfj10p2pc0vQ extraction code: 4qck

| What I ran: flume-ng agent -n a1 -c ../conf -f ../conf/simple.conf2 -Dflume.root.logger=INFO,console The address could not be resolved, so I added the cluster hosts: sudo vim /etc/hosts

127.0.0.1 localhost 127.0.1.1 obedientcorrespondent 10.2.192.232 mongoNode1 |

| Error: Attempting to create Avro Rpc client. 2019-03-04 07:33:28,429 (lifecycleSupervisor-1-3) [ERROR - org.apache.flume.source.NetcatSource.start(NetcatSource.java:172)] Unable to bind to socket. Exception follows. java.net.SocketException: Unresolved address |

The source on node02 must not bind to localhost; use its LAN address instead.

Open telnet again to test, and watch the output on the node02 console
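The two configuration files for this chain were not preserved in the notes, so the sketch below only illustrates the general shape: node01 forwards events through an Avro sink, and node02 receives them with an Avro source bound to its LAN address. The address in NODE02_ADDR, the port 60000, the netcat test source and the output directory are all assumptions.

```shell
#!/usr/bin/env bash
# Sketch: generate the two agent files for a node01 -> node02 chain.
# NODE02_ADDR and the port 60000 are placeholders; use the LAN address of node02.
NODE02_ADDR="${NODE02_ADDR:-10.2.192.232}"
out_dir="${TMPDIR:-/tmp}/flume-chain"
mkdir -p "$out_dir"

# node02: Avro source bound to the LAN address (not localhost), logger sink.
cat > "$out_dir/avro.conf" <<EOF
a1.sources = r1
a1.sinks = k1
a1.channels = c1
a1.sources.r1.type = avro
a1.sources.r1.bind = $NODE02_ADDR
a1.sources.r1.port = 60000
a1.sinks.k1.type = logger
a1.channels.c1.type = memory
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

# node01: netcat source for manual testing, Avro sink pointing at node02.
cat > "$out_dir/simple.conf2" <<EOF
a1.sources = r1
a1.sinks = k1
a1.channels = c1
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = $NODE02_ADDR
a1.sinks.k1.port = 60000
a1.channels.c1.type = memory
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

echo "wrote $out_dir/avro.conf and $out_dir/simple.conf2"
```

The hostname and port of the Avro sink on node01 have to match the bind address and port of the Avro source on node02, which is why node02 is started first.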

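Enabling HTTP Monitoring

https://blog.csdn.net/u014039577/article/details/51536753

The example adds two options to the flume-ng start command. -Dflume.monitoring.type=http selects HTTP as the monitoring method, and -Dflume.monitoring.port=1234 sets the port of the monitoring service to 1234; the port number can be chosen freely. After Flume has started, http://ip:1234/metrics returns Flume's monitoring data in JSON format.

Error (on Windows, where the PowerShell launcher does not accept the option in this form):

A parameter cannot be found that matches parameter name "Dflume.monitoring .type=http".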
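On Linux the two monitoring options are simply appended to the usual start command. The sketch below only prints such a command; monitoring_cmd is a hypothetical helper, and the config file, agent name and port are example values. On Windows the properties may have to go through the launcher's -property option instead (possibly as one semicolon-separated string); that is an assumption to check against the script's help output.

```shell
#!/usr/bin/env bash
# Sketch: print the Linux start command with HTTP monitoring enabled.
# Agent name, config file and port 1234 are example values.
monitoring_cmd() {
  local conf_file="$1" agent_name="$2" port="$3"
  echo "flume-ng agent -c conf -f $conf_file -n $agent_name" \
       "-Dflume.root.logger=INFO,console" \
       "-Dflume.monitoring.type=http -Dflume.monitoring.port=$port"
}

monitoring_cmd example/netcat.conf a1 1234

# Once the agent is up, the counters can be fetched as JSON:
#   curl http://localhost:1234/metrics
```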

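Monitoring a File

Example: https://www.cnblogs.com/LHWorldBlog/p/8305177.html

When using a unidirectional asynchronous interface such as ExecSource, your application can never guarantee that the data has been received. As an extension of this warning, and to be completely clear: there is absolutely no guarantee of event delivery when using this source.

Configuration file

| ############################################################ |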
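The body of the configuration file did not survive in these notes. The following is a sketch of what an exec.conf for this test could look like, with an exec source running tail -F, a memory channel and a logger sink. Every value in it, including the location of flume.exec.log, is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: a possible exec.conf that tails flume.exec.log and logs each line.
# The original file was not preserved, so every value here is an assumption.
conf_file="${TMPDIR:-/tmp}/exec.conf"
log_file="$HOME/flume.exec.log"

cat > "$conf_file" <<EOF
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Exec source: run tail -F and turn every output line into an event
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F $log_file

a1.sinks.k1.type = logger

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

echo "wrote $conf_file"
```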

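Create an empty file for the demonstration: touch flume.exec.log

Append data in a loop

for i in {1..50}; do echo "$i hi flume" >> flume.exec.log ; sleep 0.1; done

Start Flume

flume-ng agent -n a1 -c conf -f exec.conf -Dflume.root.logger=INFO,console

What I ran:

| flume-ng agent -n a1 -c ../conf -f ../conf/exec.conf -property flume.root.logger=INFO,console |

Viewing the log file with tail -f

tail FILE shows the last 10 lines by default.

tail -f FILE does not exit after printing; it keeps waiting and prints new lines as they are appended to the log.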
