Reference

TiDB slow logs in practice at 伴鱼 (TiDB 慢日志在伴鱼的实践)

Filebeat configuration

Input definition, loaded by the main configuration through config.inputs:

vim /home/gaofeng/ypack-filebeat.yml

- type: "log"
  paths:
    - "/disk1/tidb/deploy2/tidb-4001/log/tidb_slow_query.log"
  multiline:
    pattern: "^# Time:"
    negate: true
    match: "after"
    max_lines: 8000
  fields:
    log_topic: "ypack-cdmp_tidb_prod_slow_log"
  ignore_older: "1h"
  tail_files: true
  max_bytes: 102400
  close_eof: false
  clean_inactive: "10h"
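To make the multiline settings concrete, here is a simplified Python model of how pattern "^# Time:" with negate: true and match: after turns raw lines into events. It is an illustration, not Filebeat's code. Each line starting with "# Time:" opens a new event, every other line is appended to the current event, and at most max_lines lines are kept. Filebeat's multiline timeout and max_bytes truncation are not modeled, and the sample lines are made up.

```python
import re
from typing import Iterable, List

# Settings copied from the input config above.
PATTERN = re.compile(r"^# Time:")
MAX_LINES = 8000

def group_multiline(lines: Iterable[str], max_lines: int = MAX_LINES) -> List[str]:
    """Group lines the way negate: true + match: after does (simplified)."""
    events: List[str] = []
    current: List[str] = []
    for line in lines:
        # A "# Time:" header starts a new slow log entry, so flush the previous one.
        if PATTERN.match(line) and current:
            events.append("\n".join(current[:max_lines]))
            current = []
        current.append(line)  # non-matching lines are appended after the header
    if current:
        events.append("\n".join(current[:max_lines]))  # flush the last event
    return events


# Hypothetical sample lines in the TiDB slow log layout.
sample = [
    "# Time: 2020-06-01T10:00:00.000000+08:00",
    "# Query_time: 2.5",
    "select * from t;",
    "# Time: 2020-06-01T10:00:01.000000+08:00",
    "# Query_time: 0.4",
    "select 1;",
]
events = group_multiline(sample)
print(len(events))  # 2
```

The sketch flushes the last event as soon as input ends. Filebeat instead waits for the next header or for the multiline timeout.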

Main configuration (filebeat.yml):

cd /home/gaofeng/ypack-filebeat-all

xpack.monitoring:
  enabled: true
  elasticsearch:
    hosts: ["http://elk.com:80"]

output:
  kafka:
    partition:
      round_robin:
        reachable_only: true
    hosts:
      - "kafka1:9092"
      - "kafka2:9092"
      - "kafka3:9092"
    max_message_bytes: 102400
    topic: "%{[fields.log_topic]}"
    workers: 2

logging:
  level: "info"

filebeat:
  config.inputs:
    enabled: true
    path: /home/gaofeng/ypack-filebeat.yml
    reload.enabled: true
    reload.period: 10s
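The topic: "%{[fields.log_topic]}" line lets one Filebeat instance route each input to its own Kafka topic. Because fields_under_root is not set, the input's custom field is stored under fields, and the output reads it back for every event. Below is a rough Python sketch of that lookup. It is an illustration only: it handles just the %{[a.b]} form and raises KeyError on a missing field, where Filebeat's own handling may differ.

```python
import re
from typing import Any, Dict

# Matches field references of the form %{[fields.log_topic]}.
FIELD_REF = re.compile(r"%\{\[([^\]]+)\]\}")

def lookup(event: Dict[str, Any], dotted: str) -> Any:
    """Walk nested dicts along a dotted path, e.g. "fields.log_topic"."""
    value: Any = event
    for key in dotted.split("."):
        value = value[key]  # raises KeyError if the field is missing
    return value

def resolve(fmt: str, event: Dict[str, Any]) -> str:
    """Replace every %{[...]} reference with the event's field value."""
    return FIELD_REF.sub(lambda m: str(lookup(event, m.group(1))), fmt)


# Shape of an event produced by the input above (other fields omitted).
event = {
    "message": "# Time: 2020-06-01T10:00:00.000000+08:00\nselect 1;",
    "fields": {"log_topic": "ypack-cdmp_tidb_prod_slow_log"},
}
print(resolve("%{[fields.log_topic]}", event))  # ypack-cdmp_tidb_prod_slow_log
```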

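Start Filebeat:

cd /home/gaofeng/ypack-filebeat-all
sh start.sh

start.sh contains:

#!/bin/sh
# Run in the background; nohup writes stdout (and stderr, via 2>&1) to nohup.out when started from a terminal
nohup ./filebeat -c filebeat.yml 2>&1 &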

Logstash configuration

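Logstash forwarding

The pipeline below consumes the topic ypack-cdmp_tidb_prod_slow_log from the Kafka cluster kafka1:9092,kafka2:9092,kafka3:9092. The filter section is left empty. Events are written to the ES cluster unchanged, and field extraction happens there in the ingest pipeline named by the output's pipeline option.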

input {
  kafka {
    id => "kafka-cdmp_tidb_prod_slow_log"
    topics => ["ypack-cdmp_tidb_prod_slow_log"]
    group_id => "ypack-logstash-cdmp_tidb_prod_slow_log"
    client_id => "ypack-logstash-cdmp_tidb_prod_slow_log"
    consumer_threads => 1
    bootstrap_servers => "kafka1:9092,kafka2:9092,kafka3:9092"
    auto_offset_reset => "latest"
    codec => "json"
  }
}

filter {
}

output {
  elasticsearch {
    id => "elasticsearch-cdmp_tidb_prod_slow_log"
    hosts => ["kafka1:9200","kafka2:9200","kafka3:9200"]
    index => "ypack-cdmp_tidb_prod_slow_log-%{+YYYY.MM.dd}"
    pipeline => "ypack-cdmp_tidb_prod_slow_log"
  }
}
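Two details of this pipeline can be sketched in Python. This is an illustration, not Logstash's implementation. First, codec => "json" decodes each Kafka record into one event. Second, the %{+YYYY.MM.dd} suffix in index is formatted from the event's @timestamp in UTC. Filebeat sets @timestamp to the time it read the entry, so in a UTC+8 deployment an entry read at 01:30 local time lands in the previous day's index. The sample records are hypothetical.

```python
import json
from datetime import datetime, timezone

INDEX_PREFIX = "ypack-cdmp_tidb_prod_slow_log-"

def decode_record(raw: bytes) -> dict:
    """codec => "json": one Kafka record body is one JSON event from Filebeat."""
    return json.loads(raw.decode("utf-8"))

def index_name(event: dict) -> str:
    """Approximate %{+YYYY.MM.dd}: the date part of @timestamp, in UTC."""
    ts = datetime.fromisoformat(event["@timestamp"].replace("Z", "+00:00"))
    return INDEX_PREFIX + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


# 2020-05-31T17:30Z is 2020-06-01 01:30 at UTC+8, but the index date stays UTC.
record = b'{"@timestamp": "2020-05-31T17:30:00.000Z", "message": "select 1;"}'
print(index_name(decode_record(record)))  # ypack-cdmp_tidb_prod_slow_log-2020.05.31
```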

Ingest parsing

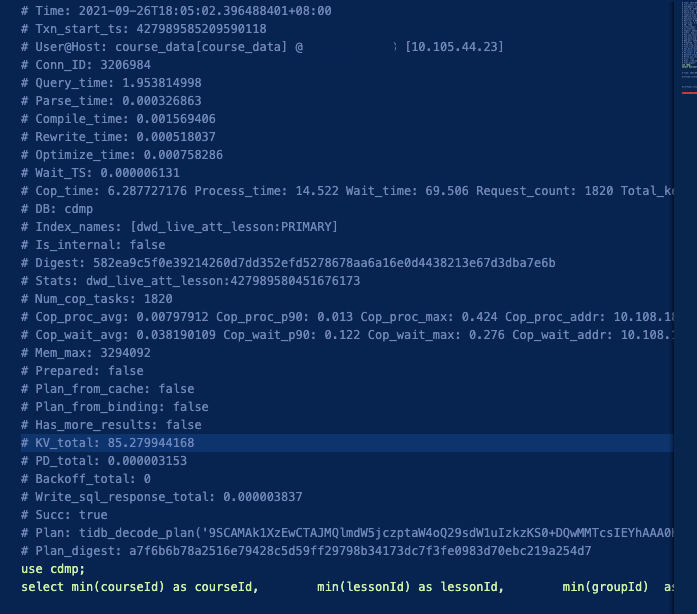

TiDB 4.0.0.1版本对应slowlog日志如下图所示

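The grok processor for the ES ingest pipeline is shown below. Writing this grok pattern took quite a while, but it extracts every field from the log entry in the figure above. The second pattern is a fallback that always matches. Events that do not fit the first pattern are still indexed with the raw text in message, instead of failing the pipeline.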

[
  {
    "grok": {
      "field": "message",
      "patterns": [
        "#\\s+Time:\\s%{TIMESTAMP_ISO8601:generate_time}(\\n#\\s+Txn_start_ts:\\s+%{WORD:txn_start_ts})?(\\n#\\s+User@Host: %{USER:query_user}\\[[^\\]]+\\] @ (?:(?<query_host>\\S*) )?\\[(?:%{IP:query_ip})?\\])?(\\n#\\sConn_ID:\\s+%{WORD:conn_id})?(\\n#\\s+Query_time:\\s+%{NUMBER:query_time:float})?(\\n#\\s+Parse_time:\\s+%{NUMBER:parse_time:float})?(\\n#\\s+Compile_time:\\s%{NUMBER:compile_time:float})?(\\n#\\s+Rewrite_time:\\s+%{NUMBER:rewrite_time:float})?(\\n#\\s+Optimize_time:\\s+%{NUMBER:optimize_time:float})?(\\n#\\s+Wait_TS:\\s+%{NUMBER:wait_ts:float})?(\\n#\\s+Cop_time:\\s+%{GREEDYDATA:cop_time})?(\\n#\\s+Prewrite_time:\\s+%{GREEDYDATA:prewrite_time})?(\\n#\\s+DB:\\s+%{WORD:db_name})?(\\n#\\s+Index_names:\\s+%{GREEDYDATA:index_names})?(\\n#\\s+Is_internal:\\s+%{WORD:is_internal})?(\\n#\\s+Digest:\\s+%{WORD:digest})?(\\n#\\s+Stats:\\s+%{GREEDYDATA:stats})?(\\n#\\s+Num_cop_tasks:\\s+%{GREEDYDATA:num_cop_tasks})?(\\n#\\s+Cop_proc_avg:\\s+%{GREEDYDATA:cop_proc_avg})?(\\n#\\s+Cop_wait_avg:\\s+%{GREEDYDATA:cop_wait_avg})?(\\n#\\s+Mem_max:\\s+%{GREEDYDATA:mem_max})?(\\n#\\s+Prepared:\\s+%{GREEDYDATA:prepared})?(\\n#\\s+Plan_from_cache:\\s+%{GREEDYDATA:Plan_from_cache})?(\\n#\\s+Plan_from_binding:\\s+%{GREEDYDATA:Plan_from_binding})?(\\n#\\s+Has_more_results:\\s+%{GREEDYDATA:Has_more_results})?(\\n#\\s+KV_total:\\s+%{GREEDYDATA:KV_total})?(\\n#\\s+PD_total:\\s+%{GREEDYDATA:PD_total})?(\\n#\\s+Backoff_total:\\s+%{GREEDYDATA:Backoff_total})?(\\n#\\s+Write_sql_response_total:\\s+%{GREEDYDATA:Write_sql_response_total})?(\\n#\\s+Succ:\\s+%{GREEDYDATA:Succ})?(\\n#\\s+Plan:\\s+%{GREEDYDATA:Plan})?(\\n#\\s+Plan_digest:\\s+%{GREEDYDATA:Plan_digest})?\\n(?<sql>[\\s\\S]*)",
        "^(?<message>[\\s\\S]*)$"
      ],
      "trace_match": false,
      "ignore_missing": true
    }
  }
]
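You can check what the grok processor extracts without running Elasticsearch. The Python sketch below hand-translates a subset of the first pattern into a re regex, covering Time, Txn_start_ts, User@Host, Conn_ID, Query_time and DB, followed by the SQL. It is an approximation, not Elasticsearch's grok engine. TIMESTAMP_ISO8601 and IP are loosened to simple character classes, and any header outside this subset would end up in sql. The sample entry is hypothetical and follows the TiDB slow log layout.

```python
import re
from typing import Any, Dict

# Simplified subset of the grok pattern above (not the full field list).
SLOW_LOG = re.compile(
    r"#\s+Time:\s(?P<generate_time>\S+)"
    r"(?:\n#\s+Txn_start_ts:\s+(?P<txn_start_ts>\w+))?"
    r"(?:\n#\s+User@Host: (?P<query_user>[a-zA-Z0-9._-]+)\[[^\]]+\] @ "
    r"(?:(?P<query_host>\S*) )?\[(?P<query_ip>[^\]]*)\])?"
    r"(?:\n#\s+Conn_ID:\s+(?P<conn_id>\w+))?"
    r"(?:\n#\s+Query_time:\s+(?P<query_time>[0-9.]+))?"
    r"(?:\n#\s+DB:\s+(?P<db_name>\w+))?"
    r"\n(?P<sql>[\s\S]*)"
)

def parse_slow_log(message: str) -> Dict[str, Any]:
    m = SLOW_LOG.search(message)  # unanchored search, like grok without ^
    if m is None:
        return {"message": message}  # mirrors the fallback pattern
    doc = {k: v for k, v in m.groupdict().items() if v is not None}
    if "query_time" in doc:
        doc["query_time"] = float(doc["query_time"])  # like %{NUMBER:query_time:float}
    return doc


entry = (
    "# Time: 2020-06-01T10:00:00.123456789+08:00\n"
    "# Txn_start_ts: 417047203431366657\n"
    "# User@Host: User_A[User_A] @ 10.0.0.8 [10.0.0.8]\n"
    "# Conn_ID: 42\n"
    "# Query_time: 2.5\n"
    "# DB: test\n"
    "select * from t where id = 1;"
)
doc = parse_slow_log(entry)
print(doc["query_user"], doc["query_time"], doc["sql"])  # User_A 2.5 select * from t where id = 1;
```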

Alert rules

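Alert on queries from User_A and User_B that contain SELECT but are not INSERT INTO statements, when query_time is 2 s or more:

sql:SELECT AND query_user:User_A AND query_time:[2 TO *] NOT sql:"INSERT INTO"

sql:SELECT AND query_user:User_B AND query_time:[2 TO *] NOT sql:"INSERT INTO"

The NOT clause excludes INSERT INTO ... SELECT statements, which also contain SELECT. Quoting "INSERT INTO" makes it a phrase query. Unquoted, only INSERT would be matched against sql, and INTO would be searched in the default field.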
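The alert condition can also be written as a predicate over a parsed document, which is handy for testing rules offline. The Python sketch below is hypothetical and is not how the alerting tool evaluates queries. It approximates Lucene's analyzed matching with lowercase word tokens, treats "INSERT INTO" as a phrase, and uses >= 2 because [2 TO *] is inclusive.

```python
import re
from typing import Any, Dict, List

WATCHED_USERS = {"User_A", "User_B"}  # query_user values from the two rules
THRESHOLD_S = 2.0                     # [2 TO *] includes 2 itself

def tokens(text: str) -> List[str]:
    """Rough stand-in for the standard analyzer: lowercase word tokens."""
    return re.findall(r"\w+", text.lower())

def has_phrase(toks: List[str], phrase: List[str]) -> bool:
    """True if phrase occurs as consecutive tokens, like sql:"INSERT INTO"."""
    n = len(phrase)
    return any(toks[i:i + n] == phrase for i in range(len(toks) - n + 1))

def should_alert(doc: Dict[str, Any]) -> bool:
    toks = tokens(doc.get("sql", ""))
    return (
        doc.get("query_user") in WATCHED_USERS
        and "select" in toks
        and not has_phrase(toks, ["insert", "into"])
        and float(doc.get("query_time", 0.0)) >= THRESHOLD_S
    )


print(should_alert({"query_user": "User_A", "query_time": 2.5, "sql": "select * from t"}))  # True
print(should_alert({"query_user": "User_B", "query_time": 3.0,
                    "sql": "INSERT INTO t SELECT * FROM s"}))  # False
```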
