A Frog-in-the-Well View of DevOps (2): Preparing the Lab Environment

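- I. Lab overview

- II. Preparing the lab environment

- 1. Deploying Harbor with docker-compose

- 2. Building the k8s cluster

- 3. Installing Helm for the k8s cluster

- 4. Setting up an NFS-backed StorageClass in the k8s cluster

- a. Installing NFS

- b. Deploying the StorageClass

- 5. Deploying nginx-ingress in the k8s cluster

- 6. Deploying metrics server in the k8s cluster

- 7. Deploying kube-prometheus in the k8s cluster

- 8. Deploying GitLab in the k8s cluster

- 9. Deploying Jenkins in the k8s cluster

- 10. Deploying SonarQube in the k8s cluster

- a. Deploying with Helm

- b. Deploying with plain k8s manifests

- c. Verifying the deployment

- III. Open issues

- IV. References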

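I. Lab overview

All the services set up in this post are containerized and deployed into a k8s cluster. For the problems I ran into along the way, I have included links to the solutions in this post.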

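II. Preparing the lab environment

1. Deploying Harbor with docker-compose

For deploying Harbor with docker-compose, see my earlier post, "k8s study notes 2: setting up a private Harbor registry"; I won't repeat it here.

2. Building the k8s cluster

Steps:

1. To build a v1.23.6 cluster, see my earlier post, "k8s installation notes (Ubuntu)".

2. To build a v1.24.2 cluster, see my earlier post, "k8s study notes 1: setting up a k8s environment (k8s 1.24.3, Ubuntu 22.04)".

3. To build a v1.25.2 cluster, follow the same post as in step 2 with one change: that post uses cri-dockerd 0.2.3, so download version 0.2.6 instead. Everything else stays the same.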

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready control-plane 80m v1.25.2

k8s-node1 Ready <none> 25m v1.25.2

k8s-node2 Ready <none> 76s v1.25.2

root@k8s-master1:~# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-c676cc86f-52d68 1/1 Running 0 80m

coredns-c676cc86f-7hbs2 1/1 Running 0 80m

etcd-k8s-master1 1/1 Running 0 80m

kube-apiserver-k8s-master1 1/1 Running 0 80m

kube-controller-manager-k8s-master1 1/1 Running 16 (7m18s ago) 80m

kube-proxy-2qmg2 1/1 Running 0 93s

kube-proxy-9dt9l 1/1 Running 0 25m

kube-proxy-vwpnd 1/1 Running 0 80m

kube-scheduler-k8s-master1 1/1 Running 17 (9m38s ago) 80m

root@k8s-master1:~# kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-648q4 1/1 Running 0 110s

kube-flannel-ds-szhrf 1/1 Running 0 71m

kube-flannel-ds-vlhsq 1/1 Running 0 25m

root@k8s-master1:~#

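PS:

1. This time I built a v1.25.2 k8s cluster. Following my earlier notes, it took only about half an hour. Accumulating experience and writing it down really matters; it makes the work much faster.

2. This cluster still uses cri-dockerd. When I have time I will rebuild it with containerd; the following two posts look like useful references:

"Installing a highly available Kubernetes 1.25.0 (k8s 1.25.0) cluster on Ubuntu 22.04"

"Installing k8s 1.25 from scratch [latest k8s version as of 2022-09-04]"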

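3. Installing Helm for the k8s cluster

My system is Ubuntu 22.04, so installation is a single command: snap install helm --classic.

For Helm usage, see the following:

Official Helm documentation

"Learning K8S: Helm"

"Helm command completion"

"Helm error: Error: INSTALLATION FAILED: must either provide a name or specify --generate-name"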
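The install step and the two topics from the reading list (command completion and the "must either provide a name" error) can be sketched as a few shell helpers. This is a minimal sketch, not the exact commands I ran: the function names are hypothetical, the default completion path assumes the usual bash-completion directory on Ubuntu, and CHART stands for any chart reference.

```shell
#!/usr/bin/env bash
# setup_helm [COMPLETION_FILE]: install Helm from snap and enable bash completion.
# The default completion path is an assumption about this host; pass another path if needed.
setup_helm() {
  snap install helm --classic
  helm version --short
  helm completion bash > "${1:-/etc/bash_completion.d/helm}"
}

# Helm 3 requires a release name. Either pass one explicitly ...
install_named() {        # usage: install_named NAME CHART
  helm install "$1" "$2"
}

# ... or let Helm generate one; omitting both triggers the
# "must either provide a name or specify --generate-name" error.
install_generated() {    # usage: install_generated CHART
  helm install "$1" --generate-name
}
```

Run setup_helm as root once, then open a new shell so the completion script is loaded.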

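4. Setting up an NFS-backed StorageClass in the k8s cluster

a. Installing NFS

For installing NFS as the storage backend, see the posts "Installing NFS on Ubuntu 20.04 Server" or "Configuring the NFS service on Ubuntu 20.04".

On k8s-master1, the shared directories are configured as follows: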

root@k8s-master1:/opt/nfsv4/data# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/opt/nfsv4 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check,crossmnt,fsid=0)

/opt/nfsv4/data 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/opt/nfsv4/back 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/gitlab/config 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/gitlab/logs 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/gitlab/data 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/jenkins 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/ 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

/home/k8s-nfs/data 192.168.100.0/24(rw,sync,no_root_squash,no_subtree_check)

root@k8s-master1:/opt/nfsv4/data#
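After editing /etc/exports, the server has to re-export the list before clients see the change. The sketch below shows one way to build an entry in the same form as the lines above and to reload the exports. The helper names export_line and reload_exports are hypothetical; exportfs and showmount are the standard NFS utilities, assumed to be installed on the server.

```shell
#!/usr/bin/env bash
# export_line PATH CIDR: print one /etc/exports entry in the form used above.
# Hypothetical helper for illustration; it does not modify /etc/exports.
export_line() {
  printf '%s %s(rw,sync,no_root_squash,no_subtree_check)\n' "$1" "$2"
}

# reload_exports: re-read /etc/exports and list what the server now offers.
# Run as root on the NFS server (k8s-master1 in this lab).
reload_exports() {
  exportfs -ra             # re-export everything without restarting the service
  showmount -e localhost   # print the resulting export list
}

export_line /home/k8s-nfs/data 192.168.100.0/24
```

Note that no_root_squash lets root on any client in 192.168.100.0/24 act as root on the share, which is convenient in a lab but worth reconsidering elsewhere.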

On k8s-node1 and k8s-node2, mount /opt/nfsv4/data from k8s-master1 to the local directory /root/data.

On k8s-node1:

(base) root@k8s-node1:~# mkdir data

(base) root@k8s-node1:~# mount -t nfs 192.168.100.200:/opt/nfsv4/data data

(base) root@k8s-node1:~# cd data/

(base) root@k8s-node1:~/data# ls

1111.txt 1.txt 2.txt

(base) root@k8s-node1:~/data#

On k8s-node2:

root@k8s-node2:~# mkdir data

root@k8s-node2:~# mount -t nfs 192.168.100.200:/opt/nfsv4/data data

root@k8s-node2:~# cd data/

root@k8s-node2:~/data# ls

1111.txt 1.txt 2.txt

root@k8s-node2:~/data#

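b. Deploying the StorageClass

I was very impressed by the post "Understanding PV/PVC/StorageClass"; it explains the concepts well and is a valuable reference. However, after deploying the provisioner as described there and creating a PVC, the PVC stayed in the Pending state. The provisioner pod's log showed the following errors: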

root@k8s-master1:~/k8s/nfs# kubectl logs nfs-client-provisioner-99cb8db6c-gm6g9

I1012 05:01:40.018676 1 leaderelection.go:185] attempting to acquire leader lease default/fuseim.pri-ifs...

E1012 05:01:57.425646 1 event.go:259] Could not construct reference to: '&v1.Endpoints{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"fuseim.pri-ifs", GenerateName:"", Namespace:"default", SelfLink:"", UID:"53ba6be8-0a89-4652-88e2-82a9e217a58e", ResourceVersion:"658122", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:63801140588, loc:(*time.Location)(0x1956800)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string{"control-plane.alpha.kubernetes.io/leader":"{"holderIdentity":"nfs-client-provisioner-99cb8db6c-gm6g9_f50386f0-49ea-11ed-ad07-8ab7727f2abd","leaseDurationSeconds":15,"acquireTime":"2022-10-12T05:01:57Z","renewTime":"2022-10-12T05:01:57Z","leaderTransitions":4}"}, OwnerReferences:[]v1.OwnerReference(nil), Initializers:(*v1.Initializers)(nil), Finalizers:[]string(nil), ClusterName:""}, Subsets:[]v1.EndpointSubset(nil)}' due to: 'selfLink was empty, can't make reference'. Will not report event: 'Normal' 'LeaderElection' 'nfs-client-provisioner-99cb8db6c-gm6g9_f50386f0-49ea-11ed-ad07-8ab7727f2abd became leader'

I1012 05:01:57.425724 1 leaderelection.go:194] successfully acquired lease default/fuseim.pri-ifs

I1012 05:01:57.425800 1 controller.go:631] Starting provisioner controller fuseim.pri/ifs_nfs-client-provisioner-99cb8db6c-gm6g9_f50386f0-49ea-11ed-ad07-8ab7727f2abd!

I1012 05:01:57.526029 1 controller.go:680] Started provisioner controller fuseim.pri/ifs_nfs-client-provisioner-99cb8db6c-gm6g9_f50386f0-49ea-11ed-ad07-8ab7727f2abd!

I1012 05:01:57.526091 1 controller.go:987] provision "default/www-web-0" class "managed-nfs-storage": started

E1012 05:01:57.529005 1 controller.go:1004] provision "default/www-web-0" class "managed-nfs-storage": unexpected error getting claim reference: selfLink was empty, can't make reference

root@k8s-master1:~/k8s/nfs#

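Nearly every article I found online offers the same fix: edit /etc/kubernetes/manifests/kube-apiserver.yaml and add the line "- --feature-gates=RemoveSelfLink=false" to the startup arguments. After I added it, the API server stopped working and kubectl became unusable; removing the line restored everything. My guess is that the latest k8s (1.25.2 here) no longer supports this setting, so the API server simply fails to start with it. This is consistent with the RemoveSelfLink feature gate having graduated to GA in Kubernetes 1.24, where it is locked to true. The NFS provisioner version I used was also the latest. After half a day of investigation without a solution, I tried deploying with Helm instead, and that worked.

To deploy the NFS provisioner with Helm, see the post "Setting up an NFS StorageClass with Helm (installing Helm) (pitfalls)". You need to change the NFS server address and path. To bind a PVC to this StorageClass, simply set the StorageClass name to "nfs-client".

PS: I compared that post's manifests with what Helm deploys. That post has no Role and RoleBinding; otherwise the two are largely the same.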
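For reference, a Helm-based install along these lines might look like the sketch below. It assumes the nfs-subdir-external-provisioner chart from the kubernetes-sigs repository, a release named nfs (inferred from the provisioner name cluster.local/nfs-nfs-subdir-external-provisioner shown by kubectl get sc), and the server address and export path used in this lab. The values file name and the function name are hypothetical.

```shell
#!/usr/bin/env bash
# Values for the nfs-subdir-external-provisioner chart.
# Server address and path are the lab's NFS export; adjust for your environment.
cat > nfs-values.yaml <<'EOF'
nfs:
  server: 192.168.100.200
  path: /home/k8s-nfs/data
storageClass:
  name: nfs-client
EOF

# install_nfs_provisioner: add the chart repository and install the release.
# The release name "nfs" is an assumption inferred from the provisioner name.
install_nfs_provisioner() {
  helm repo add nfs-subdir-external-provisioner \
    https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
  helm repo update
  helm install nfs nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
    -f nfs-values.yaml
}
```

Call install_nfs_provisioner on a host with cluster access, then check the result with kubectl get sc.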

root@k8s-master1:/home/k8s-nfs/data# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-nfs-subdir-external-provisioner Delete Immediate true 34m

root@k8s-master1:/home/k8s-nfs/data# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-for-storageclass Bound pvc-e4e3ab4b-11a4-4b3b-a3f0-77c6737cfbb6 500Mi RWX nfs-client 3m26s

root@k8s-master1:/home/k8s-nfs/data# ls

default-pvc-for-storageclass-pvc-e4e3ab4b-11a4-4b3b-a3f0-77c6737cfbb6

root@k8s-master1:/home/k8s-nfs/data#
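A claim matching the output above (name pvc-for-storageclass, 500Mi, ReadWriteMany, StorageClass nfs-client) could be written as follows. This is a reconstruction from the kubectl get pvc output rather than my original manifest; the file name and the create_pvc helper are hypothetical.

```shell
#!/usr/bin/env bash
# Write a PVC manifest that requests storage from the nfs-client StorageClass.
cat > pvc-for-storageclass.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-for-storageclass
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 500Mi
EOF

# create_pvc: apply the claim and show its status; expect STATUS to become Bound.
create_pvc() {
  kubectl apply -f pvc-for-storageclass.yaml
  kubectl get pvc pvc-for-storageclass
}
```

Once the claim is bound, the provisioner creates a directory named after the namespace, claim, and volume under the NFS export, as the ls output above shows.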

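5. Deploying nginx-ingress in the k8s cluster

1. For setting up ingress-nginx, I followed my own earlier post, "k8s study notes 5: deploying and using ingress-nginx-controller (v1.3.0)", which saved a lot of time. The ingress-nginx controller in this cluster is v1.4.0.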

root@k8s-master1:~/k8s/ingress-nginx# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-fpvp8 0/1 Completed 0 9m9s 10.244.1.4 k8s-node1 <none> <none>

ingress-nginx-admission-patch-c472b 0/1 Completed 0 9m9s 10.244.1.5 k8s-node1 <none> <none>

ingress-nginx-controller-9zjq9 1/1 Running 0 9m9s 192.168.100.201 k8s-node1 <none> <none>

ingress-nginx-controller-qkkll 1/1 Running 0 5m37s 192.168.100.202 k8s-node2 <none> <none>

root@k8s-master1:~/k8s/ingress-nginx#

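6. Deploying metrics server in the k8s cluster

To deploy metrics server, follow my earlier post, "k8s study notes 3: setting up k8s metrics server".

After deployment, the output looks like this: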

root@k8s-master1:~/k8s/metrics-server# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master1 487m 12% 2483Mi 65%

k8s-node1 1251m 10% 8580Mi 54%

k8s-node2 182m 2% 3234Mi 43%

root@k8s-master1:~/k8s/metrics-server# kubectl top pods -n monitoring

NAME CPU(cores) MEMORY(bytes)

alertmanager-main-0 7m 30Mi

alertmanager-main-1 7m 30Mi

alertmanager-main-2 7m 30Mi

blackbox-exporter-6d897d5f66-ptkz2 1m 23Mi

grafana-b97f877f9-kns4l 11m 65Mi

kube-state-metrics-76c8879968-96npl 2m 38Mi

node-exporter-fqd5v 12m 19Mi

node-exporter-j45gv 10m 25Mi

node-exporter-kxnpc 5m 20Mi

prometheus-adapter-5d496859bc-5cg6x 10m 29Mi

prometheus-adapter-5d496859bc-gdtmq 7m 27Mi

prometheus-k8s-0 23m 310Mi

prometheus-k8s-1 21m 319Mi

prometheus-operator-7876d45fb6-2plrq 2m 41Mi

root@k8s-master1:~/k8s/metrics-server#

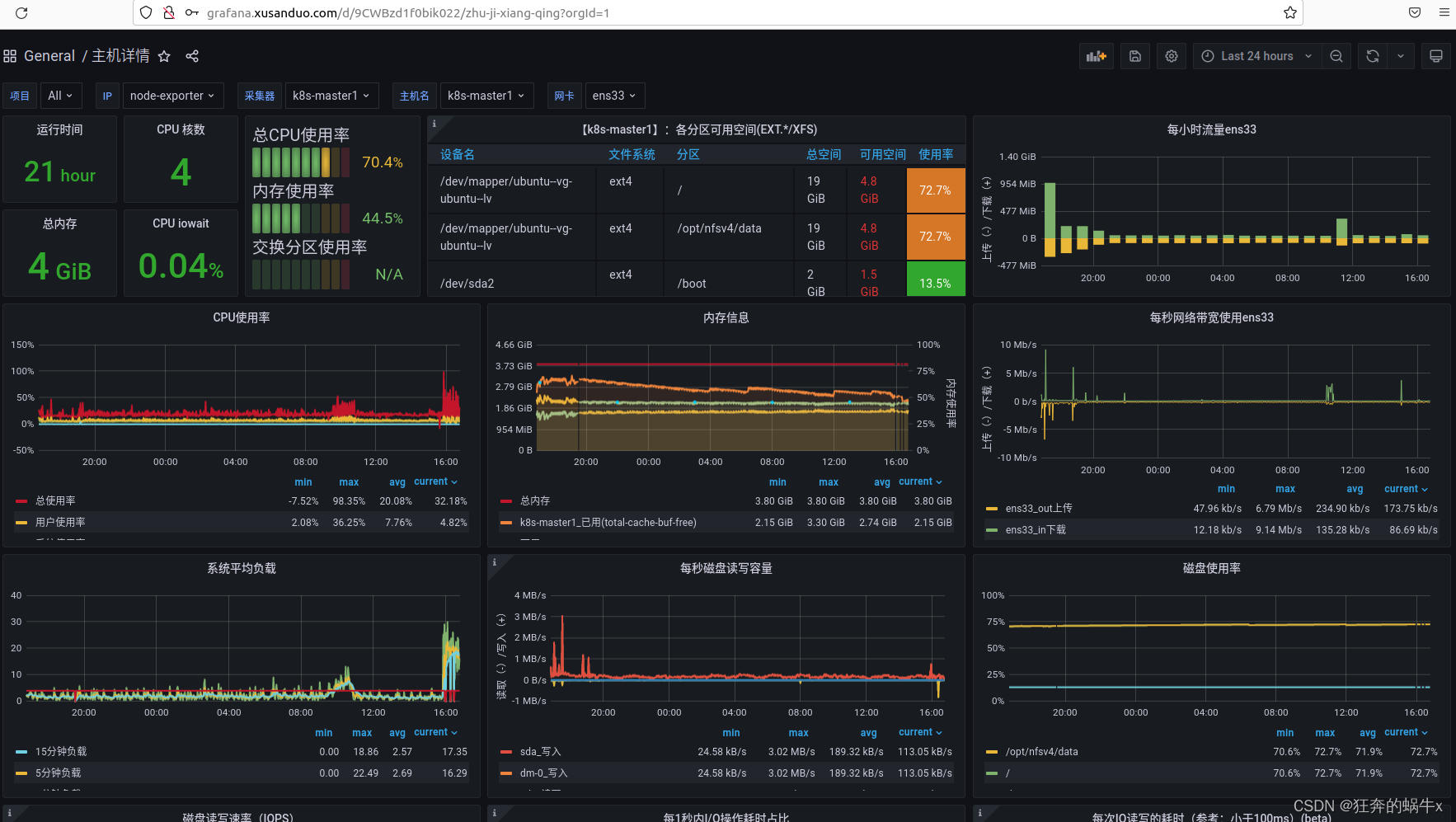

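7. Deploying kube-prometheus in the k8s cluster

For setting up kube-prometheus for cluster monitoring, I again followed my earlier post, "k8s study notes 6: setting up kube-prometheus monitoring".

Tips:

1. The images k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.6.0 and registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.10.0 could not be pulled. By searching, I found dyrnq/kube-state-metrics:v2.6.0 and v5cn/prometheus-adapter:v0.10.0 and pulled those instead.

2. There is no need to expose the Grafana and Prometheus services through NodePort; both can be reached through ingress-nginx. The configuration is as follows: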

root@k8s-master1:~/k8s/ingress# cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  namespace: monitoring
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.xusanduo.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
  - host: prometheus.xusanduo.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090

root@k8s-master1:~/k8s/ingress#
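Regarding tip 1, one way to use the substitute images without editing the kube-prometheus manifests is to pull each mirror and retag it under the name the manifests expect. This is a sketch under a few assumptions: Docker is the container runtime on each node (as with cri-dockerd here), the pods keep the default IfNotPresent pull policy for tagged images, and you trust these third-party mirrors. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# retag MIRROR TARGET: pull MIRROR and tag it locally as TARGET.
retag() {
  docker pull "$1" && docker tag "$1" "$2"
}

# retag_monitoring_images: apply retag to the two images named in tip 1.
# Run on every node that may schedule these pods.
retag_monitoring_images() {
  retag dyrnq/kube-state-metrics:v2.6.0 \
    k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.6.0
  retag v5cn/prometheus-adapter:v0.10.0 \
    registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.10.0
}
```

The alternative is to change the image fields in the manifests to the mirror names, which avoids touching each node.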

List the pods, services, and other resources in the monitoring namespace:

root@k8s-master1:~/k8s/ingress# kubectl get all -n monitoring

NAME READY STATUS RESTARTS AGE

pod/alertmanager-main-0 2/2 Running 4 (17m ago) 24m

pod/alertmanager-main-1 2/2 Running 5 (15m ago) 24m

pod/alertmanager-main-2 2/2 Running 4 (17m ago) 24m

pod/blackbox-exporter-6d897d5f66-ptkz2 3/3 Running 0 24m

pod/grafana-b97f877f9-kns4l 1/1 Running 0 24m

pod/kube-state-metrics-76c8879968-96npl 3/3 Running 0 24m

pod/node-exporter-fqd5v 2/2 Running 0 24m

pod/node-exporter-j45gv 2/2 Running 0 24m

pod/node-exporter-kxnpc 2/2 Running 0 24m

pod/prometheus-adapter-5d496859bc-5cg6x 1/1 Running 0 24m

pod/prometheus-adapter-5d496859bc-gdtmq 1/1 Running 0 24m

pod/prometheus-k8s-0 2/2 Running 0 24m

pod/prometheus-k8s-1 2/2 Running 0 24m

pod/prometheus-operator-7876d45fb6-2plrq 2/2 Running 0 24m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager-main ClusterIP 10.110.98.190 <none> 9093/TCP,8080/TCP 24m

service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 24m

service/blackbox-exporter ClusterIP 10.102.133.171 <none> 9115/TCP,19115/TCP 24m

service/grafana ClusterIP 10.97.155.183 <none> 3000/TCP 24m

service/kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 24m

service/node-exporter ClusterIP None <none> 9100/TCP 24m

service/prometheus-adapter ClusterIP 10.97.164.28 <none> 443/TCP 24m

service/prometheus-k8s ClusterIP 10.98.59.39 <none> 9090/TCP,8080/TCP 24m

service/prometheus-operated ClusterIP None <none> 9090/TCP 24m

service/prometheus-operator ClusterIP None <none> 8443/TCP 24m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/node-exporter 3 3 3 3 3 kubernetes.io/os=linux 24m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/blackbox-exporter 1/1 1 1 24m

deployment.apps/grafana 1/1 1 1 24m

deployment.apps/kube-state-metrics 1/1 1 1 24m

deployment.apps/prometheus-adapter 2/2 2 2 24m

deployment.apps/prometheus-operator 1/1 1 1 24m

NAME DESIRED CURRENT READY AGE

replicaset.apps/blackbox-exporter-6d897d5f66 1 1 1 24m

replicaset.apps/grafana-b97f877f9 1 1 1 24m

replicaset.apps/kube-state-metrics-76c8879968 1 1 1 24m

replicaset.apps/prometheus-adapter-5d496859bc 2 2 2 24m

replicaset.apps/prometheus-operator-7876d45fb6 1 1 1 24m

NAME READY AGE

statefulset.apps/alertmanager-main 3/3 24m

statefulset.apps/prometheus-k8s 2/2 24m

root@k8s-master1:~/k8s/ingress#

Check the status of Grafana and Prometheus:

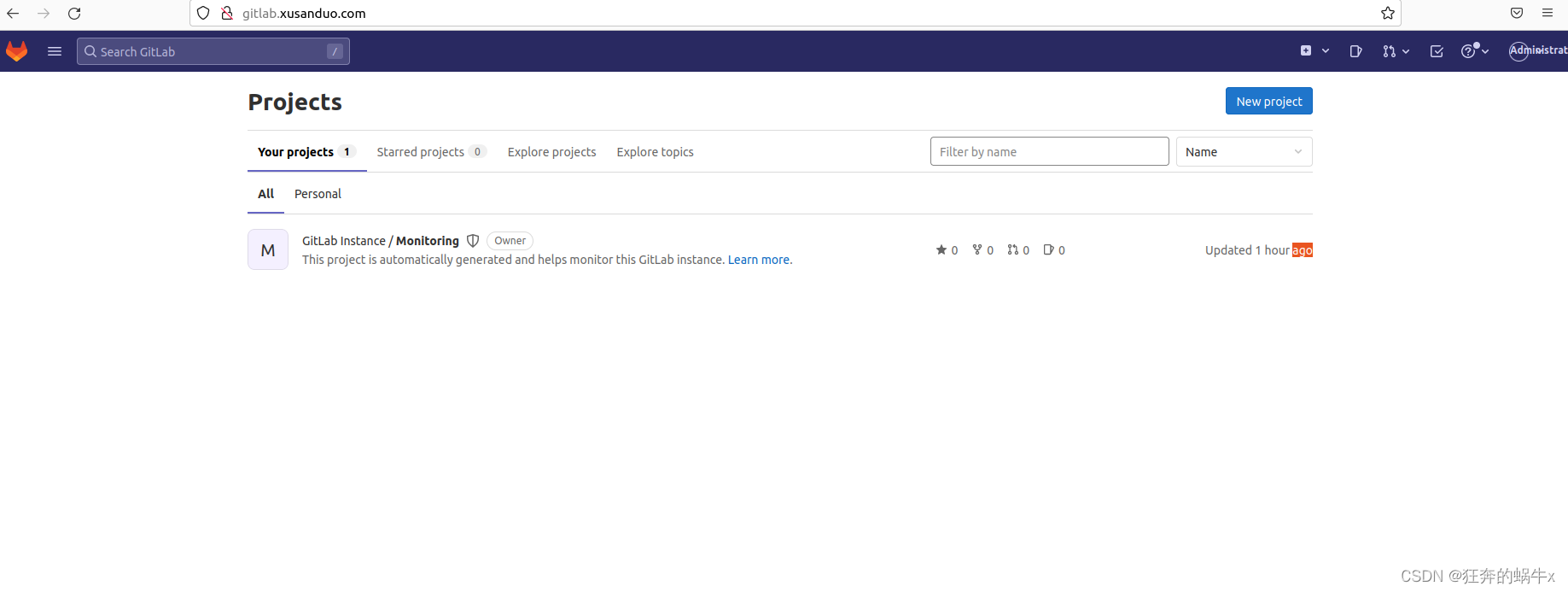
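The same check can be done from a terminal before any DNS or hosts entries exist, by sending the Host header straight to a node that runs the ingress controller. A minimal sketch: it assumes the controller listens on port 80 of 192.168.100.201 (the controller pod IPs above equal the node IPs, which suggests host networking), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# check_host HOST [NODE_IP]: request "/" through the ingress controller
# and print only the HTTP status code.
check_host() {
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Host: $1" "http://${2:-192.168.100.201}/"
}

# check_monitoring_ingress: probe both hosts defined in the Ingress above.
check_monitoring_ingress() {
  check_host grafana.xusanduo.com
  check_host prometheus.xusanduo.com
}
```

A 200 or a redirect status suggests the Ingress rule routes to the service; a 404 from nginx usually means the Host header matched no rule.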

8. Deploying GitLab in the k8s cluster

Reference: "Deploying GitLab on k8s". The linked post has no Ingress; this article adds one and makes a few small changes.

root@k8s-master1:~/k8s/gitlab# cat gitlab-deploy.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
spec:
  ingressClassName: nginx
  rules:
  - host: gitlab.xusanduo.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: gitlab
            port:
              number: 80
---
apiVersion: v1
kind: Service
metadata:
  name: gitlab
spec:
  # type: NodePort
  ports:
  # ports exposed by the Service
  - port: 443
    targetPort: 443
    name: gitlab443
  - port: 80
    targetPort: 80
    name: gitlab80
  - port: 22
    targetPort: 22
    name: gitlab22
  selector:
    app: gitlab
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitlab
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gitlab
  revisionHistoryLimit: 2
  template:
    metadata:
      labels:
        app: gitlab
    spec:
      containers:
      # application image
      - image: gitlab/gitlab-ce
        name: gitlab
        imagePullPolicy: IfNotPresent
        # container ports
        ports:
        - containerPort: 443
          name: gitlab443
        - containerPort: 80
          name: gitlab80
        - containerPort: 22
          name: gitlab22
        volumeMounts:
        # GitLab persistent storage
        - name: gitlab-persistent-config
          mountPath: /etc/gitlab
        - name: gitlab-persistent-logs
          mountPath: /var/log/gitlab
        - name: gitlab-persistent-data
          mountPath: /var/opt/gitlab
      volumes:
      # use NFS network storage
      - name: gitlab-persistent-config
        nfs:
          server: 192.168.100.200
          path: /home/k8s-nfs/gitlab/config
      - name: gitlab-persistent-logs
        nfs:
          server: 192.168.100.200
          path: /home/k8s-nfs/gitlab/logs
      - name: gitlab-persistent-data
        nfs:
          server: 192.168.100.200
          path: /home/k8s-nfs/gitlab/data

root@k8s-master1:~/k8s/gitlab#

After a successful deployment:

root@k8s-master1:/home/k8s-nfs/gitlab# ls

config data logs

root@k8s-master1:/home/k8s-nfs/gitlab# cd config/

root@k8s-master1:/home/k8s-nfs/gitlab/config# ls

gitlab-secrets.json gitlab.rb initial_root_password ssh_host_ecdsa_key ssh_host_ecdsa_key.pub ssh_host_ed25519_key ssh_host_ed25519_key.pub ssh_host_rsa_key ssh_host_rsa_key.pub trusted-certs

root@k8s-master1:/home/k8s-nfs/gitlab/config# ls ../data/

alertmanager bootstrapped gitaly gitlab-exporter gitlab-rails gitlab-workhorse nginx postgresql public_attributes.json trusted-certs-directory-hash

backups git-data gitlab-ci gitlab-kas gitlab-shell logrotate postgres-exporter prometheus redis

root@k8s-master1:/home/k8s-nfs/gitlab/config# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/gitlab-556ccb5864-cw6zl 1/1 Running 0 19m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/gitlab ClusterIP 10.98.219.97 <none> 443/TCP,80/TCP,22/TCP 56m

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d18h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/gitlab 1/1 1 1 56m

NAME DESIRED CURRENT READY AGE

replicaset.apps/gitlab-556ccb5864 1 1 1 56m

root@k8s-master1:/home/k8s-nfs/gitlab/config#

Notes

1. For a private Docker deployment of GitLab and its email configuration, see:

https://blog.csdn.net/IT_rookie_newbie/article/details/126674484

2. Disabling sign-up in GitLab

http://t.zoukankan.com/mengyu-p-6780616.html

3. Changing the GitLab root password

https://blog.csdn.net/qq_50247813/article/details/125072969

https://docs.gitlab.com/ee/security/reset_user_password.html#reset-your-root-password
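For note 3, the first login and a later reset can both be done from the command line. The sketch below assumes the Deployment is named gitlab in the current namespace, as in the manifest above; the function names are hypothetical. The initial_root_password file is the one visible in the config directory listing, and the rake task is the one described in the GitLab documentation linked above.

```shell
#!/usr/bin/env bash
# gitlab_initial_password: print the auto-generated root password line.
# GitLab removes this file some time after the first reconfigure, so read it early.
gitlab_initial_password() {
  kubectl exec deploy/gitlab -- grep '^Password:' /etc/gitlab/initial_root_password
}

# gitlab_reset_root_password: interactively set a new password for the root user.
gitlab_reset_root_password() {
  kubectl exec -it deploy/gitlab -- gitlab-rake "gitlab:password:reset[root]"
}
```

Because /etc/gitlab is mounted from NFS, the same file can also be read on k8s-master1 at /home/k8s-nfs/gitlab/config/initial_root_password.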

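9. Deploying Jenkins in the k8s cluster

For deployment, see the post "The most detailed tutorial I have seen on deploying Jenkins in k8s". The post is fairly old and its manifests cannot be used as-is; my modified manifests are below: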

apiVersion: v1
kind: Service
metadata:
  name: jenkins
  labels:
    app: jenkins
spec:
  type: NodePort
  ports:
  - name: http
    port: 8080          # service port
    targetPort: 8080
    nodePort: 32001     # expose the Jenkins port via NodePort
  - name: jnlp
    port: 50000         # agent port
    targetPort: 50000
    nodePort: 32002
  selector:
    app: jenkins
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  labels:
    app: jenkins
spec:
  selector:
    matchLabels:
      app: jenkins
  replicas: 1
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      nodeName: k8s-node1
      dnsPolicy: None
      dnsConfig:
        nameservers:
        - 10.96.0.10
        - 114.114.114.114
        - 8.8.8.8
        searches:
        - default.svc.cluster.local
        options:
        - name: ndots
          value: "5"
      containers:
      - name: jenkins
        image: jenkinsci/blueocean
        securityContext:
          runAsUser: 0        # run the container as root
          privileged: true    # privileged mode
        ports:
        - name: http
          containerPort: 8080
        - name: jnlp
          containerPort: 50000
        volumeMounts:         # directories to mount
        - name: data
          mountPath: /var/jenkins_home
        - name: docker-sock
          mountPath: /var/run/docker.sock
      volumes:
      - name: data
        nfs:
          server: 192.168.100.200
          path: /home/k8s-nfs/jenkins
      # - name: data
      #   hostPath:
      #     path: /home/jenkins
      - name: docker-sock
        hostPath:
          path: /var/run/docker.sock

The GitLab and Jenkins Ingress rules are now merged into a single file for easier management:

root@k8s-master1:~/k8s/ingress# cat ingress-default.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: gitlab.xusanduo.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: gitlab
            port:
              number: 80
  - host: jenkins.xusanduo.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: jenkins
            port:
              number: 8080

root@k8s-master1:~/k8s/ingress#

Problem 1 encountered when installing Jenkins:

2022-10-10 06:22:25.168+0000 [id=28] WARNING hudson.model.UpdateCenter#updateDefaultSite: Upgrading Jenkins. Failed to update the default Update Site 'default'. Plugin upgrades may fail.

java.net.UnknownHostException: updates.jenkins.io

at java.base/java.net.AbstractPlainSocketImpl.connect(Unknown Source)

at java.base/java.net.SocksSocketImpl.connect(Unknown Source)

at java.base/java.net.Socket.connect(Unknown Source)

at java.base/sun.security.ssl.SSLSocketImpl.connect(Unknown Source)

at java.base/sun.net.NetworkClient.doConnect(Unknown Source)

at java.base/sun.net.www.http.HttpClient.openServer(Unknown Source)

at java.base/sun.net.www.http.HttpClient.openServer(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsClient.<init>(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsClient.New(Unknown Source)

at java.base/sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.getNewHttpClient(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.plainConnect0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.plainConnect(Unknown Source)

at java.base/sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(Unknown Source)

at hudson.model.DownloadService.loadJSON(DownloadService.java:122)

at hudson.model.UpdateSite.updateDirectlyNow(UpdateSite.java:219)

at hudson.model.UpdateSite.updateDirectlyNow(UpdateSite.java:214)

at hudson.model.UpdateCenter.updateDefaultSite(UpdateCenter.java:2667)

at jenkins.install.SetupWizard.init(SetupWizard.java:206)

at jenkins.install.InstallState$InitialSecuritySetup.initializeState(InstallState.java:182)

at jenkins.model.Jenkins.setInstallState(Jenkins.java:1131)

at jenkins.install.InstallUtil.proceedToNextStateFrom(InstallUtil.java:98)

at jenkins.install.InstallState$Unknown.initializeState(InstallState.java:88)

at jenkins.model.Jenkins$15.run(Jenkins.java:3497)

at org.jvnet.hudson.reactor.TaskGraphBuilder$TaskImpl.run(TaskGraphBuilder.java:175)

at org.jvnet.hudson.reactor.Reactor.runTask(Reactor.java:305)

at jenkins.model.Jenkins$5.runTask(Jenkins.java:1158)

at org.jvnet.hudson.reactor.Reactor$2.run(Reactor.java:222)

at org.jvnet.hudson.reactor.Reactor$Node.run(Reactor.java:121)

at jenkins.security.ImpersonatingExecutorService$1.run(ImpersonatingExecutorService.java:68)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

2022-10-10 06:22:25.168+0000 [id=43] INFO hudson.util.Retrier#start: The attempt #1 to do the action check updates server failed with an allowed exception:

java.net.UnknownHostException: updates.jenkins.io

at java.base/java.net.AbstractPlainSocketImpl.connect(Unknown Source)

at java.base/java.net.SocksSocketImpl.connect(Unknown Source)

at java.base/java.net.Socket.connect(Unknown Source)

at java.base/sun.security.ssl.SSLSocketImpl.connect(Unknown Source)

at java.base/sun.net.NetworkClient.doConnect(Unknown Source)

at java.base/sun.net.www.http.HttpClient.openServer(Unknown Source)

at java.base/sun.net.www.http.HttpClient.openServer(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsClient.<init>(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsClient.New(Unknown Source)

at java.base/sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.getNewHttpClient(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.plainConnect0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.plainConnect(Unknown Source)

at java.base/sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(Unknown Source)

at hudson.model.DownloadService.loadJSON(DownloadService.java:122)

at hudson.model.UpdateSite.updateDirectlyNow(UpdateSite.java:219)

at hudson.model.UpdateSite.updateDirectlyNow(UpdateSite.java:214)

at hudson.PluginManager.checkUpdatesServer(PluginManager.java:1960)

at hudson.util.Retrier.start(Retrier.java:62)

at hudson.PluginManager.doCheckUpdatesServer(PluginManager.java:1931)

at jenkins.DailyCheck.execute(DailyCheck.java:93)

at hudson.model.AsyncPeriodicWork.lambda$doRun$1(AsyncPeriodicWork.java:102)

at java.base/java.lang.Thread.run(Unknown Source)

Solution 1 (not recommended):

Add a static host entry to the deployment manifest:

.............
      hostAliases:
      - ip: 52.202.51.185
        hostnames:
        - "updates.jenkins.io"
............

Solution 2 (recommended):

Add DNS server addresses to the deployment manifest. For DNS settings in k8s, see the post "k8s DNS settings" (note: change the namespace in the search domain to match yours).

......................
      dnsPolicy: None
      dnsConfig:
        nameservers:
        - 10.96.0.10
        - 114.114.114.114
        - 8.8.8.8
        searches:
        - default.svc.cluster.local
        options:
        - name: ndots
          value: "5"
.......................

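PS: When Jenkins is deployed with plain Docker this problem does not occur, while the k8s deployment fails to resolve the update center address. The main cause is that the two end up with different DNS servers.

With the k8s deployment: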

root@k8s-master1:~/k8s/jenkins# kubectl exec -it jenkins-6c8bf954bd-dpgt5 -- bash

bash-5.1# cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local 114.114.114.114

options ndots:5

With the plain Docker deployment:

(base) root@k8s-node1:/home/jenkins# docker exec -it 4b8 bash

bash-5.1# cat /etc/resolv.conf

nameserver 192.168.100.1

search 114.114.114.114

bash-5.1# exit
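To see whether the cluster DNS can resolve external names at all, independent of Jenkins, a throwaway pod is a quick test. This is a sketch with assumptions: the pod name dns-check and the busybox:1.36 image are my choices for illustration, the Deployment is named jenkins as in the manifest above, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# dns_check [NAME]: resolve NAME from a temporary pod that uses the default
# cluster DNS policy, then delete the pod.
dns_check() {
  kubectl run dns-check --rm -i --restart=Never --image=busybox:1.36 \
    -- nslookup "${1:-updates.jenkins.io}"
}

# jenkins_resolv_conf: show the resolver configuration the Jenkins pod actually got.
jenkins_resolv_conf() {
  kubectl exec deploy/jenkins -- cat /etc/resolv.conf
}
```

If dns_check fails for external names while cluster names such as kubernetes.default resolve, the upstream forwarding of the cluster DNS is the likely culprit, and the dnsConfig override in solution 2 works around it for this one pod.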

After Jenkins is deployed successfully:
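On first start, the Jenkins setup wizard asks for an initial admin password that Jenkins writes under its home directory. A minimal sketch for fetching it, assuming the Deployment is named jenkins as in the manifest above (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# jenkins_initial_password: print the password requested by the setup wizard.
jenkins_initial_password() {
  kubectl exec deploy/jenkins -- cat /var/jenkins_home/secrets/initialAdminPassword
}
```

Since /var/jenkins_home is mounted from NFS, the same file should also be readable on k8s-master1 under /home/k8s-nfs/jenkins/secrets/initialAdminPassword.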

10. Deploying SonarQube in the k8s cluster

a. Deploying with Helm

I currently install SonarQube with Helm; see the post "Installing SonarQube (bitnami) 9.3 with Helm".

The my-values.yaml file I added is:

root@k8s-master1:~/k8s/helm3/sonarqube# cat my-values.yaml

global:
  storageClass: "nfs-client"
sonarqubeUsername: admin
sonarqubePassword: "www123456"
service:
  type: ClusterIP
ingress:
  enabled: true
  ingressClassName: "nginx"
  hostname: sonarqube.xusanduo.com
persistence:
  enabled: true
  size: 2Gi
postgresql:
  enabled: true
  persistence:
    enabled: true
    size: 2Gi

root@k8s-master1:~/k8s/helm3/sonarqube#
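With that values file in place, the install itself might look like the sketch below. The release name sonarqube and the use of the current namespace are assumptions for illustration, as is the function name; the chart is the Bitnami one the referenced post uses.

```shell
#!/usr/bin/env bash
# install_sonarqube: add the Bitnami repository and install the chart
# with the overrides from my-values.yaml in the current directory.
install_sonarqube() {
  helm repo add bitnami https://charts.bitnami.com/bitnami
  helm repo update
  helm install sonarqube bitnami/sonarqube -f my-values.yaml
}

# sonarqube_status: show the release status and the PVCs created through nfs-client.
sonarqube_status() {
  helm status sonarqube
  kubectl get pvc
}
```

The values file stores the admin password in plain text, so keep it out of shared repositories or replace it after the first login.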

b. Deploying with plain k8s manifests

For a manifest-based deployment, see the post "Deploying SonarQube on k8s".

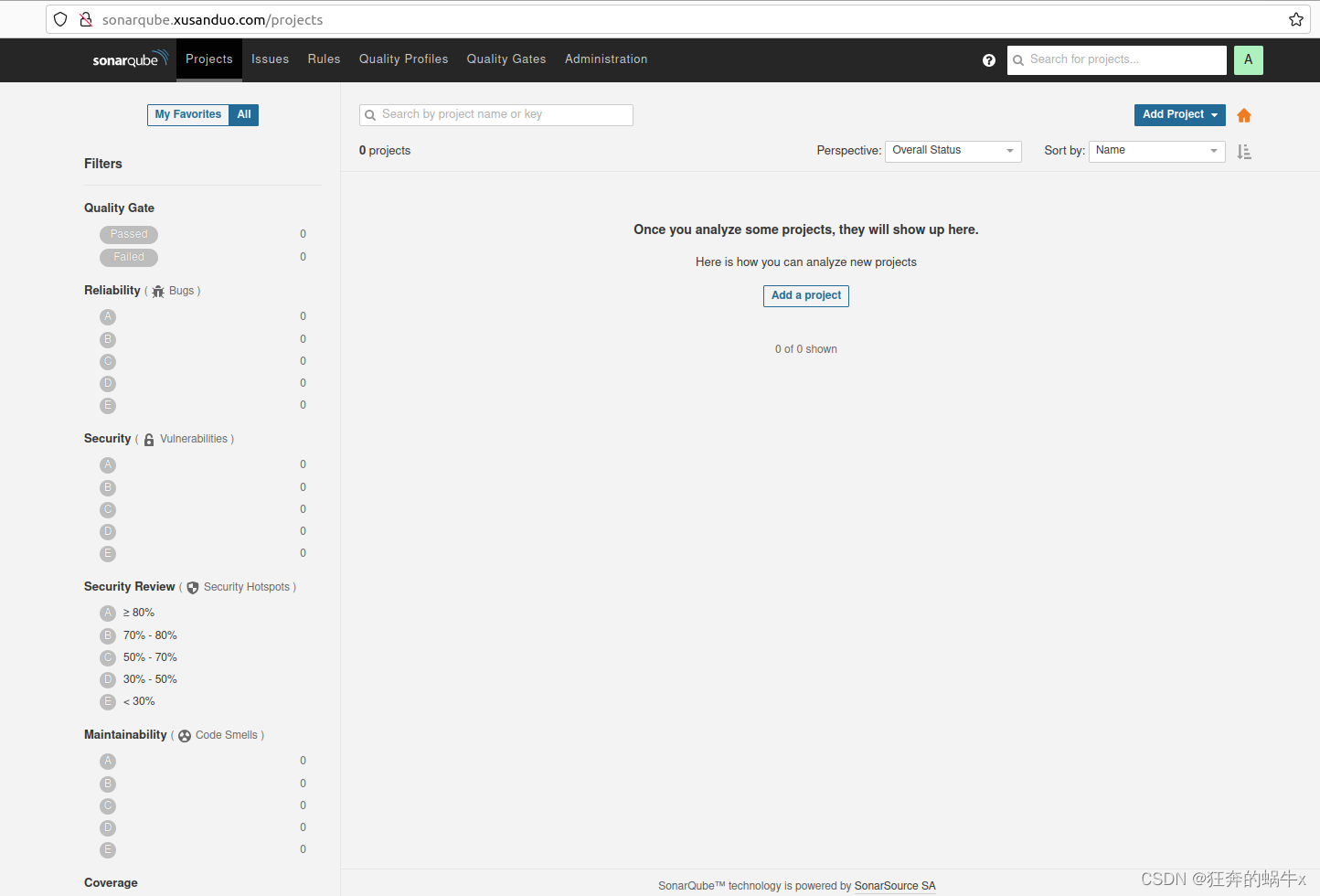

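c. Verifying the deployment

The site after a successful deployment:

III. Open issues

1. Some of these services could also be installed with Helm.

IV. References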

VMware official site

https://www.vmware.com/products/workstation-pro/workstation-pro-evaluation.html

Installing VMware Tools on Ubuntu

https://blog.csdn.net/MR_lihaonan/article/details/125479384

VMware virtual machine disk cannot be opened after a reboot

https://blog.csdn.net/o396032767/article/details/84805532

k8s study notes 2: setting up a private Harbor registry

https://blog.csdn.net/weixin_43501172/article/details/125937610

Installing NFS on Ubuntu 20.04 Server

https://www.jianshu.com/p/391822b208f0

mount.nfs: access denied by server while mounting

https://blog.csdn.net/ding_xc/article/details/123183499

How to configure NFS on Ubuntu (focus on the permission settings)

http://www.manongjc.com/detail/51-yuyinvlieoydurz.html

Recovering a forgotten Jenkins administrator password

http://www.ay1.cc/article/10151.html
