前言

数据集有点大,大概是3千万的数据,1G左右,如果用机器学习方法,预计需要内存32G左右,至少需要24G,或者自己分批慢慢跑特征,很多人用自然语言处理的时序模型来做本次比赛,需要的机器会更高一些,是在没机器跑了。本代码是在腾讯钛平台上跑的,用的8核32G的机器。

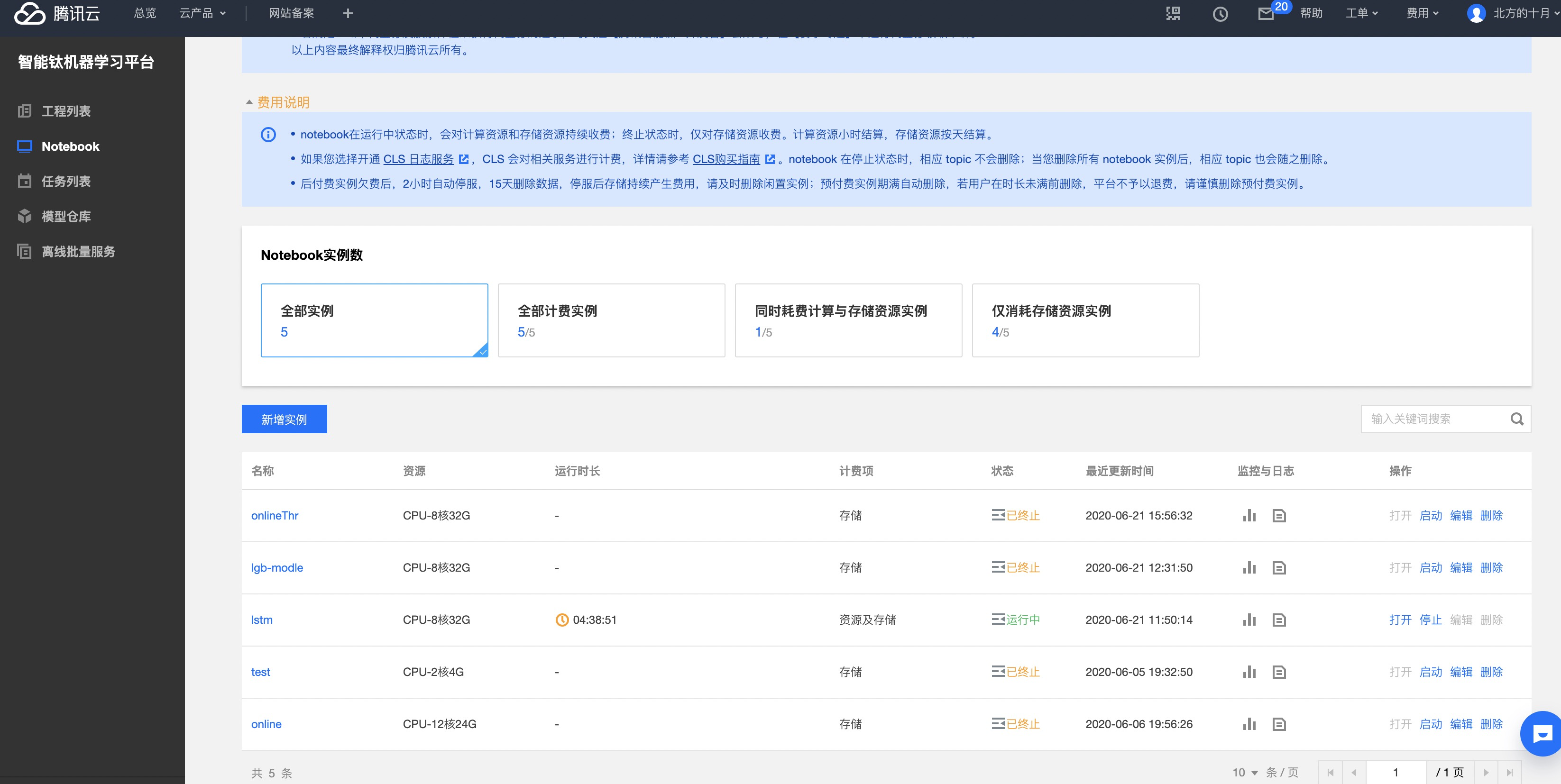

关于腾讯钛平台

这个平台代码和数据是分开的,代码可以在notebook平台、可视化拖拽机器平台等部署,这两种方式都可以,我这里利用的notebook平台,界面如下:

这里要强调一下,数据集是在COS存储平台,notebook会打通存储平台,通过代码的方式去读取COS文件,也就是说你要把数据集上传到COS平台,然后在钛平台上通过代码去读取文件,代码如下:

import os

from qcloud_cos import CosConfig

from qcloud_cos import CosS3Client

from ti.utils import get_temporary_secret_and_token

#### 指定本地文件路径,可根据需要修改。

local_file = "/home/tione/notebook/df_fea_result.csv"

#### 用户的存储桶,修改为存放所需数据文件的存储桶,存储桶获取参考腾讯云对象存储

bucket="game"

#### 用户的数据,修改为对应的数据文件路径,文件路径获取参考腾讯云对象存储

data_key="contest/df_fea_result.csv"

#### 获取用户临时密钥

secret_id, secret_key, token = get_temporary_secret_and_token()

config = CosConfig(Region=os.environ.get('REGION'), SecretId=secret_id, SecretKey=secret_key, Token=token, Scheme='https')

client = CosS3Client(config)

#### 获取文件到本地

response = client.get_object(

Bucket=bucket,

Key=data_key,

)

response['Body'].get_stream_to_file(local_file)

data_key="contest/df_fea_tfidf.csv"

# cos 文件会被复制到本地文件,所以local_file 就是本地文件,notebook中要读取的是本地文件

local_file = "/home/tione/notebook/df_fea_tfidf.csv"

response = client.get_object(

Bucket=bucket,

Key=data_key,

)

response['Body'].get_stream_to_file(local_file)

print("load data file over ")

这里跟kaggle平台、谷歌平台有点类似,只不过方式有点差别。

钛平台安装库

!pip install lightgbm

比赛的问题描述

- 输入:以用户在广告系统中的交互行为作为输入,用户点击了哪些广告、素材

- 输出:预测用户性别和年龄

数据集

供一组用户在长度为 91 天(3 个月)的时间窗口内的广告点击历史记录作为训练数据集。每条记录中包含了:

- 日期(从 1 到 91)、用户信息

- 年龄,性别

- 被点击的广告的信息:素材 id、广告 id、产品 id、产品类目 id、广告主id、广告主行业 id 等

- 用户当天点击该广告的次数。

测试数据集将会是另一组用户的广告点击历史记录。提供给参赛者的测试数据集中不会包含这些用户的年龄和性别信息,输出:给出用户的年龄和性别。

主要的数据集有三个文件:

-

user.csv

‘user_id’,

‘age’:分段表示的用户年龄,取值范围[1-10] ,

‘gender’:用户性别,取值范围[1,2] -

ad.csv

creative_id’

‘ad_id’:该素材所归属的广告的 id,采用类似于 user_id 的方式生成。每个广告可能包

含多个可展示的素材

‘product_id’:该广告中所宣传的产品的 id,采用类似于 user_id 的方式生成。

, ‘product_category’:该广告中所宣传的产品的类别 id

‘advertiser_id’:广告主的 id,采用类似于 user_id 的方式生成

'industry:广告主所属行业的 id -

click_log.csv

time:天粒度的时间,整数值,取值范围[1, 91]

user_id:从1到N随机编号生成的不重复的加密的用户id,其中N为用户总数目(训

练集和测试集)

creative_id:用户点击的广告素材的 id,采用类似于 user_id 的方式生成。

click_times:点击次数

思路

就是分类方法,采用lgb方法来做非分类。

特征提取

数据平铺开,每个用户都有91天的数据,那么

1、91天点击最多的广告信息特征

product_id、product_category、advertiser_id、industry

max 80% 75% 50% 25% 等数据指标

根据性别和年龄,反推 ,比如男性 最喜欢点击的product_id、product_category、advertiser_id、industry,这样根据EDA信息来推导来获取特征。

特征代码

直接上代码吧,有些特征想到了没写,因为机器是在跑不动。

!pip install wget

import wget, tarfile

import zipfile

def getData(file):

filename = wget.download(file)

print(filename)

zFile = zipfile.ZipFile(filename, "r")

res=[]

for fileM in zFile.namelist():

zFile.extract(fileM, "./")

res.append(fileM)

zFile.close();

return res

train_file ="https://tesla-ap-shanghai-1256322946.cos.ap-shanghai.myqcloud.com/cephfs/tesla_common/deeplearning/dataset/algo_contest/train_preliminary.zip"

test_file = "https://tesla-ap-shanghai-1256322946.cos.ap-shanghai.myqcloud.com/cephfs/tesla_common/deeplearning/dataset/algo_contest/test.zip"

train_data_file = getData(train_file)

print("train_data_file = ",train_data_file)

test_data_file = getData(test_file)

print("test_data_file = ",test_data_file)

print("load data file over ")

%matplotlib inline

import pandas as pd

import numpy as np

import os

from tqdm import tqdm

from sklearn.model_selection import StratifiedKFold

from sklearn import metrics

import time

#import lightgbm as lgb

import os, sys, gc, time, warnings, pickle, random

from sklearn.decomposition import TruncatedSVD

from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

#from gensim.models import Word2Vec

import gc

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')

%%time

def q10(x):

return x.quantile(0.1)

def q20(x):

return x.quantile(0.2)

def q30(x):

return x.quantile(0.3)

def q40(x):

return x.quantile(0.4)

def q60(x):

return x.quantile(0.6)

def q70(x):

return x.quantile(0.7)

def q80(x):

return x.quantile(0.8)

def q90(x):

return x.quantile(0.9)

# 组合变量的统计数据

def basic3_digital_features(group):

data = group

fea = []

fea.append(data.mode().values[0])

fea.append(data.max())

fea.append(data.min())

fea.append(data.mean())

fea.append(data.ptp())

fea.append(data.std())

fea.append(data.median())

return fea

# 获取这个特征下的统计次数

def get_fea_max(df,df_train,cols,flag):

for col in cols:

df[col+'_click_sum'] = df.groupby(['user_id',col])['click_times'].transform('sum')

df = df.sort_values([col+'_click_sum','product_id'], ascending=[False,False])

df_new=df.drop_duplicates('user_id', keep='first')

#df_new = df.sort_values([col+'_click_sum','product_id'], ascending=[False,False])[['user_id',col+'_click_sum',col]].groupby('user_id', as_index=False).first()

df_new[str(flag)+'_max_'+col]=df_new[col].astype(int)

df_train = pd.merge(df_train, df_new[['user_id',str(flag)+'_max_'+col]], on='user_id', how='left')

return df_train

def get_fea_time_max(df,cols,flag):

print(" - - "*5+" flag = "+str(flag)+" - - "*5)

timeSplit = getSplitList(df,"time",flag)

df_fea = df[['user_id']].drop_duplicates('user_id')

i=1

for times in timeSplit:

time_set = set(times)

df_new = df.loc[df['time'].isin(time_set)]

#print(" df_new.shape = ",df_new.shape)

df_key=df_new[['user_id']].drop_duplicates('user_id')

df_fea_max = get_fea_max(df_new,df_key,cols,str(flag)+"_"+str(i))

#df_fea = pd.concat([df_fea,df_fea_max],ignore_index=True)

#print("df_fea_max.columns",df_fea_max.columns)

df_fea = pd.merge(df_fea, df_fea_max, on='user_id', how='left')

i=i+1

return df_fea

def get_fea_time(df,df_train,cols):

# df_new = get_fea_time_max(df,cols,1)

# df_train = pd.merge(df_train, df_new, on='user_id', how='left')

# del df_new

df_new = get_fea_time_max(df,cols,7)

df_train = pd.merge(df_train, df_new, on='user_id', how='left')

del df_new

df_new = get_fea_time_max(df,cols,10)

df_train = pd.merge(df_train, df_new, on='user_id', how='left')

del df_new

return df_train

# 点击次数统计

def get_click_max(df,df_train):

df['click_sum'] = df.groupby('user_id')['click_times'].transform('sum')

df_new=df.drop_duplicates('user_id')

df_train = pd.merge(df_train, df_new[['user_id','click_sum']], on='user_id', how='left')

print("get_click_max df_train.shape=",df_train.shape)

return df_train

def get_fea(df):

df_train = df[['user_id']].drop_duplicates('user_id') #.to_frame()

fea_columns=['creative_id', 'ad_id', 'product_id', 'product_category','advertiser_id', 'industry']

df_train = get_fea_time(df,df_train,fea_columns)

#print("df_train.shpe = ",df_train.shape)

df_train = get_click_max(df,df_train)

#df_train = get_statistic_fea(df,df_train,fea_columns)

stat_functions = ['min', 'mean', 'median', 'nunique', q20, q40, q60, q80]

stat_ways = ['min', 'mean', 'median', 'nunique', 'q_20', 'q_40', 'q_60', 'q_80']

feat_col = ['creative_id', 'ad_id','advertiser_id',]

group_tmp = df.groupby('user_id')[feat_col].agg(stat_functions).reset_index()

group_tmp.columns = ['user_id'] + ['{}_{}'.format(i, j) for i in feat_col for j in stat_ways]

df_train = df_train.merge(group_tmp, on='user_id', how='left')

df_train.replace("\N",'0',inplace=True)

return df_train

def get_data_fea(df_train,df_test):

#df_litt = df_train[(df_train['time']<92) & (df_train['time']>88) ]

df_all = pd.concat([df_train,df_test],ignore_index=True)

print("df_all.shape=",df_all.shape)

user_key = df_all[['user_id','age','gender']].drop_duplicates('user_id')#.to_frame()

df_fea = pd.DataFrame()

keys = list(df_all['user_id'].drop_duplicates())

print("keys.keys=",len(keys))

page_size = 100000

user_list = [keys[i:i+page_size] for i in range(0,len(keys),page_size)]

#user_list = np.array(keys).reshape(-1, 10)

i=0

for users in user_list:

i=i+1

print(' i = ',i)

user_set = set(users)

df_new = df_all.loc[df_all['user_id'].isin(user_set)]

df_new_fea = get_fea(df_new)

df_fea = pd.concat([df_fea,df_new_fea],ignore_index=True)

print("df_fea.shape=",df_fea.shape)

del df_new_fea

gc.collect()

df_fea = df_fea.merge(user_key, on='user_id', how='left')

return df_fea

train_data_file = ['train_preliminary/', 'train_preliminary/ad.csv', 'train_preliminary/click_log.csv', 'train_preliminary/user.csv', 'train_preliminary/README']

test_data_file = ['test/', 'test/ad.csv', 'test/click_log.csv', 'test/README']

file_Path = '/Users/zn/Public/work/data/train_preliminary/'

file_Path = "train_preliminary/"

click_file_name = 'click_log.csv'

user_file_name = 'user.csv'

ad_file_name = 'ad.csv'

file_Path_test = "test/"

test_ad_file_name = 'ad.csv'

test_click_file_name = 'click_log.csv'

bool_only_test_flag = False # True False

train_filter = False

df_user =pd.read_csv(file_Path+user_file_name,na_values=['n'])

df_ad =pd.read_csv(file_Path+ad_file_name,na_values=['n'])

df_ad_test =pd.read_csv(file_Path_test+test_ad_file_name,na_values=['n'])

%%time

# 这个数据集最大

def get_data(file_name,flag,sample_flag):

df_click = pd.DataFrame()

nrows = 40000

if sample_flag:

df_click = pd.read_csv(file_name,nrows=nrows,na_values=['n'])

else :

df_click =pd.read_csv(file_name,na_values=['n'])

if flag =="train":

df = pd.merge(df_click,df_ad,on='creative_id', how='left')

df = pd.merge(df,df_user,on='user_id', how='left')

else :

df = pd.merge(df_click,df_ad_test,on='creative_id', how='left')

df = df.fillna(0)

print("df.shape=",df.shape)

return df

sample_flag = False # False True

df_train = get_data(file_Path+click_file_name,"train",sample_flag)

keys = list(df_train['user_id'].drop_duplicates())

print("df_train keys.keys=",len(keys))

df_test = get_data(file_Path_test+test_click_file_name,"test",sample_flag)

keys = list(df_test['user_id'].drop_duplicates())

print("df_test keys.keys=",len(keys))

print(" load data over")

#df_train = df_train[(df_train['time']<92) & (df_train['time']>80) ]

df_test['age'] = -1

df_test['gender'] = -1

df_train.replace("\N",'0',inplace=True)

print("df_train.shape",df_train.shape)

df_test.replace("\N",'0',inplace=True)

print("df_test.shape",df_test.shape)

df_fea = get_data_fea(df_train,df_test)

#df_fea_test = get_data_fea(pd.DataFrame(),df_test)

#df_fea = pd.concat([df_fea_train,df_fea_test],ignore_index=True)

fea_path = 'df_fea_result.csv'

df_fea.to_csv(fea_path, index=None, encoding='utf_8_sig')

print("df_fea.shape = ",df_fea.shape)

from ti import session

ti_session = session.Session()

inputs = ti_session.upload_data(path=fea_path, bucket="game-1253710071", key_prefix="contest")

print("upload over ")

ldg模型

性别和年龄分别预测,建立了两个模型,也可以性别+年龄的方式,这样就20个类搞定。

%matplotlib inline

import pandas as pd

import numpy as np

import os

from tqdm import tqdm

from sklearn.model_selection import StratifiedKFold

from sklearn import metrics

import time

import lightgbm as lgb

import os, sys, gc, time, warnings, pickle, random

from sklearn.decomposition import TruncatedSVD

from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

#from gensim.models import Word2Vec

from ti import session

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')

%%time

def lgb_model(X,y,x_test,user_label,n_class,flag):

params = {

'learning_rate': 0.05,

'boosting_type': 'gbdt',

'objective': 'multiclass',

'metric': 'None',

'num_leaves': 63,

'feature_fraction': 0.8,

'bagging_fraction': 0.8,

'bagging_freq': 5,

'seed': 1,

'bagging_seed': 1,

'feature_fraction_seed': 7,

'min_data_in_leaf': 20,

'num_class': n_class,

'nthread': 8,

'verbose': -1

}

fold = StratifiedKFold(n_splits=5, shuffle=True, random_state=64)

lgb_models = []

lgb_pred = np.zeros((len(user_label),n_class))

lgb_oof = np.zeros((len(X), n_class))

for index, (train_idx, val_idx) in enumerate(fold.split(X, y)):

train_set = lgb.Dataset(X.iloc[train_idx], y.iloc[train_idx])

val_set = lgb.Dataset(X.iloc[val_idx], y.iloc[val_idx])

model = lgb.train(params, train_set, valid_sets=[train_set, val_set], verbose_eval=200)

lgb_models.append(model)

val_pred = model.predict(X.iloc[val_idx])

#result_to_csv(train_label,model.predict(X),"lgb_"+str(index))

lgb_oof[val_idx] = val_pred

val_y = y.iloc[val_idx]

val_pred = np.argmax(val_pred, axis=1)

print(index, 'val f1', metrics.f1_score(val_y, val_pred, average='macro'))

test_pred = model.predict(x_test)

lgb_pred += test_pred/5

oof_new = np.argmax(lgb_oof, axis=1)

print('oof f1', metrics.f1_score(oof_new, y, average='macro'))

pred_new = np.argmax(lgb_pred, axis=1)

sub = user_label[['user_id']]

sub[flag] = pred_new+1

print(sub[flag].value_counts(1))

sub.to_csv(flag+'_result.csv', index=None, encoding='utf_8_sig')

file_Path = "df_fea_result.csv"

df_fea =pd.read_csv(file_Path,na_values=['n'])

print("df_fea.shape = ",df_fea.shape)

print("df_fea.columns = ",df_fea.columns)

#df_fea = pd.read_csv(file_Path,nrows=50000,na_values=['n'])

tfidf_file_Path="df_fea_tfidf.csv"

df_fea_tfidf =pd.read_csv(tfidf_file_Path,na_values=['n'])

print("df_fea_tfidf.shape = ",df_fea_tfidf.shape)

print("df_fea_tfidf.columns = ",df_fea_tfidf.columns)

#df = df_fea.merge(df_fea_tfidf)

fea = df_fea_tfidf.columns

fea_filter= ['age','gender']

fea_merge = [col for col in fea if col not in fea_filter]

df = pd.merge(df_fea,df_fea_tfidf[fea_merge], on="user_id",how='left')

print(" df.shape = ",df.shape)

print(" merge data over ")

%%time

fea = df.columns

fea_filter= ['user_id','age','gender']

fea_train = [col for col in fea if col not in fea_filter]

df_train = df[df['age']> -1]

df_test = df[df['age']==-1]

print(" df_train.shape = ",df_train.shape)

print(" df_test.shape = ",df_test.shape)

X= df_train[fea_train]

y= df_train['age']-1

x_test = df_test[fea_train]

user_label = df_test['user_id']

print("len(user_label)=",len(user_label))

age_class =10

lgb_model(X,y,x_test,df_test,age_class,'predicted_age')

print(" - "*7+" 性别 "+" - "*7)

x_test = df_test[fea_train]

user_label = df_test['user_id']

y= df_train['gender']-1

gender_class = 2

lgb_model(X,y,x_test,df_test,gender_class,'predicted_gender')

age_result = "predicted_age_result.csv"

gender_result = "predicted_gender_result.csv"

df_age_test = pd.read_csv(age_result)

df_gender_test = pd.read_csv(gender_result)

print(df_age_test.head(3))

df = pd.merge(df_age_test,df_gender_test[['user_id','predicted_gender']],on='user_id',how='left')

df.to_csv('submission.csv', index=None, encoding='utf_8_sig')

总结

多学习开源的代码,了解别人的思路,继续前进。

参考博客

腾讯广告大赛

钛平台

智能钛机器学习平台

最后

以上就是安详鞋子最近收集整理的关于2020腾讯广告大赛 :13.5 baseline的全部内容,更多相关2020腾讯广告大赛内容请搜索靠谱客的其他文章。

发表评论 取消回复