[FFmpeg Programming in Practice] (2) Extracting Every Frame of a Video File's Video Stream as an Image (Advanced) (C)

- 1. Code Changes

- 2. Run Results

- 3. Complete Code

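The previous article, "[FFmpeg Decoding in Practice] (1) Separating the Audio and Video Streams of a Video File", implemented demuxing of a video file. Taking MP4 as an example, demuxing yields an H264 video stream and an AAC audio stream.

Building on that code, this article changes it so that, instead of saving an H264 file, every frame is saved as a separate yuv420p image.

All files of the VS2019 project for this article have been packaged and uploaded to CSDN as "VS2019-解码视频-工程所有文件.zip". Note that the FFmpeg lib path and header path must be configured in the project before it can be used.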

1. Code Changes

The change is actually simple and touches only a few places, as shown below:

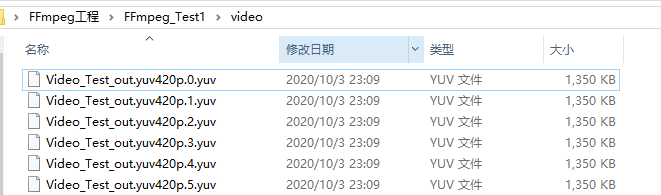

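Where the H264 video stream used to be written into a single file, each decoded frame now goes into its own file, for example video/Video_Test_out.yuv420p.0.yuv.

The number in the file name comes from video_frame_count, which is incremented (video_frame_count++) every time a frame is written.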
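The naming scheme can be tried on its own, outside the decoder. The sketch below is a hypothetical helper (build_frame_filename is not part of the article's code) that formats the same "folder/base.pix_fmt.index.yuv" pattern. It uses the portable snprintf instead of the MSVC-style sprintf_s and reports when the name does not fit into the buffer.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper, not taken from the article's project:
 * formats "<dir>/<base>.<pix_fmt>.<index>.yuv" into dst.
 * Returns 0 on success, -1 if formatting failed or the name was truncated. */
static int build_frame_filename(char *dst, size_t cap, const char *dir,
                                const char *base, const char *pix_fmt,
                                int index)
{
    int n = snprintf(dst, cap, "%s/%s.%s.%d.yuv", dir, base, pix_fmt, index);
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}
```

Inside the decode loop it could be called as build_frame_filename(video_dst_filename, sizeof video_dst_filename, "video", "Video_Test_out", "yuv420p", video_frame_count++), with the return value checked before the file is opened.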

#define YUV420P_FILE 1   // save the video stream as individual yuv420p images
//#define H264_FILE 1    // save the video stream as a single H264 file

#ifdef H264_FILE
    sprintf_s(video_dst_filename, 50, "%s.%s", "Video_Test_out", video_dec->name);
    ret = fopen_s(&video_dst_file, video_dst_filename, "wb");
    printf("open file:%s ret:%d\n", video_dst_filename, ret);
#endif

    while (av_read_frame(fmt_ctx, &pkt) >= 0) {
        // 10. Decode video data
        if (pkt.stream_index == video_stream_idx)
        {
            ......
#ifdef YUV420P_FILE
            // 10.4 Write to file: a new file is opened for every frame
            sprintf_s(video_dst_filename, 50, "video/%s.%s.%d.yuv", "Video_Test_out",
                av_get_pix_fmt_name(video_dec_ctx->pix_fmt), video_frame_count++);
            ret = fopen_s(&video_dst_file, video_dst_filename, "wb");
            //printf("open file:%s ret:%d\n", video_dst_filename, ret);
#endif
            ret = (int)fwrite(video_dst_data[0], 1, video_dst_bufsize, video_dst_file);
            //printf("Write Size:%d\n", ret);
#ifdef YUV420P_FILE
            // Close the per-frame file right after writing it
            fclose(video_dst_file);
#endif
        }
        ......
    }
    ......
#ifdef H264_FILE
    fclose(video_dst_file);
#endif

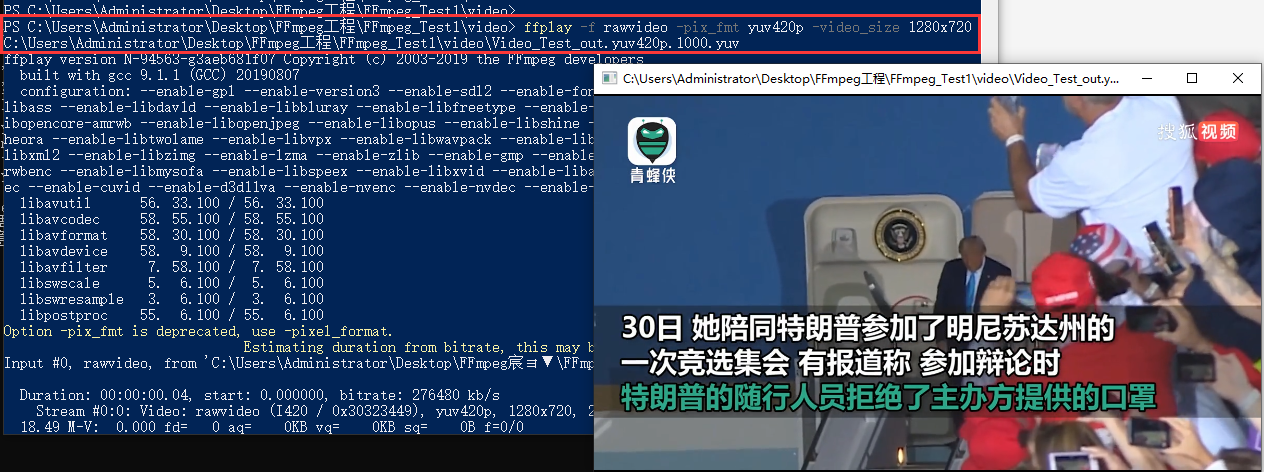

2. Run Results

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'Video_Test.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41

Duration: 00:02:45.92, start: 0.000000, bitrate: 1076 kb/s

Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p, 1280x720 [SAR 1:1 DAR 16:9], 975 kb/s, 24.98 fps, 25 tbr, 12800 tbn, 50 tbc (default)

Metadata:

handler_name : VideoHandler

Stream #0:1(und): Audio: aac (HE-AAC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 96 kb/s (default)

Metadata:

handler_name : SoundHandler

#===> Find video_stream_idx = 0

#===> Find decoder: h264, coded_id:27 long name: H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10 pix_fmt=0 (yuv420p)

#===> Find audio_stream_idx = 1

#===> Find decoder: aac, coded_id:86018 long name: AAC (Advanced Audio Coding)

open file:Video_Test_out.aac ret:0

Start read frame

Demuxing succeeded.

Play the output video file with the command:

ffplay -f rawvideo -pix_fmt yuv420p -video_size 1280x720 video/Video_Test_out.yuv420p.4139.yuv

Warning: the sample format the decoder produced is planar (fltp). This example will output the first channel only.

Play the output audio file with the command:

ffplay -f f32le -ac 1 -ar 44100 Video_Test_out.aac
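The warning in the output above appears because the decoder produces planar samples (fltp), with one array per channel, and the program writes only the first of them. As a rough illustration of what writing all channels would involve, the sketch below interleaves planar float data into packed order in plain C. The function name interleave_planar_f32 is hypothetical and not part of the article's code, and the sketch assumes 32-bit float samples.

```c
#include <stddef.h>

/* Hypothetical helper: interleaves planar float audio (one array per
 * channel) into packed order, e.g. L0 R0 L1 R1 ... for stereo.
 * `out` must have room for channels * nb_samples floats. */
static void interleave_planar_f32(const float *const *planes, int channels,
                                  int nb_samples, float *out)
{
    for (int s = 0; s < nb_samples; s++)
        for (int c = 0; c < channels; c++)
            out[(size_t)s * channels + c] = planes[c][s];
}
```

With a decoded frame, the call might look like interleave_planar_f32((const float *const *)frame->extended_data, n_channels, frame->nb_samples, buf), assuming the sample format really is AV_SAMPLE_FMT_FLTP. The packed buffer could then be written with one fwrite and played with -ac set to the real channel count.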

To view a single YUV image, run: ffplay -f rawvideo -pix_fmt yuv420p -video_size 1280x720 Video_Test_out.yuv420p.1000.yuv
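A raw .yuv file has no header, which is why ffplay has to be told the pixel format and the frame size. A quick sanity check is the file size: a tightly packed yuv420p frame holds a full-resolution Y plane followed by U and V planes at half resolution in each direction. The sketch below computes that size in plain C; yuv420p_frame_size is a hypothetical helper, not an FFmpeg function. For the 1280x720 stream above it gives 1382400 bytes, which should match the size of each file in the video folder.

```c
#include <stddef.h>

/* Hypothetical helper: number of bytes in one tightly packed yuv420p
 * frame. The Y plane is width x height; U and V are each subsampled by 2
 * in both directions, rounded up for odd dimensions. */
static size_t yuv420p_frame_size(int width, int height)
{
    size_t luma   = (size_t)width * (size_t)height;
    size_t chroma = (size_t)((width + 1) / 2) * (size_t)((height + 1) / 2);
    return luma + 2 * chroma;
}
```

The same layout gives the plane offsets inside a frame file: Y starts at byte 0, U right after the Y plane, and V right after the U plane.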

3. Complete Code

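#include <stdio.h>
#include <stdlib.h>
#include <string.h>   // memset
#include <stdbool.h>
#include <io.h>       // _access (Windows CRT)
#include <direct.h>   // _mkdir (Windows CRT)
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavutil/imgutils.h>
#include <libavutil/timestamp.h> // av_ts2timestr
#include <libavutil/samplefmt.h>

#define YUV420P_FILE 1 // save the video stream as individual yuv420p images
//#define H264_FILE 1 // save the video stream as a single H264 file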

// Map a packed sample format to the format name that ffplay's -f option expects
static int get_format_from_sample_fmt(const char** fmt, enum AVSampleFormat sample_fmt)
{
    int i;
    struct sample_fmt_entry {
        enum AVSampleFormat sample_fmt; const char* fmt_be, * fmt_le;
    } sample_fmt_entries[] = {
        { AV_SAMPLE_FMT_U8, "u8", "u8" },
        { AV_SAMPLE_FMT_S16, "s16be", "s16le" },
        { AV_SAMPLE_FMT_S32, "s32be", "s32le" },
        { AV_SAMPLE_FMT_FLT, "f32be", "f32le" },
        { AV_SAMPLE_FMT_DBL, "f64be", "f64le" },
    };
    *fmt = NULL;

    for (i = 0; i < FF_ARRAY_ELEMS(sample_fmt_entries); i++) {
        struct sample_fmt_entry* entry = &sample_fmt_entries[i];
        if (sample_fmt == entry->sample_fmt) {
            // Pick the name that matches the native endianness
            *fmt = AV_NE(entry->fmt_be, entry->fmt_le);
            return 0;
        }
    }

    fprintf(stderr, "sample format %s is not supported as output format\n", av_get_sample_fmt_name(sample_fmt));
    return -1;
}

// Reference: ffplay.c, demuxing_decoding.c
int main(int argc, char* argv[])
{
    int ret = 0;
    //printf("%s \n", avcodec_configuration());

    // File names
    char input_filename[] = "video.mp4";
    char out_filename[] = "video_out";
    char video_dst_filename[50]; // = "Video_Test_out.h264";
    char audio_dst_filename[50]; // = "Video_Test_out.aac";
    memset(video_dst_filename, '\0', 50);
    memset(audio_dst_filename, '\0', 50);

    // 1. Open the file and allocate the AVFormatContext
    AVFormatContext* fmt_ctx = NULL; // audio/video format context
    if (avformat_open_input(&fmt_ctx, input_filename, NULL, NULL) < 0) {
        printf("Could not open source file %s\n", input_filename);
        return 1;
    }

    // 2. Find the stream information of the file
    if (avformat_find_stream_info(fmt_ctx, NULL) < 0) {
        printf("Could not find stream information\n");
        avformat_close_input(&fmt_ctx);
        return 1;
    }

    // 3. Print the stream information
    av_dump_format(fmt_ctx, 0, input_filename, 0);

    // 4. Initialize the video decoder
    // 4.1 Get the stream_index of the video stream
    int video_stream_idx = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    printf("\n#===> Find video_stream_idx = %d\n", video_stream_idx);
    if (video_stream_idx < 0) {
        printf("Could not find a video stream\n");
        return 1;
    }
    // 4.2 Get the stream
    AVStream* video_st = fmt_ctx->streams[video_stream_idx];
    // 4.3 Find the decoder by codec_id
    const AVCodec* video_dec = avcodec_find_decoder(video_st->codecpar->codec_id);
    // 4.4 Allocate the decoder context
    AVCodecContext* video_dec_ctx = avcodec_alloc_context3(video_dec);
    // 4.5 Copy the codec parameters into the decoder context
    avcodec_parameters_to_context(video_dec_ctx, video_st->codecpar);
    printf("\n#===> Find decoder: %s, coded_id:%d long name: %s pix_fmt=%d (%s)\n", video_dec->name, video_dec->id, video_dec->long_name, video_dec_ctx->pix_fmt, av_get_pix_fmt_name(video_dec_ctx->pix_fmt));
    // 4.6 Initialize and open the decoder
    AVDictionary* video_opts = NULL;
    if (avcodec_open2(video_dec_ctx, video_dec, &video_opts) < 0) {
        printf("Could not open the video decoder\n");
        return 1;
    }

    // 5. Initialize the audio decoder
    // 5.1 Get the stream_index of the audio stream
    int audio_stream_idx = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);
    printf("\n#===> Find audio_stream_idx = %d\n", audio_stream_idx);
    if (audio_stream_idx < 0) {
        printf("Could not find an audio stream\n");
        return 1;
    }
    // 5.2 Get the stream
    AVStream* audio_st = fmt_ctx->streams[audio_stream_idx];
    // 5.3 Find the decoder by codec_id
    const AVCodec* audio_dec = avcodec_find_decoder(audio_st->codecpar->codec_id);
    printf("\n#===> Find decoder: %s, coded_id:%d long name: %s\n", audio_dec->name, audio_dec->id, audio_dec->long_name);
    // 5.4 Allocate the decoder context
    AVCodecContext* audio_dec_ctx = avcodec_alloc_context3(audio_dec);
    // 5.5 Copy the codec parameters into the decoder context
    avcodec_parameters_to_context(audio_dec_ctx, audio_st->codecpar);
    // 5.6 Initialize and open the audio decoder
    AVDictionary* audio_opts = NULL;
    if (avcodec_open2(audio_dec_ctx, audio_dec, &audio_opts) < 0) {
        printf("Could not open the audio decoder\n");
        return 1;
    }

    // 6. Set up where the output is saved
    FILE* video_dst_file = NULL, * audio_dst_file = NULL;
#ifdef H264_FILE
    sprintf_s(video_dst_filename, 50, "%s.%s", out_filename, video_dec->name);
    ret = fopen_s(&video_dst_file, video_dst_filename, "wb");
    printf("open file:%s ret:%d\n", video_dst_filename, ret);
#endif

#ifdef YUV420P_FILE
    // Output folder name
    char folderName[] = "video";
    // Create the folder if it does not exist
    if (_access(folderName, 0) == -1)
        _mkdir(folderName);
    sprintf_s(audio_dst_filename, 50, "video/%s.%s", out_filename, audio_dec->name);
#else
    sprintf_s(audio_dst_filename, 50, "%s.%s", out_filename, audio_dec->name);
#endif
    ret = fopen_s(&audio_dst_file, audio_dst_filename, "wb");
    printf("open file:%s ret:%d\n", audio_dst_filename, ret);

    uint8_t* video_dst_data[4] = { NULL };
    int video_dst_linesize[4] = { 0 };

    // 7. Allocate the image buffer; the return value is its size in bytes (negative on error)
    int video_dst_bufsize = av_image_alloc(video_dst_data, video_dst_linesize,
        video_dec_ctx->width, video_dec_ctx->height, video_dec_ctx->pix_fmt, 1);
    if (video_dst_bufsize < 0) {
        printf("Could not allocate the raw video buffer\n");
        return 1;
    }

    // 8. Allocate and initialize AVFrame and AVPacket
    AVFrame* frame = av_frame_alloc();
    AVPacket pkt;
    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;
    int video_frame_count = 0, audio_frame_count = 0;

    printf("Start read frame\n");

    // 9. Read packets in a loop
    while (av_read_frame(fmt_ctx, &pkt) >= 0) {
        // 10. Decode video data
        if (pkt.stream_index == video_stream_idx)
        {
            // 10.1 Send the packet to the decoder
            ret = avcodec_send_packet(video_dec_ctx, &pkt);
            // 10.2 Receive the decoded frames
            while (ret >= 0) {
                ret = avcodec_receive_frame(video_dec_ctx, frame);
                if (ret == AVERROR_EOF || ret == AVERROR(EAGAIN))
                {
                    ret = 0;
                    break;
                }
                if (ret < 0)   // real decoding error: the frame is not valid
                    break;

                // 10.3 Copy the frame into the tightly packed buffer
                //printf("#===> video_frame n:%d coded_n:%d ", video_frame_count++, frame->coded_picture_number);
                av_image_copy(video_dst_data, video_dst_linesize,
                    (const uint8_t**)(frame->data), frame->linesize, video_dec_ctx->pix_fmt, video_dec_ctx->width, video_dec_ctx->height);
#ifdef YUV420P_FILE
                // 10.4 Write to file: one file per frame
                sprintf_s(video_dst_filename, 50, "video/%s.%s.%d.yuv", out_filename, av_get_pix_fmt_name(video_dec_ctx->pix_fmt), video_frame_count++);
                ret = fopen_s(&video_dst_file, video_dst_filename, "wb");
                //printf("open file:%s ret:%d\n", video_dst_filename, ret);
                if (ret != 0) {   // could not open the frame file, stop decoding
                    ret = -1;
                    break;
                }
#endif
                ret = (int)fwrite(video_dst_data[0], 1, video_dst_bufsize, video_dst_file);
                //printf("Write Size:%d\n", ret);
#ifdef YUV420P_FILE
                fclose(video_dst_file);
#endif
            }
        }
        // 11. Decode audio data
        else if (pkt.stream_index == audio_stream_idx)
        {
            // 11.1 Send the packet to the decoder
            ret = avcodec_send_packet(audio_dec_ctx, &pkt);
            // 11.2 Receive the decoded frames
            while (ret >= 0) {
                ret = avcodec_receive_frame(audio_dec_ctx, frame);
                if (ret == AVERROR_EOF || ret == AVERROR(EAGAIN))
                {
                    ret = 0;
                    break;
                }
                if (ret < 0)   // real decoding error: the frame is not valid
                    break;

                // 11.3 Write to file (first channel only)
                //printf("#===> audio_frame n:%d nb_samples:%d pts:%s ",
                //    audio_frame_count++, frame->nb_samples, av_ts2timestr(frame->pts, &audio_dec_ctx->time_base));
                ret = (int)fwrite(frame->extended_data[0], 1, frame->nb_samples * av_get_bytes_per_sample(frame->format), audio_dst_file);
                //printf("Write Size:%d\n", ret);
            }
        }
        av_frame_unref(frame);
        // Release the data held by the AVPacket
        av_packet_unref(&pkt);
        if (ret < 0)
            break;
    }

    // 12. Send an empty packet to flush the decoders
    // Note: frames returned while flushing are discarded in this example;
    // to keep them, save them the same way as in steps 10.3 and 11.3.
    ret = avcodec_send_packet(video_dec_ctx, NULL);
    // 12.1 Receive the remaining video frames
    while (ret >= 0) {
        ret = avcodec_receive_frame(video_dec_ctx, frame);
        if (ret == AVERROR_EOF || ret == AVERROR(EAGAIN))
        {
            printf("break, video ret=%d \n", ret);
            ret = 0;
            break;
        }
    }

    ret = avcodec_send_packet(audio_dec_ctx, NULL);
    // 12.2 Receive the remaining audio frames
    while (ret >= 0) {
        ret = avcodec_receive_frame(audio_dec_ctx, frame);
        if (ret == AVERROR_EOF || ret == AVERROR(EAGAIN))
        {
            printf("break, audio ret=%d \n", ret);
            ret = 0;
            break;
        }
    }

    // 13. Demuxing finished
    printf("Demuxing succeeded.\n");
    printf("Play the output video file with the command:\n"
        "ffplay -f rawvideo -pix_fmt %s -video_size %dx%d %s\n",
        av_get_pix_fmt_name(video_dec_ctx->pix_fmt), video_dec_ctx->width, video_dec_ctx->height, video_dst_filename);

#ifdef YUV420P_FILE
    // The .bat file is saved inside video/, so it refers to frame 0 without the folder prefix
    memset(video_dst_filename, '\0', 50);
    sprintf_s(video_dst_filename, 50, "%s.%s.%d.yuv", out_filename, av_get_pix_fmt_name(video_dec_ctx->pix_fmt), 0);
#endif

    FILE* bat_dst_file = NULL;
    char bat_out[100] = "";
    memset(bat_out, '\0', 100);
    sprintf_s(bat_out, 99, "ffplay -f rawvideo -pix_fmt %s -video_size %dx%d %s",
        av_get_pix_fmt_name(video_dec_ctx->pix_fmt), video_dec_ctx->width, video_dec_ctx->height, video_dst_filename);
#ifdef YUV420P_FILE
    ret = fopen_s(&bat_dst_file, "video/play_video.bat", "wb");
#else
    ret = fopen_s(&bat_dst_file, "play_video.bat", "wb");
#endif
    if (ret == 0) {
        // Write only the command text, not the NUL padding of the 100-byte buffer
        fwrite(bat_out, 1, strlen(bat_out), bat_dst_file);
        fclose(bat_dst_file);
    }

    enum AVSampleFormat sfmt = audio_dec_ctx->sample_fmt;
    int n_channels = audio_dec_ctx->channels; // FFmpeg 4.x field; newer releases use ch_layout.nb_channels
    const char* fmt;

    if (av_sample_fmt_is_planar(sfmt)) {
        const char* packed = av_get_sample_fmt_name(sfmt);
        printf("Warning: the sample format the decoder produced is planar "
            "(%s). This example will output the first channel only.\n", packed ? packed : "?");
        sfmt = av_get_packed_sample_fmt(sfmt);
        n_channels = 1;
    }

    if ((ret = get_format_from_sample_fmt(&fmt, sfmt)) < 0)
        goto end;

    printf("Play the output audio file with the command:\n"
        "ffplay -f %s -ac %d -ar %d %s\n", fmt, n_channels, audio_dec_ctx->sample_rate, audio_dst_filename);

#ifdef YUV420P_FILE
    // The .bat file is saved inside video/, so it refers to the audio file without the folder prefix
    memset(audio_dst_filename, '\0', 50);
    sprintf_s(audio_dst_filename, 50, "%s.%s", out_filename, audio_dec->name);
#endif

    memset(bat_out, '\0', 100);
    sprintf_s(bat_out, 99, "ffplay -f %s -ac %d -ar %d %s", fmt, n_channels, audio_dec_ctx->sample_rate, audio_dst_filename);
    // Use bat_dst_file for the script: the original opened it through video_dst_file
    // but wrote to the already closed bat_dst_file
#ifdef YUV420P_FILE
    ret = fopen_s(&bat_dst_file, "video/play_audio.bat", "wb");
#else
    ret = fopen_s(&bat_dst_file, "play_audio.bat", "wb");
#endif
    if (ret == 0) {
        fwrite(bat_out, 1, strlen(bat_out), bat_dst_file);
        fclose(bat_dst_file);
    }

end:
    avcodec_free_context(&video_dec_ctx);
    avcodec_free_context(&audio_dec_ctx);
    avformat_close_input(&fmt_ctx);
#ifdef H264_FILE
    fclose(video_dst_file);
#endif
    fclose(audio_dst_file);
    av_frame_free(&frame);
    av_free(video_dst_data[0]);
    return 0;
}
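Step 10.3 in the listing copies each decoded frame into a buffer allocated with an alignment of 1 before writing it. The reason is that rows of a decoded frame may be padded, so frame->linesize can be larger than the visible row width, and writing frame->data directly would put the padding into the file. The sketch below shows the idea for a single plane in plain C. copy_plane_tight is a hypothetical helper, it only illustrates the kind of row-by-row copy involved, and it is not FFmpeg's implementation of av_image_copy.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: copies `height` rows of `row_bytes` bytes each from
 * a padded source plane, whose rows are `src_stride` bytes apart, into a
 * tightly packed destination with no padding between rows. */
static void copy_plane_tight(uint8_t *dst, const uint8_t *src, int src_stride,
                             int row_bytes, int height)
{
    for (int y = 0; y < height; y++)
        memcpy(dst + (size_t)y * (size_t)row_bytes,
               src + (ptrdiff_t)y * src_stride,
               (size_t)row_bytes);
}
```

For yuv420p this would be applied three times per frame: once to the Y plane at full size and once each to the U and V planes at half the width and half the height.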
